Zeynep Tufekci: Thank you so much for that kind introduction. I’m thrilled to be here. And, part of writing a book is a lot of things I wanted to say are in the book. So I want to talk a little bit about some of the things I’ve been worried about and thinking about over the past year. My book, Twitter and Tear Gas (it got its title from a Twitter poll; that’s how bad I am at titles), is about social movements and how they ebb and flow, and how we seem to have so many tools empowering us. And yet, authoritarianism seems to be on the rise all around us. So that’s the book. I was puzzling about that, and now I’m thinking more about how are the technologies we’re building compatible and aiding the slide into authoritarianism, and also thinking why aren’t social movements that we have able to counter all this since we are indeed empowered.

So a little bit about me. My name is Zeynep. It is really Tufekci. (Yes, the K and C—the C comes after the K.) And I started out as a former programmer. I was a kid really into math and science. I loved physics. I loved math. And I thought, “I am going to be a physicist when I grow up!” Because that’s what a lot of kids think when they’re young. And I grew up in Turkey in the shadow of the 1980 military coup. So I grew up in a very censored environment where there’s one TV station—we didn’t really get to see much on that.

And as I was kinda making my way through thinking about my own future as a little kid, you know, imagining myself as a physicist, I found out (as it happens to a lot of kids who are into math and science), I found out about the atom bomb and nuclear weapons and nuclear war. And I thought whoa, this isn’t good. I could become a physicist, I could be maybe good at it, but then would I be doing something really morally questionable? Would I have these huge questions to answer?

And because of family circumstances I also needed a job as soon as I could. And I was a practical kid that way, too. So I thought instead of physics let me pick a profession that I enjoy, that’s got something to do with math and science, that doesn’t have ethical issues. So I picked computers. [laughs]

So that’s…I think I just told everybody that my predictive ability is not that good. But you know, right now my friends in physics, they’re in CERN debating who’s going to get the Nobel Prize and all of that for the Higgs boson research, while my computer scientist friends are building oh, killer robots, manipulating algorithms, all of that. So here we are. I did pick a topic with a lot of ethical implications.

Also I started working as a programmer pretty early on. And one of the first companies I worked for was IBM. And it had this amazing global intranet. And back then there wasn’t even Internet in Turkey, right. So all of a sudden I could just sort of go on a forum and email people around the world. And I had this mainframe that had to be something something to localize a [MIDI?] machine. It was this multiplatform complex thing. And I could find the person who’d written the original program and he’d be like, “Oh here you go.” Someone in Japan would answer me. I thought, “This is amazing. This is gonna change the world. This is great!”

So I felt really empowered and hopeful with the idea, because again in Turkey, very censored environment. And then the Internet came to Turkey. And then I came to the United States. And I’d been studying this in mostly very hopeful— I’m personally pretty… I’m an optimist by personality, too. But I’m getting more and more worried about this transition we’re at that you see in a lot of history of technology, where the early technology starts with you know the rebels, the pirates, it’s great, it’s amazing, it’s gonna change the world, we think all these great things.

And it has that potential, very often it has that potential. You know radio was a two-way thing where people around the world talked early on. And then World War I came and it became a tool of war. So I started thinking more about are we at that inflection point. And increasingly I think we are. I don’t think it’s too late, but I think we have a lot of things that we should worry about. So that’s what I’m going to talk about in my slides.

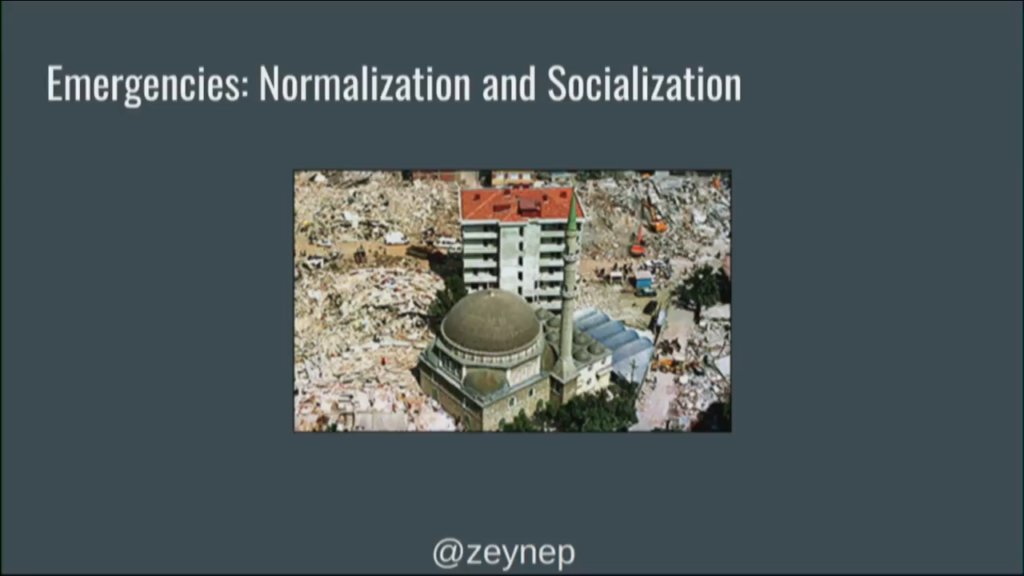

So. I want to talk a little bit about how we normalize and socialize ourselves in emergencies. Because I think it’s important, because I think the tech world is not panicking enough. And my first experience with how we normalize emergencies comes from a pretty awful experience. This is a 1999 earthquake in Turkey in my childhood hometown, that I was by coincidence in Istanbul for during the earthquake. And then hearing about it—feeling it; it was a very strong one—I rushed to the area with some rescue teams.

See, around the world when you have an earthquake, rescue teams around the world rush to it because it kind of makes sense, right. No one country can have all the rescue teams you need. Earthquakes are rare events. They’re not simultaneous. So every country has a few of those. And then when something happens people all rush. So, so far so good.

Unfortunately Turkey was really underprepared for this quake. This is sort of the iconic picture of it. And you can see it was very capricious. A building would be standing, the next one would be down. And I rushed back with a team from the US who had just arrived. It was really chaotic, because the country wasn’t prepared even though it was on a fault line. So we’re in a shock and the infrastructure’s not there. For example, teams needed transportation because when earthquake teams arrive, they bring their earthquake-specific equipment, but they expect transportation, fuel, lights, to be provided locally because why would you carry all that from around the world? It makes sense.

Even transportation wasn’t arranged. What happened is a friend of mine, she just flagged a city bus—regular bus. She said, “I’ve got an earthquake team who doesn’t have transportation.” And the bus driver was like, “Oh, okay,” and just told all the passengers get out, please, thank you. Took the team in. Drove three hours to the area. And like a week later I saw him sleeping on a little bench, all stubbled, his bus still transporting. He acted…he made a reasoned choice in an emergency. And I hope he never got punished for it.

One of the things we needed was lights. So I spent a lot of time that week acquiring lights and fuel. And by acquiring I mean breaking into houses that were still standing. I teamed up with a policeman because he seemed to know how to break into houses—I don’t know that story, how that happened but whatever. We broke into houses and we stole especially torchiere halogen lights. They were great. And we were in a rush because with an earthquake you have this golden period. Every hour counts if you can pull people from under the rubble before they perish. That’s amazing, that’s great. But every hour they’re facing danger they may die.

So it was really interesting to sort of step back and think, “Well, I’m breaking into houses, with a policeman, and nobody’s batting an eye.” Why should they, right? And in fact if anything they’re just cheering us on and saying, “Oh, just be quick,” because there’s still aftershocks.

Three days into it, especially with people who hadn’t lost immediate family, people are just completely… Not completely, there’s still trauma. Let me correctly say this. People had become normalized into routines. Three days, four days. It was just really eye-opening for me to see. They’d just go to the rubble and pull a chair and sit down and have a cup of tea. We’d do all these things—laundry, dishes, kids playing, chatting. That experience got me to look into how do people react in emergencies? How do people react to crises? How do people react to moments where there’s danger?

And your image of it might be this. People panicking and there’s this crisis and let’s just sort of try to calm people down. If you actually talk to crisis people, they tell you again and again we do not panic correctly. We don’t panic in time. We panic very late, and then all at once. And this is true for acute emergencies when there’s a crisis moment. Also for an earthquake. Also for things that are happening slowly over a few years, five years, ten years. People don’t panic in time and organize in time.

Why am I talking about this to a bunch of technology people? Because I think we are not panicking properly at this historic inflection point that has many scary downsides. But we are not there yet. There’s a lot to be done and this is why I’m giving these talks that are more like how do we deal with this emergency situation the way it is. We kind of let things get to this point, how do we deal with this?

Right. This is what I don’t want us to do, all the calm.

So the authoritarian slide, let’s talk about this. It happens slow then fast. People normalize and socially reassure each other as it happens. He won’t win the nomination. He won’t win the election. He won’t do what he says. Turkey will be fine. Le Pen won’t win. EU will stand. You know? We tell each other these things won’t happen.

But they happen. And you can sort of read history and see how that happens. And the tech world is turning from rebellious pirates to compliant CEOs, increasingly. It is going to happen. History says it’s going to happen. I have a lot of friends in the tech world as a former programmer, as somebody in this world. I trust a lot of them. I trust their values. And they keep coming to me and saying, “Trust me.” And I’m like, you shouldn’t trust yourself because you’re not in charge. There are big, powerful forces that work here. Those companies, they may be run by well-meaning people right now. They will comply. They will be coerced. They will be purchased. They will be made to. World War I came and the Navy was like, “Radio? It’s ours. Everybody off.” And that was that.

The idea that the workers in this space tend to be more progressive, more liberatory, is some sort of guarantee, history tells us is illusory. And yet I hear this again and again from people who tell me, “I work here. I trust the people. We have internal dialogue,” etc. I don’t want to rest on that.

All the signs are here. We talked about them—recent elections, we have polarization, elite failure. And tech is involved in every bit of this. I talk about misinformation, polarization, loss of news. But it’s also the economy. And we’ll talk a little bit about this. The technology economy is lopsided and has created a lot of tensions. A lot of you in this room are on the beneficiary side of this, more or less. But to outside the tech world, it is a very scary moment. “What will my kids do for a living?” is a very scary moment for people.

So “anything but technology” is the current thing. The tech world wants to talk about anything but how technology may be compatible with and aiding this kind of authoritarian slide.

Now, everything is multi-causal. So you go to tech people and they say it’s polarization, it’s this, it’s that. It’s true. Anything that you want to talk about is multi-causal. But this is a big part of it. Imagine if early 20th century, we talked about cars as an ecology. What will they do? We imagined different kinds of cities. We thought about the social consequences of suburbanization and maybe didn’t go that way. We thought about climate change, which was talked about even in the 19th century. We talked about other things, public transportation. What if we hadn’t had cars go the path they did. We’d probably be in a much better world, for lots of things.

So the warnings we have. These are my things—the elite tech that right now a lot of people tell me, “I’m here. We’re really good. They can’t replace us.” That’s going to change. You’re going to have machine learning off the shelf that’s going to be easier and easier and more commodified. Toolmakers’ ideals do not rule their tools. Especially something like computing. And most management history tells us we’ll either succumb, or be coerced, or become compliant. It just happens historically.

And the moonshots tell you a lot, right. The tech world is very focused on otherworldly things. Colonizing Mars, living forever, uploading… I mean, these are fun things to chat about, maybe 2am in a dorm room. But they’re not really the moonshots. We have complicated problems here.

So let’s talk about what is this— Why am I talking about technology as compatible with authoritarianism? Let’s talk about what I mean. So the rest of the talk I’m going to go into these things. The case I’m going to make for how we’ve gotten to this particular point and what are the features of technology that are compatible with, and already aiding, our slide into emergent authoritarianism around the world.

One, surveillance is baked into everything we’re doing. It’s just baked into everything. Ads… I mean think about it, when was the last time you bought something from an ad? Not very many times. The ads on the Internet are not worth a lot unless you deeply profile someone and/or silently manipulate them. Or nudge them. That means that anything that’s ad-supported is necessarily driven towards this.

And even when they’re not driven towards this, they are very very tempted to sell the data they have, because that’s where everything is going. We’ve built an economy in the technology world that is surveillant by nature. And it’s going to become worse with sensors and IoT. We’re such a surveillant world right now that you can turn off your phone you can do everything, and there are so many cameras around and very good face recognition. The people you’re with will post about you. It is not possible at the moment to escape the surveillant economy of the Internet and be a participant in the civic sphere.

I work with refugees in North Carolina. Most everything happens on Facebook, or WhatsApp, or a few things like that. If I want to be part of that work, I can’t avoid the surveillant platforms. I’m not going to get people to sort of communicate with me outside of those.

Now the business model of these ad-driven companies is increasingly selling your attention. This is really significant because for most of human history we had, the problem wasn’t too much information. The problem was too little information. It’s a little like food, right. For most of human history we didn’t have enough to eat. So if your great-great-great-great-great-great-grandparents liked eating, knew how to eat and gained weight really well, it worked great for you. It was a survival skill. But right now we live in a world where there’s too much food and not enough sort of moving around. We’re sedentary. So we face an issue with how do we deal with this? How do we deal with the crisis of an environment that’s completely different from the one that we evolved for for so long?

We have the same problem now in that we’re still the people who do the purchasing. We’re still the people who do the voting. The day is twenty-four hours. And getting our attention has become this crucial bottleneck, this gatekeeping thing to so many things. So, we don’t just have surveillance, we have these structures that are getting better and better at capturing our attention in all sorts of efficacious ways. Social media, games, apps, content, politics, anything you want to talk about. Getting our attention not just by doing something random, but profiling us and manipulating us and understanding us in ways that are asymmetric, that we don’t understand but they do, has become important.

And these aren’t— Like I’m just sort of laying out some of the dynamics. They’re all going to come together.

So we have increasingly smart, surveillant persuasion architectures. Architectures aimed at persuading us to do something. At the moment it’s clicking on an ad. And that seems like a waste. We’re just clicking on an ad. You know. It’s kind of a waste of our energy. But increasingly it is going to be persuading us to support something, to think of something, to imagine something.

I’ll give you two examples. A couple of years ago, I think 2012, four years ago, I wrote an op-ed about the big data practices of the Obama smart campaign. A lot of people said this is one of the smartest campaigns, used digital data, microtargeting, identified, gave everybody a score, knew who was persuadable, who was mobilizable. A lot of A/B testing. All the sort of top of the line stuff.

And I thought, know what? It doesn’t matter whether you support this candidate or not. These things are dangerous for democracy. Not because persuading people is bad, but doing it in an environment of information asymmetry where you’ve got all this stuff about them and then you can talk to them privately without it being public, right. If I sort of target a Facebook ad just at you? That’s…just at you. Like, there’s no public counter to it. If you saw it on TV, so does the other side and maybe they can try to reach you and counter it. But here you can’t do it.

And I started giving examples. For example we know that when people are fearful, they tend to vote for authoritarians. When they’re scared, they tend to vote for strong men and women. And look at how much, for example, content about terrorism occupies both our social media and our mass media. Now, I’m from Turkey, right. This is a problem, this is a real problem for the Middle East. This is a horrible problem. There’s been so many acts of terrorism, so many mass casualties. In the Western world, it’s not even a rounding error to a weekend’s traffic fatalities.

I’m not saying I’m not horrified. It’s horrible—I hate every single incident. It’s a crisis in that every act of terrorism is horrific. But the disproportionate amount of attention it gets is a way that people get fearful. And we know that as people get fearful, a lot of them vote for authoritarians. But that’s not true for everyone. Some people get pissed off at being manipulated and being scaremongered. So in the past, you kinda had to do it to everyone.

So in 2012 I said what if you could just find the people who could psychologically, personality-wise, be motivated by fear to vote for an authoritarian and just target them silently? They can’t even counter this. So back then a lot of my friends who worked in the Obama team and other sort of political campaigns said, “No! This won’t happen. We’re just persuading people. This is fine.” And I said look, I’m not talking about your candidate. I’m talking about the long-term health of our democracy if you’ve got public communication that has become private-tailored potential manipulation.

So fast-forward to [2016]. There’s already talk that the Trump campaign data team says they did this. That they silently targeted on Facebook so you didn’t see it, but just the people they targeted saw. They targeted young black men especially in places like Philadelphia, and some Haitians in Florida, and a few other key districts, to scare them about Hillary Clinton specifically. They weren’t trying to persuade, they were just trying to demobilize.

Now, they may be exaggerating how good they were at this. But that’s where things are. So I can’t vouch for how much they did it, although I have some independent confirmation. Heard from young black men in Philly. They did get targeted like this. And we have an election that was decided by less than 100,000 votes. How many people were demobilized in ways that we didn’t see publicly? What were they told? We don’t know. Only Facebook knows.

It’s just four years later and you’re already seeing this idea. And they also tried—this Trump data team tried—to do personality analysis. Just using Facebook Likes you can analyze people’s big five personality—openness, extroversion, introversion, all of that. So they already tried to use that. Again, somebody might say well they weren’t that good at it. But I’m telling you it’s getting better and better. This is where things are going.

So having smart surveillant persuasion architectures controlling the whole environment, experimental A/B testing, social science, social science aware and driven, these are sort of these… They sound like great tools. They are not only great tools, they’re also tools for manipulating the public. And since they’re so centralized and so non-public, we don’t know how much more widespread this is going to get.

So I’ll give you an example from yesterday that I was ranting a lot about on Twitter. Did you see Amazon has this Echo Look, a little camera that you’re supposed to put in your bedroom? And it’s going to use machine learning stuff to tell you which of your outfits is better. Like you don’t have enough judgment in your life, right? You need to have some machine learning algorithm…

So there’s going to be all these sort of— Because it’s going to work on this training data, so I will just await the first scandal about sort of the biases about race, biases about weight, and all of that. So that will…almost predictable. But there’s something else happening. If you upload a picture of yourself to Amazon every day, current machine learning‑y algorithms (that’s usually the best way) can identify the onset of something like depression, likely months before any clinical sign. If I have a picture of you every day, smile, the subtle things, machine learning algorithms can pick up on this. They can already do this.

Just your social media data… Which isn’t very rich, especially on Twitter—it’s just short… I have a friend who’s done it. She can predict the onset of depression with high probabilities months before any clinical symptoms. She’s thinking postpartum depression intervention, right. So she’s thinking great things.

I’m thinking about the ad agency copy I read about the advertisers who were openly pondering how do we best sell makeup to women. And I’m quoting. They said, “We know it works best,” they’ve tested it, “when women feel fat, lonely, or depressed.” That’s when they’re ready for their beauty intervention. I love reading trade magazines where people are kinda honest. It’s really the best place.

So, Amazon’s going to know when you’re feeling somewhat depressed or likely they’re going to know whether you’re losing or gaining weight. They’re going to know how’s your posture. They’re going to know a lot of things. If it’s a video they can probably analyze your vital signs, how much blood flow to your face. You can measure heartbeat. It’s kind of amazing with high enough resolution.

Where will this data go? What will Amazon do with it? And who else will eventually maybe get access to it? You know, Amazon people will say, “We’re committed to privacy and we’ll do this and we’ll do that.” And I’m like, once you develop a tool you don’t get to control all that will go with it. So, how long before these persuasion architectures we’re building are used for more than selling us “beauty interventions” or whatever else they want to sell us? How long before they’re also working on our mind, the politics, they’re already here.

So the algorithmic attention manipulation, which is engagement and pageview-driven, has a lot of consequences. If you go on YouTube— See, I watch a lot of stuff on YouTube for work. I keep opening new Gmail accounts or going on incognito because it pollutes my own Gmail recommendations. This is something I’ve noticed about it and I’ve talked to lots of people, and we noticed the same thing.

If you watch something about vegetarianism, YouTube says, “Would you like to watch something about veganism?” Not good enough. If you watch Trump it’s like, “Would you like to watch some white supremacists?” If you watch a somewhat you know, radical but not violent Islamic… You know, somebody who’s kind of a dissident in some way, maybe even, you get suggested ISIS‑y videos. It’s constantly pushing you to the edge of wherever you are, and it’s doing this algorithmically.

So I kept pondering why is it doing this, because this isn’t YouTube people sitting down and saying let’s push people. And if you watch something about the Democrats you get conspiracy left. You get these suggestions. Why are they doing this? I think this is what’s happening—we know from social science research. If you’re kind of in a polarized moment, and if you feel like you took the red pill and your eyes have been opened. You got some deep truth. You go down that rabbit hole. So if I can get you obsessed or somewhat more interested in a more extreme version of what you are sort of [meh?] interested in. If I can pull you to the edge, you’re probably going to spend a lot of time clicking on video after video.

So our algorithmic attention manipulation architectures do two things. They highlight things that polarize, because that drives engagement. Or they highlight things that are really saccharine, syrupy, spirit of like human soaring kind of stuff that are unrealistic. Also cat videos but that’s fine. No objections. Puppies are fine, too. So what you have here is this realization by the algorithms that if we get a little upset about something we go down the rabbit hole, and that’s good for engagement, pageviews. Or if we feel really warm and sweet about something, we kind of go “awww,” that’s good.

So we’re having this weirdo thing where my Facebook is either people quarreling about the crazy stuff, or really sweet stuff. And like, is there nothing in the middle? Can we have some sort of middle—well there is of course. But the mundane stuff isn’t as engaging. And if this is what’s driving my engagement and out of this what I see, you have the swing. And it is not healthy for our public life or personal lives to be on this constant swing. It pulls to the edges.

So all of this encourages filter bubbles and polarization. Not because we’re not already prone to it. The current thing I hear is, “Well everything encourages— because that’s kind of humans.” Well yes. It is our tendency. You know what that’s a little bit like? We have a sweet tooth for a very good reason. Your ancestors who didn’t like sugar and salt? I don’t know, you probably wouldn’t be here. It was a very good thing to like sugar and salt, where you had to hunt and gather and had no fridges.

So we have a tendency to like sugar and salt. That doesn’t mean we should serve breakfast, dinner, and lunch composed of only sugar and salt food. So we have a tendency, and these persuasion architectures and algorithmic attention manipulation are feeding us our sweet tooth. And that’s not good for us.

We’re also, at the same time, dismantling structures of accountability through our disruptive tech. Right now I think like 89% of all ad money goes to Facebook, Google. So we’ve got all the ad money going to these algorithmic engagement-driven, pageview-driven, ad-driven profiling, surveillant systems. Now, I use them both, right. I’ve written about how good they were for many things. So I’m not unaware of all the good things that come out of having this connectedness.

But having connectedness be driven by algorithms that prioritize profiling, surveillance, and serving ads is not the only way to get connected. We could have many other ways where we could use our technology to connect to one another in deep ways, but yet we are here and all the money’s going there, and local newspapers to national newspapers are being hollowed out.

And this is really crucial, especially at the local level because if you don’t have local newspapers (I’m watching this happen all over the country), local corruption starts going unchecked. And then it starts kind of filtering upwards from there. The local corruption gets unchecked and you have corrupt local politicians. And then you have state-level corruption. And then you have the national thing.

And I see all this sort of in the tech world, “Oh, let’s spend $10 million to create a research institute…” And look, I’m a professor. Research grants sound great to me. But here, let me save you the money. Take that money, divide it into every local newspaper, however many they are, and just give it to them. Right now, we don’t really need a lot of research. We need funding for the work itself that we know what it is. I mean, again, it really sounds great to do more research for me as a professional research person. I’m like, I have little to add. The problem is that ad money that used to finance—in a historic accident—used to finance information that was surrounded by journalistic ethics, now fuels misinformation on social platforms.

I don’t mean to say newspapers were perfect, okay. I spend a lot of time criticizing how horrible they are in so many ways. But they were a crucial part of a liberal democracy. We needed to make them better, instead we knocked out whatever was good for them.

We also have a lot of technology that’s explicitly knocking out structures of accountability. Now, I think it’s a very good idea to call a taxi from your smartphone. I have zero problem with the idea of an Uber, of sorts. And I think if you want to complain about taxis and what a monopoly they were and good riddance to them you know, I’m with you. But the problem is the current disruptive model, like Uber’s and the rest of them, also the way they’re structured, is not just creating the convenience, it is trying to escape any kind of accountability and oversight we had over these things.

So there are worse things than having to have taxis that are crappy. It is having a system that’s escaping the institutional accountability structures we built. For example you have some duties to your [employees]. And if you can just make them all contractors and pretend they’re not your [employees], and you pretend you don’t have them… And that’s kind of lack of accountability structures. And the way Facebook says, “Oh, we’re not a media company, it’s just the users,” you constantly push accountability away from you. That is not healthy.

This is even true for things like Bitcoin, which is innovative and disruptive and interesting. But you know what? There’s a reason that we use the money we use, because it’s tied to yes imperfect, yes not great— You know, I’m a movement person my whole life. I understand the sort of objections to the nation-state. But you know what’s worse than a nation-state? A bunch of people with zero accountability are like, “Let’s just do this.”

This lack of accountability is a core problem even if the unaccountable people in the beginning are kinda good people and they’re our friends and we’re like, “Oh, it’s okay. It’s in their hands. They’ll do good.” That is not how history works.

So the labor realities of the new economy are not compatible with a middle class-supported democracy. This is this crucial, huge, political problem. This is not a problem that can be solved within technology, but this is a crucial problem. Facebook right now employs what? Ten, twenty thousand people—maybe a couple thousand are engineers. It’s very top-heavy. General Motors at its height, when it was the dominant company, employed maybe half a million people, in pretty good jobs. And with a supply chain with a lot of good jobs.

Right now you have a couple thousand engineers at a company like Facebook making really good money, really smart people, a lot of good people. And the rest is basically minimum wage. Think of Amazon, right. It’s a bunch of great engineers. They can create something like Echo Look. (To give you fashion judgment.) And warehouse jobs. This is not an economy structure that can support a mass base democracy. It just won’t. People will vote for whoever’s going to burn things down, or promise to burn things down, on their behalf.

So the skilled labor. The other thing is this is distributing the labor around the world so you have a race to the bottom rather than race to the [top] for many many jobs. The sort of outsourcing— I’m for jobs being distributed, in some sense. We don’t want them all here, we want all the rest of the world— But it has to be lifting everybody up more.

So we also have increasing centralization as part of this. One part of it is network effects. And the other is data hunger. I’m on Facebook because so many of my friends and family are on Facebook. It’s also a great tool in many ways. The product keeps getting better. The alternatives just aren’t feasible for me because I can’t get my friends to email me. I can’t—just…doesn’t work. I’ve tried. They can use Facebook, they can use Messenger. There are a lot of parts of the world where Facebook is the de facto communication mechanism. And Messenger.

And also for something like Google to work so well, it needs all that data. That means once you’ve got all the data, you’re in this really dominant place where the people without the data can’t compete with you. Their algorithms won’t work well. Their profiling won’t work well.

And also security. I work on movement stuff a lot, as I said. And I work with a lot of people in precarious situations and repressive regimes. I tell them if your threat model isn’t the US government or Google, use GMail. I’m like don’t use anything that doesn’t have a 5,200 person security team, because it’s not safe.

Long story, I don’t have to explain to this crowd the way the Internet started, that TCP/IP was built as a trusted network and all of that. But that is also driving centralization because you don’t feel secure. How many major platforms have not yet been hacked? Like, count with one hand maybe, that have not been hacked. So you gravitate towards them. So this is also driving why you get Facebook, Google, Amazon, eBay, a couple more. They’re just not easy to knock down. Which means when they do what they do you don’t have a means to use the market to punish them. Because there isn’t meaningful consumer choice.

There’s asymmetry in information. They know so much about us. How many of us have any clue how much data there is about us and how it’s being used? We have no access to it. When Facebook CEO Mark Zuckerberg bought a house, he bought the houses around him. Why? He wanted privacy. I don’t blame him. I don’t begrudge him his privacy, right. But we don’t have it. We have a sort of complete asymmetry in what we get to see versus what these centralized platforms and increasingly governments get to see about us. This asymmetry is deeply disempowering.

Machine intelligence deployed against us. So, algorithms, computer programs… Algorithm’s got this second meaning now, it just means complex computer programs, so here I can just say… Especially machine learning programs, right. What’s happening is that we’ve got this really interesting powerful tool that can chew through all that data and do some linear algebra and some regression, and spit out pretty powerful classifications.

Who should we hire? Give it some training data, divide it into high performers, low performers. Churn churn churn, train. And then you give it a new batch. It says hire those, don’t hire those.

The problem is you don’t understand what it’s doing. It’s powerful, but it’s kind of an alien intelligence. We tend to think of it as a smart human? It’s not, okay. It’s a completely different intelligence type. It’s kinda alien. And it’s also powerful. So for all we know, it’s churning through that social media data and figuring out who’s likely to be clinically depressed in the next six months, probabilistically. You have no idea if it’s doing that or not, or what else it’s picking up on. We’re putting an alien intelligence we don’t fully understand, that has pretty good predictive probabilities, in charge of decision-making in a lot of gatekeeping situations, without understanding what on earth they’re doing.

And already in the tech world, you talk to people at high tech companies and they’re like, “No, we don’t understand our machine learning algorithms. We’re trying to dive into it a little bit.” It’s now spreading to the ordinary corporate world. They don’t understand it at all, and they don’t even care. It’s cheap, it works, let’s use it to classify.

But what is it picking up on? What is the decision-making? Do we really want a world in which a hiring algorithm weeds out everybody prone to clinical depression with some good probabilistic ability, 90% of them? It’s a problem if it’s right. It’s a problem when it’s wrong.

And these things can infer non-disclosed patterns. Even if you never told Facebook your sexual orientation… I mean, it’s 90%+ probability it can guess it. It can guess your race if you never told it. It can guess…well, forget the pictures. It can guess just from your personality types. There’s so many things that can be computationally inferred about you, predictably powerful, that you’ve never disclosed. And people can’t think like this. I didn’t disclose it but it can be inferred about me.

And bias laundering. A lot of the training data has human biases built into it and now it goes through the machine learning algorithm—you’re like, “Oh, the machine did it.” So this is the opacity and the error pattern that we don’t understand, how are they going to fail? This isn’t a human intelligence. It’s going to fail in non-human ways. These are all our big challenges with biases.

Now why is this compatible with authoritarianism? Think Orwell—ah! Think Huxley, not Orwell. This is my subconscious error. Orwell thought about this totalitarian state where they dragged you and sort of tortured you. That is not really the modern authoritarianism. Modern authoritarianism is increasingly going to be about nudging you in this balance between fear and complacency. And manipulation, right. If they can profile each one of you, understand your desires… What are you vulnerable about? What do you like? How do we keep you quiet? How do we keep you moving politically? The more they understand it one by one, the more they can manipulate this. Because again, it’s asymmetric. It’s an immersive environment, they control it.

Effects of surveillance. In a lot of cases, and I know this from working on movements for so long, is that once you become really aware of surveillance it’s not necessarily this sudden thing where everybody shuts up. It’s self-censorship. People stop posting stuff. They stop talking politics. They stop even thinking to themselves because you’re not going to really feel comfortable sharing it. Surveillance coupled with repression is a very effective tool for spiral of silence. And control, manipulation, and persuasion in the hands of authoritarians.

I want to give just one example from history before I conclude. Films, movies. I like watching a good movie, I like documentaries, I like the craft. It’s really interesting. But early 20th century filmmaking craft was developed by people who ended up in the service of fascism, violent and virulent racism, and there are two very striking examples.

One of them is The Birth of a Nation. This is early 20th century. It’s this horrible, racist…just murderous movie. It is the reason the KKK got restarted. It spread like wildfire—it went viral. It also used the tools of the craft very well. The way we think of A/B testing, dynamic architecture, experimental stuff, social science, engagement, all the things we think of as the tools of our craft: for the craft of moviemaking, it was a leap forward. And it ended up lighting the fire that recreated the KKK. Of course it was on a bedrock of racism. But you have to light those fires for them to go someplace.

The second one is a scene from Triumph of the Will, which was shot by Leni Riefenstahl. There’s a great documentary, I recommend it to everybody in tech, The Wonderful, Horrible Life of Leni Riefenstahl. She lived to be 99. She was this filmmaker, artist, actress, gorgeous woman. And really, she developed the craft of filmmaking. She was really into it. So when Hitler asked her, when the Nazis asked her to film that she was like, “Great! I can practice my craft.” And you got Triumph of the Will and the propaganda regime that was so consequential and efficacious in helping the rise of the Third Reich. And for years after she was like, “I was just practicing my craft.” But craft is not a tool that you can control. It’s not something that’s neutral.

So! Why am I so depressive this morning? I’m not. Because I don’t think we’re there. I just see this inflection point and I feel like we don’t have to do things this way. It’s going that way. We don’t have to do things this way. And one of the things that I really feel hopeful about is that there is this big divergence at the moment between where the world is going; and what in general the tech community thinks; and that technology workers are still a very privileged, unique group in that they can most often walk out of a job and walk into another one.

I talk to people at very large tech companies, they say all sorts of things. They have no fear. I’m like, “Aren’t you afraid?” They’re like, “No, I’ll just walk into another job.” They make a lot of money. So there’s demand. There’s still huge demand. And what I’m saying is this will change. Ten, twenty years, fifteen years, I don’t know when. Like with other technologies, it will start requiring less and less specialization. The deskilling of the work will move up the chain. You’ll get more and more minority.

But right now there’s a large number of technology people who are the ones creating these tools. Who are the ones that have the ability for the most part to walk into a job that pays a reasonable wage. And these companies can’t do this without them.

So this is what I’m thinking. I’m not going to end up with sort of an answer, but how do we do this so that this great tool, the one that I had this hope for when I discovered it in Turkey, how do we do this so that we take the initiative and not just create another algorithm to get people to click on more ads, another surveillant architecture, another thing that will be used potentially in the future by authoritarians?

I just want to think, how do we become like that bus driver? The one that said “Oh wait. You know, an earthquake team needs transportation. Alright, everybody get out.” I want that initiative. And I think we can do it, because once again we have leverage as people in this sector. We have leverage that a lot of other people don’t have.

So this is where I’m going to end. And I’m easy to find. Thank you for listening. And I just hope to have this conversation with more and more people. Thank you.

Presider: Wow. Uh.

Zeynep Tufekci: I’m an optimist. I promise you. If I didn’t have a lot of hope, I just wouldn’t bother with all of this. I just…I’m projecting into the future, and partly… I’m from Turkey, so you know the person who’s seen a movie, a horror movie, and that’s yelling, “Basement! Don’t go into the basement. You with the red shirt, do not go into the basement.” That’s how I feel like. I’m also from North Carolina, so I kind of feel like let’s not go into that basement. So I think there’s hope and time.

Presider: Wow. So I took so many notes my pen is dry now. But, I will focus on a couple of questions here. You mentioned a lot of things in a light that is harmful to many people. And I think many of us in the audience, and certainly myself, have heard a lot of these technologies described as revolutions in marketing technology. As great new ways to unlock customer value. And these paths forward to evolve your business, right. That “digital transformation.” How do you reconcile, as a technology worker, being told one narrative and then living this other reality?

Tufekci: Well, see the thing is when you’re building something, you’re just thinking oh, I’m building value, right. I’m just building value. I’m just helping somebody…some customer value. You know, finding consumers. But every time you collect data about someone, there are these ethical questions. What’s the use policy? How are you keeping it secure? Do you need to retain it? How much retention are you going to do?

So there are all these ethical questions that I feel like we’re skipping over. We’re kind of not even thinking about it. We’re just saying let’s do it this way. And what I want to encourage people to think is, every time you measure something about someone and write it down or record it and they don’t even know that you did it, you create this situation that’s, once again, compatible with a lot of other things.

So yes, you may be also creating for the consumer. So that may well be true. Those things can both be true. But you’re also building the infrastructure for all these other things.

Presider: There are a lot of folks here who are individual developers, individual engineers, that might be asking themselves what role do I play in this? I’m just— And I don’t want to say I’m just taking orders—

Tufekci: We are.

Presider: I’m just filing an issue. I’m just filling this task, you know. I don’t get a say in how my tool gets used, I just build it.

Tufekci: We do get a say in how our tools get used, though, right. I mean this is the thing. And I think we’ve got to do a couple of things. I think we’ve got to assert more interest in how our tools get used. So if you’re working in a large company, there’s a lot of ways to assert. But even if you’re working as an individual person, you can think of how is this tool going to be used. And maybe more importantly, can we build alternative tools? Can we build systems that create the convenience and value that we want but don’t have all the overhead that comes with it?

I mean I’ve been sort of…asking for this. It’s not going to happen, apparently. If you look at even Facebook, right, one of the largest companies in the world. What is it? $400 billion or something like that? If you read its filings, it’s making like ten, twenty dollars a year, per person? So all this data surveillance and persuasion architecture and manipulation and misinformation and fake news and all the stuff that we deal with and all its harmful effects? Give me a subscription version of this. Make me the customer, to have your connected world. You know, even if it meant you were worth I don’t know, ten billion instead of four hundred billion, I think people can survive on that?

There are all these things that are these alternative paths that we haven’t taken, we haven’t explored. And I think everyone from sort of the individual user to someone who’s building a tool, to people working in big tech companies, can say, “Is really our imagination going to be so limited that we’re going to build all the stuff that is so compatible with authoritarianism just to get people to click on a…shoe ad?” I mean, we’re selling ourselves really cheap. We’re building authoritarianism for such a cheap, cheap thing. That’s what I’m thinking, that maybe we can build alternatives.

Presider: One tension that I struggled with with this talk is this notion of authoritarianism, and maybe a comical reduction would be to think of them as this Big Bad, in Joss Whedon terms. There’s this big, potentially malevolent force that wants to manipulate us. But it’s hard to reconcile that with some of the public imagery of let’s say the buffoonery that surrounds some of the authoritarian forces on the global stage. So these people can barely finish a speech sometimes, but we’re also trying to think of them as ones who would actively manipulate us. How do we reconcile these possibilities?

Tufekci: Well, if you read a— I mean, I don’t want to say we’re in the Weimar Republic and we’ve got a new Hitler—we don’t. Okay, so it’s kinda clear I don’t want to trip on Godwin’s Law here. But, if you read Hitler’s biographies, he was a buffoon. He was an idiot in so many ways. But he was very good at one thing, and one thing, and that was firing up people in speeches. If you read his biography, he’s a failed painter…he’s a failed everything. And then he gets in a beer hall and starts spitting out conspiracy craziness. And holds people’s attention. And Hubris is a really good book on this. And then all of a sudden there’s one thing he’s good at and he’s really good at it.

So I’m not going to say our authoritarians are these super smart clever things that are going to be these omnipotent, omniscient things that do this. But they could at the same time be very good at tapping into a fear, a sense of instability, a lot of complicated things. Legacy of racism, a lot of things that kinda exist, kind of converging. They could tap into that very effectively. And while you would be saying—and I have said this so many times in the past few years—how could people believe this? They can. It’s complicated, and we can both sympathize and not sympathize, we can do whatever we want to. But it is quite possible for authoritarianism to be ruled by not the smartest people, but very often they will find a Leni Riefenstahl to hire.

So you know, Hitler may not have been very smart about a lot of things. But he was good at the beer hall speech. And that regime did hire one of the most talented filmmakers of the day. So I’m like, we’re the talented people of our day. How do we not go work for them? Because some people will work for them directly, and some people will work for them indirectly in that the tool you built will then be used to further authoritarianism. And we saw this. Facebook’s algorithm is so happily prone to misinformation… It monetizes misinformation. You can go viral with something crazy, the UX is flat so you can’t tell Denver Guardian—the fake one—from a reputable newspaper. If it starts an argument in your mentions, it’s engagement. Facebook’s going to push it to more people.

So you built a tool that’s supposed to connect people, well it did connect people. But around this. It was very compatible with it. So it doesn’t really matter if you thought the tool was going to be used for something, if it has these features this is where it’s going to go. And people have warned them about this, for example. Before the election they were going ehhh. But here we are.

And I’m not saying— Everything’s multi-causal. In a close election, it wasn’t one thing. But I follow social media pretty closely on this thing. Misinformation was… It had a spike. It had a huge spike in this election. And it was partly spammers just monetizing Facebook’s algorithm, Google’s ad networks. And it was part of this polarizing environment feeding into it. And it was partly dark targeting ads. It was all of this combined. And technology was at the center of enabling new things, furthering existing difficult things.

Presider: So… And this’ll be my last question. Give me a second to formulate this here. As technology workers who have quite a bit of privilege already, and whose tools are being potentially used to strike at those who are less privileged than us, what then… Oh man, how do I even put this?

For those who are being targeted, for those who are manipulated by information easily, for those who are less savvy about technology than we are, what is our responsibility to them, as technology workers, given that we have access to this ecosystem that they may not?

Tufekci: Right. So this is where I get into my wide diversity is important spiel. And I don’t mean diversity of like, token diversity in that you just bring more Stanford CS women into the room. I mean, I identify as a geek person. I love my tribe. But we kinda have our own sort of tendencies and specialties?

What we really need is more people in the room where design is being done. More designers. And just people we talk to in all sorts of… From all levels of society, that can help us think of what’s happening. Because it’s not always easy to imagine how something’s going to play out. But very often, the people from those communities, they’re going to be the canaries in the mine. Because you can sit with them either, if we had more of them, making the technology. And until then we’re just listening to more of them.

I’ll give you an example from Facebook. I give Facebook examples because it’s more familiar to people. It’s not that Facebook’s the only problem, not at all. But a couple years ago, do you remember when Facebook had a year-end thing where it said “It’s been a great year!”? And it algorithmically picked a picture and put a party theme around it.

So, it came to people’s attention, especially Facebook’s attention, because someone from our community, a CSS creator, a technologist very well-loved, had a very unfortunate, horrific tragedy that year. He lost his 6-year-old daughter to cancer. And a lot of us knew about it because he was blogging, and he was a blogger already, good writer, technologist. And you know, everybody was heartbroken.

So what does Facebook’s algorithm do at the end of the year? Picks up a picture of his daughter, puts a party theme around it, says it’s been a great year. Right during the holidays, you know. It’s been six months, they’re struggling. He wrote this heartbroken post about algorithmic cruelty. He was very kind, in fact, what he wrote.

So how could this happen? Well, head of Facebook product at that point was a 26-year-old Stanford graduate, whose stock options might’ve been great. He’s surrounded by other Stanford graduates whose stock options are great. They work at Facebook. They live in San Francisco. It’s been a great year! Right?

The kind of failure of imagination, that it might not have been a great year for a billion and a half people. This is so elementary. I mean you do not need a Stanford CS degree. I am telling you, literally walk out, pull three people, and ask them, “Do you think it’s been a great year for a billion and a half people?” You will get the correct answer.

And this is what I mean. We can be so narrow. Some of the smartest people in the world can make such obvious, horrible mistakes, right. And this should call for humility. We need to be talking not just among ourselves. We need to broaden who gets to be a technologist. And there are a lot of issues about doing it as fast as we can. And even if we can’t do it right now and it’ll take some time, I am literally saying pull people off the street and say, “Is this a good idea?” and you will get insights that we as a geeky community, a well-paid community, more male than female, less some minorities… I mean, we won’t have those life experiences to envision all the potentials. And I think that’s a really healthy way to go, is to try to think through.

As I said, a lot of times I think through things partly because I’m from Turkey and that kinda informs my understanding. Making those intersections is really important, and that’s why broadening, really bringing more life experiences into how we think about technology, would help us do it better and would also help avoid such horrible mistakes that we keep seeing again and again, again. And that’s kinda my little pitch about that.

Presider: Thank you.

Tufekci: Thank you.

Presider: Thank you so much.

Further Reference

Session description at the DrupalCon site