In ten years of doing research and collaborations and being mentored, I’ve learned to see disabled people as doing the most creative work that is also the most urgent work of body meeting world. And I think it’s really critical to keep both of these things closely together.


Self-sovereign identity is what sits in the middle, enabling individuals to manage all these different relationships in a way that is significantly less complex than each of those institutions needing to have a business relationship with each other to see those credentials.

Community is always part of a system that we sometimes can or cannot see or recognize. And in Gerard O’Neill’s proposals for these islands in space, those communities…were supposed to perform a very specific function in a larger system. They were supposed to be experiments.

I personally am not worried about settlements. I think they’re so far in the future that we can’t predict what they’ll look like. We can’t even keep human beings, particularly a lot of human beings, alive in space or have real settlements, the way we envision a colony or a settlement. I don’t think the lack of sovereignty is going to hurt any of this.

I think we’re already moving into a very—uncomfortably for most of us, into a place where nation-states, governments, are being forced to cede authority to corporations. And that is going to, I assume, happen faster and faster. And if you throw in space, if you throw in the limitlessness of space, then I mean…the sky’s the limit so to speak. I don’t know what the…where that takes us.

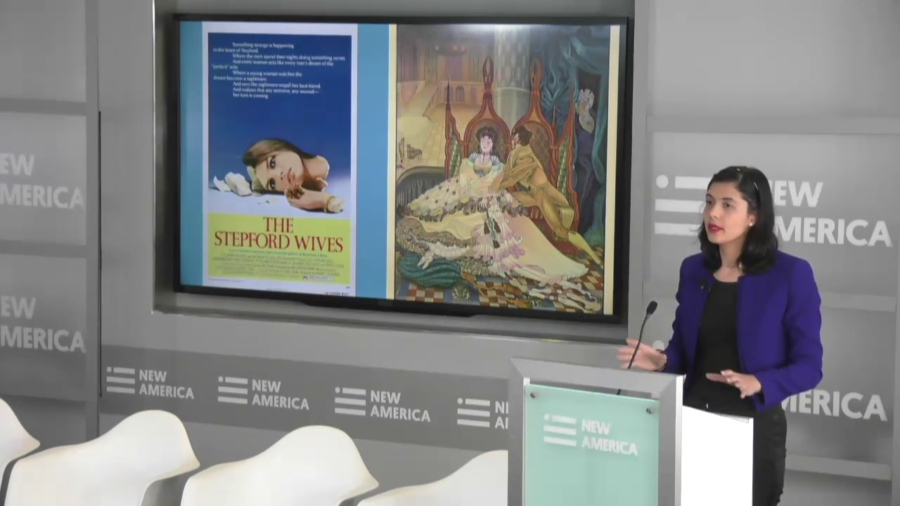

AI Policy Futures is a research effort to explore the relationship between science fiction around AI and the social imaginaries of AI, and what those social imaginaries can teach us about real technology policy today. We seem to tell the same few stories about AI, and they’re not very helpful.

This is going to be a conversation about science fiction not just as a cultural phenomenon, or a body of work of different kinds, but also as a kind of method or a tool.

We came up with the idea to write a short paper…trying to make some sense of those many narratives that we have around artificial intelligence and see if we could divide them up into different hopes and different fears.

When data scientists talk about bias, we talk about quantifiable bias that is a result of, let’s say, incomplete or incorrect data. And data scientists love living in that world; it’s very comfortable. Why? Because once it’s quantified, if you can point out the error, you just fix the error. What this does not ask is: should you have built the facial recognition technology in the first place?