The goal of MICrONS is threefold. One, they asked us to go and measure the activity in a living brain while an animal actually learns to do something, and to watch how that activity changes. Two, to take that brain out and exhaustively map the “wiring diagram” of every neuron connecting to every other neuron in that particular region of the animal’s brain. And third, to use those two pieces of information to build better machine learning. So let it never be said that IARPA is unambitious.


What is this condition? I would summarize it as people extending computational systems by offering their bodies, their senses, and their cognition. Specifically, bodies and minds that can be easily plugged in and later easily discarded: bodies and minds that are algorithmically managed and under permanent pressure toward constant availability, efficiency, and perpetual self-optimization.

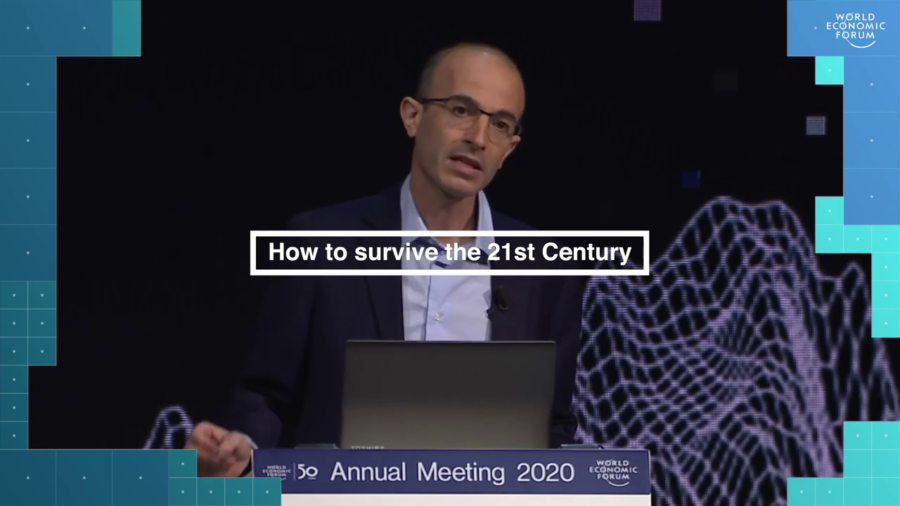

Of all the different issues we face, three problems pose existential challenges to our species. These three existential challenges are nuclear war, ecological collapse, and technological disruption. We should focus on them.

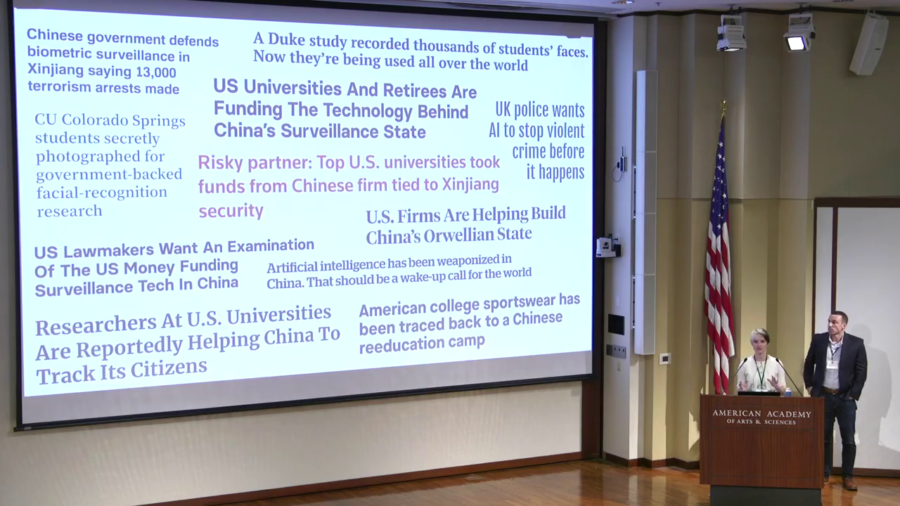

We wanted to look at how algorithmic decision-making systems and surveillance systems feed into this kind of targeting decision-making. In particular, what we’re going to talk about today is the role of the AI research community: how that research ends up being used in the real world, with real-world consequences.

Positionality is the specific position or perspective that an individual takes given their past experiences and their knowledge; their worldview is shaped by positionality. It’s a unique but partial view of the world. And when we design machines, we embed positionality into them through all of the choices we make about what counts and what doesn’t.

AI Blindspot is a discovery process for spotting unconscious biases and structural inequalities in AI systems.

I’m just going to say it, I would like to completely blow up employment classification as we know it. I do not think that defining full-time work as the place where you get benefits, and part-time work as the place where you have to fight to get a full-time job, is an appropriate way of addressing this labor market.

AI Policy Futures is a research effort to explore the relationship between science fiction about AI and the social imaginaries of AI, and what those social imaginaries can teach us about real technology policy today. We seem to tell the same few stories about AI, and they’re not very helpful.