Kevin Bankston: Next up I’d like to reintroduce Chris Noessel, who, in addition to his day job as a lead designer with IBM’s Watson team, somehow finds the time to write books like Designing Agentive Technology: AI That Works for People and, a personal favorite of mine, Make It So: Interaction Design Lessons from Science Fiction. That book led him to establish the scifiinterfaces.com blog, where he’s been doing some amazing work surveying the stories we are and aren’t telling each other about AI, which he’s going to talk about right now in our third and final solo talk.

Chris Noessel: So, hi. Thank you for that introduction; that saves me a little bit of time in what I’m about to do. I am an author. I’m a designer of non-Watson AI at IBM in my day job. And I am here to talk to you about a study that I’ve done for scifiinterfaces.com.

Let me begin with a hypothetical. Let’s say we were to go out and take a poll of the vox populi, the voice on the street, and ask them, “What role would you say that AI should play in medical diagnosis?” Then think about what their answers would be if we showed them this:

Baymax from the Big Hero 6 movie. Then think about how their answers would change if we then showed them this:

That’s the holographic Doctor from Star Trek: Voyager, whom we just mentioned.

And then, of course, how would their answers change if we reminded them of Ash from Alien, who was ostensibly a doctor on that ship? Right?

These examples illustrate that how people think about AI depends largely on how they know AI. And to the point, most people know AI through science fiction, which raises the question, yeah? What stories are we telling ourselves about AI in science fiction?

So, I first came upon this question during an AI retreat in Norway, which turned out to be an unconference that was sort of sprung on us. They said, “Okay, what do you want to do here?” I had just completed an analysis of the movie Forbidden Planet in the context of the Fermi Paradox, and it had required a really broad-scope analysis, unlike the usual ones I do on the blog. So I simply asked that question.

But answering that question takes a lot. I thought, of course, that I could do it in a two-hour conference session, but no, it took me several months after I got home. Because what I needed to do was look at all science fiction movies and television shows, and that’s quite a lot. I don’t think I’ve captured them all, and of course I’m limited for the most part to English-language works. I am certainly limited to movies and television. But I wound up including 147 titles in total, and all the data is live in a Google Sheet that you can access if you like.

I took a look at each one of those titles and tried to infer what its takeaway is. I said, okay, if you were to watch this story, leave the cinema or get up off your couch, and be asked, “So what should we do about AI?”, what would you answer?

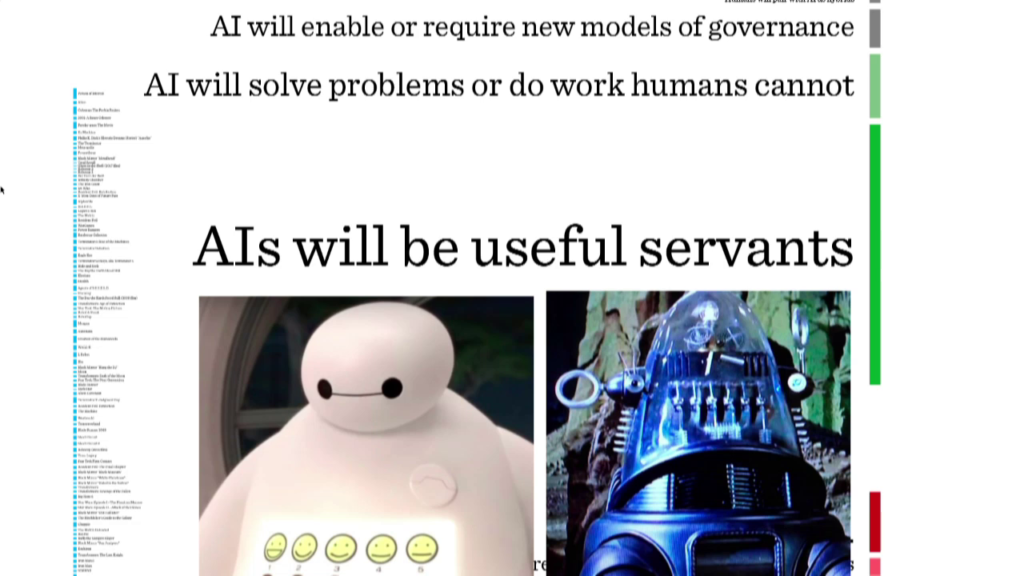

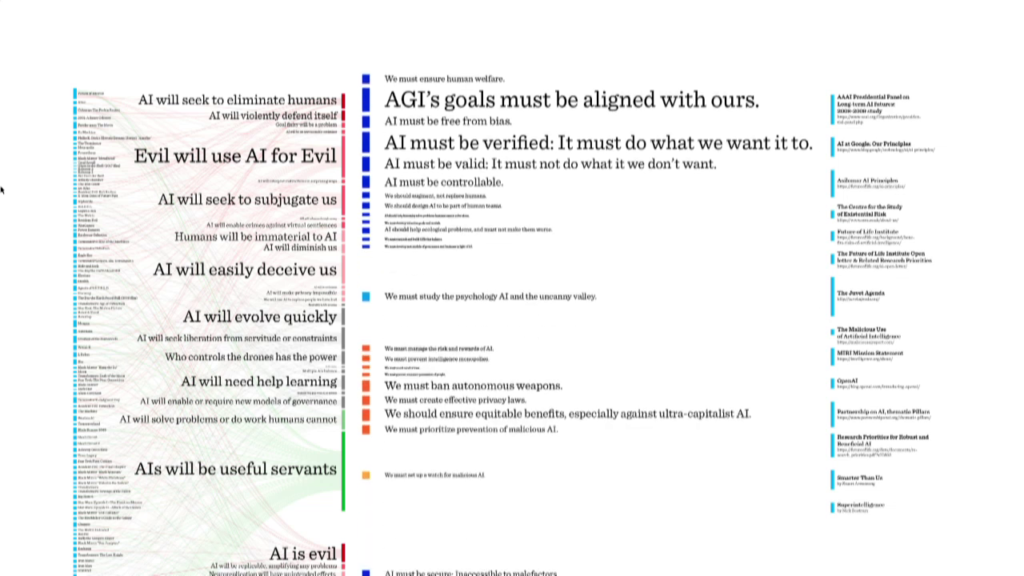

That led to a series of takeaways, and those takeaways run quite the gamut: everything from “Evil will use AI for Evil” to “AI will seek to subjugate us,” which is the perennial Terminator example but also covers the Sentinels in The Matrix. In the diagram that I’m slowly building behind me, bigger text represents the takeaways that appeared more commonly across the surveyed movies and shows.

It also includes things like “AI will be useful servants.” I mentioned that happy era of sci-fi AI; Robby is part of that, and much more recently Baymax.

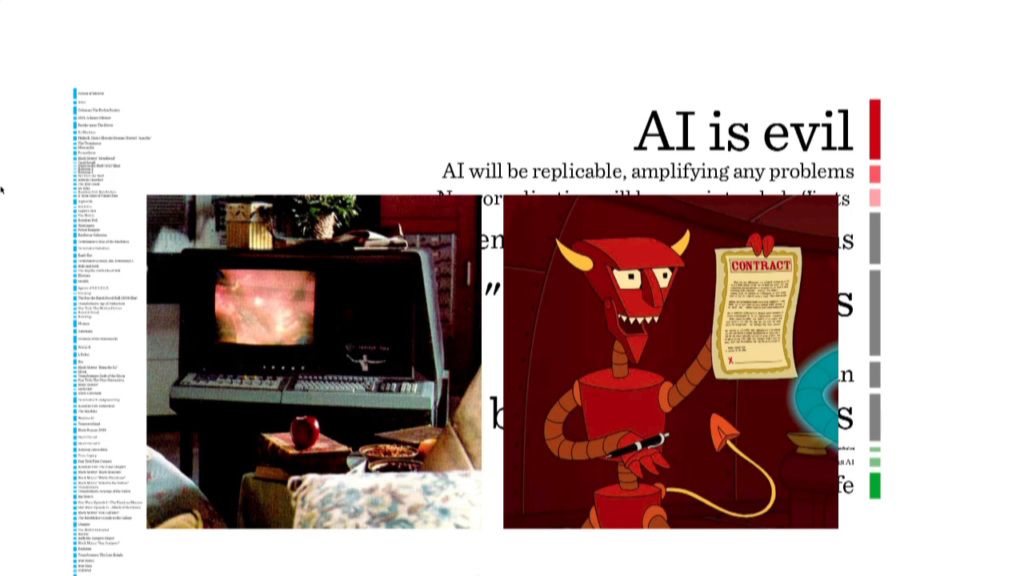

And it includes things like “AI is just straight-up evil”: you turn on the machine and it’s trying to kill you. There are comic examples like the Robot Devil from Futurama, but also bad and disturbing movies like Demon Seed with the Proteus IV AI.

Once I did that, I had thirty-five takeaways, all connected back to the 147 properties I had gathered. If you head over to the website, you can see it. It’s hard to see in this projection, but there are lines showing which movies and TV shows connect to which takeaways. So if you’re enough of a nerd like me, you can study it and ask, “Where does RoboCop fit in all this?”

So that was my analysis of what stories we are currently telling. It’s a bottom-up analysis, a folksonomy. But it gave me a basis.

Now, to answer the other side of that question: how do we know what stories we should tell about AI? That’s a tough one. It’s a big value judgment, and I’m certainly not going to make it, so I let other people make it. Those particular people were the ones who had produced think tank pieces and thought pieces, or written books, on the larger subject of AI. I thought I would find a lot more than I did. I wound up with only fourteen manifestos, but they include everything from the AAAI Presidential Panel on Long-Term AI Futures to the Future of Life Institute, the MIRI mission statement, OpenAI, and Nick Bostrom’s book.

I read these fourteen manifestos one at a time. And instead of takeaways, which is what we got from the shows, on this side I was able to ask: what do they directly recommend we do about AI?

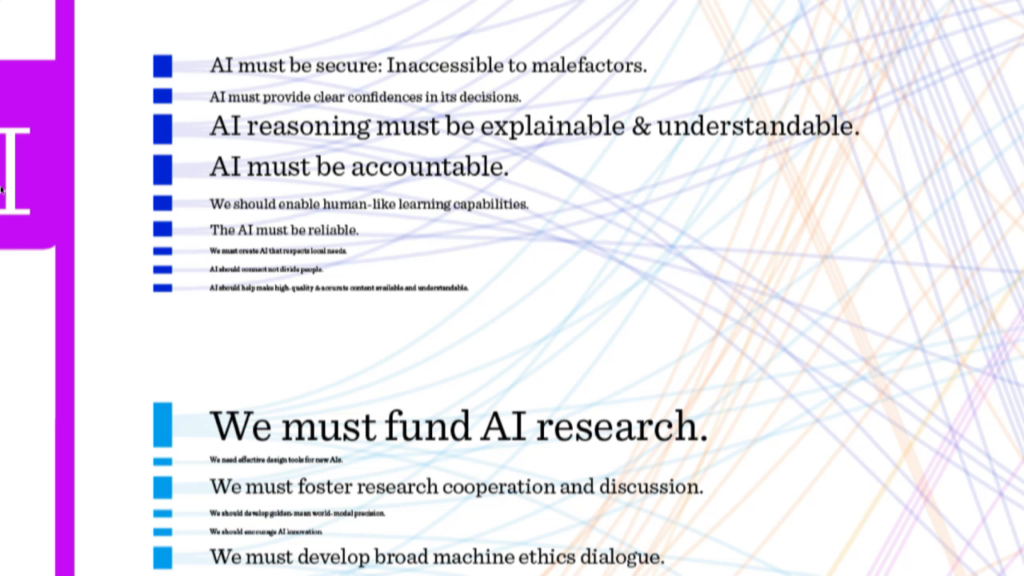

That gave me another list. It includes things like “Artificial General Intelligence’s goals must be aligned with ours,” or “AI must be valid: it must not do what we don’t want.” That’s a nuanced thought. As with the takeaways, larger text in this diagram means the imperative was more represented in the manifestos. The list includes things like “We should ensure equitable benefits, especially against ultra-capitalist AI,” and this really tiny one, “We must set up a watch for malicious AI,” all the way down to the bottom: “We must fund AI research” and “We must manage labor markets upended by AI.”

And I won’t go through all of these. I don’t have time. But in total there were fifty-four imperatives that I could sort of pull out from a comparative study of those manifestos.

So we have a set of takeaways from science fiction on the left, and a set of imperatives from the manifestos on the right. Really, it’s just a matter of running a diff, if you know that computer terminology: asking what on this side maps to what on that side, and what’s left over.
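The “diff” here is essentially a set comparison. What follows is a minimal sketch in Python, assuming each sci-fi takeaway has already been hand-linked to the manifesto imperatives it supports. The sample strings echo examples from this talk, but the links between them and the `coverage` score are hypothetical illustrations, not the study’s actual data or its grading method.

```python
# Hypothetical sample data: the strings echo examples from the talk, but the
# links between them are assumed for illustration, not taken from the study.
TAKEAWAYS = {
    "Evil will use AI for Evil",
    "AI is evil out of the gate",
    "AI will want to become human",
}
IMPERATIVES = {
    "We must set up a watch for malicious AI",
    "AI reasoning must be explainable and understandable",
    "We must fund AI research",
}
# Each pair links a sci-fi takeaway to a manifesto imperative it supports (assumed).
LINKS = {
    ("Evil will use AI for Evil", "We must set up a watch for malicious AI"),
}


def run_diff(takeaways, imperatives, links):
    """Split both lists into what maps across and what is left over."""
    linked_takeaways = {t for t, _ in links}
    linked_imperatives = {i for _, i in links}
    pure_fiction = takeaways - linked_takeaways    # in sci-fi, not in manifestos
    untold = imperatives - linked_imperatives      # in manifestos, not in sci-fi
    return linked_takeaways, pure_fiction, untold


def coverage(imperatives, links):
    """Share of imperatives with at least one linked takeaway (one possible score)."""
    linked = {i for _, i in links} & imperatives
    return len(linked) / len(imperatives)


if __name__ == "__main__":
    kept, fiction, untold = run_diff(TAKEAWAYS, IMPERATIVES, LINKS)
    print("Keep telling:", sorted(kept))
    print("Pure fiction:", sorted(fiction))
    print("Untold AI:", sorted(untold))
    print(f"Coverage: {coverage(IMPERATIVES, LINKS):.1%}")
```

The matched takeaways are the stories worth continuing to tell; the two leftover sets correspond to the “pure fiction” and “untold” lists.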

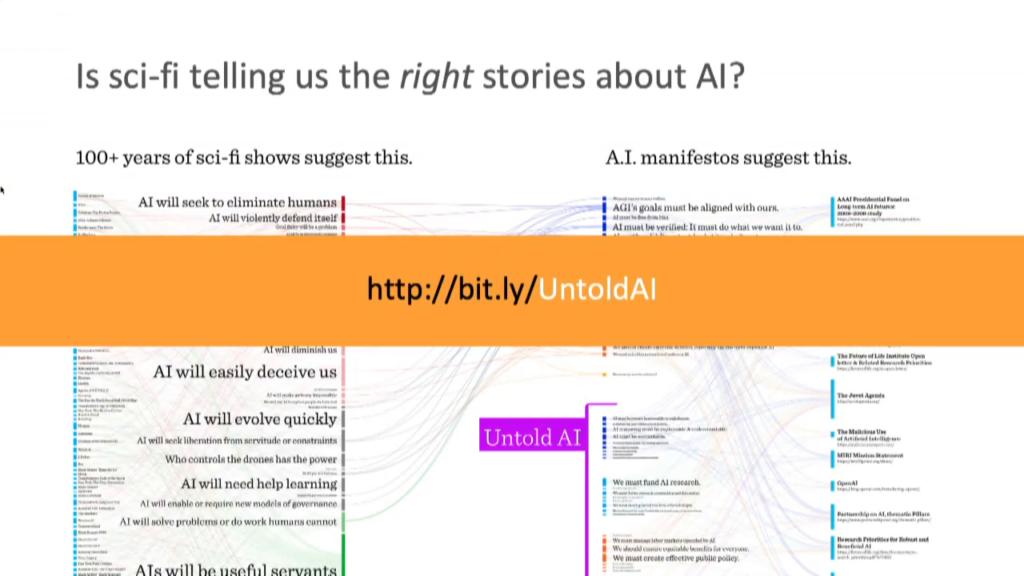

Again, this is a lot of data, and I produced a single graphic that you can see at that URL (I’ll show it several times in case you want to write it down).

So a hundred-plus years of sci-fi shows suggest this, and AI manifestos suggest this. Then I ran the diff; there are some lines there that are hard to see on this slide. The main finding is, of course, that some things map from the left to the right. Those are stories that we are telling and should keep on telling.

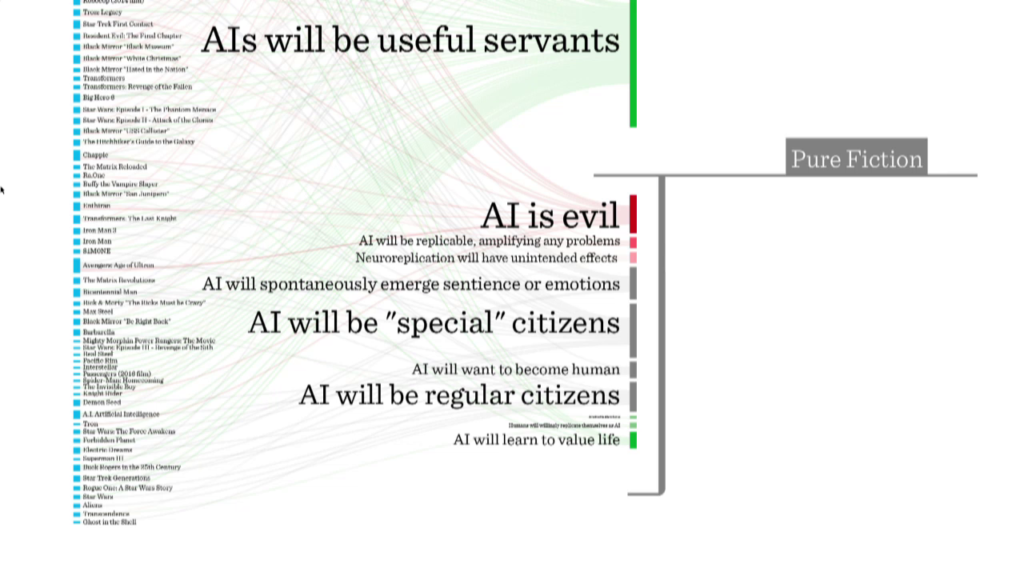

But those are not the interesting ones. The interesting ones are the ones that don’t connect across. So this is the list of takeaways from science fiction that don’t appear in the manifestos. We can think of these as pure fiction: things we need to stop telling ourselves, if we trust the scientists as the guideposts for our narrative. They include things like “AI is evil out of the gate.” Now of course, there’s an imperative way up there that says evil people will use AI for evil, and that’s still in. But this one, right here: nobody believes that AI is just an evil material that we should never touch.

Interestingly, the manifestos are not interested in the citizenship of AI, partially because that presumes general AI, and the manifestos are much more concerned with the near-term here and now. That covers takeaways like “they’ll be regular citizens” versus “they’ll be special citizens,” and even the notion that AI will want to become human. Sorry, Data. Sorry, Star Trek.

So there is a list of pure-fiction takeaways that we should stop telling ourselves. But that was not the point of the study. The point of the study I wanted to do was on the other side: the list of things that the manifestos tell us we ought to be talking about in science fiction…but we’re not.

They include things like “AI reasoning must be explainable and understandable.” I’d completed this right around the time of the GDPR, so I’m really happy that that’s out there. They also include things like “We should enable human-like learning capabilities.” At a very foundational level, AI has got to be reliable, because if it’s not and we depend upon it, what happens? They include things like “We must create effective public policy,” which covers effective liability, humanitarian, and criminal justice laws. And they include things like finding new metrics for measuring the effects of AI and its capabilities.

Again, I’m not going to go into those individual items. They’re fascinating, and you can head to the blog posts to read them all. I also did lots of other analysis of this data beyond that set of takeaways. If you want to know which country produces the sci-fi that is closest to the science, it turns out to be Britain. The country that’s most obsessed with sci-fi is, surprisingly, Australia. And of course the most prolific producer of AI shows is the United States, even though we’re far behind India in total movie production.

This is a diagram of the valence of sci-fi over time, if you’re interested: it’s slowly improving, but it hasn’t reached positive yet. I even analyzed the takeaways in science fiction against their Tomatometer scores from Rotten Tomatoes. So if you’re making a sci-fi movie, you can actually see which takeaways you can bet on and which ones you should probably avoid, just for the ratings. But that’s all covered in the longer series of blog posts.

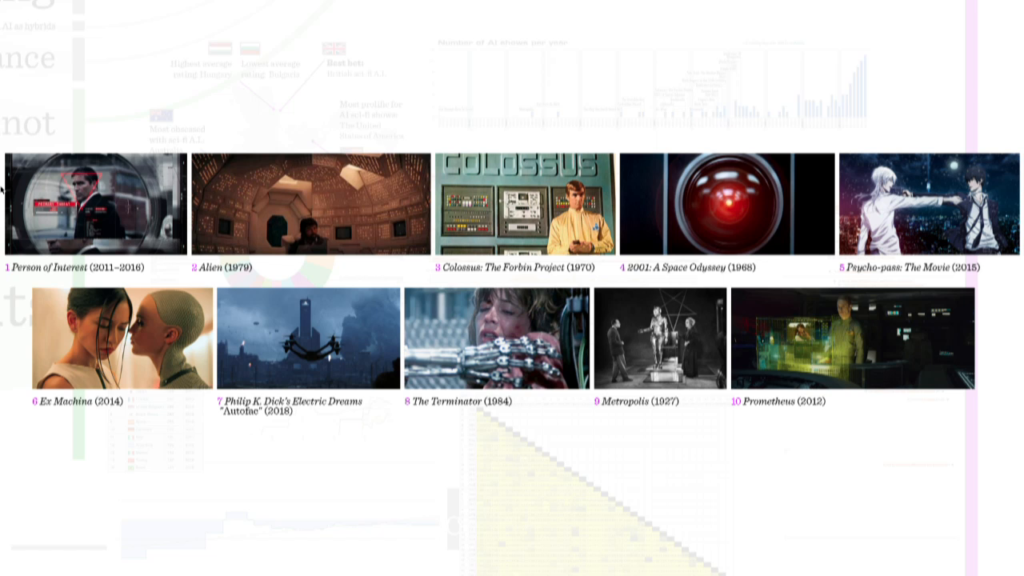

I also included an analysis of which shows stick to the science best, in order to reward them and draw more attention to them. Damien mentioned Person of Interest, and that’s number one in this analysis. The list also includes Colossus: The Forbin Project, the first Alien, and Psycho-Pass: The Movie, which is the only anime that made this particular list. And even Prometheus: I don’t like the movie, but the AI in it is pretty tight.

I also included a series of prompts. Which is to say, okay, if I were to give writers prompts about some of these ideas, could I spark some stories? Here’s an example: What if Sherlock Holmes was an inductive AI, and Watson was the comparatively stupid human whose job was to babysit it? Twist: Watson discovers that Holmes created the AI Moriarty for job security.

So, I tried to put these prompts out there to see if anyone’d take the bait. So far no one has, but I’m doing my part.

And since no one else had taken the bait, I’ve begun writing some of these myself: I tried my hand at a near-term narrow AI problem with the self-publication of this last year.

Okay. So, that’s a lot to take in, and I understand that. It covers something like 17,000 words on the blog. So to summarize it all, I want to do what I did on the poster I created, which is to read off the five categories of findings. These are nuanced, so I’m going to read them.

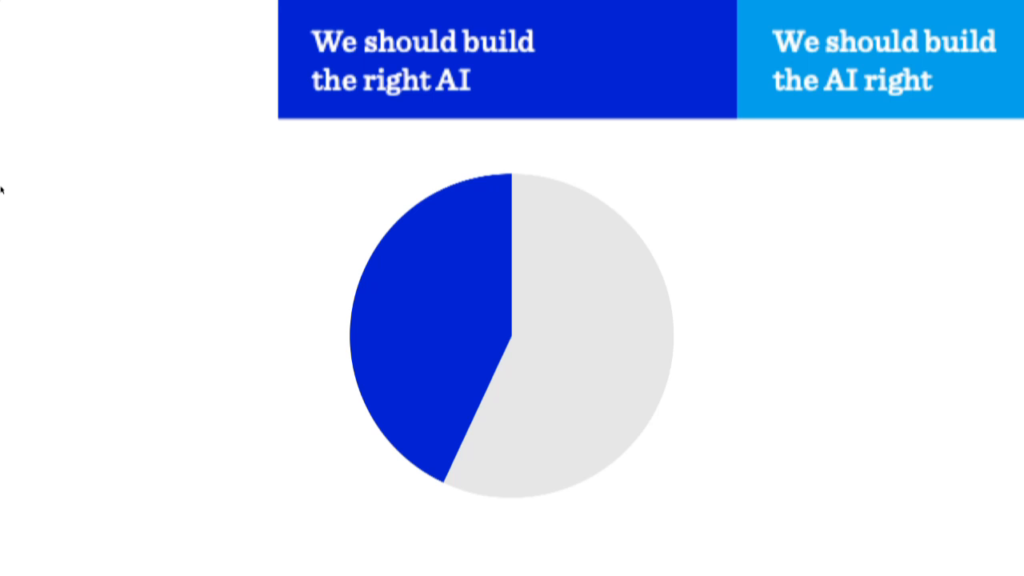

The first category of stories we should be telling ourselves is that we should build the right AI. Narrow AI must be made ethically, transparently, and equitably, or it stands to be a tool used by evil forces to take advantage of global systems and just make things worse. As we work towards general AI, we have to ensure that it’s verified, valid, secure, and controllable. And we must also be certain that its incentives are aligned with human welfare before we allow it to evolve into superintelligence and, therefore, out of our control. Sadly, sci-fi misses about two-thirds of this in the stories that it tells, and I think that’s largely because it isn’t telling stories about how we make AI good AI.

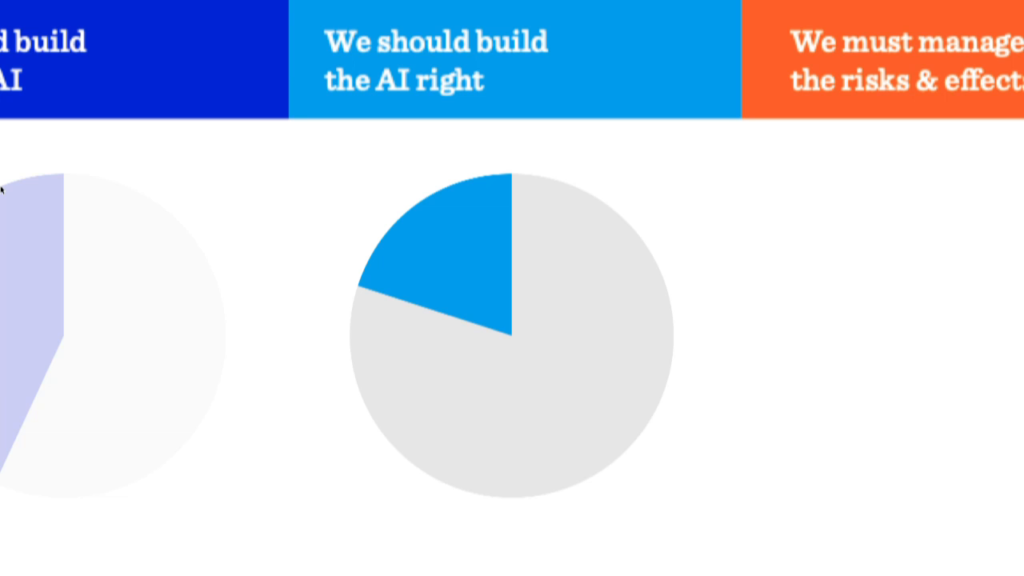

The next category is that we should build the AI right. This is really about the process: what do we do as we’re constructing the thing? We must take care that we are able to go about building AI cooperatively, ethically, and effectively. The right people should be in the room throughout to ensure diverse perspectives and equitable results. If we use the wrong people or the wrong tools, it affects our ability to build the right AI; more to the point, it’ll result in an AI that’s wrong at some critical point. Sci-fi misses most of this: nearly 75% of these imperatives from the manifestos just aren’t present in sci-fi.

The third out of five is that it’s our job to manage the risks and the effects of AI. There weren’t a ton of takeaways related to this, so it’s a very crude sort of metric. We pursue AI because it carries so much promise to solve so many problems at a scale that humans have never been able to manage ourselves. But AI carries with it risks that scale as it becomes more powerful. So we need ways to clearly understand, test, and articulate those risks so that we can be proactive about avoiding them.

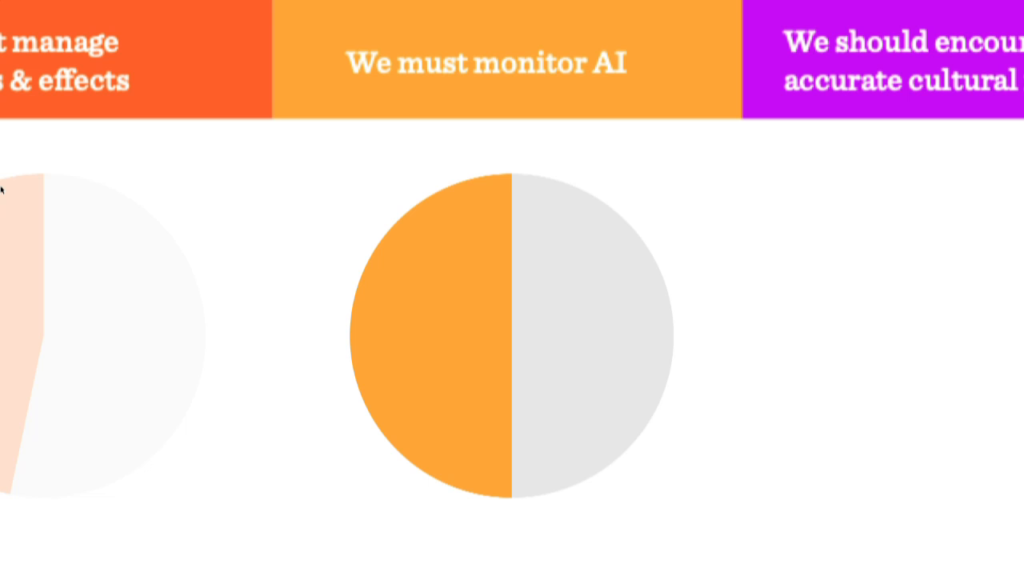

The fourth out of five is that we have to monitor AI. AI that is deterministic isn’t really worthy of the name AI. But building non-deterministic AI means that it’s also somewhat unpredictable: we don’t know what it’s going to do to us. It can also allow bad-faith providers to encode their own interests in its effects. So to watch out for that, and to know whether well-intended AI is effective or going off the rails, we have to establish metrics for its capabilities, its performance, and its rationale…and then build the monitors that monitor those things. Sci-fi only gets about half of this right.

And the last supercategory in the report card of science fiction is that we should encourage accurate cultural narratives. It’s very low contrast on the slide, but we just don’t talk about this. Sci-fi rarely tells stories about how we tell stories about AI, if at all; certainly not in the survey. But if we mismanage that narrative, we stand to negatively impact public perception and certainly legislators (to the point of this event), and even to encourage Luddite mobs, which nobody needs.

Okay. So, that’s the total report card: the short-form takeaway from sci-fi as compared to AI manifestos. The total grade, if you will, is only about 36.7%. Sci-fi is not doing great. But that’s okay, right? We should have tools such as this analysis to poke at the makers of sci-fi, and even to encourage other creators to create new, better, and more well-aligned AI. That’s part of why I’ve done this, and part of why I’m trying to popularize the project. If you want to learn more about it, I’m repeating that URL here for you.

If you’re really curious about this kind of work, I wrapped up Untold AI last year on the blog. I’m dedicating all of 2019 to analyzing AI in sci-fi, and right now I’m in the middle of analyzing gender and its correlations with things like embodiment, subservience, and germane-ness. You can follow that series, Gendered AI, on the Sci-fi Interfaces blog.

And that’s it. I finished with a minute to spare, so there’s an extra minute for questions if there’s time. Thank you.