Of course we’re avid, avid watchers of Tucker Carlson. But insofar as he’s like the shit filter, which is that if things make it as far as Tucker Carlson, then they probably have much more like…stuff that we can look at online. And so sometimes he’ll start talking about something and we don’t really understand where it came from and then when we go back online we can find that there’s quite a bit of discourse about “wouldn’t it be funny if people believed this about antifa.”

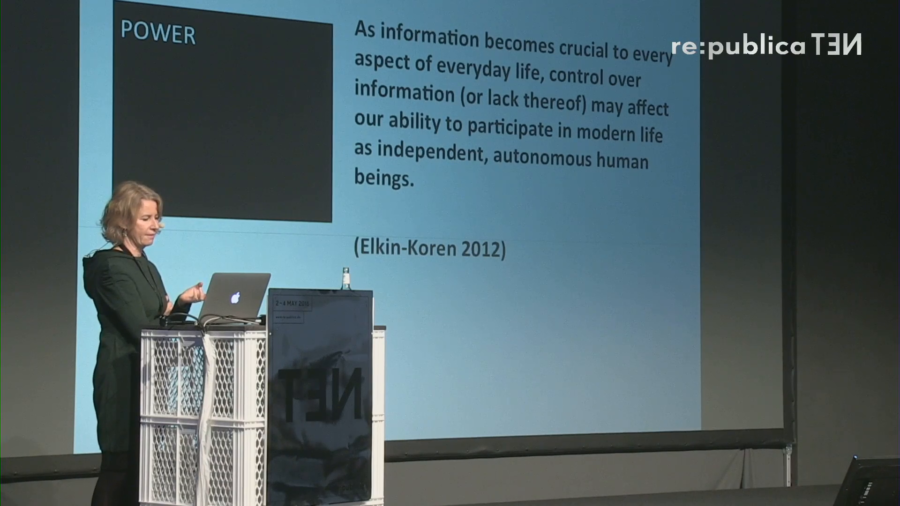

Extremists around the world are increasingly being thrown off of social media. And so…the big question that I’m going to try to answer is, is this effective? Is it good? Is it good for the platforms? Who does it benefit? Is it good for the platforms, is it good for the extremists, is it good for the Internet, is it good for society at large?

We have been documenting and researching human rights or digital rights violations that are taking place in Palestine and Israel. And one of the most recent case studies or work that we’re looking into is the use of predictive policing by Israel, which is rather a sensitive issue given that there isn’t a lot that we know about the subject.

Dangerous speech, as opposed to hate speech, is defined basically as speech that seeks to incite violence against people. And that’s the kind of speech that I’m really concerned about right now. That’s what we’re seeing on the rise in the United States, in Europe, and elsewhere.

I teach my students that design is ongoing risky decision-making. And what I mean by ongoing is that you never really get to stop questioning the assumptions that you’re making and that are underlying what it is that you’re creating—those fundamental premises.

I became tired of knocking on the same doors and either seeing the same people or different people. But I really just felt like I was in this cycle of faux liberation, where I would feel a victory, and the victory was probably formed around the RFP for the grant that we needed to get in order to do our work.