Jillian C. York: Thank you everyone. And I know that everyone here is inside instead of outside where it’s still beautiful. And I know that I’m standing between you and drinks. So I’m going to keep this talk fairly short.

But I want to just preface this by saying that I have to say I don’t have all the solutions to this problem. I don’t think that anyone does. And right now in the US, where I’m from, I heard the news just a couple of hours ago that a young boy, an 8 year-old boy, was lynched in my home state. He survived. He’s in a hospital. But to me, what’s happening in my own country right now is really terrifying and that’s why I’m seeking out solutions.

That said I also have to introduce this by saying that I’ve been working for the past six or seven years as a free speech advocate. And free speech is a really difficult topic in the US right now, because a lot of people believe that free speech means that all speech is equal. I don’t believe that. However, I remain against censorship and I’m going to tell you why.

So first of all what is hate speech? I think that there’s probably a number of different people in this room from different countries, and we all have different ideas about what this means.

So, here’s the United Nations definition. “The advocacy of national, racial or religious hatred that constitutes incitement to discrimination, hostility or violence.” That’s probably one of the better definitions that I’ve seen of this topic.

…content that directly attacks people based on their race; ethnicity; national origin; religious affiliation, sexual orientation; sex, gender, or gender identity; or serious disabilities or diseases

Facebook Community Standards, Hate Speech section [presentation slide]

And then we have Facebook’s definition, which is a little bit more vague. Content directly attacks people based on a number of different reasons, as you can see. And this is how Facebook adjudicates hate speech.

incitement to hatred against segments of the population and refers to calls for violent or arbitrary measures against them, including assaults against the human dignity of others by insulting, maliciously maligning, or defaming segments of the population.

[presentation slide]

We have the German law—I’m living in Germany right now, which is why I chose this one. Incitement to hatred against segments of the population, specific calls for violent or arbitrary measures against them, including assaults against human dignity, by insulting, maliciously maligning, or defaming segments of the population. I like that definition. It’s a little bit more specific and it deals with the topic of incitement, which I’m about to talk about.

1989 Prohibition of Incitement to Hatred Act bans “written material, words, behaviour, visual images or sounds [that], as the case may be, are threatening, abusive or insulting and are intended or, having regard to all the circumstances, are likely to stir up hatred.”

[presentation slide]

This one’s a little bit out of date. I was in Dublin last week and I also gave this talk there. And this one looks at Ireland’s hate speech law, which I thought was really interesting (The bold in the text is my own.) that they used the phrase “to stir up hatred,” as though that’s a common understanding that everyone has. And I found that rather interesting. The reason that I put these different laws and different rules on the screen is to demonstrate how much the definitions of hate speech vary from place to place, company to company, government to government. Basically what I mean is that no two people seem to have the same definition of what hate speech means.

And here’s the United States, of course, [screen not visible] where we don’t have hate speech as a concept, legally. In the US, the First Amendment protects all speech, including hateful speech but not including incitement. And that’s why I want to make this line between hate speech and incitement.

So, what is the harm of hate speech? I think that this is clear to a lot of people. I’m sure that many of you have experienced hate speech in one form or another, whether it’s against you or against someone you know, someone you care about, or just a group in your own country.

So this particular slide referred to a specific study that was about the correlation between hateful speech, or inciting speech, against Muslims and hate crimes. That is to say violent crimes against people specifically. This was a US-based study. And what it did was it looked at search terms related to Islam and related to Muslims, such as “kill Muslims” and “are any Muslims not terrorists.” I don’t have the speaker notes, but these are the type of searches that these researchers looked at. And what they found is a direct geographic correlation between those kinds of search terms and hate crimes, based on a period of I think about eleven years across a number of different geographies. And that correlation was very strong. And so we know that hate speech has a direct impact on individuals, that it can in fact have a violent impact on individuals, particularly when that speech is inciting violence specifically.

So, the Dangerous Speech Project is a really interesting project that I suggest everyone look at, which is why I’ve thrown the URL up there. They seek to make a distinction between the concept of hate speech, which can be a number of things as we’ve noted—things that are just hurtful, things that hurt feelings, things that hurt people psychologically. And I don’t want to diminish that. But what they seek to do is draw the difference between things that are merely harming psychologically and things that actually have a direct correlation to violence.

So dangerous speech, as opposed to hate speech, is defined basically as speech that seeks to incite violence against people. And that’s the kind of speech that I’m really concerned about right now. That’s what we’re seeing on the rise in the United States, in Europe, and elsewhere.

Delete Hate Speech or Pay Up, Germany Tells Social Media Companies, The New York Times

And so, what’s happening as a result of this is that governments and companies together are seeking ways to throw that speech under the rug and hide it from our eyes. Now what does this mean? Now, on the one hand you have governments like Germany’s government which has had these laws in place for a long time, which seeks to censor that speech based on longstanding laws. And Germany is basically telling social media companies, as this headline says, “Delete speech or pay financially.”

Now, companies like Facebook and Google and Twitter have been resistant to these kinds of laws, but at the same time they also— (Just another one; the EU is also doing the same thing.) At the same time, these companies have also always had their own rules in place that prevent hateful speech. And this is the point that I want to get to, that censorship also has harms.

Now again, I am against censorship but I am not for all speech. And I think that that’s a careful distinction that I need to make at this point in time. Because speech can harm, but censorship can harm, too.

A few years ago, I cofounded a project called onlinecensorship.org. And what we do is we look specifically at the harms of censorship on marginalized populations. We started by encouraging people to submit reports to us when they’d experienced unjust or unjustified censorship. Of course we do receive other kinds of reports, too, from people who are engaging in incitement and violent kinds of racist speech. But a lot of the reports we receive are people who yes, are being censored from other kinds of rules that are not related to hate speech. But we also get reports from people who are censored by companies based on speech that companies deem as hate speech but that actually is not. And here’s a couple of examples of that.

So this is one that came up a few weeks ago. Basically Facebook— I’m not sure how many people here are native English speakers so I’m going to explain this one. The term “dyke” is a term that has been used to harm, to hurt, and to malign people. It’s used against lesbians. But at the same time, lesbians have also chosen—historically, for a long time now—to reclaim that word. And so they say, “Okay, we can say dyke but you can’t use it against us.” And that’s fair. We have a lot of terms like this in the US and I’m sure that in other languages this kind of thing happens as well.

And so what was happening on Facebook is that they were basically systematically censoring this term regardless of the context in which it was used. And what that meant was that people’s expression was limited. People who wanted to use this term in a reclaimed way, in an empowered way, such as this particular comment where she was saying that “People need to quit rewriting history. Dykes do things. #visibilitymatters,” this is a positive statement. But she was nevertheless temporarily kicked off the platform for using just that word. We don’t know if this was algorithmic or human content moderation.

Another example of this has come up a few times in different contexts. But basically this headline is from a writer called Ijeoma Oluo. And she wrote about a particular experience where she had received a lot of racist harassment in a restaurant. And she wrote about it on Facebook, and Facebook censored her post because it contained certain terms. Because the way that she used words—somebody had called her the n‑word—she’d used that word, and Facebook saw that word and censored it.

And the reason that this happens is something that I’ve been studying for a long time. Basically when you’re on a social media platform, you’re encouraged to snitch or to report on your friends and other users. You’ve maybe seen this before. Facebook says, “Okay, you don’t like this comment? Report it.” And you can say okay, this is hate speech, or I don’t like this comment, or whatever.

But what happens is that that post or that content then goes into a queue, where either a human content moderator or an algorithm determines whether it’s acceptable or not acceptable speech, and then it’s taken down and perhaps there’s also some kind of punitive damage, punishment, meted out to that individual. And in this case she was temporarily suspended from the platform and her posts were deleted.

This is not the only case like this. In fact it’s not the only famous case like this. And in fact it’s not the only famous case like this that happened in the same week. The Washington Post and The New York Times both reported on different circumstances that had happened that same week, all involving black mothers.

And here’s another one. And this has been happening on Twitter pretty consistently recently. This particular case, @meakoopa was a—is; they got their account back, luckily—an LGBTQ academic who had been tweeting about antifa, tweeting against white supremacy, and possibly—I don’t remember this particular case—but I’m going to generalize this because there were a number of cases that week. There were a lot of people tweeting about punching Nazis. And those people were all systematically censored from Twitter. Because that was deemed to be hate speech. When you want to stand up against a violent fascist ideology, apparently according to this company you too are violent and you too must be censored.

And so this is what scares me about censorship, is the fact that it has never in the history of our lives been applied evenly, in a way that does not have a negative trickle-down effect on other individuals and particularly on marginalized groups.

Germany, in a First, Shuts Down Left-Wing Extremist Website, The New York Times

And then of course, we have in Germany just a couple weeks ago for the first time—at least according to The New York Times. I’ve heard that this is now being contested. But Germany used their anti-hate speech laws to shut down a left-wing “extremist” website.

And so we see that once these rules are put in place they can be used against anyone, not just against the people whose ideology we vehemently oppose, but also against the rest of us. And I’ve been censored by these platforms, too. Not particularly in this case, but nevertheless it happens to so many people.

Image: Moises Saman/Magnum, from The Laborers Who Keep Dick Pics and Beheadings Out of Your Facebook Feed, Wired

And then of course I want to bring this up, too, because I think that this is a really interesting perspective that I’ve only been recently introduced to over the past couple of years. There’s a researcher called Sarah T. Roberts; she spoke at re:publica in Berlin this year. And her work has been looking at the labor of content moderation. She’s particularly interested in this as a labor practice and the way the content moderators are affected by the kind of material that they have to look at.

So, what I mean is that you’ve got people, individuals, humans, who are working in outsourced companies in places like India and the Philippines and a number of different countries over the world. They’re also in Germany and other Western democracies. And these are the people who are hired to look at the things that you don’t want to see. So when we say we don’t want to see hate speech on these platforms, we forget about the invisible labor that goes on behind that. The people who have to look at every beheading post, every child sexual abuse image, every pornographic image, every bit of white supremacist content. Just because we don’t see it doesn’t mean that there aren’t a bunch of laborers working quietly, silently, looking at this content all day long and making determinations about it.

This was just an article that I found really interesting. But this is one of the problems of these companies, is that they’re undemocratic. These are companies where—you know, they’re corporations—where individuals are making decisions at the highest level without any input from the public and deciding what is offensive and what’s not offensive.

And so on this particular case I’m not going to explain “camel toe.” If you don’t know it, please just Google it. Don’t make me say this on camera. But nevertheless, what this article is particularly about is that Facebook’s implementation guidelines were leaked a few years ago. And they’ve actually been leaked more recently, too, if you saw The Guardian’s Facebook Files. And in this case, what the leaked guidelines showed was that “camel toes” (and again, Google it please) are more offensive according to those guidelines than “crushed heads.”

We’ve seen other cases— ProPublica, a wonderful nonprofit media organization that reports on this, also found that “white men” are a protected category on Facebook, moreso than black children. I’m sorry that I don’t have that particular slide in here but I recommend looking for that article. It was really interesting because it contained different slides that demonstrated how Facebook particularly puts together who is protected and who is not protected. And in that case, because one group is… It’s complicated. Again, I encourage you to read the article. But in that case they found basically that the implementation guidelines for these workers were to protect white men over other groups.

And so if we agree… And you don’t have to agree. I understand that some people are in favor of censorship, and to those people I would say please, I implore you to at the very least consider the fact that there is no due process and there is no transparency in these corporations. And please, if you are in fact in favor of censoring hate speech, at the very least ensure that you are fighting for due process, because of all of these individuals who are censored and shouldn’t be.

But, if like me you don’t think that censorship can be implemented fairly, then we have to start thinking about what else we can do about hateful speech. And this is where I fear that I don’t have all the answers. And I think that this is something that I would love to continue the conversation with all of you over the next couple of days.

So what can we do about hateful speech? I think the first thing is counterspeech. And I know that that is not a good enough solution for a lot of people. I’ve said this many times but a lot of people push back and say, “Yes, but counterspeech, not everyone can do that.” It takes privilege to be able to stand up in the face of a white supremacist and say this or that. And so we need to build communities together, to protect each other to fight back against hate speech.

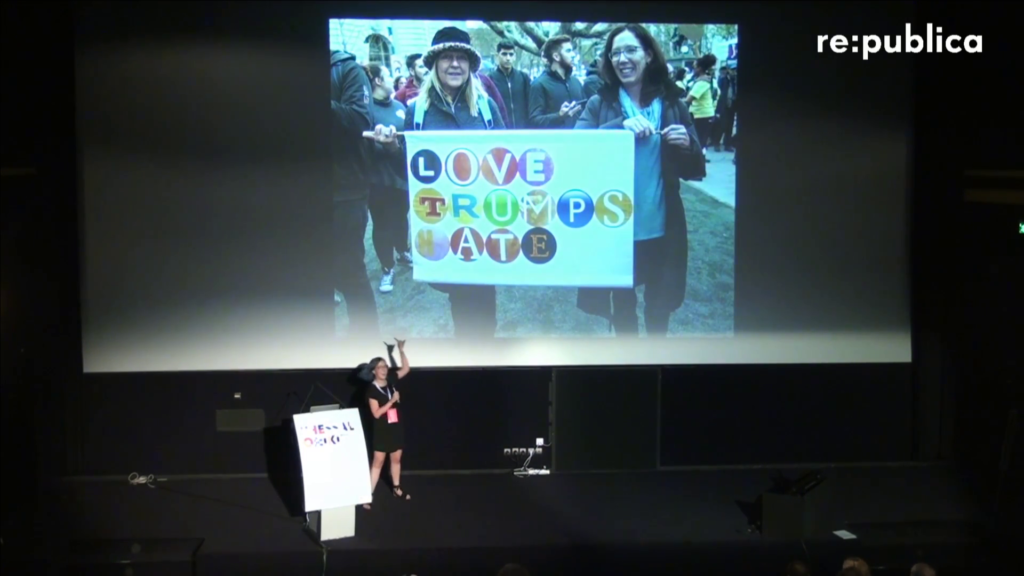

And the first thing there in building community is that we need to think about love. And I know that that sounds crazy, and I know that it sounds insufficient. But at the same time you know, I gave this the title for a reason, “Loving Out Loud in a Time of Hate Speech.” I spend a lot of my day on the Internet, and I spend a lot of time on Twitter, and I’ve seen my own heart rate rise. I’ve seen hate brewing up inside of myself. And I’ve been angry, so angry, over the past year—particularly since November when my country elected an idiot. Not just an idiot but probably a fascist.

And so, I think that this is where we have to consider love. We have to think about what’s happening inside our own heads—the anger that we’re all building toward. We have to think about our communities and how we can band together to fight back against hate speech, and to educate the rest of the people around us, and particularly the people younger than us, about what this means. And I don’t just mean educate people to speak back but educate people about the histories that led to this point.

Like I said at the very beginning of my talk, a young boy in my home state was lynched this week. Now, I’m from New Hampshire in the United States. Many of you probably don’t know it. That’s okay, it’s not very notable for a number of reasons. But, it’s 97% white, and I’m not surprised that these young boys didn’t know what the history that came before them was, because we weren’t taught in school. I barely learned about the Holocaust let alone the history of white supremacy in my own country. And so I encourage you if you’re from a place like I’m from… I know many of you are probably from cities and for that maybe you’re lucky. But nevertheless it’s important to educate the people around us.

And again, I hate that word in the middle. It’s hard for me to even say it. But love does stand up against hate and we have to remember that in every single one of our interactions.

And so, I know that that’s not the entire solution to hate speech. I know that there’s a lot more that we need to talk about. But I want you to consider the effect that censorship has when we apply it to hate speech, and what it means when we allow these undemocratic companies to make these decisions for us without our input. And so keep calm, and love not hate. Thank you very much and I’m happy to talk about this more with any of you over the next couple days. Thanks.