In the next ten years we will see data-driven technologies reconfigure systems in many different sectors, from autonomous vehicles and personalized learning to predictive policing and precision medicine. While these advances will create phenomenal new opportunities, they will also create new challenges, and new worries, and it behooves us to start grappling with these issues now so that we can build healthy sociotechnical systems.

The premise of our project is really that we are surrounded by machines that are reading what we write, and judging us based on whatever they think we’re saying.

Positionality is the specific position or perspective that an individual takes given their past experiences and their knowledge. Their worldview is shaped by positionality. It’s a unique but partial view of the world. And when we’re designing machines we’re embedding positionality into those machines with all of the choices we’re making about what counts and what doesn’t count.

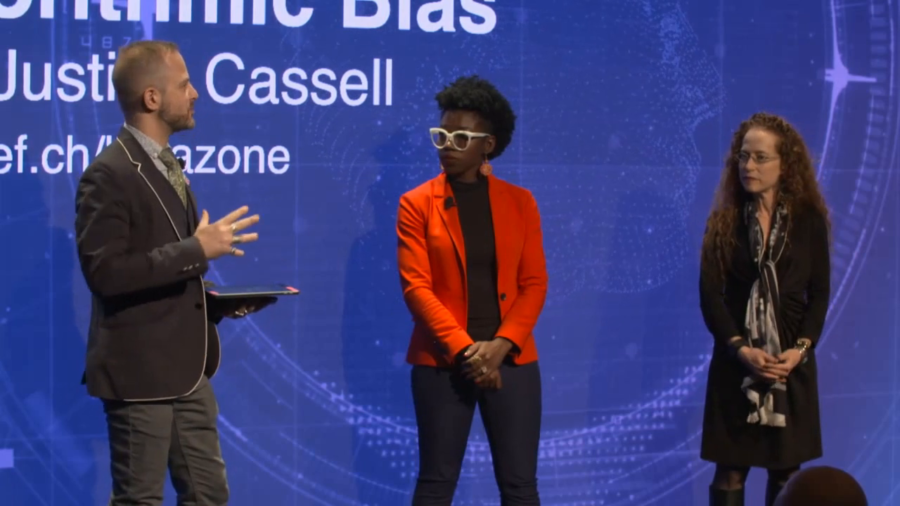

AI Blindspot is a discovery process for spotting unconscious biases and structural inequalities in AI systems.

I think the question I’m trying to formulate is, how in this world of increasing optimization where the algorithms will be accurate… They’ll increasingly be accurate. But their application could lead to discrimination. How do we stop that?

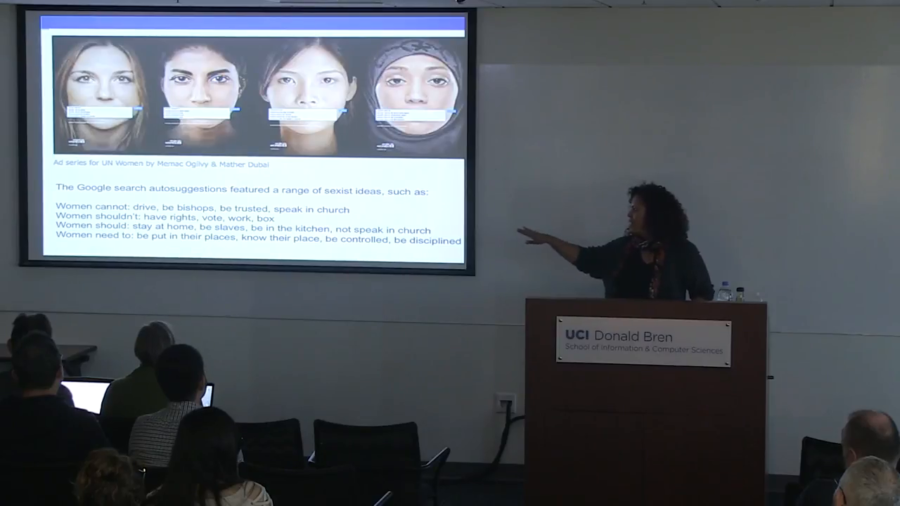

All they have to do is write to journalists and ask questions. And what they do is they ask a journalist a question and be like, “What’s going on with this thing?” And journalists, under pressure to find stories to report, go looking around. They immediately search something in Google. And that becomes the tool of exploitation.

One of the things that I think is really important is that we’re paying attention to how we might be able to recuperate and recover from these kinds of practices. So rather than thinking of this as just a temporary kind of glitch, in fact I’m going to show you several of these glitches and maybe we might see a pattern.

The question is what are we doing in the industry, or what is the machine learning research community doing, to combat instances of algorithmic bias? So I think there is a certain amount of good news, and it’s the good news that I wanted to focus on in my talk today.

Quite often when we’re asking these difficult questions we’re asking about questions where we might not even know how to ask where the line is. But in other cases, when researchers work to advance public knowledge, even on uncontroversial topics, we can still find ourselves forbidden from doing the research or disseminating the research.