Introducer: Welcome everybody for the next talk, “When Algorithms Fail in Our Personal Lives.” It is a one hour talk. Our lovely speaker with us today is Caroline Sinders. She’s a user researcher for IBM. She’s also an artist, a researcher, a video game designer. She’s from the States, and I should also mention that she is a member of the NYC Resistor hacker space. And I see some fans over here. Cool.

We already learned in a bunch of talks over the course of Congress what algorithms do when they fail. Yesterday we learned about how algorithms can discriminate, or not discriminate in the hiring process, and Caroline is going to tell us a little more about when it’s better not to use algorithms because there are some things that algorithms just can’t do, that humans can do.

So please give it up for Caroline and enjoy the talk. Thank you very much.

Caroline Sinders: Hi, everyone. I’m Caroline Sinders. I should probably first specify that I am speaking here of my own accord and not on behalf of IBM. So just FYI. And that this is actually a presentation also on a very strange and specific art project I did back in late November. So, when algorithms fail in our personal lives. This is probably the best way to describe me because I live on the Internet.

I’ve spent the last two years studying online fandoms, communities, Internet culture, and online harassment. And this is what I do for fun outside of work. I think a lot about language and conversation as identifiers, and I spend a lot of time reading the way in which conversations unfold on different subreddits on Reddit, 4chan, 8chan, Wikipedia, the way Wikipedia is used as a conversational tool not just to upload information, and obviously Facebook, Twitter, LinkedIn, and Instagram.

And the one thing I’ve sort of learned from all this is that each of these different platforms have a very different identity and they have a very different way in which conversation sort of has evolved linguistically to that platform. The way we talk on Reddit is a lot different than the way we talk on Twitter. And I think that that is due to the infrastructural design of the platform itself, as well as the ways in which the platform identifies itself to users, so like a code of conduct.

Two years ago, actually like a year and a half ago, I really started focusing on online harassment. I specifically focused on Gamergate. As a video game designer, I saw Gamergate kind of affecting the community around me. I’m not driven to study harassment by why it happens, but rather how. How does harassment unfold on different sorts of platforms, and how do platforms allow for different kinds of communication? And really how open is the general user on a platform? How connected are they to the privacy policies? And how aware are they of how exposed they are, and how permeable their data and information is?

So TLDR, I explore complex emotions and emotional reactions within systems. And I’m going to briefly cover some of the anti-harassment research I’ve done.

So sometimes I write things, the Internet doesn’t like them. Last April, the Internet sent a SWAT team to my mom’s house. If you don’t know what swatting is, it’s a very popular online harassment tactic that manifests itself “IRL.” Oftentimes a fake violent phone call is placed to a local police department. That violent phone call triggers the militarized police to be deployed, and this is what happened to my mom.

Sometimes panels I’m on get cancelled because maybe they’re kind of controversial. I submitted a design panel to SXSW and it was cancelled due to harassment and threats of violence.

So I guess my work seems kind of contentious. I think that it’s pretty straightforward. It’s generally design. I don’t know why anyone would have a really massive opinion around it. But the thing I sort of want to talk about, actually, and what I care about exploring, is how do systems affect behavior? I said earlier I’m here not as an IBM representative, but I spend most of my day job working on Watson, working in artificial intelligence as a user researcher around conversational analytics for chat robots. That’s kind of a mouthful.

What I mean by that is I spend a lot of time working with software that allows users to set up chat robots. So I think a lot about the ways in which I’m designing software to help people design conversations that robots have with people. So when I say I believe that systems affect behavior, I live that every day, and I think about the ways in which the structure of an interface actually will lead people to converse and what that would look like.

In the past two years of doing I guess broad heuristic ethnographic research, what I’ve come to realize is users have a myriad of different problems that can be solved in similar ways, but yield radically different results. Meaning that we can sort of start approaching ways to solve problems of harassment through either new kinds of algorithms or a really flexible UI on top of an algorithm, ie. what if we gave people more robust privacy settings and allowed users to start to articulate the ways in which they’re reachable and how their data, really their conversations, are read?

And I think this because algorithms really aren’t that smart, and language within an algorithm is decontextualized into data. We as users provide context to language. Language is what we make it. But in a system it’s not simply just bits of data. So I was driven by this thought: How could I make a flexible system to solve a variety of different problems, for a variety of different people?

So I did what I do best, and I made a low-res wireframe. I create these speculative wireframes to sort of focus myself on what I thought could be achievable, and then I decided to test it against twenty different users I had interviewed that had been affected by Gamergate, as well as a handful of Gamergaters themselves who would interact with me on Twitter and I would ask them questions about the ways in which they organized themselves, the ways in which they talked to other people, what conversations they were hoping to get out of interacting with me on Twitter.

What I started to learn is that we need to focus on privacy in social media. It needs to be as prevalent and as important as writing content itself. Do you see that gray box at the top? That is a placeholder for a button that sends you to a redesigned privacy page.

From interviewing these twenty users, I got a really robust sense of different kinds of needs and wants users wanted out of Twitter. I interviewed people that had over 100,000 followers that absolutely want to remain completely public. And they want to be reachable at all times. I interviewed some users that had 3,000 followers, that wanted to be completely hidden but still have their tweets treated as media, thus shareable. And I interviewed some users that wanted to not go private (which is a very public statement on Twitter, to have the lock next to you) but wanted to have all the affordances of privacy.

What I’ve added as you can see is these checkmarks to allow a user to start to change the way their written content can be accessed and so really actually change the way in which the amount of users on Twitter could start to read content they’re posting.

One of them is “allow followers of your followers to tweet at you,” so the idea of friends-of-friends. “Do not allow accounts with less than X followers to follow you” or “don’t allow accounts less than X days,” meaning new accounts are often created in moments of harassment campaigns. So if an account was a week old with two followers, that’s probably a troll account. Additionally it also allows users to say, “if you’re not on my level you can’t tweet at me.” Not judging; that’s interaction someone wanted.
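To make these settings concrete, here is a minimal Python sketch of how such reachability checks could be evaluated. It is an illustration only: the `Account` and `ReachSettings` types, their field names, and the `can_mention` function are all hypothetical and do not correspond to any real Twitter setting or API.

```python
from dataclasses import dataclass, field
from datetime import date


@dataclass
class Account:
    handle: str
    follower_count: int
    created: date
    following: set = field(default_factory=set)  # handles this account follows


@dataclass
class ReachSettings:
    # Hypothetical settings mirroring the checkboxes in the wireframe.
    friends_of_friends_only: bool = False
    min_followers: int = 0
    min_account_age_days: int = 0


def can_mention(sender, recipient_handle, recipient_followers, settings, today):
    """Return True if `sender` may tweet at the recipient under `settings`.

    `recipient_followers` is the set of handles that follow the recipient.
    """
    if sender.follower_count < settings.min_followers:
        return False
    if (today - sender.created).days < settings.min_account_age_days:
        return False
    if settings.friends_of_friends_only:
        follows_recipient = recipient_handle in sender.following
        follows_a_follower = bool(sender.following & recipient_followers)
        return follows_recipient or follows_a_follower
    return True


# Example: a week-old account with two followers is filtered out,
# while an established follower-of-a-follower gets through.
today = date(2015, 12, 28)
settings = ReachSettings(friends_of_friends_only=True,
                         min_followers=10,
                         min_account_age_days=30)
my_followers = {"jane"}
new_account = Account("new_account", 2, date(2015, 12, 21), {"jane"})
friend_of_friend = Account("friend_of_friend", 150, date(2012, 1, 1), {"jane"})
stranger = Account("stranger", 500, date(2010, 1, 1), set())
```

The checks only need top-level information (follower counts, account age, and who follows whom), which matches the idea of tailoring reachability without reading the content of tweets.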

And then I started to think more about what does it mean to exist publicly as a person on a platform? Twitter is sort of this mixed identity and mixed emotional state. It’s both professional and personal. It’s used as a networking tool as well as a social aid and a communicative tool. So people either have really persistent aliases or avatars that have followed them from platform to platform but they’re not using their real name. There’s levels of pseudo-anonymity on Twitter. In my case I use my real name and I can’t really undo that. So my needs in using Twitter, especially as a technologist, exist in a much different way than, per se, someone who uses it as a casual medium. And we have very different needs.

But through these different kinds of dials, I feel that this serves my needs as well as all of the users I’ve interviewed because we’re able to start to tailor through UI, and be able to pull from very top-levels of information, just mainly around followers, as to how accessible I am.

I added other things such as blocked accounts and blocked tweets. Right now you can only see blocked accounts. You can’t see blocked tweets. What if you could? And I pulled from this because in moments of harassment, even if it’s a sustained stalker, there’s often a tweet that will trigger it. It’s never going to someone’s account, at least within a harassment campaign, you’re not really going to someone’s account and saying, “Today I’m blocking you.” There is often an interaction, a tweet, that will trigger that response. So what if you could see that, start to group them together, and maybe send a report to Twitter or to yourself. The user can start to contextualize “this is a way in which these tweets are linked.” So if there’s a mob harassment campaign, a user could say, “I think all these are linked.” And if Twitter’s implementing any kind of machine learning or natural language processing they’d be able to start batching multiple reports at once and see how they’re all related.

Again, what if you could group mentions together?

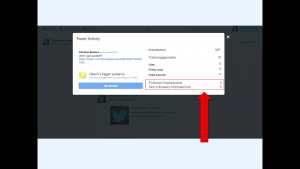

And I added this last night. One thing that I noticed from a lot of my research is that users don’t really have an understanding as to how their language is actually data and how accessible their things are.

I’m sure you’ve heard a variety of stories around tweets going viral. Someone tweets something and then months later it’s dug up. Or they tweet something… In the case of this really well-known incident this woman tweeted a really off-color joke about AIDS, got on a plane, twelve hours later this tweet had completely exploded. The background of that story is that this woman only had a hundred followers and had never had her tweets interacted with very much at all. Not in the sense of with strangers. So for her this was a complete moment of the system kind of breaking. And I wonder if there are ways to start to articulate to users how accessible you are. Even if you feel small, even if you feel like no one is interacting with your tweets, you’re still actually completely open. And the information you send out into the system is media that can be isolated and shared quickly, and that’s sort of the way in which Twitter functions.

So I wondered what if you could just break something down really simply, and just sort of say follower impressions and non-follower impressions to sort of get an idea as to who’s interacting with your tweet, and who outside of your decentralized social circle on Twitter.
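The follower versus non-follower breakdown described above can be sketched in a few lines of Python. This assumes the platform can supply a list of handles that viewed a tweet and the set of handles that follow the author; the function name `split_impressions` and both inputs are hypothetical.

```python
def split_impressions(viewer_handles, follower_handles):
    """Count impressions from followers and from everyone else.

    `viewer_handles` is a list with one entry per impression, so a handle
    that viewed the tweet twice appears twice.
    """
    followers = set(follower_handles)
    from_followers = sum(1 for viewer in viewer_handles if viewer in followers)
    return {
        "follower": from_followers,
        "non_follower": len(viewer_handles) - from_followers,
    }


# Example: four impressions, two of them from the one follower "a".
example = split_impressions(["a", "b", "c", "a"], {"a"})
```

Even a two-number summary like this would show an account with a hundred followers when most of its impressions are coming from strangers.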

And then I started looking at Facebook. Additionally, I did another round of interviews, specifically for this project I’m getting into, Social Media Breakup Coordinator, where users actually had no idea what the privacy checkup meant. I think this is a great addition. You add a button. You can say “only friends can see this.” But what if Twitter added a popup and then said, “Great, this content right here, this comment. If your friend Jane comments on it, her mom can see it.” It started to really show how extended networks that you’re unconnected to, 2nd and 3rd-party relationships, can actually interact with your information.

I’m really driven by this need and this idea as a designer, what would it look like to have a semi-private space in a public network, and how could I design that? I think about this a lot because our communication on the Internet is asynchronous, right? But a lot of social media creates things as a timeline. This creates a false idea as to how information is actually accessed, and how data is actually stored. And that false information is articulated to users. So what feels like safer spaces, even if you’re completely public because you’re not interacted with, is a lie. It’s a false sense of information. It’s a false sense of safety.

So I wonder with all these varying levels of needs that we have as users, and as we live more and more of our lives digitally and on social media, what would it look like to design a semi-private space in a public network?

The past two years have really hit this on home that there’s this nebulousness surrounding algorithms and social media and the way in which our data is saved. And a lot of that happens when Facebook for instance changed their timeline to be algorithmically driven based off content. Then I think it was last summer, or two summers ago, there was this thing called the Ice Bucket Challenge, and these riots in Ferguson, Missouri. And what happened [was] people realized that Ice Bucket Challenge posts were being weighted above these other protests. And the way to work around that was to include “Ice Bucket Challenge” when you were posting about Ferguson to start to flip and change what you were seeing algorithmically in your timeline. So there’s this idea that users don’t quite know what and why the algorithm will weight things over other things. So when you post something on Facebook, the feedback is, “I have no idea when it’s accessible, how it’s accessible, and if it will be accessed.”

So that let me do this project that I created. I created a fake performance art piece—I mean, it’s a real art piece—called Social Media Breakup Coordinator, where I turned a video game art gallery in New York called Babycastles into a doctor’s office. And I held fifteen-minute therapy/consulting sessions on social media.

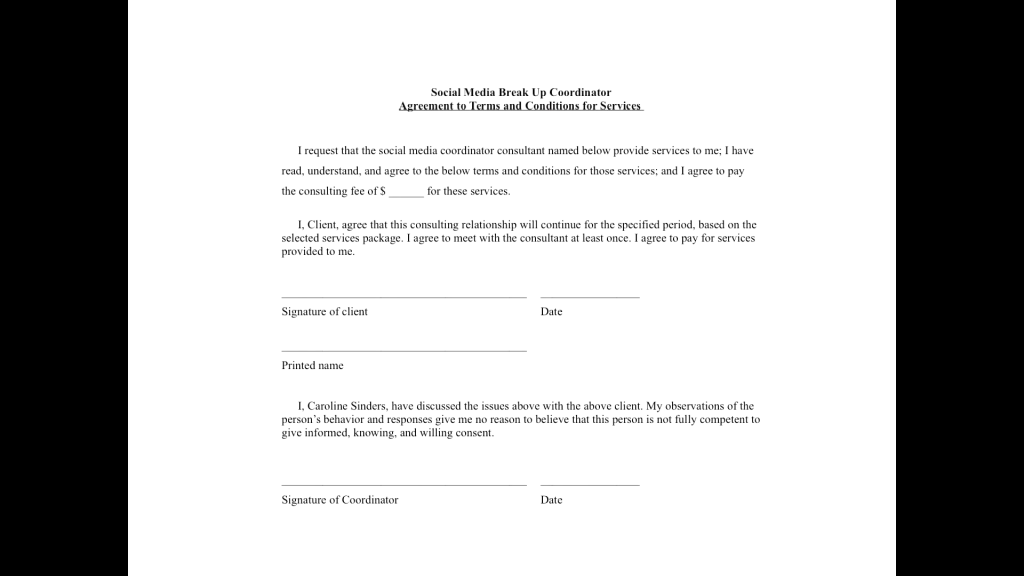

I had users fill out a twenty-two point very standard user quiz around why they were showing up. But then when they sat with me, I had them sign a terms of service agreement, I listened to them, and then I started to write down notes. But before I started this project, I reached out to a variety of different people, because from my research I had sort of started to realize that there’s a lot of different moments where there needs to be human intervention within algorithms within social media.

So how do you start to pull away from different groups that you’ve been associated with? How do you start to cut ties? And how do you start to cut ties between information when you cross-post against different platforms?

A good example of that is what happens if someone in your family dies and that ends up in Facebook memories because you Instagrammed it? What does that feel like, to have that emotional trigger? What does it feel like to quit a job and not be sure if your new coworkers can see your old coworkers? Or if you post something negative about your old job are you still connected to your boss and what can they see? And generally there is this lack of understanding that I found that most general users (probably not most people in this room) have a lack of understanding around how much their information is accessed.

So I was curious on a bunch of levels if people would actually pay me to give them advice. If they would trust me as a professional. And if they would actually engage with my services. And then I was curious if I could actually then covertly teach them the privacy policies of all the different platforms they were on.

When I started this project I realized I needed to talk to a variety of different professionals. I’m a user researcher, so my profession lies in talking to users and designing solutions for them. But as social media starts to overtake more and more aspects of our lives, I realized that there were certain things that I’m not equipped to handle. So what happens if someone has suffered trauma on social media? As a victim of harassment, I still can’t offer anyone feedback on that, and that’s sort of not my place.

So I spoke to a rape crisis counselor, an engineer, a data scientist and professor, and a private therapist, and a private psychiatrist. The takeaway I got was mainly this, and this is something I’d love to impart on most social media engineers and designers: It’s not my job to necessarily tell people what to do, it’s my job to listen to what people need to get done.

An example from that is, let’s say a user came to me for Social Media Breakup Coordinator and said, “I have an abusive boyfriend and he’s horrible and we have a child together and I want to un-Facebook friend him.” It’s not my place necessarily to say, “Okay, wait. Can I know more? Are you close with his family? Let’s start to cut down all these ties.” The reason I would ask that is, thinking as a designer if you’re Facebook friends with someone, and then you’re Facebook friends with their parents, and then it says on that person’s profile who their family is, the system has created more ties to that person, even regardless of if you unfriend them. You would need to block them as well as unfollow all of these other people related to them and tied to their profile to actually really separate.
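The point about leftover ties can be illustrated with a small Python sketch. It assumes a simplified model in which each profile lists its family members and you have a plain set of your own friends; the function `remaining_ties` and the sample names are hypothetical and say nothing about how Facebook actually stores relationships.

```python
def remaining_ties(my_friends, target, family_of):
    """Return friends of mine who are listed as family on `target`'s profile.

    These are the connections that still link me to `target` after
    unfriending `target` alone.
    """
    still_friends = set(my_friends) - {target}
    listed_family = set(family_of.get(target, ()))
    return sorted(still_friends & listed_family)


# Example: unfriending "sam" alone leaves two indirect ties in place.
my_friends = {"sam", "sam_mom", "sam_brother", "alex"}
family_of = {"sam": ["sam_mom", "sam_brother", "sam_cousin"]}
ties = remaining_ties(my_friends, "sam", family_of)
```

Under this model, fully separating means handling every account in the returned list as well as the original one.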

A lot of the feedback I got was it’s not necessarily your job to tell a user all of that if they’re telling you what exactly they need. You sort of need to listen and guide from there and not really get into, “Well why are you here? What are all these different really specific and highly personal details?”

So why would I do this?

I was just very interested in the ways in which people live their lives online, and I really wanted to see if I could also gather a lot of data from this project. I had sixteen people fill out twenty-two different questions and meet with me and walk through all their different problems. And I was really curious if I could provide solutions the way an algorithm would. I outlined ten different solutions that I could affix to people based off different [questions] that they answered in a certain order.

And again the covert point of this project was to sort of teach people about the permeability of their posts and really how privacy is looked at and interacted with on social networks. And with the onset of all these different apps, particularly in America, that are offering to outsource emotional labor to a person, meaning there’s all these new apps that’ve been created of, “We’ll break up with your boyfriend for you,” I was really sort of curious to see if people would actually engage with me face to face.

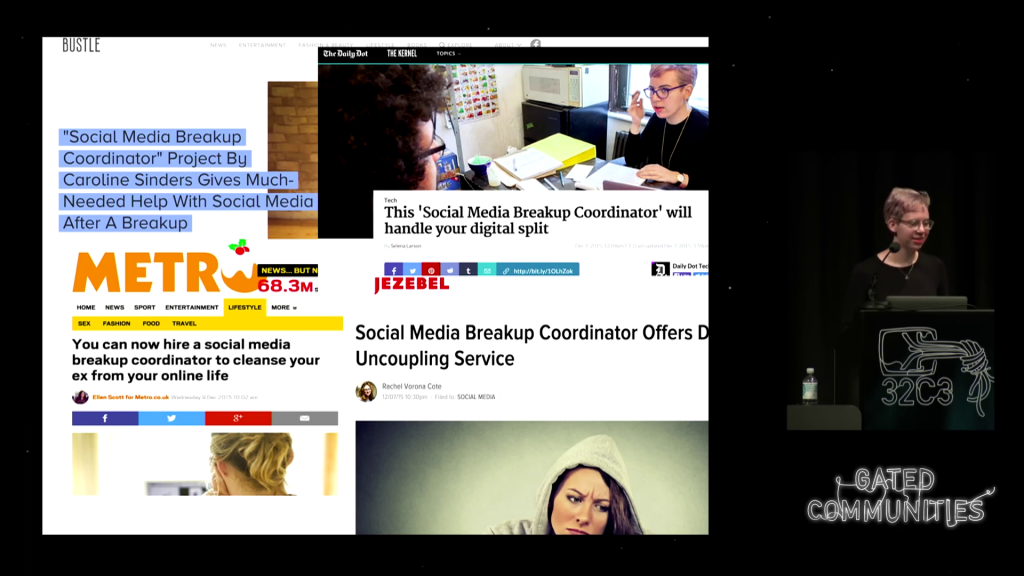

So, when I launched the project people thought it was real. And then the media thought it was real. And it was really hard to explain to, for instance Jezebel, that this was an art project. Because they were like, “But you’re charging people… And you made them sign a contract… Is the contract legally binding?” Yes, it is. “So you charge them money?” I did. “Did you give them fake feedback?” No, the feedback was all sincere. I really legitimately tried to help solve these problems. “But it’s an art project?”

The reason that it’s an art project is to me it’s a massive comment on the sharing economy that’s in America, and just this idea that I could be an emotional Mechanical Turk. And I completely made that by design and intention. Should people be trusting me with their data? Yes, because I am a professional, and I made sure to very very clearly articulate the ways in which I would use their data, how they would be protected, and that I would not share any personal information about them. I went through all of those steps, but is that sort of the negotiation we have with social networks? Do we have that kind of interfacing?

And an even bigger comment was no one ever commented on price. I charged $1 a minute to sit and listen all day to people. I only gave them fifteen-minute blocks. It was actually incredibly taxing, physically. It was an all-day event where I think I only gave myself fifteen minutes for lunch. I definitely have a whole new type of respect for therapists. That was grueling.

So before I started the project, I started to break down what platforms I would cover. These are examples of my Post-It Notes. I had covered Resistor in one evening. And I started to break things down based off the four major platforms that are used in America, which is LinkedIn, Facebook, Twitter, and Instagram. I started to break down by what I thought were the four most broad, most universal, social groupings. So friends, family, work, and romantic. Then I started to think about why your romantic partner would friend you on LinkedIn, for example. Or why they would follow you on Instagram. Or why your boss would friend you on all of those platforms.

And I started to attach different emotional responses. So should you LinkedIn connect with your Dad? Maybe? Should you LinkedIn connect with your lover? If you want to… But you don’t have to. But then these are connections…if they’re different party apps that you don’t really use…that you then have to break down later if those relationships sour.

So remember when I said that everyone thought that this project was real? It’s because I went to really great pains to also make it look real. When people showed up, we had a receptionist who had coffee. There was a waiting room, and I had people sign in with the date they arrived, the reason, and the time of their appointment. There was then a paper version of the quiz if someone walked in. Sometimes you get walk-ins. The doctor gets that all the time.

This is me working. This is what my desk looked like. Everyone got their own folder that I would write their name on. I would write out what I called a receipt. It’s all the advice I’m giving them and I’m taking my own notes. We both got a copy of the terms of service agreement. And then I would send them on their way.

So it looked actually fairly… It looked hacker‑y legit, you know. I mean…a falling-apart building, but I’m giving you legitimate advice and you just paid me $15.

This is our receptionist, Lauren. This is the waiting room. This actually wasn’t posed. I popped my head out and saw a bunch of people sitting and reading. This is me providing advice. And these are some students of mine that showed up. I taught a class on visual storytelling with social media, and they had shown up to observe.

This is also what the breakdown of the terms of service agreement looked like. This is the first page. It’s pretty standard. One of my favorite lines is “My observations of this person’s behavior and responses gives me no reason to believe that this person’s not fully competent to give informed, knowing, and willing consent.”

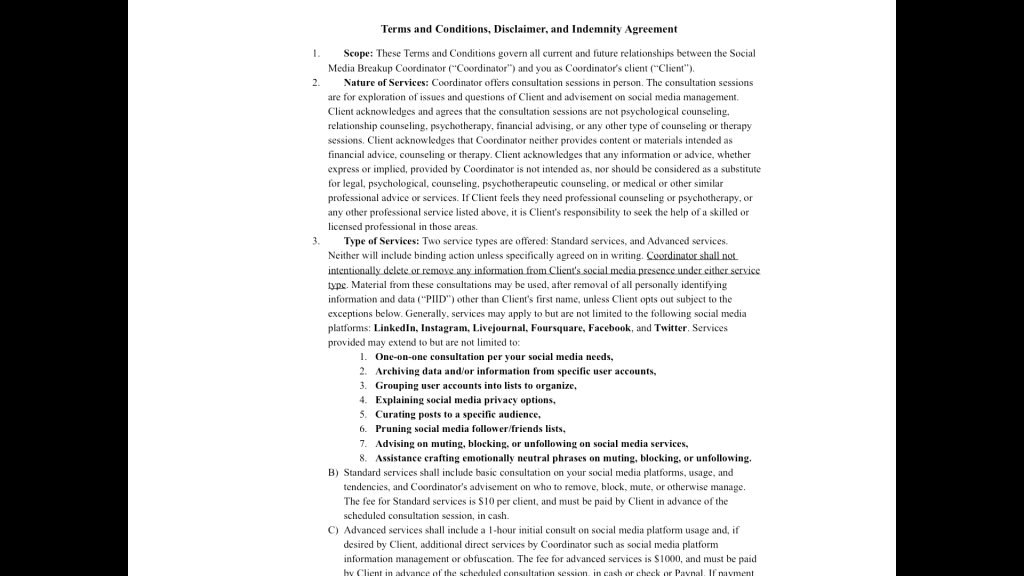

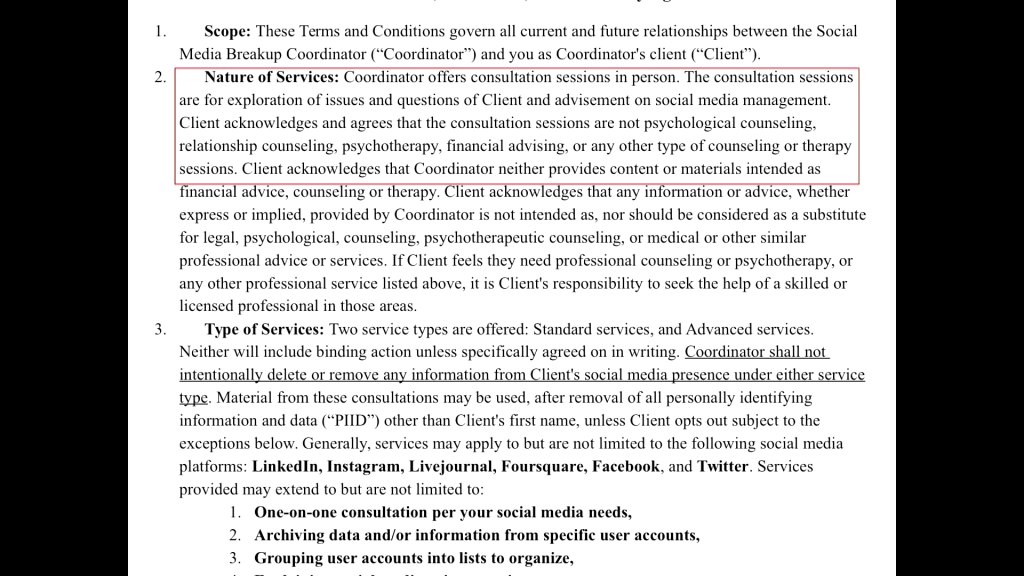

I should also clarify a really dear friend of mine who’s my collaborator, Fred Jennings, works for a law company called Tor Ekeland. They do a lot of digital law cases. He actually drafted this up specifically for me, for the needs of this project. I told him to keep it short, and I told him to bold certain things so users could really see, if they were scanning, what I’m talking about. So as you’ll see, various social media platforms are bolded with what I’m giving.

But then I had him outline the nature of services. And what you’ll see is that the “Client acknowledges the Coordinator provides neither content or materials intended as financial advice, counseling, or therapy.” And I really wanted to highlight I am not a therapist, and this is not a therapy session. If anything, I’m like a really weird SEO advisor that you’ve consulted to maybe talk about your personal life with. But I am definitely not a therapist.

So I had twelve people fill this out online, four people do walk-ins. And what’s fascinating is I only had two people show up and talk to me about heartbreak. This project was not inspired by a breakup. It’s actually about breaking up with social media. I had someone show up and ask me a lot of really specific questions about LinkedIn and her workplace, and what’s the proper way to break up on LinkedIn with your old job. And I was like, “You should just probably unfollow them.” I’m like, “Do you talk to them on Facebook?”

She’s like, “I do.”

I’m like, “Well, don’t do that.”

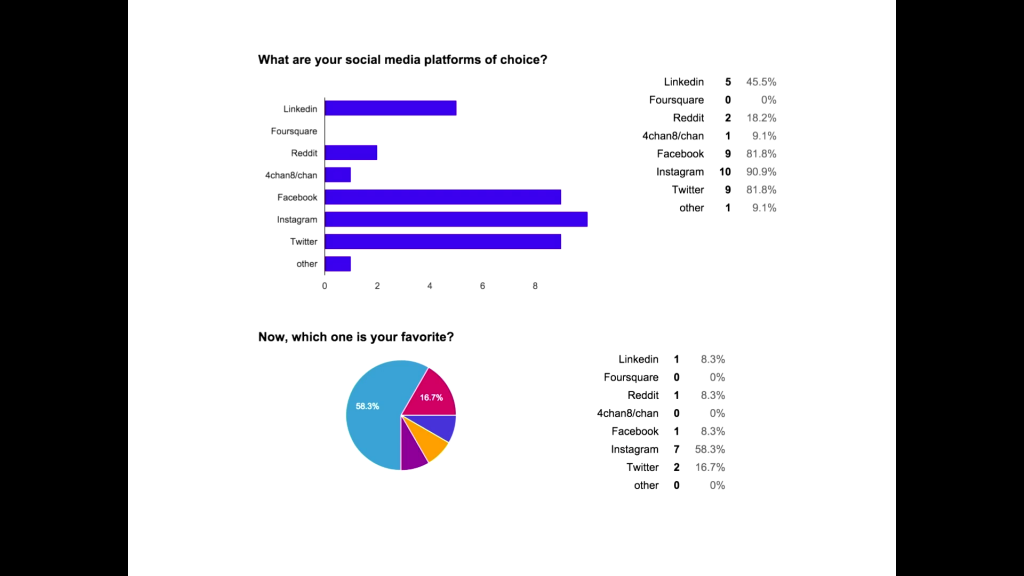

And I started to gather all this really fascinating information, specifically around the ways in which my users were using social media. This is something I’m going to openly share, probably post- this talk, if y’all want to look at what I’m accruing.

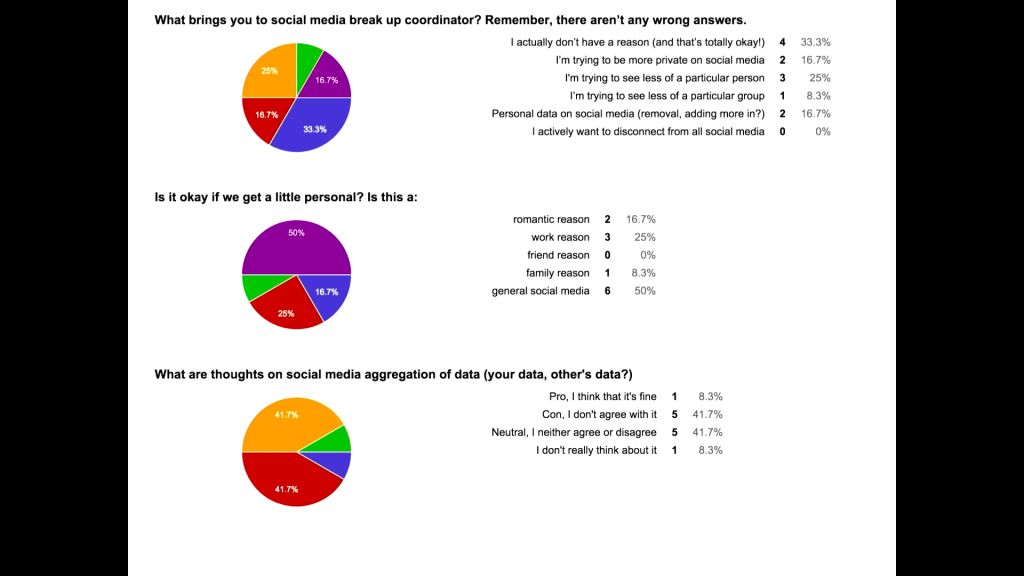

Different things like, let’s get a little personal, “Why are you here?” Romantic reason, work reason, friend, family, general social media? And maybe these questions seem really innocuous, but the way in which I was structuring my personal algorithm I had built, each of these questions triggered a different kind of answer that I could give someone, and I could string answers together. So I could give a combination of Answer A plus Answer D plus Answer J to sort of give someone a highly personalized response to what they’d given me. But this is sort of the way algorithms work. It’s not highly personalized; the combination to the user just feels personalized. And on my end, that was sort of the art project for myself.
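The idea of stringing canned answers together can be shown with a short Python sketch. Everything in it is an assumption made for illustration: the question keys, the trigger values, and the wording of the advice are invented here, and only the labels A, D, and J come from the talk. The actual quiz and its ten solutions are not reproduced.

```python
# Hypothetical canned advice, keyed by the labels used in the talk.
ADVICE = {
    "A": "Run the privacy checkup on Facebook.",
    "D": "Delete the app from your phone.",
    "J": "Unfollow your old workplace on LinkedIn.",
}

# Hypothetical rules: (quiz question, triggering answer, advice label).
# Rules are checked in order, so the order fixes how the response reads.
RULES = [
    ("why_here", "work", "J"),
    ("wants_less_time_online", "yes", "D"),
    ("knows_privacy_checkup", "no", "A"),
]


def build_response(quiz_answers):
    """Return the triggered advice labels and the combined response text."""
    labels = []
    for question, trigger, label in RULES:
        if quiz_answers.get(question) == trigger and label not in labels:
            labels.append(label)
    return labels, " ".join(ADVICE[label] for label in labels)


# Example: two triggered rules produce a two-part "personalized" answer.
labels, text = build_response({"why_here": "work",
                               "knows_privacy_checkup": "no"})
```

With a fixed pool of answers and a fixed set of triggers, the number of possible responses is small, yet each combination can feel tailored to the person receiving it.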

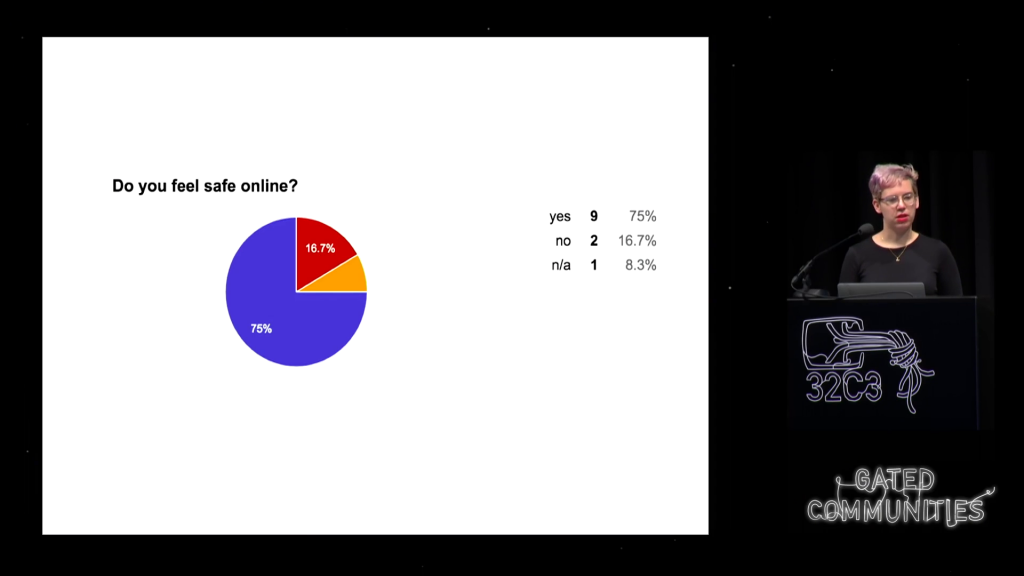

And I asked the general question “Do you feel safe online?” I was slightly surprised only two people said no. But I was more surprised that actually only two people said no. I thought it would be less, and then at times I thought it would be more. Given my research in online harassment, I was prepared for someone to show up and say, “I’m being victimized of harassment,” and I had a whole different answer ready for them.

But just the fact that most people had come with very general problems, I was actually surprised that in general 16% of applicants don’t feel safe online.

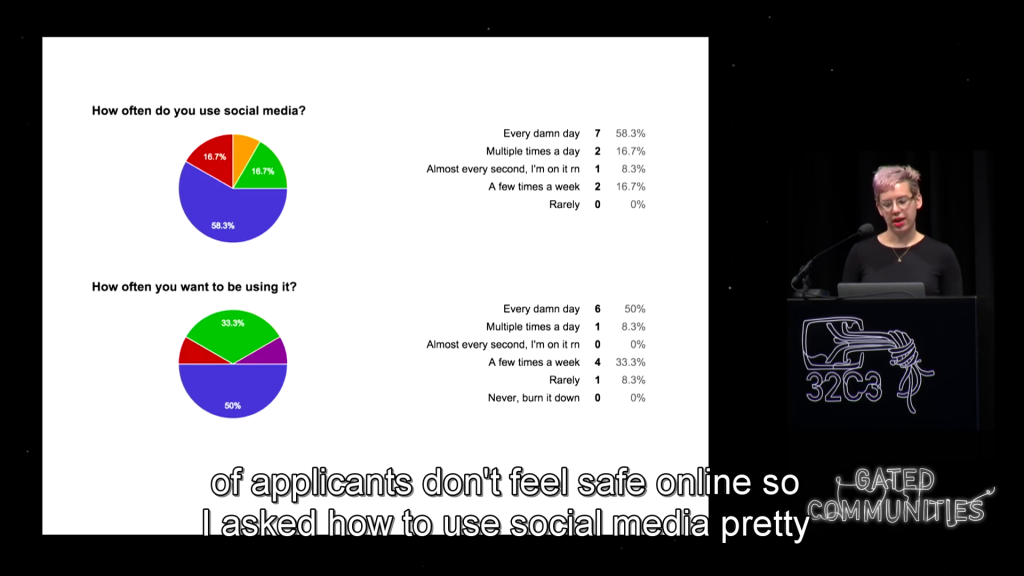

Then I asked how “How often do you use social media?” Pretty much every day. “How often do you want to be using it?” Pretty much every day.

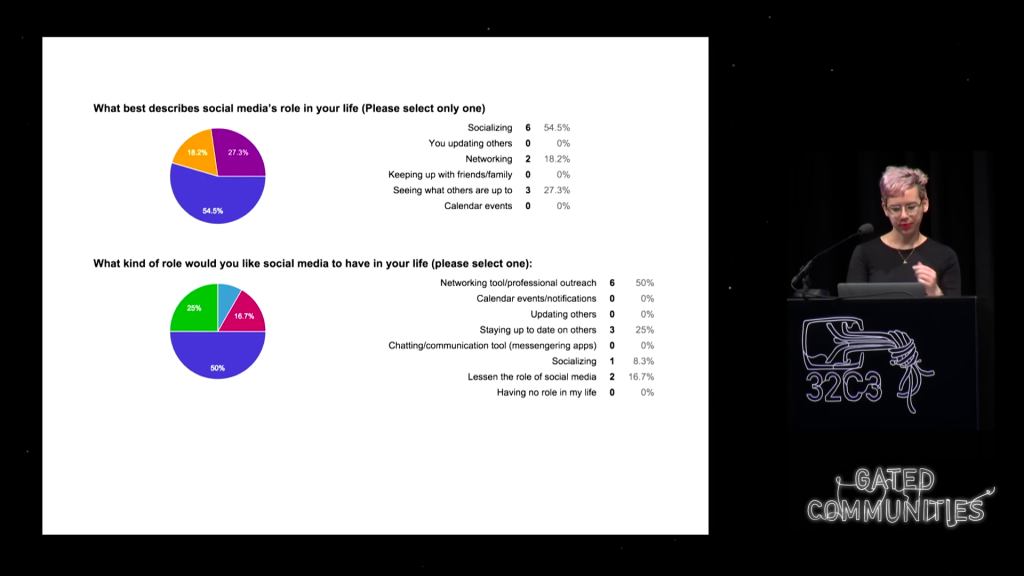

And the one I found the most fascinating was when people described what they used it for. About half of users said they used it for socializing. And when I asked what do you want to use it for, half of users replied with “networking.” So there’s a sort of pull to actually be taken off social media.

And this is what I learned from all of this. A lot of advice I gave people was, “Maybe you should just quit Facebook.”

And that was met with a resounding, “No. How dare you suggest that?”

And I was like, “Okay, great. Let’s pull back. Let me offer something else. Do you have a smartphone?”

“Of course.”

“Delete the app from your phone?”

They’re like, “Oh, that’s brilliant.”

I’m like, “I know, right? Whoa.”

But the one thing I actually found the most fascinating was most people did not understand Facebook’s privacy checkups. Whenever people talked about that they wanted to socialize less and be less accessible, the first thing I always said was, “Well, what is your privacy checkup? Have you done one?”

They’re like, “Oh my god, what’s that?”

I’m like, “We have a problem.”

And the one thing I found super fascinating is that most users didn’t realize—and this is actually hyper-specific to one user that came through—that you are accessible even with very private settings on Facebook, to non-Facebook-friends chat messaging you. And if you respond to that message, that chat is moved into your general stream of chats and it makes your information accessible to that person you have not friended.

So I had a friend who was like, “I want to be super private but the reason I keep my Facebook open is like what if a young game developer’s trying to reach me?”

And I was like, “Did you know that chat does this?”

He was like, “I had no idea.”

And I was like, “Well, that’s terrifying, but you could maybe use it this way if you’re not concerned about harassment but you’re concerned about being reached.” Because he was more concerned that friends could be exposed through his openness, which I was like that’s a very very considerate [?] way to take your Facebook.

One thing I learned is that no one knew anything about Instagram’s privacy policies, nor did they care. They’re like, “Eh, Instagram’s fine. We don’t care.”

Again, most people wanted to use social media as a networking tool.

But the biggest takeaway was every single person that showed up— And I had a variety of people that were incredibly savvy; they were engineers. They actually thought that they did not understand social media as well as they could, and that they needed someone to help them better understand. And they needed someone to help them better understand who they paid $15 to in a hacker video game space.

And I want that to sort of resonate, because that is a joke. But also really think about the fact that these tools are so nebulous that you would go to a space and pay someone $15 that you’ve never met before that says they’re an expert, to just handle this for you.

And that for me was the biggest takeaway. How can we make things feel more accessible, or better yet let’s make a new platform.

I’m putting this up here because this is one of my biggest pet peeves. You would probably never explain how to SMS someone through screenshots. You’d probably say, “Do you see that little thing on your phone, the chat? Open it up. Write in a number. Say something.”

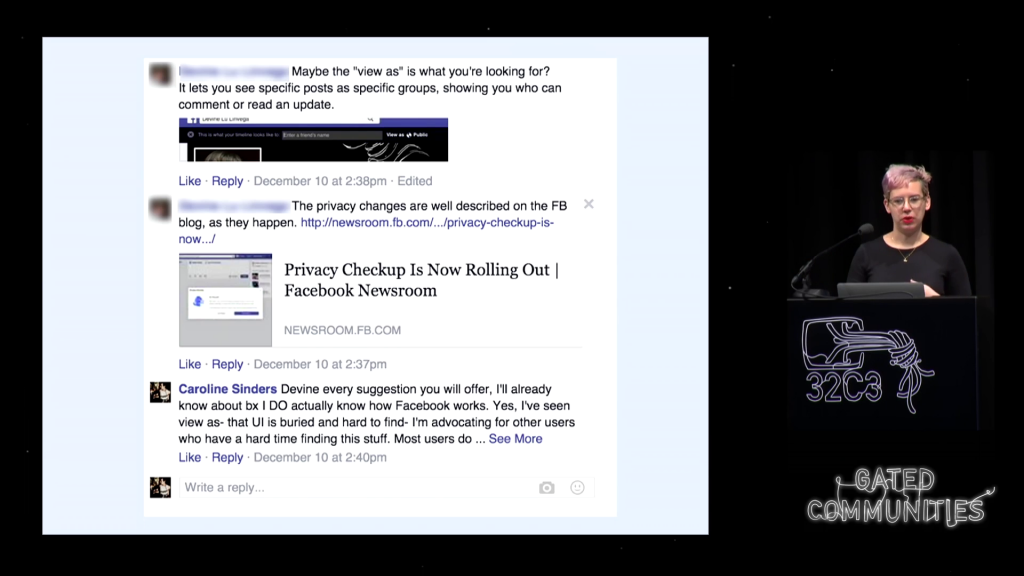

When this project launched, a friend wrote about me and some work I had been doing, and one of her followers legitimately believed that I did not understand Facebook. And he took it upon himself to try to explain Facebook to me. Which other than being kind of insulting because I work for a tech company and I have a Masters in interactive technology, what I found illuminating is the fact that this is not a weird response. This is not unusual, for someone to say, “Oh, right. Facebook is so hard to use when it comes to privacy and creating lists of posts to people that I’m going to screenshot everything for you.”

And that is never the way in which you should explain a communication tool to someone. If you have to screenshot something to someone, you fucked up as a designer. [clapping from audience] And those are my general thoughts on that. I can’t even. I just can’t.

So I guess what I impart to you and all of us here is, let’s make something not shitty.

And I know the reason people use Facebook, and this is not a talk to get off Facebook. I use Facebook. Facebook will persist for a very long time. Think of all the third-party apps that use Facebook as an automatic login. That is a design pattern that reinforces the need of Facebook in everyday users’ lives.

But as a designer and technologist, I want to make something better even if it’s just for my friends. We could do socialmedia.onion? Thoughts? And that’s sort of where the future of this project comes in, as I’m actually working on a social media co-op with two technologists in New York, Dan Phiffer and Max Fenton.

I’m doing another round of Social Media Breakup Coordinator in Oakland in January. And I’m hoping to keep gathering data around the ways users use these platforms through my performance art piece, but also as well as covertly keep [imparting] information on privacy. And what would it be like if we lived our lives just a little less online. And I’m hoping to eventually have a really robust data set that could be used as an actual data set and not just a sampling.

Thanks.

Audience 1: Did you ever ask the question why those people didn’t understand the settings on Facebook, etc. and still used it. I mean, it’s like walking into a gun shop, purchasing a firearm, and you have no idea what to do with it.

Sinders: Right. I think… What I said later in the presentation is we have these design patterns in everyday life that really actually enforce this use of Facebook. So this project was really centered around general users, and a general understanding of technology. We are moving to a very highly digitally-literate and data-literate society, but we’re not there yet. There’s pockets of literacy. This is a really good pocket of literacy right here; we’re a really awesome community.

But one thing I stress is like, the people that are “misusing” or “misunderstanding” Facebook, they’re not like elderly parents. They’re actual cohorts of mine that are my age, people younger, and people even a few years older. And I think the reason is that Facebook is really easy. And it’s highly addicting to use. And it’s like a phone book; everyone’s there. It stores birthdays for you, which is really actually helpful. It’s a fast way to talk to people. But I think the bigger thing is it’s enforced on other sites. Think of all the web sites you go to during a day and how many of them say “log in with Facebook,” “sign up with Facebook.” “Log in with Twitter.”

And those design patterns, which seem really innocuous to us actually are really important. They further enforce the ubiquity of Facebook, because it makes it easy. I mean, I’m shuddering thinking about all the third-party apps that would be associated right now with a Facebook login if you’ve done that for every site. But the common user does not know that. And that’s sort of the issue, I think.

Audience 2: Hi. Thank you very much for this interesting talk. I have basically two questions that kinda are the same, and they are around the art project part of your talk. And the first one is how did you make sure that people would actually understand that this was a piece of performance art. Did you rely on the absurdity of your proposition, that that would be recognized? Because clearly people thought not, and in terms of satire there needs to be some element of exaggeration or something that makes it clear that this is intended as a piece of art. So I was just wondering what your thought was on that.

And the second part was where do you— So the data that you get from your piece of art you presented as a research outcome, almost. So that obfuscates the artiness of your project and turns into real-life data. And isn’t that also one of the problems why people are so careless with Instagram, because they see it as art when they photograph their food, whether that’s true or not? That’s open to debate. But they don’t see it as an actual act of data collection.

Sinders: Right. So, I guess to sort of back up. The way in which I describe myself [is] as a speculative designer, and I think about critical design a lot and critical making, and like what is that line. And oftentimes you’re making something real that is sort of making a point. Most people seem to sort of get that this was an art project of mine. And it helped that I was in New York doing this, and I was doing it in an art gallery that’s a video game gallery. So there were arcades in the back of the space.

But certain people, I actually realized… Because I had a couple people phone in that had sort of seen this and had signed up online and were not in New York. And I realized that they didn’t know that this was an art piece. And I kind of went with it. And a lot of that is they were signing a terms of service agreement, I did tell them this is not therapeutic advice, I’m not held liable for any decisions that you make, and I said all that over the phone to make sure that they understood that. And then I told them these are just suggestions. You don’t have to follow them, and you are allowed to push back. And that’s what I tell every participant. I’m giving you these suggestions based off my best-practice knowledge and this algorithm I’ve designed that you don’t get to see.

So your questions are triggering certain results, but you are also allowed to say, “I don’t like that,” and I can tailor them slightly. If you don’t like the result at all, you should take the quiz again. But the bigger thing is that it walks this really weird line. And this is a weird anecdote, but I’m also a portrait photographer. My background’s actually in fine art photography and I got a Masters in interactive technology years later. And my work was my family and I recreating moments post-Hurricane Katrina. So people always ask, “Are these real photographs, Caroline?” Well, they weren’t taken on the fly. I set them up. But they were real to me.

And they’re saying something. And that’s the way in which I would describe this project. It’s not real, but it was real to us in the moment, and it’s commenting on things and also providing real solutions.

Audience 3: Hi. Do you know of some software that shows how open you are to other people? For example, a Facebook app or something that shows you a mirror of yourself, more or less.

Sinders: I don’t know of any sort of checker like that. I use a variety of different things. A friend of mine made a really great WiFi sniffer, which is at the extreme end of what you’re talking about. Not that you all should do this, but I often will unfollow and refollow, and unfriend and refriend people and change my privacy settings, and then I try to log out and get someone else to log in, see if they’ll let me audit. And then I will see what I look like to other people. That’s a level of insanity that I don’t think most people in this room should necessarily engage with. But I don’t actually know of a checker that lets you see that.

I know that there are analytics systems you can download with Instagram to see who’s unfollowed you and followed you, and who’s following you that follows other people that you know, which currently Instagram does not have that analytic feedback for users. It’s a third-party app you have to download.

Twitter has started to add analytics on the side to sort of give an idea of how successful your tweets were. But they’ll never say like, “This is who didn’t follow you that accessed this tweet today.” But they give you a more robust analytic breakdown of, “Your tweet about puppy dogs did really well, but your tweet about OpSec did not.”

Audience 4: Hi. I really appreciate your insights on visual design and the user experience of data at rest. I’m really curious what your thoughts are on temporal design and the user experience of data in motion. Because you mentioned that one of the things that came out of your interviews was people having a sense of just sort of not understanding social media and feeling like they need help understanding social media.

In programming we talk about code smells, which are sort of features of code and how people use code that are a sign that something’s probably not designed right here. And it seems to me that that sense of misunderstanding is a design smell. Maybe that there’s just too much trying to consume users’ attention and we need to change the rate at which we’re delivering. Anyway, it’s an open-ended question. I’m just really curious what your thoughts are there.

Sinders: So I’ve actually thought about that a lot. I actually haven’t met with any engineers at Facebook or Twitter, but if you’re here I’d love to talk to you. But I met with someone that worked in branding at Twitter and I asked him to just sort of talk about his day job and describe how the branding team targeted ads. Because I figured that was a really good way to get a sense of how the algorithm was working.

He started spouting a lot of buzzwords, as he is prone and wont to do, because he was a coworker of mine from a really old job. But he said something that was really fascinating to me. He was like, “Well you know, Caroline, there’s just so much noise. We have all these different algorithms working, but it’s just so much noise on top of each other and you’re just trying to find this little signal.” So I know for instance with Twitter it’s exactly that problem, that they infrastructurally designed themselves incorrectly, and to combat it you can’t… They’re at a point where I feel like they cannot shut it down and rebuild it and become minimal, with a better working codebase. So they’re building on top of everything.

The reason I also think that is a lot of anti-harassment that they have they’ve been rolling out for verified users and not for the common user base. So if you’re a verified user, the way in which their anti-harassment initiatives work, it works way different and way better for you. They have an algorithm working where you will never see as a verified user certain harassment tweets. They’re catching them before they come to you, and you can look at them later. But there’s all these really highly specific changes… And I have not yet seen a verified user account. No one’s let me log into theirs. (Again, if you have that, let’s chat.) But I’ve seen enough screenshots and read enough about it, and talked to friends who have it. And it’s like Twitter 2.0. It’s just slightly better.

So what I think the bigger issue is, there’s so much data in motion that they can only isolate it for what they are infrastructurally deciding who is a power user, and that power user infrastructurally actually becomes a better power user.

Audience 4: I guess the hidden question I have there is really more of, is Twitter eventually doomed to tear itself apart because it’s triggering people’s System 1 responses instead of their reasoning.

Sinders: I really don’t have an answer to that question, because as a Twitter user who’s thought about quitting but I really love the community I have on Twitter, it’s kind of a weird emotional negotiation that I have of like, I don’t know how accessible I am and I face harassment on a usually monthly basis, for a variety of different things. And it’s this weird negotiation I have of, why am I still here? But I actually legitimately like it. And I think that that’s the big issue. It’s like maybe it will pull itself apart, but harassment while it affects a lot of people is also affecting hyper-specific groups of people. And I think a lot of the fear around it, rightly so, is well if it happens to one person it could happen to you because we’re infrastructurally in the same place and we’re both equally open.

I guess I’m not sure. I’m interested to see what happens in the next two or three years, because Twitter is not gaining any new followers at this point. They’re kind of starting to plateau. So they are not growing at a rate at which other social media networks are growing. And that’s a major issue. And some of that issue could be tied towards bad infrastructural design or really poor code of conduct.

Audience 5: Last year my brother blocked my mom on Facebook and she still vocally beat about it. I mention this because many times our online social network is almost directly mapped or closely intertwined with our offline social network. So did you look into making changes into these social networks that’s online, how does that affect our social network offline? As much as you may unfollow and stop talking to your boss or your former colleagues, you’ll still meet them at conferences and at tea parties. So how do you deal with that change of this network online which does not actually give a clear picture of how your social network looks like. [?] the interaction between the offline and online after that change.

Sinders: I definitely thought about that a lot. Just in general as a researcher I’ve always been really intrigued by societal norms and propriety and what is polite behavior across many different cultures. And I’m speaking as an American but I come from a hyper-specific place in the United States. I come from Louisiana, which is the American South. We’re a hyper-hyper-specific culture. We speak two languages. It’s English and then Cajun, which is an oral-based language. I only know a couple words. But New Orleans where I’m from has the highest rate of birth retention. 75% of people that are born there stay there. So the ways in which I socialize as a New Orleanian is very different than the ways I socialize as a New Yorker.

And that’s true even, I think, when you get even more localized. If you look at Americans versus Canadians versus Mexicans and getting into Latin America. So I thought about that a lot, that actually a lot of the interactions you have offline definitely affect and influence the interactions you have online. So a lot of advice I gave people was also having to break down like, how often do you interface with this person, and let’s think of the most neutral and polite way to break things down.

So yeah, I thought about that a lot. I haven’t yet, with the people I’ve given advice to, said unfriend someone. Oftentimes unless the relationship has incredibly soured, that’s usually the advice. But if it’s in the case of a boss, for instance, my reaction is oftentimes, “Why don’t you reach out to them if they’re an old boss and say, ‘I’d love to keep in touch. Here’s my email. But I’m keeping my Instagram just for friends only.’ ”

Audience 6: From your project, I’m curious to know if you think that social breakup is actually possible or if it’s not really possible because people end up seeing your stuff anyway.

Sinders: Is it bad if my response is “both?” I think that as social media users, for a really long time we’ve been taught to interact with social media in a particular way. And I don’t think that that way is correct. Facebook actively wants you to post more, as does Twitter, and Instagram wants you to share and accumulate followers. And that’s the way in which these networks grow. You’re creating content and that content is analytics, and they package and sell that to advertisers.

I’m politically agnostic on that, but I have my own personal thoughts, as a researcher that’s just what they do. But I think that that push towards sharing and cross-platform sharing, that you can cross-post, perhaps in terms of privacy is a horrible idea. What are you saying, how are you saying it, are all identifiers, and they’re all identifiers that can pinpoint location and who you are, and who you are offline and where you are. And that’s something I often do try to impart to people: what are you saying and when, and does it need to be said online?

So I personally, and I always give this example with people that sit with me, I’m like, “I personally try to not post location but I have a very specific reason I can’t do that.” And I had a lot of internal dialogue of should I post that I’m at CCC? What if someone’s here and they want to talk to me about something that I don’t want to talk about? Or what if I say I’m home, does that make my mom more of a target if someone wanted to try to swat us again?

And those are extreme examples. But it’s also important to think about like, are you accidentally doxxing yourself? If you’re saying, “I’m at the bar downstairs from my apartment. Let’s check in on Foursquare and post that on Instagram,” you’ve pinpointed where you live. And that’s information that people don’t actually need. So I always try to walk this line of like, I think it’s totally fine to post pictures of food and family and friends and to do it frequently. But I think it’s important to know are you highlighting where you are and are you highlighting regular patterns in your lives? And are you then amplifying that to a variety of people that you don’t know and you have no idea how many people are accessing it?

Audience 7: [Angel] I’ve got a question from the Internet. Yesterday there was a talk titled “The Possibility of an Army” by Constant Dullaart, who bought thousands of fake accounts. What do you think about these actions?

Sinders: I guess I need a little more context. This person bought thousands of fake accounts to…?

Audience 7: [Angel] Actually, I don’t have any context for that for you.

Sinders: In graduate school, this fantastic ethnographer, Tricia Wang, came to speak to us and the professor, Clay Shirky, at the time was saying he bought her 50,000 followers in a day, and I think he paid like $100. I think it’s really fascinating the ways that that bumps you up into a different sort of social strata, and how it presented her in a completely different way online. That changed the way in which people interacted with her and the amount of followers she started to accrue on a daily basis.

Oh, I think I’m… So, this person created like a thousand different accounts. I think that that’s what that question may have meant.

Audience 7: [Angel] From what I read, he bought them.

Sinders: Oh, he bought them? I would be curious to know why. Like if he was looking at data or if he just bought a thousand followers. But I guess I need a little more information.

Introducer: Alright. Well the person’s not here so we don’t know. We have another question from mic #3.

Audience 8: Hi. My sister had an occasion where someone who she sort of…became a stalker, and didn’t really know her very well, but then started to send really weird messages to her. And it got to a point where they were following her on Instagram and she can’t really control…because this person knows who she is and her friends. She couldn’t control her information and so this person would send stuff based on, “Oh, we bought this blender,” and would deliver it to our house along with letters about like how they would have sex even though they had never really interacted before. And it got really scary and unsafe. And it’s sad for that person but also it became really scary for my sister and she didn’t know where to go and what to do. And when she went to the police and said, “I’m scared that this person might come and touch me when I’m going home late at night. What should I do?” They said, “Well, until something happens we really can’t do anything for you.” So there may be resources out there for people who are facing this, but for those watching this video, what would you recommend them to do?

Sinders: First of all I want to say I’m so sorry for your sister. That’s horrible. And secondly what I would suggest doing is, there are a variety of different non-profits that exist. Crash Override is one once you’ve been harassed in a really specific way. What I would suggest is Smart Girl’s Guide to Privacy has this. It’s this really fantastic book, and they list where you can access, I think, lawyers that are more digitally savvy around digital crimes.

And my recommendation in a case of that with that kind of persistence where it’s a regular person, meaning a regular stalker, it’s one entity and they’re actually starting to sort of move away from social media and moving into letters, you should get a lawyer. And from there figure out ways to assign space between you and the other person across state lines, even. I don’t know the particulars of this case. If this person is in a different state than your sister, that gets a little bit trickier. Are they in the same city? [reply inaudible] So they’re in the same city. There’s a lot more you can do. My recommendation would be to immediately find a lawyer who is well-versed in online harassment. But if that person’s in the same city and they’re sending letters, I think that’s a pretty good reason to start pressing charges. That would be my immediate reaction.

Audience 9: Yes. Thank you for your wonderful talk, first of all. One thing I find myself personally very preoccupied by is not just the question of how to act on social media in the present, but also how to clean up after my past. Certainly things I’ve written or posted before. And I actually find that the obstacle towards doing that is frequently infrastructural. It’s really hard to sort of have an oversight of everything you’ve done in the past. What do you sort of see as the future of design on these platforms? Are they intentionally making it difficult, or have they coded themselves into a corner, and is this going to become a bigger problem?

Sinders: I guess I would say, basing off the way the design is now, I think it’s a mixture of having coded into a corner and also trying to make design minimal. So, a lot of trends in design are around optimization and usability. But we’re optimizing for speed and we’re making things more usable for mobile. But we’re not optimizing or designing for safety. And we’re not optimizing or designing for longevity of life within interacting on these platforms. So I would say it’s a misuse of design priorities. And I think that now there is some pushback with people saying, “How’s this being accessed? There’s harassment persisting on this platform. How’s this happening? Oh, it’s happening because of these reasons,” etc.

I would say that it’s just a misalignment of priorities within a design hierarchy and a coding hierarchy.

Further Reference

This presentation page at the CCC media site, with downloads available.