Roderic N. Crooks: I’m introducing to you Dr. Safiya Noble, Assistant Professor at the University of Southern California’s Annenberg School of Communication. Previously, she was a professor at the Department of Information Studies at UCLA, where she held appointments also in the departments of African American Studies and Gender Studies. She is cofounder of the Information Ethics & Equity Institute, an accrediting body that uses research to train data and information workers in issues related to social justice and workforce diversity.

She is also partner at Stratelligence, a firm that works with organizations to develop strategy based on informatics research in areas that include justice and ethics, labor and management, health and well-being. And as if that weren’t enough, in addition to her current book project Dr. Noble is coeditor of The Intersectional Internet: Race, Sex, Class and Culture Online, and Emotions, Technology and Design: Communication of Feelings Through, With and For Technology. She’s written numerous articles about race, gender, and the information professions, among other topics.

So I’m especially excited that Dr. Noble could join us this quarter. She provides a model for scholarship that is technologically sophisticated, politically engaged, and generative in its approach to disciplinarity. Please join me in welcoming her.

Safiya Noble: Thank you Roderic for that lovely invitation. You all really scored when you got him to come and be part of your team here. We miss him in LA, and he was a favorite of ours at UCLA, so really happy for you to be here. And so pleased to join you this afternoon and to be competing with not just the beautiful sunny, hot, winter day, which still is amazing to me after having spent thirteen years at the University of Illinois in Illinois, to come home and really enjoy my life thoroughly with these days.

So thanks for having me. I thought today I would talk a bit about the forthcoming book, Algorithms of Oppression, and then also maybe leave a little bit of space for us to dialogue. So I’m going to move fairly quickly through some of this, just so that we have enough time to also stay engaged in conversation. I’m going to set even a timer for myself to warn me that we’re just about out of time. Alright.

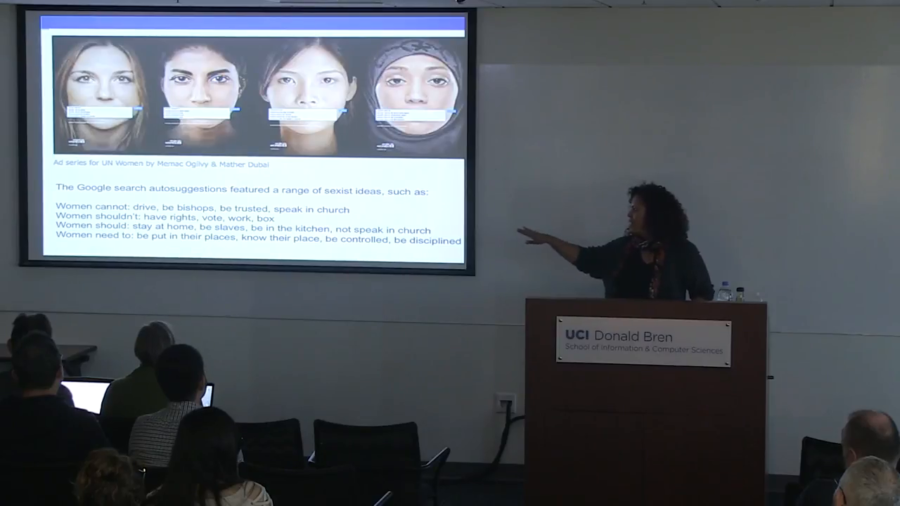

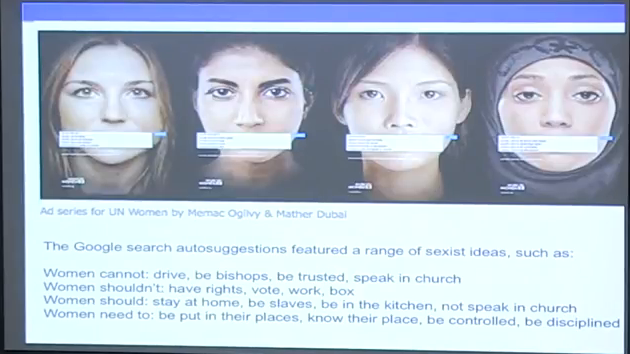

Some of you might be familiar with this. This is a campaign from October 21st of 2013, when the United Nations teamed up with an ad agency, Memac Ogilvy & Mather Dubai where they created this campaign kind of using what they called “genuine Google searches.” This campaign was designed to bring critical attention to kind of the sexist ways in which women were regarded and still denied human rights. Over the mouths of various women of color, were the auto-suggestions that appeared when searches were engaged, and they placed those auto-suggestions over the faces, the mouths of these women.

So for example, when they started to search “women cannot…,” Google auto-populated “drive, be bishops, be trusted, speak in church.” “Women shouldn’t…” have rights, vote, work, box. “Women should…” stay at home, be slaves, be in the kitchen, not speak in church. “Women need to…” be put in their places, know their place, be controlled, be disciplined.

Now, what was interesting to me about this campaign when it first appeared is that it really characterized, was presented in a way that many people think about Google search, and particularly auto-suggestion, which is that it is strictly a matter of what is most popular, and that the kinds of things that we find in search are strictly a matter of what’s most popular.

The campaign in fact said, “The ads are shocking because they show just how far we still have to go to achieve gender equality. They are a wake up call, and we hope that the message will travel far,” noted Kareem Shuhaibar, who was a copywriter for the campaign who was kind of quoted in the United Nations web site.

Now, I found this campaign interesting because I thought that maybe we could spend some time looking at campaigns like this and a whole host of kind of failures of Google search to talk about what other processes might in fact be involved with the kind of information that we find there. And quite frankly what I think is at stake, mostly for communities who are already marginalized or disenfranchised and how this might in fact exacerbate that.

So I want to just give— You know, I’m not really one to give trigger warnings in my classes. But I’ll give the trigger warning that if you love Google, you’re going to be kind of super mad at me later. So that’s your warning.

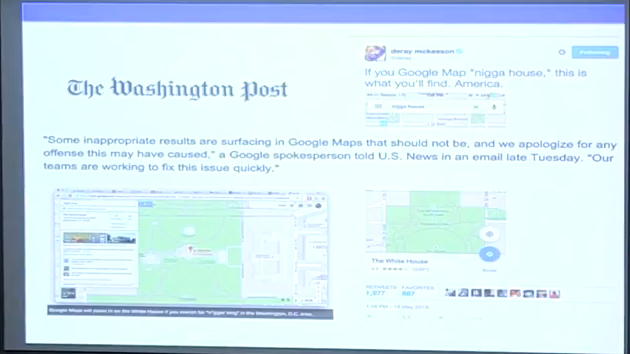

Alright, here’s a story you might be fairly familiar with. Here’s The Washington Post. This was about a year and a half ago. Deray McKesson, who’s a fairly well-known activist on Twitter—some of you might be familiar with him. He became fairly well-known around Ferguson in particular, and advocating for Mike Brown online. He really became super popular after Beyoncé followed him and then he spiked in followers. So I just feel compelled to say that if anyone here knows Beyoncé and you can get her to follow me, it would like really amplify the work. Just throwing that out there.

So Deray tweets,

If you Google Map “nigga house,” this is what you’ll find. America. pic.twitter.com/YWRO1OiYgc

— deray (@deray) May 19, 2015

And what was happening at the time was that if you did a search on the “ ‘n‑word’ house” or the “ ‘n‑word’ king,” Google Maps would take you to the White House. And this was during the presidency, obviously, of Barack Obama, who some of us still wish was president.

So The Washington Post, US News, everyone’s kind of contacting Google, trying to get a quote from them on how could this happen. And this is a very typical kind of Silicon Valley response, or a typical—not just from Google but from many Silicon Valley companies, which is, “Some inappropriate results are surfacing in Google Maps that should not be, and we apologize for any offense this may have caused. Our teams are working to fix this issue quickly.”

There’s kind of two things going on here. First, “we apologize for any offense this may have caused.” I know for me that when my husband says something like you know, “I apologize if you’re offended,” I actually don’t feel apologized to. So it’s like a weird, interesting non-apology apology, which is something that we often see from big corporations in general, which is if there’s like one random person in the world who might’ve been offended by this then we apologize. As if the whole notion of taking responsibility for the results in fact that their algorithm produced would in fact be offensive and we could just really powerfully claim that.

But more importantly I think in their statement, that “our teams are working to fix this issue quickly” is really kind of a pointed way in which many tech companies presume that their platforms are working kind of perfectly, and that this is a momentary glitch in a system, right. And so this is another way that we often see discourse coming out of tech companies.

The work that I do is really kind of situated in using kind of a critical information studies lens. So I’m going to talk about that and I’ll share with you some of the people who’ve really influenced the work that I’m doing. But one of the things that I think is really important is that we’re paying attention to how we might be able to recuperate and recover from these kinds of practices. So rather than thinking of this as just a temporary kind of glitch, in fact I’m going to show you several of these glitches and maybe we might see a pattern.

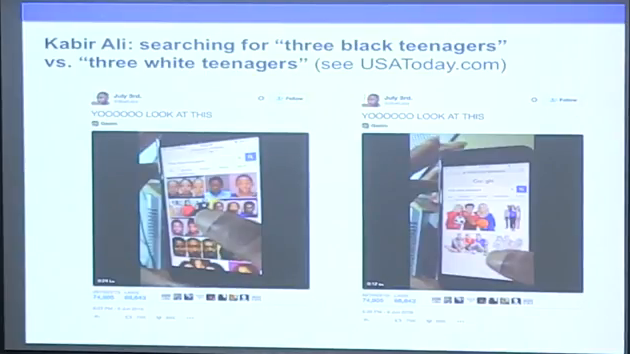

Here we have Kabir Ali. Some of you might remember this. Kabir Ali, about a year ago he took screenshots of a video. He’s a young teenager who has his friend video him as he does a search for “three black teenagers.” And when he does a search on three black teenagers, as you can see almost every single shot is some type of criminalized mug shot-type of image of black teenagers. And then he says to his friend, let’s see what happens when we change one word. And he changes the word “black” to “white,” and then we get some type of strange Getty photography, stock images of white teenagers playing multiple sports apparently at one time that kind of don’t go together. And this is the kind of idealized way in which white teenagers are portrayed.

And so this story also goes viral quickly. Jessica Guynn in fact, who is the tech editor for USA Today (If you want to follow kind of good critical reporting of the tech sector, I would say take a look at what the tech editors at USA Today are doing.), she covers the story, as do many others. And instead of issuing an apology after that incident, Google just quietly tweaks the algorithm. And the next day @bobsburgersguy on Twitter notices that the algorithm has changed.

https://twitter.com/bobsburgersguy/status/740261645964959744

Now, what’s interesting are again the choices that are made. Google adds in a young white man who’s in court who’s actually being arraigned on charges of hate crime, along with kind of keeping— And so this idea that to correct the reality or a particular truth, we’re going to also add white criminal pictures, again legitimating that the black criminality was actually legitimate. And then you know, we throw in like a couple of black girls playing volleyball, and apparently that’s the fix. Okay. So this is again kind of the quiet response.

Bonnie Kamona, Twitter, 4/5/2016

Here’s another failure. Google searches, this is a story that went viral. When you did image searches on “unprofessional hairstyles for work,” you were given exclusively black women with natural hair. So I wore my unprofessional hairstyles for work—because that’s actually the hairstyle I wear every day—for you. And you know, when you change this to “professional hairstyles for work,” you are given exclusively white women with ponytails and updos. And I often try to explain to my white colleagues that it’s the ponytail and the updo that really make you professional. And also being white.

The algorithmic assessment of information, then, represents a particular knowledge logic, one built on specific presumptions about what knowledge is and how one should identify its most relevant components. That we are now turning to algorithms to identify what we need to know is as momentous as having relied on credentialed experts, the scientific method, common sense, or the word of God.

Gillespie, Tarleton. “The Relevance of Algorithms.” in Media Technologies, ed. Tarleton Gillespie, Pablo Boczkowski, and Kirsten Foot. Cambridge, MA: MIT Press. [presentation slide]

So what does this mean? I mean, one of the things that is really helpful is kind of looking to people like Tarleton Gillespie. I think he says it perfectly in one of his pieces about the relevance and importance of algorithms. He says “that we’re now turning to algorithms to identify what we need to know is as momentous as having relied on credentialed experts, the scientific method, common sense, or the word of God.”

And I’m going to suggest something that may be common sense to some of you, might seem a little provocative for others. Which is we have this idea that algorithms, or computerization, automated decision-making, somehow can do better than human beings can do in our decision-making, in our assessment. This is kind of part of the discourse around algorithmic decision-making or automated decision-making.

And these practices to me are quite interesting because they rise historically at a moment. In the 1960s we start to see a rise of deep investment in automated decision-making, computerization, the kind of phenomena that moves to the fore into the 1980s, desktop computing. And this coincides historically at the very moment that women and people of color are legally allowed to participate in management and decision-making processes.

So I find this interesting that at the moment that we have more democratization of decision-making in kind of the highest echelons of government, education, and industry, we also have the rise of a belief or an ideology that computers can make better decisions than human beings. And I find this to be an interesting kind of tension, and these are the kinds of things that I look at and explore in my work.

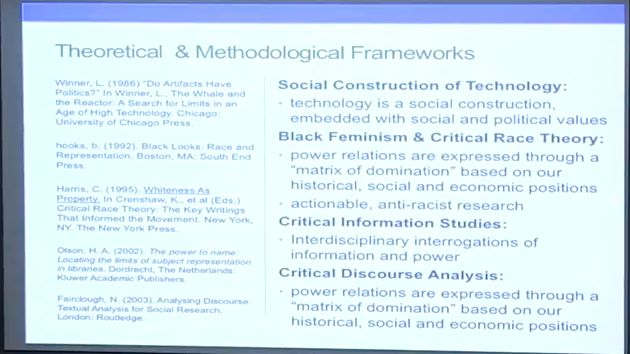

So let me just say you know that every academic has to have a slide that has too many words on it. And that’s this slide. So just bear with me for a minute and I’m going to do better. But I think it’s important especially for grad students who are in the audience and undergraduates, if you’re interested in thinking kind of in an interdisciplinary way about digital technologies, for me when I was a grad student and I started thinking about black feminism and critical race theory and kind of the information science and technology work that I was being exposed to, I was often met with kind of derision around that. In fact I remember I was just sharing with some of the grad students earlier today at lunch that I can remember being in a research lab meeting and one of my professors saying something to me like, “What is black feminism? Who’s ever even heard of that?” And I was like, pretty much everybody in gender studies and black studies. But I guess…they don’t count?

But what I found at the time when I was thinking about racist and sexist bias in algorithms and technology platforms, particularly Google, that was a hard kind of idea to get through. This is like 2010 or so when I kind of first started thinking about and researching this. Now you know, everyone’s talking about biased algorithms. But there was a time when you know, that just didn’t—that like my mother-in-law wasn’t talking about it. Do you know what I’m saying? Like it’s in the headlines now all the time.

So I just encourage you to think about, for those of you who are students, what are the frameworks that you’re pulling in from other places outside of kind of the traditional fields of library and information science, or information science, information studies, computer science, that can help you ask different questions. And that’s what I was interested in doing, is at the time the people who were writing about Google kind of around 2010, ’11, ’12, were really thinking about the economic bias, for example, of Google, and how Google prioritizes its own interests or its investments for example, over others. And we see this in the early work of people like Helen Nissenbaum, who was writing back in 2000 about how search technologies hold bias, or Lucas Introna.

But I was interested in the disproportionate harm that might come to racialized and gendered people through these kinds of biases. And again I think that when we take our own— I think of this in like kind of— You know, I was a sociology major as an undergrad, and so I was really influenced by people like C. Wright Mills in thinking about the private troubles that I had, and might those also be public concerns. I was personally troubled by the fact that I was seeing people who look like me being characterized in a particular way, and people that I cared about who were part of the communities that I was a part of kind of being characterized a particular way. And then I started to realize that this was more of a public phenomenon, not just a private trouble.

So here I’m thinking about things like the social construction of technology. Now, if you’re in gender studies or you’re in ethnic studies, black studies, Latinx studies, American Indian studies, we talk about social constructions of race all the time. Or social constructions of gender. We’re comfortable with those kinds of framings about race and gender not being biological, not being natural, not being fixed, but in fact being a matter of power relations kind of historically situated; fluid, dynamic things that can change over time in their meaning.

And so I was very drawn to the social construction of technology theorists. People like Langdon Winner, Arnold Pacey, who were really helping us understand that the technologies that we’re engaging with are in fact not flat, they’re not neutral, but they’re also laden with power, and they are constructions of human beings. And so then what might human beings be putting into the digital technologies that we’re thinking about?

The other dimension of my work in that it’s black feminist and kind of engaging with critical race theory, is I was interested in, and I continue to be in the things I’m thinking about, interested in…you know, things that are actionable, things that matter to me in the world, and that are legible, also. So for example, when I say you know, “My mother-in-law doesn’t know anything about algorithms.” But I can talk to her. She knows that messed-up things happen to black people. And she can understand that my work is trying to engage there. You know what I’m saying? So these are the kinds of things where when we think about research that can make a difference in the world, I think that’s important. I also use— Obviously I’m trying to bolster this field of critical information studies and add a voice to that.

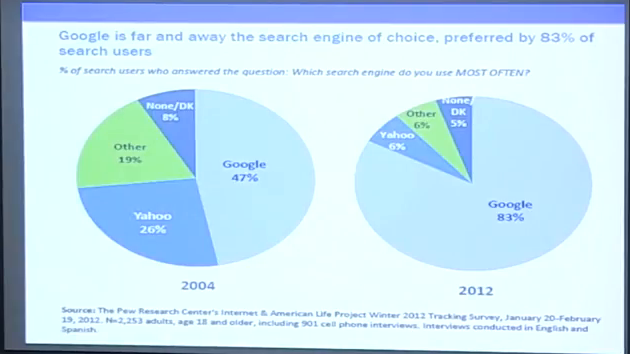

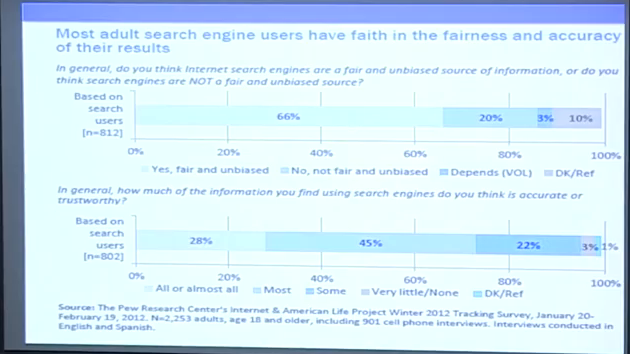

Alright, so why Google? Quickly, because Google is a monopoly and they control the search market, along with other kinds of markets. Pew did a series of studies on search engine use. They did one in 2005. The last one they did was in 2012. They do these kind of tracking studies on people who use search engines and what they think, and I’m just going to share with you a couple of headlines from their last study. Back in 2012, 83% of the market of people who use search engines used Google. More than that if we look at mobile.

So people often ask me—I’m just cutting this off now before we get to the Q&A, don’t ask me why I don’t look at Bing. Because nobody’s using Bing, okay? That’s why. That’s the easy answer. Study the monopoly leaders because everyone’s trying to replicate what they’re doing. And so this is why it’s important to study that.

Here’s what’s especially alarming out of the Pew study. They say that according to these users of search engines, they report generally good outcomes and relatively high confidence in the capabilities of search engines. Seventy-three percent of search engine users say that most or all of the information they find as they use search engines is accurate and trustworthy. Seventy-three percent say accurate and trustworthy. Sixty-six percent of search engine users say search engines are a fair and unbiased source of information.

Alright, so this is interesting to me. Now, I’ve been giving this talk and talking about the book for a while. And you know, it’s interesting to me to give this kind of post- the last presidential election. Because now again, people have a much higher sense of like, “Hey, wait a minute. Maybe platforms are doing something that we hadn’t thought about before in terms of misinformation or circulating disinformation.” I think of it also another way when I’m in a more cynical, pessimistic mood—I might say you know, nobody cared about these kinds of biases when they were biased against women and girls of color, but now everybody cares because it’s thrown a presidential election. So you know, you could think about it whatever way you want to.

Leading search engines give prominence to popular, wealthy, and powerful sites—via the technical mechanisms of crawling, indexing, and ranking algorithms, as well as through human-mediated trading of prominence for a fee at the expense of others.

Nissenbaum, Helen, & Introna, Lucas. Shaping the Web: Why the Politics of Search Engines Matters [presentation slide]

Helen Nissenbaum I think tried to forewarn us back in 2000, you know. She said the leading search engines really give prominence to the popular, wealthy, and powerful sites. Really, her study was that those with more capital are able to influence what happens kind of in the realm of commercial search. And of course this comes at an expense of others who are less well-capitalized. And so I think this is part of what I’m trying to think about in my work.

Now, here’s the part of the talk where you’re going to feel like you’re going to need to hit the wine bar after this, okay. So just bear with me and we’ll get through this part but I think this is really at the epicenter of the reasons why I care about this topic.

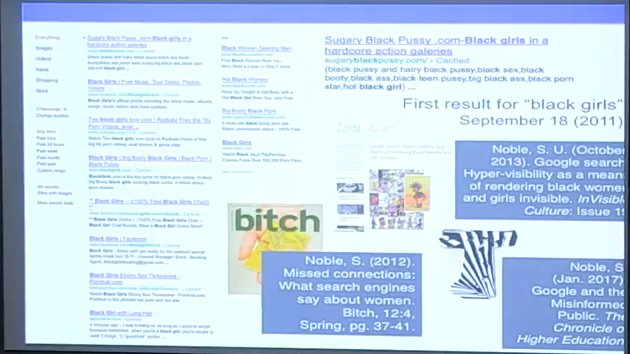

When I first started collecting search results in 2010, 2009, I was looking at a variety of intersectional identities. So I was looking at black girls, Latina girls, Asian girls, Asian-American, American Indian, I was kind of looking at all kinds of different combinations. I looked at boys, I looked at girls, I looked at men, I looked at women. Really not trying to essentialize the identities but to think in terms of kind of the common ways in which people are also engaging with identities, whether they’re their own or others’ identities. And I used mostly the kind of categories that’re in the census, because those are oftentimes the kind of ethnic and racial terms that people get socialized around.

And when I first started this work in 2009, the first hit when you did a keyword search on “black girls” was hotblackpussy.com. I’m going to say “pussy” a bunch of times right now and then we’re gonna move forward.

So, this was concerning to me. This was kind of Google’s default settings, right. There was nothing particularly special. And so I was engaged with other graduate students and we were collecting searches with different IP addresses, off campus network, with machines that pretty much the only digital traces were that it came out of the box and we had to connect to a network. But really, machines that didn’t have all of our digital traces personally. Although I will say that I collected searches also on my own laptop, and I’ve been writing about and kind of critiquing Google for a minute. And I still get messed-up results. So this idea that my own traces somehow would influence— I mean, Google’s never quite figured out that I don’t want to see that yet, no matter how many times I write about it or speak about it.

You can see here, by 2011 sugaryblackpussy.com has taken over the headline, followed by Black Girls, which is a UK band. Has anyone heard of the Black Girls? I mean, it’s like—one, okay. It’s a UK band of white guys. They call themselves the Black Girls. They’re incredible at search engine optimization and terrible at music distribution. Just throwing that out there because no one’s ever heard about them except for [inaudible name].

Okay, so they’re #2 followed by “Two black girls love cock,” this is a porn site; followed by another porn site; another kind of gateway chat site to a porn site; followed by the Black Girls, our UK band again, their Facebook page winning in the SEO game; followed by a porn site, and then by a blog.

So I first wrote about this in 2012. I was really interested in this phenomenon, and I contacted Bitch magazine—some of you might be familiar with, they’re like a feminist magazine. It’s really popular in the Bay Area where I used to live. And they critique kind of popular culture. They had a special issue out on cyber culture. And you know, I didn’t have the heart to say nobody’s saying “cyber culture” anymore because it was already out with CFPs. So I just wrote to them and I said, “You should let me write this story about what’s happening in search because this is really important.”

And they wrote back and they said this is not a story because everybody knows when you search for girls online you’re gonna get porn.

And I was like aren’t we like, the feminist magazine. We don’t want to critique it? Talk about it?

And they were like, “No. This is a non-story.”

And I was like you know, it’s more complicated than just what happens specifically to black girls, or Latina girls, or Asian girls. It’s also that girls, that women…all these sites are about women, but women are coded as girls. This is just kind of a fundamental Sexism 101. We could talk about that, too.

It’s not a story. They’re not interested.

So finally I wrote back—because I’m relentless—and I said… This is just a tip for grad students, do that. Like, stay with it. I wrote to them and I said, “I’d like you to do a search on women’s magazines, and just let me know if Bitch magazine shows up in the first five pages.”

And then like ten minutes later— I was just visualizing that somebody got the email, and they printed it out and they walked around the office. And they were like, “Can you believe—? Oh my god, maybe there is something here.”

And then they wrote back and they were like okay, you can write the story.

So I wrote the story, and one of the things I talked about was like, what does it mean that the traditional players, of course, Good Housekeeping, Vogue, Elle…you know, the big, well-capitalized mass media magazines were able to dominate and control the word “women.” And really, unless you looked for “feminist media,” Bitch magazine was not going to be available to you.

And of course what does this mean? The readership of Bitch magazine is kind of like older high school-aged women, kind of into young adults. So this is a prime group of people for whom maybe a concept like feminism would be valuable or interesting, but would be kind of unavailable or inaccessible in the ways that keywords were associated with particular types of media. So this was one of the first places where I wrote about, and then I wrote some academic things, and then I wrote a book about it.

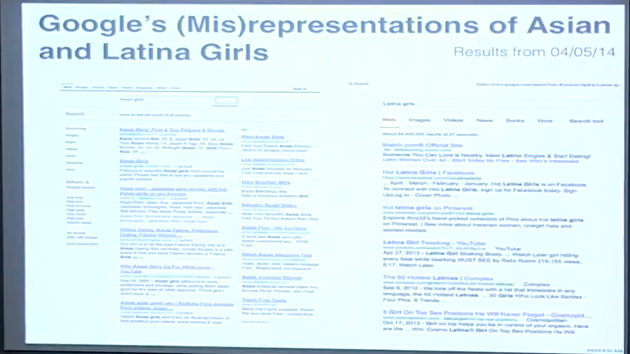

Here we go with Latina and Asian girls. Again, these are all hypersexualized kind of…Asian girls, porn and sex pictures and movies as our first. Going over here to Latina girls, we get a website that’s actually match.com but you can see it doesn’t have a yellow box around it; it isn’t really called out like an ad per se. Followed by Hot Latina Girls on Facebook and a whole series of sexualized representations of Asian and Latina girls.

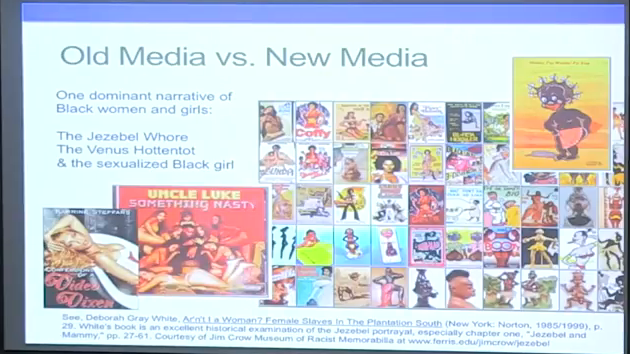

Now, these ideas about the kind of hypersexualization of black women and girls, women of color, this is not new. This is not a new media phenomenon. These are in fact old media practices. There’s a phenomenal resource for you, particularly if you’re teaching students who want to talk about the history of racist and sexist representation in the media and in popular culture. The Jim Crow Museum of Racist Memorabilia is really an amazing kind of online digital collection. It’s also a great collection when you’re teaching about digital collections and you’re thinking about digital libraries.

The Jim Crow Museum really started as a collection of what we would call racist memorabilia by a professor at Ferris State University and was all digitized. And what’s really interesting about this collection that gives us a long—you can see easily a 200-year history of what these kinds of sexualized images do, is it also gives us a counter-narrative about what they mean.

So, in the dominant narrative of black women as Jezebels, as Sapphires, and these hypersexualized stereotypes of black women, these are inventions that are used particularly when the enslavement of African people becomes outlawed in the United States and there has to be kind of a mass justification for the reproduction of the slave labor force. And so part of how that justification comes into existence is by characterizing black women as hypersexual, as people who want to have a lot of babies, right, that can be born into enslavement.

And so this is something that’s really important. These stereotypes, they don’t just come out of thin air, and they’re not based in some type of nature or natural proclivity of black women. They’re actually kind of racist, capitalist, sexist stereotypes that are used as part of a kind of economic subjugation of black people and black women. And you can read there quite a bit about longer histories of European fascinations with black sexuality and otherwise.

But this is a highly, deeply commodified kind of stereotype. And of course this is one of the reasons why it’s still present and prevalent in the kinds of information and mediascape that we’re engaging with.

Here’s an image search. This is even back as far as 2014. Now, I have to say you know, 2014, and I’m kind of giving you— One of the things that’s difficult about doing research as all of you know who study the Internet is that the moment you study a particular phenomenon that happens on the Internet, it changes. So I’d like to think in my own work that I’m kind of capturing these artifacts and then trying to talk about what these moments or what these artifacts represent, so that we can make sense of them. Because it’s likely that we’ll see them again.

Here’s an image search on “black girls.” Again, kind of consistent with the textual discourses in commercial search about black women. I really thought in 2014, honestly, that Sasha and Malia Obama would show up kind of in the top. You know, they were super popular. In 2009, we thought maybe Raven-Symoné, the last vestiges of her. We know why she’s out now. But you know, by 2009 we kind of thought maybe Raven would still be there, but she was gone, too.

And the linkages— Of course, these images are connected to sites that had a lot of hyperlinks, a lot of capital, that are connected in a network of capitalized and well-trafficked kinds of images.

One of the things that’s interesting to me as a research question is how would black women and girls intervene upon this if the framework of property rights, ownership, and capital…you know, those who have the most money to spend for example in AdWords, who are willing to pay more to have their kinds of content and images rise to the top. How would black girls ever compete economically—or numerically, quite frankly—against that, or in that kind of a commercial ecosystem? And again, who gets to control the narrative and the image of black women and girls has always been very important to me.

Audience Member: How would those images change if you searched for “white girls?”

Noble: White girls are also sexualized. I think you can see when you look over time kind of the hierarchy of the racial order in the United States, in terms of more explicit types of images are always more apparent for women of color. And this is very consistent with the porn industry. For example if you study pornography, which you know…proceed with caution. It’s a depressing topic, quite frankly.

But if you read the porn studies for example literature, you find that in the porn industry white women do what we would consider soft, less dangerous types of pornography, both kind of like emotional and physical types, and the representations of white women in pornography are not nearly as explicit as they start to become then for black women. Black women do the most dangerous types of pornography labor in that industry. And I think you see a mapping of that.

Media representations of people of color, particularly African Americans, have been implicated in historical and contemporary racial projects. Such projects use stereotypic images to influence the redistribution of resources in ways that benefit dominant groups at the expense of others.

Davis, Jessica L., & Gandy, Oscar H. Racial Identity and Media Orientation: Exploring the Nature of Constraint

One of the things that’s also interesting when you search for white girls is that white girls don’t characterize themselves as white girls. They’re just girls. And so this is also another phenomenon, that the marking of whiteness is actually a phenomenon that happens by people of color to name whiteness as a project and as a distinguishing feature that people many times who are white do not embody or take on themselves.

So, what else is important about Google and search? I think this is an important study. This was a critique that was written about Epstein and Robertson, who did a great— You know when you show up in Forbes and people are hating on your research that you’re probably doing a good job, okay.

So, Epstein and Robertson did this amazing study in 2013 where they argued that democracy was at risk because search engine results could be manipulated without people knowing it. They had a controlled study, and what they found is that if they gave voters…if they had them do a search on a political candidate and voters saw positive stories about that candidate, they signaled they would vote for that person. And in that same controlled study, if they showed negative stories about a candidate, people said they would not vote for that person.

So, they argued from their study back in 2013 that democracy was at risk because search engine rankings, particularly getting to the first page, was an incredible problem. Because of course we know from other people’s research like Matthew Hindman who wrote an important book called The Myth of Digital Democracy that people who have large campaign financing chests to draw from are able to make it into the public awareness, and they’re certainly much more likely to make it to the first page of Google search and be able to control the narrative and the story about their candidates because they have the capital to do so. And they argued in their study that unregulated search engines could pose a serious threat to the democratic system of government, and they certainly have been important players in talking about regulation of search engines.

Philip Bump, Google’s top news link for ‘final election results’ goes to a fake news site with false numbers, The Washington Post, 11/14/2016

Here we have Google’s top news link for final election results. This is the [inaudible] following the presidential election. The first link was a story that led to information here that Trump has in fact won the popular vote. So we know that that is an alternative fact. That is not a real fact. That that did not happen. That President Trump did not win the popular vote. And yet this is the first hit, right, immediately following.

And so, it’s been interesting to me to watch the conversations over the last few months about biased information, some people are calling that fake news, and an incredible emphasis on Facebook. But not necessarily as much of an emphasis on Google. And let’s not forget about the incredible import that Google has. And one of the reasons why I’m so interested in them is because they’ve really come to be, in the public imagination, seen as a legitimate, credible—you remember back to the Pew study—fair, accurate site where people expected that the information they find they can trust. And so this again is something that I think we have to be incredibly cautious about.

I’m going to quickly skim over this because I want to get to a more important topic here before I close out, which is to say that Sergey Brin, one of the cofounders of Google has been asked many times about the manipulation of search results. And here we had a story about white supremacists and Nazis, how they’ve hijacked particular terms. You might be familiar with this, of course. For many years they’ve been able to manipulate and game Google around the word “jews” and “jew,” and how that could be tightly linked to Holocaust denial and white supremacist web sites.

When Sergey Brin is asked about adjusting the algorithm to kind of prevent the cooptation of different kinds of words from white supremacists, I love his—it’s like I almost cannot keep from laughing when I read this. He says that would be “bad technology practice. An important part of our values as a company is that we don’t edit the search results,” Brin said. “What our algorithms produce, whether we like it or not, are the search results. I think people want to know we have unbiased search results.”

Carole Cadwalladr, Google is not ‘just’ a platform. It frames, shapes and distorts how we see the world;

Philip Oltermann, Germany to force Facebook, Google and Twitter to act on hate speech, both The Guardian

Except of course when we’re in France and Germany, where it’s against the law to traffic in antisemitism, and then we fully suppress and curate white supremacists’ antisemitic content out.

So we have kind of these different areas that happen for the American US press, and then a different narrative that’s happening in France and Germany, where quite frankly many platforms—not just Google, Facebook, Tumblr (which is Yahoo!-owned), many of these platforms are struggling with trying to manage the flow of disinformation. You have public officials particularly in Europe who are calling for an immediate stop to the kinds of disinformation and misinformation that are flowing through these platforms, with a recognition that they have an incredible amount of harm that can be generated from them.

Of course in the EU, particularly in Germany, there’s such a heightened awareness about the relationship between hate speech and what led to the Holocaust. And so we have different conceptions about freedom of speech than exist in other parts of the world. And I think that maybe we could learn from other places about that, but that’s again another topic for another day.

So the last piece I want to give you and then we’ll open up for some conversation. Here we have the case of Dylan “Storm” Roof. Many of you know Dylan Roof was a 21-year-old white nationalist who opened fire on unsuspecting African-American Christian worshipers at Emanuel African Methodist Episcopal Church in the summer of 2015. I won’t go a lot into the backstory, but this is a site that’s not chosen in vain. This has been kind of a site of radical resistance of white supremacy and of struggle. A site for the organizing and struggle for civil rights and human rights and recognition of African-American people, black people in the United States.

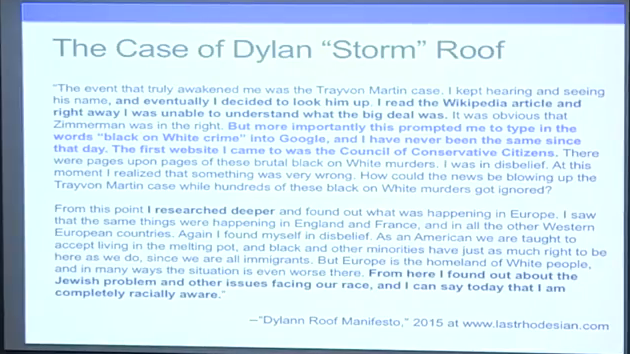

And so Dylan Roof, after the murders—immediately many researchers are turning to the Web and trying to make sense of what’s happening here. And I wrote a whole chapter in the book about this phenomenon. Within about twenty-four hours, someone on Twitter found Dylan Roof’s kind of online diary at “The Last Rhodesian,” and this was the part of his diary that jumped out to me. He says,

The event that truly awakened me was the Trayvon Martin case. I kept hearing and seeing his name, and eventually I decided to look him up. I read the Wikipedia article and right away I was unable to understand what the big deal was. It was obvious that Zimmerman was in the right. But more importantly this prompted me to type in the words “black on White crime” into Google, and I have never been the same since that day. The first website I came to was the Council of Conservative Citizens. There were pages upon pages of these brutal black on White murders. I was in disbelief. At this moment I realized that something was very wrong. How could the news be blowing up the Trayvon Martin case while hundreds of these black on White murders got ignored?

From this point I researched deeper and found out what was happening in Europe. I saw that the same things were happening in England and France, and in all the other Western European countries. Again I found myself in disbelief. As an American we are taught to accept living in the melting pot, and black and other minorities have just as much right to be here as we do, since we are all immigrants. But Europe is the homeland of White people, and in many ways the situation is even worse there. From here I found out about the Jewish problem and other issues facing our race, and I can say today that I am completely racially aware.

Dylan Roof Manifesto, 2015 at www.lastrhodesian.com [presentation slide; emphasis from slide]

Now, one of the things that’s interesting is that when we try to replicate the search of “black on white crime,” the Council of Conservative Citizens kind of came up again and again. The Council of Conservative Citizens, according to the Southern Poverty Law Center, is characterized as vehemently racist, alright. You could think of it as the online equivalent to the White Citizens’ Council, which was…when you were as racist as say the KKK, but you were say a mayor, or a judge, or an assembly member, you couldn’t really be in the KKK but you could be in the White Citizens’ Council.

So the Council of Conservative Citizens is like a… You know, you were just in an interest group that cared about conservative values and the interests of white communities. But you weren’t like a night rider, a terrorist, riding out and lynching people, let’s say. Those were kind of the distinctions between the White Citizens’ Council and the KKK. The Council of Conservative Citizens, if you look at their site is really what Jessie Daniels calls a cloaked web site in her great book Cyber Racism.

So, what you don’t get when you do a search on “black on white crime” for example, you don’t get any information that tells you that this is a white supremacist red herring. That this is a phrase that’s used by white supremacists as an organizing kind of moniker. You also don’t get FBI statistics that show you that the majority of murders happen within community. So while we’re very familiar with a phrase like “black on black crime,” the truth is that most white Americans are killed by other white Americans. So I guess we either have to take black on black crime out of our vocabularies or we have to add white on white crime, to make sense of these kinds of phenomena.

You also don’t get access to any kind of black studies literature, or any scholarly kind of framings of what does this mean and what do these kind of movements mean, how are they characterized? Think back to the way the public talks about…in the Pew study. You think that what you get on the first page is fair and accurate, credible and trustworthy. And here you have Dylan Roof who’s engaging with Google, and maybe we could argue a similar way.

I’ll just say that when I was looking for these kinds of stereotypes and trying to make sense of them, our field is not off the hook. I looked for black on white crime in fact in the UCLA library—this is when I was at UCLA.

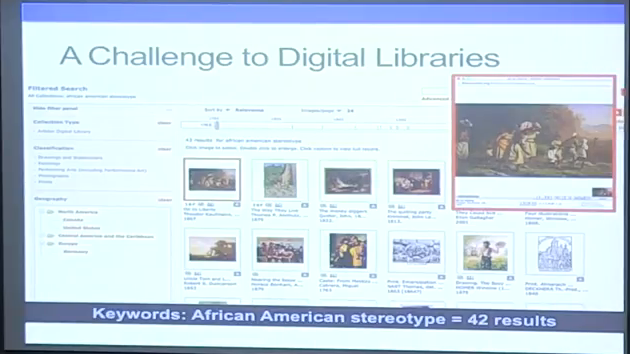

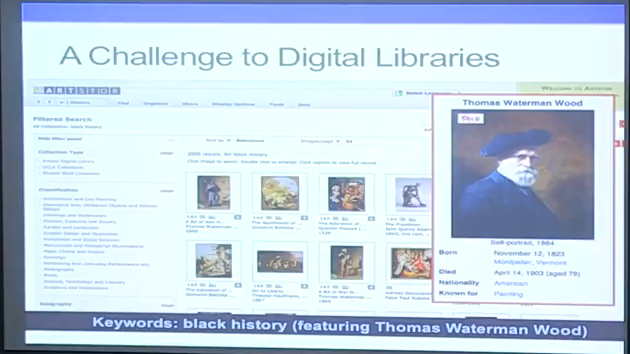

And you know, it’s a challenge. Because if you look for let’s say “racist imagery” like this, very difficult to find. In fact, when I was looking for it, here I am looking for “black stereotypes” in Artstor, which is our largest digital collection of images in the library. Apparently there are only six black stereotypes available, according to Artstor.

Now, we know that’s not true. So now I’m trying to think about well what’s the metadata? What’s happening in the way in which ideas are being characterized. And I thought, well librarians…they’re youngish, maybe they say African-American instead of black. That must be it. That must be the difference.

Except that now we only have forty-two results, and in them we’re getting paintings, oils on canvases…here’s a picture by Theodore Kaufmann, who was a German painter, painting post-Civil War. So that’s not exactly the kind of stereotype—I’m not even sure if that is a stereotype.

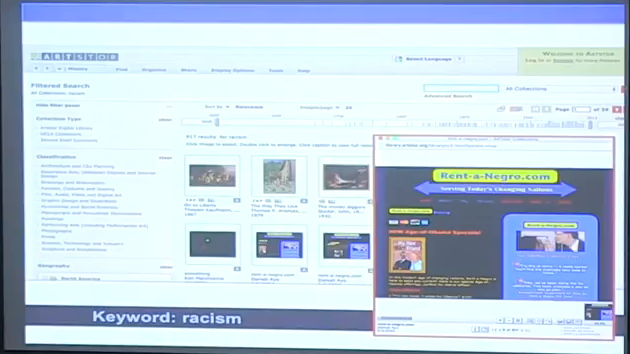

So I thought well let me look on racism again. Here’s racism. In racism, we have images, screenshots of a really phenomenal satirical web site that used to be up called Rent-a-Negro but is now down because Damali Ayo, who was the artist who created this web site right after President Obama was elected— You know, this was like a satire about liberal white people who were all like, “I voted for Obama because I have black friends,” and then they have to produce the black friend at a dinner party and couldn’t? And so they could go rent a Negro. And so this was like a really great, funny web site about that particular phenomenon of kind of liberal white racism. But that work, which is really clearly about anti-racist discourse is cataloged here under racism. It’s just interesting.

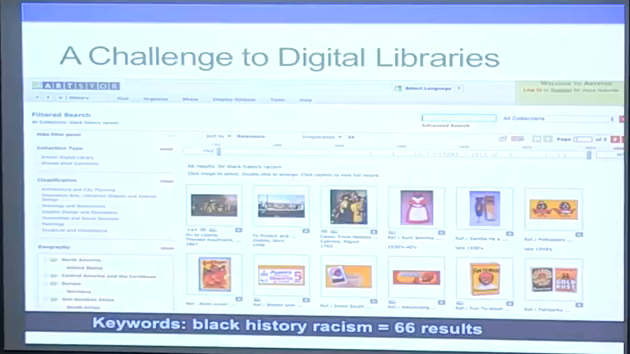

Now I’m looking and I finally start to find some of this racist memorabilia of the United States, and it’s characterized kind of— You can find it under the words “black history [and] racism.”

Now, I find this interesting because some of my colleagues and I might argue that if we had been the catalogers, maybe we would have characterized this as white history. Again, a way of thinking and interpreting with different lenses about what’s happening in this particular phenomenon.

“Black history,” just on its own without “racism” gives us back to good old Thomas Waterman Wood and his paintings. And so again we have a lot of work to do. We have this conception that out there in the commercial search spaces it’s terrible or we kind of know especially in our field. But I think we have to also interrogate our own information systems and the way in which these kinds of racialized discourses are produced, reproduced, and go unchallenged.

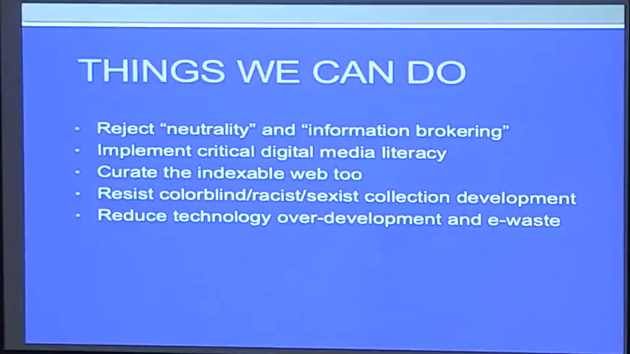

Some of the things I think we can do, I think one of the things we have to do is really reject the idea that our field is neutral and that we’re simply information brokers. We have an incredible responsibility. You know, I often think what it would be like if we centered ethnic studies and gender studies at the epicenter of information schools, or at the epicenter of computer science programs. I tell my computer science students, for example, who come and take classes with me, which…you know, you can imagine how hard that is for them sometimes? And they say, “No one in four years has ever talked to me about the implications of the work that I’m doing. I haven’t really ever thought about these things ever.”

And I say to them, “You can’t design technologies for society and you don’t know anything about society.” I just don’t know how that’s possible. So what would it mean if we re-centered the people rather than centering the technology and thought out from a different lens?

So I think I’ll leave it there and give us a couple of minutes for questions. Thank you.

Audience 1: I had a question in terms of how do you think the best approach is to combat these sort of algorithms? Because I know human moderation is one option, but also most of these algorithms are based off of frequency. And if things like pornography are the highest frequency things on the Internet, then how do you combat that in terms of [inaudible] your actual search?

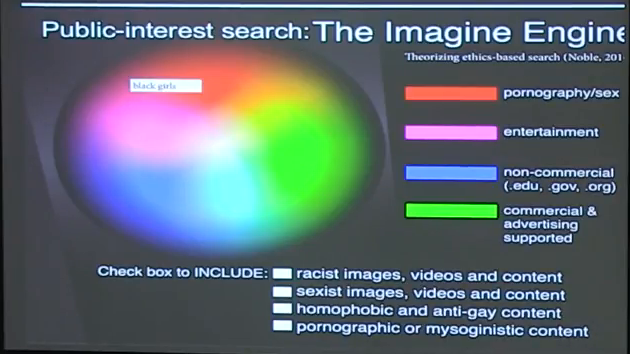

Safiya Noble: I anticipated that question, so don’t think I’m a weirdo that I just go right to the slide. So I kind of knew.

So, in the book I try to theorize a little bit around this. And one of the things that I do is I talk about what would it mean if we had different interfaces? Now, I don’t think there is a technical solution to these problems. So I want to say that first. I don’t think you can technically mitigate against structural racism and sexism. I think we have to also mitigate at the level of society. But we also have to be mindful if we’re in the epicenter of technology design, we should also be thinking about these issues.

So one of the things I do— Now, my parents were artists. So the color picker tool is actually a thing that’s always been in my life for a really long time. So I thought about it as a metaphor for search. What would it mean if I put my black girls box in the red light district of the Internet? I know what the deal is, right. This is…again, like it’s a counternarrative to ranking as a paradigm. Because here’s the thing. The cultural context of ranking is that if you’re number one… Like, they don’t make a big foamy finger that’s like “we’re number one million three hundred…” Nobody does that. If you’re number one you’re number one, that’s what matters in the cultural context of the United States, for example, or in the West, or in lots of places. So the first thing I would do is break out of the ranking orientation. Because that has a cultural context of meaning.

If we start getting into something like this, now we actually have the possibility of knowing that everything that we’re indexing is coming from a point of view. This is really hard to do, let me say by the way. I’ve been meeting, working with computer scientists. Super hard. We’re theorizing and trying to get money to experiment with this. And I’m always open to collaborations around this.

But what this does is this gives us an opportunity. Immediately as a user we know like oh, there’s many perspectives that might be happening around, or contexts within which a query could happen.

Also, what if we had opt in to the racism, the sexism, the homophobia? Because right now, for those of us who are in any kind of marginalized identity who are bombarded with that kind of content, which is many people, as the default, we don’t get to unsee it. We don’t get to be unaffected by it. So what if people actually had to choose that they wanted that? That also to me is a cognitive kind of processing. Rather than making it normative, we actually have to take responsibility for normalizing that in our own lives, in our own personal choices.

This is just one metaphor for how we could also reconceptualize the information environment. Again, this isn’t new. But it’s more like keeping at the forefront that the context within which we’re looking for things always has a particular bias. What I’m trying to do here is say there will always be a bias. Maybe there’s a commercial bias. Maybe there’s a pornographic… Maybe there’s an entertainment bias. Maybe there’s a government, kind of noncommercial… Maybe we’re in the realm of blogs and personal opinion and we know that. That’s different than doing a search on “black on white crime” and thinking that the first thing you get has been vetted by a multinational corporation that you trust, with a brand you trust. The Council of Conservative Citizens; that’s what I mean by it.

Audience 2: So I sort of…so to follow up on that a little because I think that notion of the ranking is kind of an interesting thing in here, right. Because, I mean I think people forget, right, before Google there were other search engines that often did not have the notion of taking you directly to the thing, but instead had the notion of showing you the range of stuff.

Noble: Yes. Totally.

Audience 2: So one part is the list as output. One part is the whole notion of the like, “I feel lucky” button; there is one result and I can take you directly to it. And so it may be that also one of the opportunities here to sort of recast things is recasting what one gets back as deliberately a wide trawl, and it’s like well you know, there’s ten things over here, and there’s a hundred things over there, and there’s a thousand things over there. And that’s the spread. So it might be not just the input but the output.

But the other thing I sort of want to think about a little bit with you is how we better give people a sense of the coupling between search and results, right. It’s not just that there’s a world of things out there, but obviously that it’s statistically affected by search patterns. That is, these things are attempting to respond to what it is that people are looking for, one way or another.

Noble: Yeah. I mean, the visualization dimensions of information to me are so important to the future of making sense of nonsense, too. I could talk for a while with you about this. Because I think the other dimension kind of of the mathematical formulation, that’s not present. I mean, that would be quite fascinating if you had a loading bar that was showing you a calculation is running that was legible also to say an eighth-grader. Maybe it was in text and not in kind of a statistical language. Because you know, those are also shortcuts and representations of ideas. And yet they’re framed I think as like a truth, a mathematical truth. And that’s also quite difficult to dislodge.

So I think that’s happening. I think that making those kinds of things visible is really important. Dislodging the idea even that there is a truth that can be pointed to. I mean, one of the things that is challenging that I’m always trying to share with my students is that they are acculturated to doing a search and believing there is an answer that can be found in .03 seconds is deeply problematic. Because we’re in a university where we have domains, and departments, and disciplines who have been battling over ideas and interpretations of an idea for thousands of years. And so now, that flies in the face of the actual thing we’re doing here in a university.

And so acculturating people to the idea that there’s an instant result, that a truth can be known quickly, and of course that it’s obscured by some type of formulation that is truthful, right, that’s leading them. A statistical process that’s leading them to something that’s more legitimate than something else, again gets us back into these ideas of who has more voice and more power, more coupling, more visibility online than others. And this goes back to my black girls who don’t have the capital for numbers to ever break through that. It’s hard. But these are the questions, for sure.

Audience 3: Thanks for the wonderful presentation. I really really appreciated it. So I have a question about the racist images button. I know that we’re not supposed to focus on the slide but I think it’s really awesome, this apparatus that you’re suggesting is kind of really exciting.

But I was just wondering like, the idea of a racist images filter, going back to your work on library search engines, I feel like that is part of the problem, right. Like it’s this idea when machines and algorithms [inaudible] communities of people trying to find something like racism, we get into this sort of push and pull where there are very very very almost comically wrong answers. So I was wondering what your thoughts are on that filter. Because I know there must be some deeper thoughts going on there.

Noble: Yeah. I mean, here’s where I think about the work of my colleague Sarah Roberts around content moderators. That this is a laborious practice. Who decides what’s racist? I was on a panel yesterday with a woman who used to be a content moderator for MySpace back in the day. And she’s like, “You know, the team was me,” she’s a black woman, “a gang banger, like a Latin King; a white supremacist; a woman who was a witch…” You know what I’m saying? She’s like, that was the early MySpace content moderation team in Santa Monica. She’s like, “And also we were drunk and high because it was so painful looking at the kind of heinous stuff that comes through the Web and curating it out of MySpace.”

Coupled with this, obviously, has to be a making visible of the labor that is involved in curating taste and making sense of where do we bottom out in humanity in terms of what gets on to the platforms that we’re engaging with. I mean there’s armies of people who are curating all kinds of things out. The most disgusting things you could ever imagine. Ras was saying yesterday, she said, “I couldn’t shake hands with anybody for three years because I couldn’t believe what human beings were capable of,” alright. So there was a great content moderation conference at UCLA yesterday for the last couple days.

You would have to also make people legible and visible, this type of work visible. Because machines cannot do that tastemaking work. Not yet, and probably not for a very long time. We’re nowhere near that. So I think that’s also what has to happen is…again in Rent-a-Negro, that someone who didn’t get it thought that was racist. But the black people who would have seen it would’ve been like, “That is actually hella funny.” See what I’m saying? So those are sensibilities that we can’t ignore, that can’t be automated. That’re also kind of political, quite frankly. And that certainly has to be part of how we reimagine the technologies that we’re designing and engaging with. I don’t think you can automate that. I think you absolutely have to have experts.

One of the things I say to the media all the time when they ask me about changing Silicon Valley and the stuff that comes out of it is I say you know, pair people who have PhDs and graduate degrees in black studies with the engineering team. Or PhDs in gender studies. Or American studies or something, right. People…humanists and social scientists. Because they have different kinds of expertise that might help us nuance some of these ideas and categorize. We don’t do that. And this is such…it’s…you know, it’s really difficult in our information schools when we don’t put these ideas at the center of thinking about curating human information needs. They’re way off in the margin right now.

Audience 4: So, like you said, technology can’t do really…much of the taste-making. They can’t really classify, right.

Noble: Yes.

Audience 4: So, the racist, misogynistic content that we see on search engines is pretty much a manifestation of how the Internet society thinks, right. Don’t you think they are complicit in perpetuating these stereotypes? Because Google only aggregates what’s popular, what’s frequent.

Noble: Yeah, but Google also prioritizes what it will make money off of. So there’s also a relationship between people who pay it through AdWords, in particular, to optimize. And SEO companies who will pay top dollar to have certain types of content circulate more than other types of content. And so this is where to be like, “Well Google’s like…” You know, if Google didn’t make any money off of trafficking content? We could maybe argue that it’s just like Sergey Brin says, it’s just the algorithm producing the algorithm’s results. Except it makes money off of the things that will be the most titillating, that will go viral, that people will click on. And it couples ads in direct relationship to its “organic results.”

So there’s no way… I mean, it’s making money on both sides of the thin line on the page. So I think that it’s disingenuous to say that Google’s not implicated in the kinds of results that we get. I think there’s a lot of research by others, too, that shows that they will always kind of propagate what they make money from first, alright. So again, this is where people who love Google hate me. Because I’m just going to say they’re also implicated in it. At a minimum, to what effect… You know, when you talk to people who— When you think about YouTube. You know, the beheadings in Syria, out, screened out by content moderators. Blackface? In. Why? Who decides? What are the values? Those are what’s at play in terms of the way it’s implicated, and the decisions that they make about acceptability. The acceptability of racism or misrepresentations of certain groups but not of others.

Further Reference

Langdon Winner, Do Artifacts Have Politics?

Cheryl L. Harris, Whiteness as Property

Hope A. Olson, The Power to Name: Representation in Library Catalogs