I’m responsible for the success of the School of Computer Science at Carnegie Mellon, a place with more than five hundred faculty and students earnestly working to make AI real and useful. One of the things that has swept through the whole college over the last eighteen months, and has really changed a lot of people’s direction, is the question of AI safety. Not just that it’s important, but what can we practically do about it?

As we’ve been looking at it, the problem breaks into two parts, which I want to talk about. Policy, where we need people, the folks in this room, to work together. And then verification, which is the responsibility of us engineers: actually showing that the systems we’re building are safe.

We’ve been building autonomous vehicles for about twenty-five years. Now that the technology has been adopted much more broadly and is on the brink of being deployed, our earnest faculty who’ve been looking at it are really interested in questions like this: a car suddenly detects an emergency, because an animal has just jumped out in front of it. There’s going to be a crash one second from now. The human nervous system can’t deal with that fast enough. What should the car do?

We’re really excited, because we’re writing code that, in general, is going to save a lot of lives. But there are points in the code, and our faculty have identified them, where they have to put in some magic numbers.

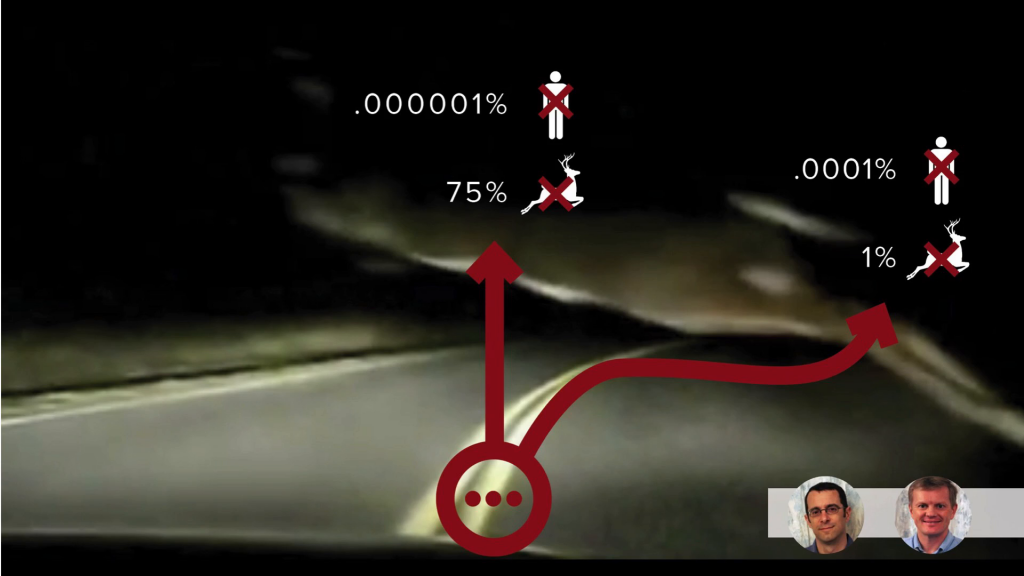

For example, if you’re going to hit an animal, should you go straight through it, almost certainly killing it but with perhaps only a one in a million chance of hurting the driver? Or should you swerve, as most of us humans do right now? Even if the car swerves very well, there’s perhaps a one in a hundred thousand chance of hurting the driver, but the animal is probably saved. Someone has to write down that number: how many animals is one human life worth? Is it a thousand, a million, or a billion? We have to get that from you, the rest of the world. We cannot be allowed to write it ourselves.
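To make the role of that number concrete, here is a toy expected-harm calculation using the two probabilities quoted above. It is a minimal sketch under simplifying assumptions that are not from the talk: going straight always kills the animal, swerving always saves it, and a harmed driver is weighed as a hypothetical `human_weight` animals.

```python
# Toy model of the swerve-or-go-straight decision.
# Assumptions (hypothetical, for illustration only): going straight kills the
# animal with certainty, swerving saves it with certainty, and harm is counted
# in "animal units", with one harmed driver weighted as `human_weight` animals.

P_HURT_STRAIGHT = 1e-6   # one in a million, as quoted in the talk
P_HURT_SWERVE = 1e-5     # one in a hundred thousand, as quoted in the talk


def expected_cost(p_hurt_driver: float, animal_dies: bool, human_weight: float) -> float:
    """Expected harm of an action, measured in animal units."""
    return p_hurt_driver * human_weight + (1.0 if animal_dies else 0.0)


def choose_action(human_weight: float) -> str:
    """Pick whichever action has the lower expected harm."""
    straight = expected_cost(P_HURT_STRAIGHT, True, human_weight)
    swerve = expected_cost(P_HURT_SWERVE, False, human_weight)
    return "straight" if straight < swerve else "swerve"


def break_even_weight() -> float:
    """Solve P_HURT_STRAIGHT * w + 1 == P_HURT_SWERVE * w for w."""
    return 1.0 / (P_HURT_SWERVE - P_HURT_STRAIGHT)


if __name__ == "__main__":
    print(f"break-even weight: {break_even_weight():,.0f} animals per human")
    for w in (1e3, 1e6, 1e9):
        print(f"weight {w:>13,.0f} -> {choose_action(w)}")
```

With these figures the break-even weight is about 111,000 animals per human. Below it the sketch swerves and above it the sketch goes straight, so the choice between a thousand and a million is exactly what flips the car’s behaviour.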

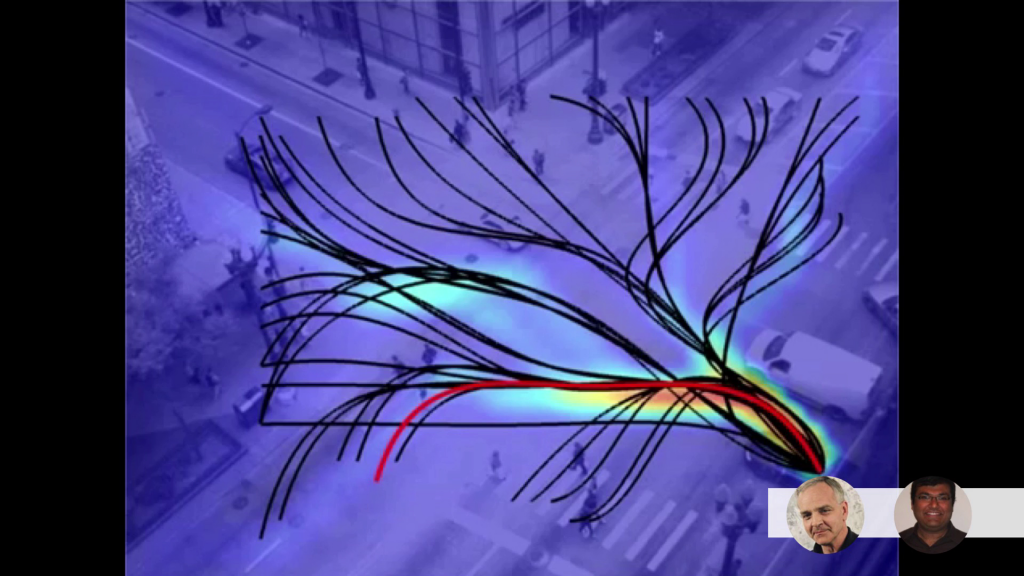

This is really important. Two of our other faculty have pushed on understanding the potential movements of dozens of pedestrians and vehicles at a busy intersection, so that the system can pull the kill switch if someone is about to suffer a serious injury. If that goes off all the time, it will be unacceptable. If it never goes off, we won’t be saving lives. Someone’s got to put in the number.
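The kill-switch dilemma is a threshold choice. Below is a minimal sketch, assuming the motion model produces an injury-risk score for each situation and the switch fires when that score reaches a threshold. The eight scored situations are invented purely to show the tradeoff; they are not measurements from any real system.

```python
# Toy illustration of the kill-switch threshold tradeoff.
# HYPOTHETICAL_EVENTS are invented values, not data from any real system.
from typing import List, Tuple

# (predicted injury risk from a motion model, whether an injury would really occur)
Event = Tuple[float, bool]

HYPOTHETICAL_EVENTS: List[Event] = [
    (0.02, False), (0.10, False), (0.35, False), (0.40, True),
    (0.55, False), (0.70, True), (0.85, True), (0.95, True),
]


def evaluate(threshold: float, events: List[Event]) -> Tuple[int, int]:
    """Count (false alarms, missed injuries) when the switch fires at risk >= threshold."""
    false_alarms = sum(1 for risk, injury in events if risk >= threshold and not injury)
    missed = sum(1 for risk, injury in events if risk < threshold and injury)
    return false_alarms, missed


if __name__ == "__main__":
    # A threshold of 0.0 always fires; a threshold above 1.0 never fires.
    for threshold in (0.0, 0.3, 0.5, 0.8, 1.01):
        fa, miss = evaluate(threshold, HYPOTHETICAL_EVENTS)
        print(f"threshold={threshold:.2f}  false alarms={fa}  missed injuries={miss}")
```

At a threshold of 0.0 the switch fires in every situation, so all four non-injuries become false alarms; above 1.0 it never fires and all four injuries are missed. Every threshold in between trades one kind of error against the other, and deciding which trade is acceptable is the policy number in question.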

So that’s policy, and my real urgency here is to ask for help making sure we all get this policy in place so we can start saving lives. After policy, the job comes back to us, the engineers, to do verification. This is an area of AI that is exploding because it’s so important.

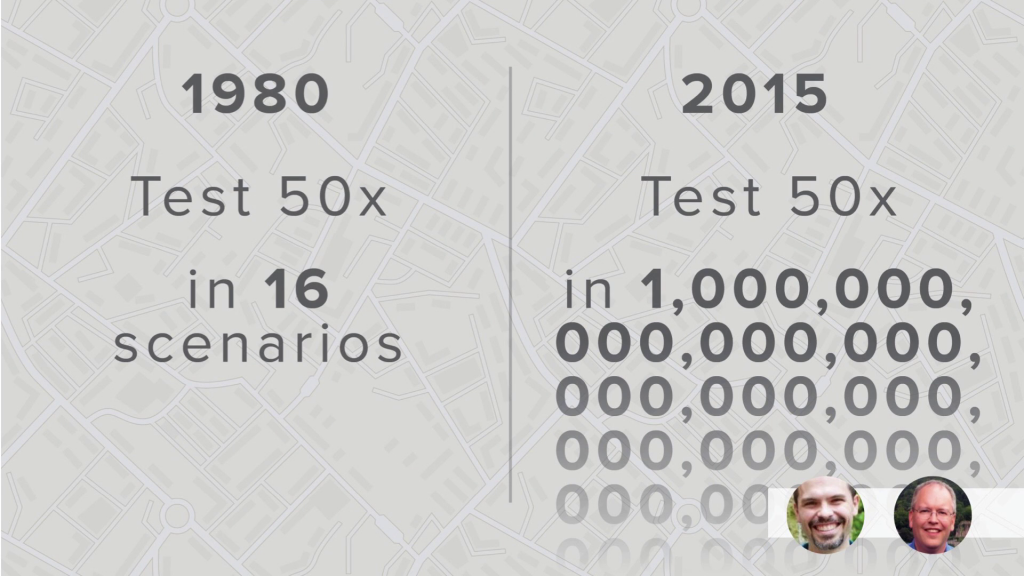

In the old days, with non-autonomous systems like regular cars, you had to crash-test them in maybe fifty different tests using about eight cars each. Now, with an autonomous system that has a combinatorial space of possible lives it could live, the testing problem seems impossible. Some of our faculty have really been looking at this question, for example in helping the Army test autonomous convoys that travel through Iraq. There’s a new kind of computer science available to help. It’s almost like solving a game of Mastermind: quickly figuring out the vulnerabilities, the areas where the autonomous system is in most danger.
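The talk doesn’t say which technique the group uses. One widely used idea in this space is search-based falsification: run a test, use how close it came to failure to choose the next tests, and home in on the worst cases instead of sampling blindly. The sketch below applies the cross-entropy method to a deliberately simple, hypothetical braking model; the speed and distance ranges, `REACTION_TIME_S`, and `DECEL_MS2` are all assumptions, and real convoy testing uses far richer simulators.

```python
# Sketch of search-based falsification using the cross-entropy method (CEM).
# The vehicle model, parameter ranges, and constants are all hypothetical.
import random
from typing import List, Tuple

Scenario = Tuple[float, float]  # (speed in m/s, obstacle distance in m)

SPEED_RANGE = (5.0, 30.0)
DISTANCE_RANGE = (10.0, 100.0)
REACTION_TIME_S = 0.2   # hypothetical sensing + actuation delay
DECEL_MS2 = 6.0         # hypothetical braking deceleration


def stopping_margin(speed: float, distance: float) -> float:
    """Metres to spare when stopping; a negative value means a collision."""
    stopping_distance = speed * REACTION_TIME_S + speed ** 2 / (2 * DECEL_MS2)
    return distance - stopping_distance


def clip(x: float, lo: float, hi: float) -> float:
    return max(lo, min(hi, x))


def sample(rng: random.Random, means: List[float], stds: List[float]) -> Scenario:
    """Draw one scenario from independent Gaussians, clipped to the valid ranges."""
    speed = clip(rng.gauss(means[0], stds[0]), *SPEED_RANGE)
    distance = clip(rng.gauss(means[1], stds[1]), *DISTANCE_RANGE)
    return (speed, distance)


def falsify(iterations: int = 20, samples: int = 100, elite: int = 10,
            seed: int = 0) -> Tuple[Scenario, float]:
    """Adaptively search for the scenario with the smallest safety margin."""
    rng = random.Random(seed)
    ranges = (SPEED_RANGE, DISTANCE_RANGE)
    # Start with a broad distribution centred on the middle of each range.
    means = [(lo + hi) / 2 for lo, hi in ranges]
    stds = [(hi - lo) / 2 for lo, hi in ranges]
    best: Scenario = (means[0], means[1])
    best_margin = stopping_margin(*best)

    for _ in range(iterations):
        batch = [sample(rng, means, stds) for _ in range(samples)]
        # Rank this round's tests from most to least dangerous.
        batch.sort(key=lambda s: stopping_margin(*s))
        if stopping_margin(*batch[0]) < best_margin:
            best, best_margin = batch[0], stopping_margin(*batch[0])
        # Refit the sampling distribution to the most dangerous scenarios.
        elites = batch[:elite]
        for i in range(2):
            vals = [s[i] for s in elites]
            means[i] = sum(vals) / elite
            var = sum((v - means[i]) ** 2 for v in vals) / elite
            stds[i] = max(var ** 0.5, 1e-3)  # floor keeps a little exploration
    return best, best_margin


if __name__ == "__main__":
    (speed, distance), margin = falsify()
    print(f"worst scenario found: speed={speed:.1f} m/s, "
          f"distance={distance:.1f} m, margin={margin:.1f} m")
```

Each round refits the sampling distribution to the ten lowest-margin scenarios, which is the Mastermind-like feedback loop: earlier results decide where to test next. In this toy model the worst case is simply the highest speed at the shortest distance, but the same loop applies unchanged when the simulator is a black box whose failure regions are not known in advance.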

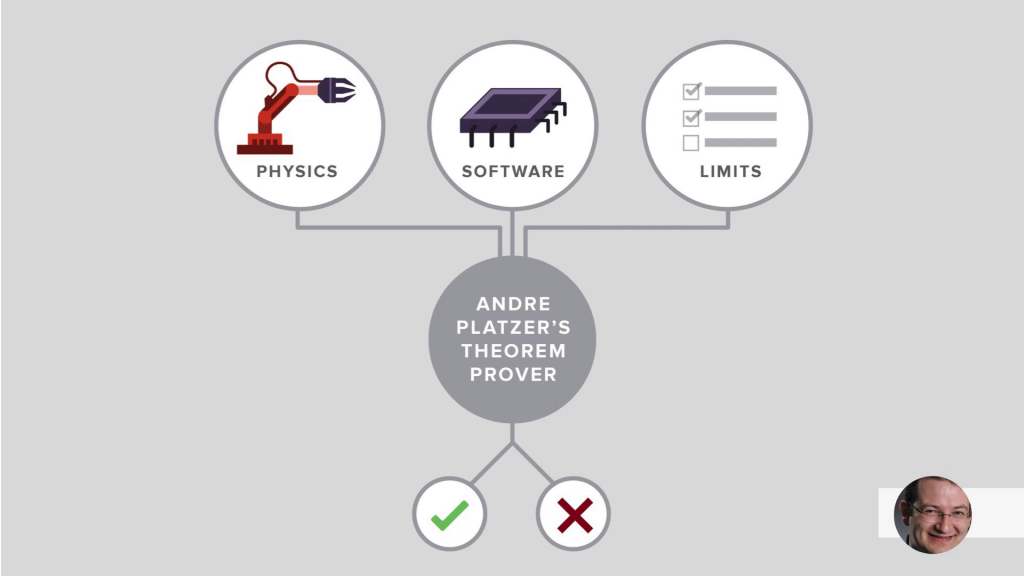

Now, interestingly, academically, the testing-based people are absolutely at war with another group, exemplified by André Platzer, who uses formal proof methods. They say that if you’re going to deploy an autonomous system, it must come with a mathematical proof of safety. For example, when he recently tried to use his proof system to show that the new aircraft collision avoidance system was safe, the proof failed, and they discovered some cases where the system was actually going to be a disaster. So now the system is being updated.
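As a much-simplified illustration of what a mathematical safety argument looks like (not Platzer’s actual method, nor the collision avoidance case), assume a straight road, a stationary obstacle at distance $d$, initial speed $v$, a reaction delay $\tau$ at constant speed, and then constant braking deceleration $b > 0$. Measuring $x(t)$ from the point where braking begins:

$$
\begin{aligned}
x(t) &= v\,t - \tfrac{1}{2} b\,t^2, \qquad \dot{x}(t) = v - b\,t \ge 0 \quad \text{for } 0 \le t \le t^* = \tfrac{v}{b},\\
x(t^*) &= \frac{v^2}{b} - \frac{1}{2}\,\frac{v^2}{b} = \frac{v^2}{2b},\\
d &> v\,\tau + \frac{v^2}{2b} \;\Longrightarrow\; \text{the vehicle stops before reaching the obstacle.}
\end{aligned}
$$

Because $x(t)$ is nondecreasing up to $t^*$, the car never travels farther than $v^2/(2b)$ once braking starts; adding the distance $v\tau$ covered during the reaction delay gives the final condition. A proof-based approach asks for an argument like this, machine-checked and over a realistic model, covering every behaviour the system can exhibit.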

That’s the whole story: policy and verification. I want to finish by speaking to four groups of stakeholders, with my question and message for each.

Policymakers, we need your help. Most AI labs around the world want you to come and visit us. Spend time with the scientists, who want to explain to you the tradeoffs they’re worried about.

If you’re a company executive right now, and one of your divisions says, “We’ve got a new autonomous system we want to field,” you have to ask them what they’re doing about testing, because they cannot be using 1980s testing technology.

We scientists and engineers have to take this really seriously, or we will be shut down. That would be tragic, because we’re doing this work in the belief that we can save tens of millions of lives over the next few years.

Finally, for entrepreneurs: the service industry of testing autonomous systems has huge growth potential, and I very much encourage it.

Thank you.