Education has remained largely unchanged for millennia. In any classroom, you see a set of students gathered around a teacher who’s writing on the board, or maybe now we’ve added a PowerPoint deck. But, as in many other fields that have been slow to change, the data revolution is coming for education.

Now, many people think that this revolution will come through MOOCs, or massive open online courses, in part because computers can really easily collect data on all of the sorts of things that students do. So, where they click and when, what they type into a discussion forum, whether they click pause or play on a video.

However, this data is seriously impoverished with respect to what students actually think and do in order to learn. And despite the hype, MOOCs aren’t the revolution that we’ve been told they are. On the other hand, real classroom data has enormous potential, if only we could get it. And that’s where connected sensors come in. This is one of the things that my lab is working on.

So, rich data is constantly being produced in the classroom. Student engagement and emotions are among the strongest predictors of learning. And facial expressions are the data that we use to understand this. So, does the student look frustrated, confused, bored? Are they pleased, excited, or surprised about something? We can also use voice data. So everything that the teacher says, everything that the students say, this is very important data that tells us about learning. So, what types of questions [is] the teacher asking? Are the students deeply collaborating on a topic? Are they challenging one another’s ideas in an interesting way? All of these are really important pieces of information about how students learn.

Now, normally the teacher is the only one who acts as a “sensor” in the classroom. So, they’re constantly collecting all of this data and frantically trying to make decisions in the moment about what to do. And then maybe at night they can go home and take a minute to reflect by looking at the assignments that their students have produced. But until now, it’s been incredibly difficult to get a real understanding of what’s happened moment-to-moment in the classroom. This is where connected sensors come in.

So, using things like depth cameras or microphone arrays, we can collect all of these data on the student and teacher actions that I’ve been telling you about, and then use machine learning techniques to understand and deepen our knowledge of what works for teaching and learning. So, you could imagine that such a system would be like a personal informatics system. You might even be wearing a tracker for this type of info on your wrist right now. So, we can right now track the number of steps that we take, the calories in the food that we eat, or other sorts of information about our daily lives.

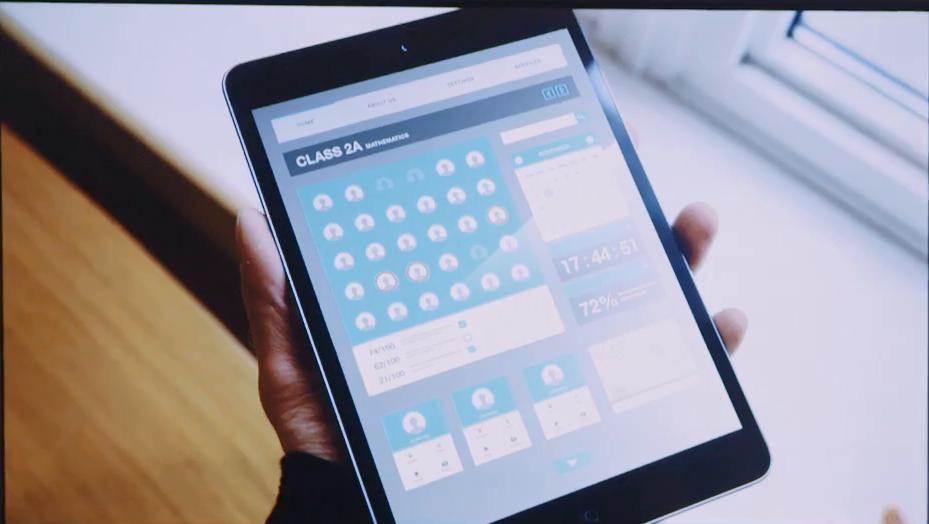

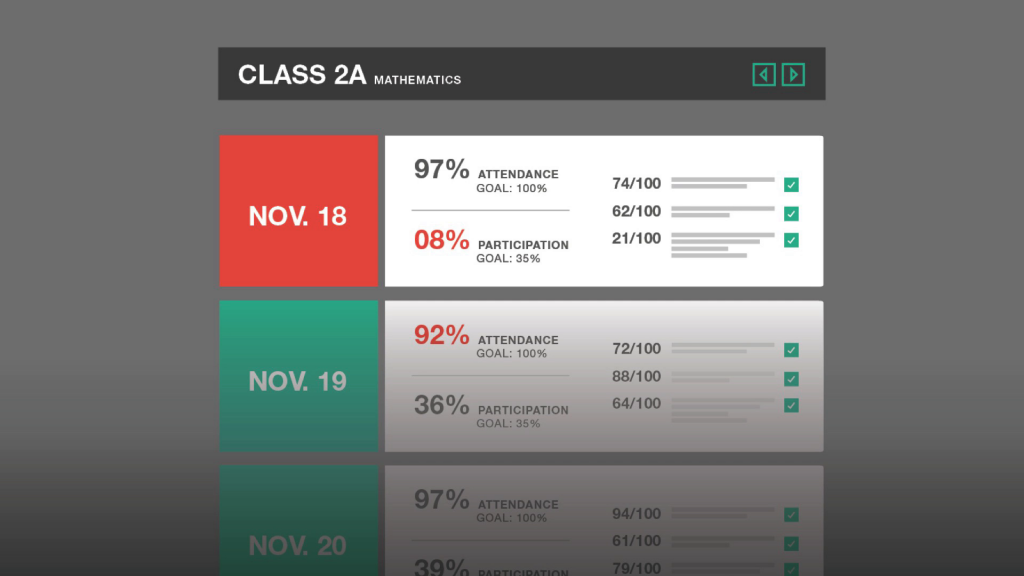

Now, similarly to how the systems tell you about your weight loss over time, imagine that we can now present the teacher with summaries of the most important things that happened in their class that day. So, we could present this to them, and the teacher could reflect, set their goals for moving their class forward, and monitor their own progress towards these goals.

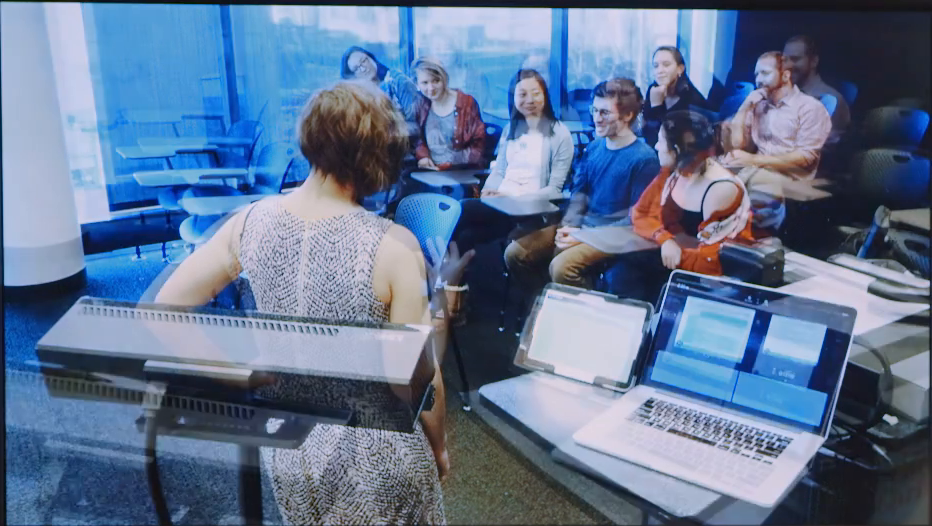

Now, for me, even more exciting than a dashboard is the ability to use these connected sensors, in real-time, in the classroom, in order to improve teaching and learning. So, my lab at Carnegie Mellon is right now automatically collecting all of this sort of data in the classroom, and displaying it to our instructors in peripheral displays.

And of course, this requires some very careful design to make sure that it’s a benefit to learning and not a distraction. But as you can see here, we’re able to use our own university classrooms as a living laboratory in order to test the types of techniques that might actually support learning.

So here we’re flashing the screen red when the teacher’s been talking for too long, and they might want to ask a question. But then they can go back after class and look at all of the data that’s been collected and say, “Boy, next class I think I wanna try to get more students in the class to participate. And maybe I can get them to talk earlier on in the class instead of talking on and on myself.”

Now, I’ve said that MOOCs aren’t the answer, but we can in fact use these same techniques to improve learning online as well. So, we can use the camera, the microphone, that’s already on your computer to detect who’s working. Are they frustrated or confused? And then we can use the same sorts of data to improve the feedback that we’re giving these students.

Of course, some risks come with this type of data, but isn’t it worth it to share your data on whether you’re the quiet one in class in order to give the next generation of teachers the opportunity to give everyone in the room a chance to speak? And I think that parents and even students themselves could benefit from having this information.

Now, the White House and many other agencies are currently making recommendations about just how important data is going to be for improving education. However, in the rush to move to online learning, we shouldn’t forget about the rich data that we can get from face-to-face classrooms and how this can help us improve education everywhere.

Thank you.

Further Reference

Amy Ogan’s home page.