Christian Sandvig: Next up we have Panagiotis “Takis” Metaxas. I don’t know how I did there with my Greek. He is a professor of computer science at Wellesley, and a visiting scholar at the Center for Research on Computation and Society. And his area of research is about misinformation propagation and electoral predictions. So, I actually was reading about him and I saw that he said that he loves social media in the Wellesley newsletter. He says he loves using social media, but Facebook is uninteresting chitter-chatter. So only Twitter.

Panagiotis Metaxas: Only Twitter for me, yeah.

Sandvig: Alright.

Metaxas: And as a matter of fact, I give a lot of— I don’t want to sound like an advertisement for Twitter. But Twitter’s playing the role of my collection of editors. Instead of going around like crazy trying to be informed about the important things, I have selected carefully the people that I want to be informed from, and I follow their tweets. So if you think you have something to tell me, you send me an email and you’ll tell me.

So, I did something crazy so let’s see how it works. Given the interest of the morning discussion, I tried to change my talk so that it feeds into the form of where the discussion is going. I was going to tell you a lot about how Web spammers and propagandists have a lot of things in common. And I would have told you a little bit about how the fear of propaganda can help you detect propaganda as well.

Graphs from Panagiotis Takis Metaxas and Eni Mustafaraj, From Obscurity to Prominence in Minutes: Political Speech and Real-Time Search, 2010

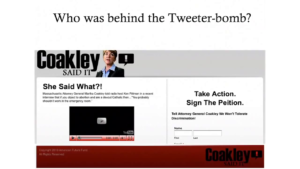

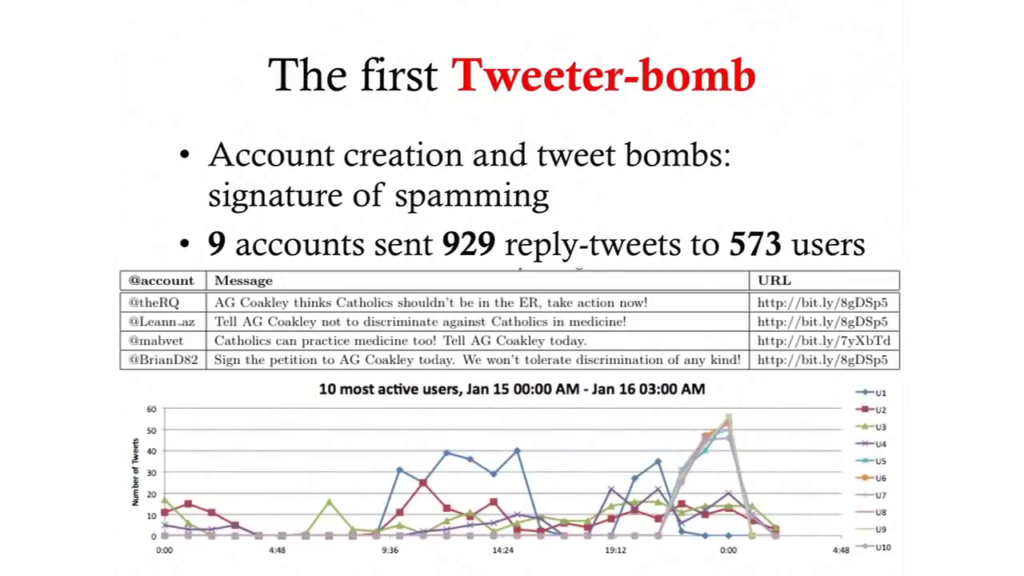

But I’ll skip that and tell you a few things about the first Twitter bomb, which my colleague and I found a couple of years ago. It was a case in which somebody was attacking the candidate Martha Coakley in the last Massachusetts elections. And I’m acknowledging Eni Mustafaraj, who’s sitting back there. We found out that it was actually easy to detect this kind of attack.

This is where they were trying to send their people. And the message was signed by the American Future Fund. We had no idea who was behind these attacks. Turns out it was the same mayoral run Republicans who were also behind the attack on Kerry. So pretty interesting story when we found it. And as Fil said, it would be nice if we had found it a little earlier, not like maybe weeks later, after the elections were done. But of course if you want your attack to be effective, you’d better launch it just before the elections. Elections are awfully important and you cannot let them down. That led us into the current project that I’ll tell you about.

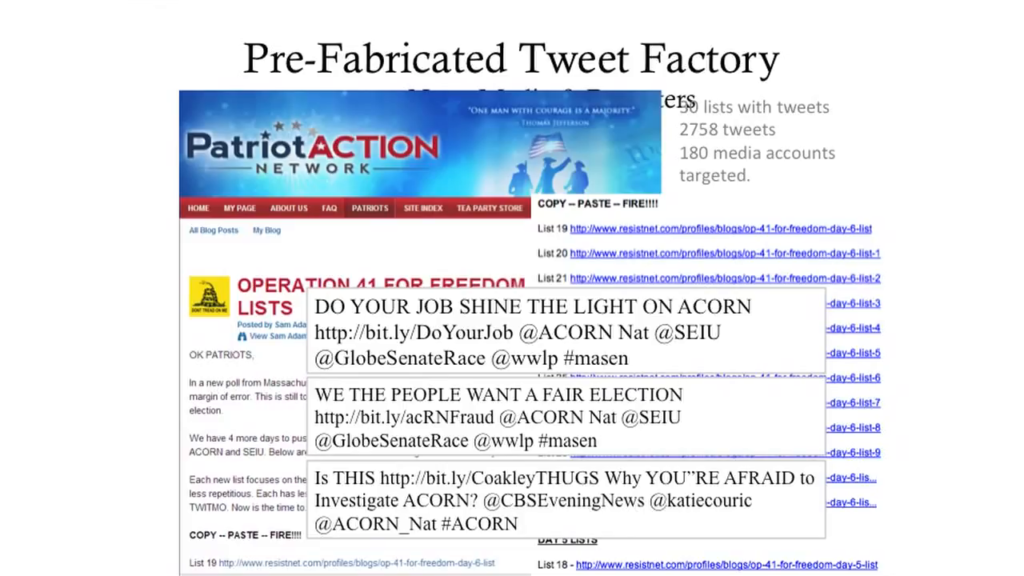

The other thing that we found also was another prefabricated tweet factory. So if you wanted to support your cause by attacking particular reporters, you would go to this site, you would find a collection of tweets and then all you would have to do would be to copy and paste, copy and paste, fire. And instead of trying to tell the general public about that, you were going to tell about particular news reporters, news organizations, about your message, and you would ride in the Massachusetts senatorial race as a way of getting the message out.

So, I will close this parenthesis because I want to tell you a little more about what we’re doing now. The discussion of this session has to do a lot with trying to create tools for individuals. And here is something that I know that people from Ushahidi are doing, so I had a little discussion with Patrick before about that.

What do I care about? Have you ever heard of social theorems? I hope not. Because I just thought of this term as a way of making a point. What’s the point? We treat our knowledge, what we…you know, try to believe as fact; we treat them as theorems the same way that we are looking to mathematical theorems to make sense of the world around us. But the problem is they are not the same.

There is a big difference between the real theorems and the social theorems. You know, social theorems are things like “There is no such thing as a free lunch.” Which you might consider to be true except you know, if you are a graduate student in a university and you will find lots of free lunch after every talk, right? But in general, it is roughly the case. Just not always. And compare it with F = m*a, which is something that no matter what, it will work, as long as you are in this universe.

We confuse natural laws with social behavior. So, we would love society to behave the same way as nature, but it doesn’t. For example, nature will not care if you did another experiment before, and it will not change its behavior the second time you do an experiment. Society will do that. And that’s a problem when you try to find exactly the same behavior time and again. Here I have the Joe Paterno and Whitney Houston deaths. The first one was a fumble. The second one you know, the news organizations were very careful not to repeat it so they were kind of slower as they were going.

Another bad thing, which my mother used to tell me all the time, is that the good guy does not always win. I’m sorry. But this doesn’t mean that you do not have to try every time to get the good things out.

Finally, just because you found a counterexample in a social theorem, it does not mean that the social theorem is wrong. Just because some of the things that society behaves like do not always work does not mean that we know nothing about society. We still know something about society. And we saw some of this earlier, for example Brendan Nyhan talked a little bit about best practices, credible sources, how to make more sense about all of these things.

So, what are social theorems that I want to tell you about, are things that we have discovered in the last five years—some of them—and what we hope is to put them into a personal tool that will help you make more sense as you’re receiving information. We’ll deliver your information with a little bit of trust value, and we’ll tell you whether you should be trusting this piece of information as it’s coming to you, and why. So, I’ll give you three of them today and I’ll give you some evidence about that.

So, the first one is that unedited retweets about political issues indicate agreement and reveal communities of like-minded people. It does not mean that every retweet means that kind of thing, but when you have political discussion, retweets—verbatim retweets—actually mean that “I totally agree with this guy.” Always? Not always, and I’ll show you an example. But most of the time. That’s the first social theorem.

The second one is that— It’s a very optimistic one. Given enough time and enough people’s attention, lies have short and questioned lives. That’s pretty optimistic. It doesn’t mean that every time lies will die. No, it doesn’t. If you look in world religions you will see some lies that have propagated forever. But there are other cases in which in this world we live in actually they can be detected.

And the last—which is not really a theorem but you know, what the heck—is that people with open minds and critical thinking abilities are better at figuring out truth than those without. Sounds like a duh but you know, with the discussion we’ve had in the morning it seems like we tend to believe that, oh, maybe there is nothing we can do. But it is not like that.

- Conover, M. D.; Ratkiewicz, J.; et al. Political Polarization on Twitter, Center for Complex Networks and Systems Research, 2011.

- Graph from Panagiotis Takis Metaxas and Eni Mustafaraj, From Obscurity to Prominence in Minutes: Political Speech and Real-Time Search, 2010

So some evidence. The first comes from the data we got when we gathered the Massachusetts senatorial elections. This is the retweeting pattern of a group of people that retweeted the most. This is about 1,000 people that retweeted about 14,000 times in seven days. You can see a way that they were divided, and actually they were divided automatically by an algorithm: if you retweet a lot, you get to be clustered together, otherwise you’re far away. The big group, by the way, is not a unified—if you look closer they’re individual retweets. There are three different groups there, some of them engage more with the other group; the others are not engaging at all. The people from Indiana, Fil, Mike, and Bruno there have found a very similar picture for the elections in late 2010.
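The talk does not name the clustering algorithm behind this picture. As a rough illustration of the idea only (people who retweet each other a lot end up together), here is a minimal sketch that swaps in a much simpler stand-in: keep pairs of users with at least a threshold number of retweets between them, then take connected components. The function name, the `min_retweets` parameter, and the sample data are all hypothetical, not from the study.

```python
from collections import Counter, defaultdict


def retweet_communities(retweets, min_retweets=2):
    """Group users who are linked by at least `min_retweets` retweets.

    `retweets` is a list of (retweeter, original_author) pairs.
    This is a stand-in for illustration, not the method used in the talk.
    """
    # Count retweets per unordered pair of users, ignoring self-retweets.
    pair_counts = Counter(
        frozenset(pair) for pair in retweets if pair[0] != pair[1]
    )

    # Keep only the strong ties as undirected edges.
    neighbors = defaultdict(set)
    for pair, count in pair_counts.items():
        if count >= min_retweets:
            a, b = tuple(pair)
            neighbors[a].add(b)
            neighbors[b].add(a)

    # Each connected component of the strong-tie graph is one community.
    seen = set()
    communities = []
    for user in sorted(neighbors):
        if user in seen:
            continue
        stack, component = [user], set()
        while stack:
            current = stack.pop()
            if current in seen:
                continue
            seen.add(current)
            component.add(current)
            stack.extend(neighbors[current] - seen)
        communities.append(sorted(component))
    return communities


# Hypothetical retweet log: (retweeter, original_author).
sample = [
    ("ann", "bob"), ("bob", "ann"),
    ("ann", "cat"), ("cat", "ann"),
    ("dan", "eve"), ("dan", "eve"),
    ("eve", "ann"),  # a single cross-group retweet, below the threshold
]
print(retweet_communities(sample))
```

With the threshold at 2, the single retweet between "eve" and "ann" is not enough to merge the two groups; lowering `min_retweets` to 1 joins them into one community.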

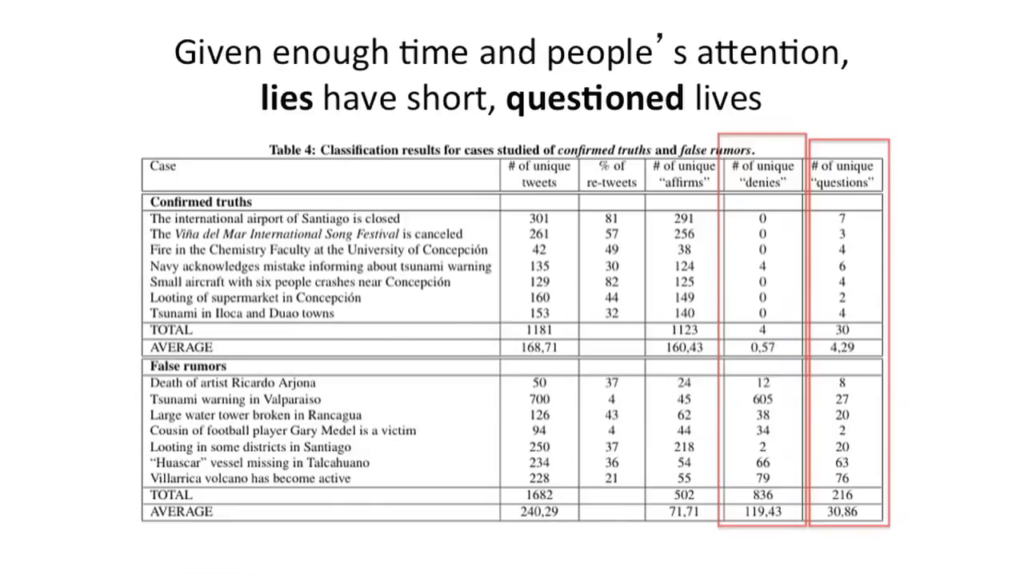

Graph from Marcelo Mendoza, Barbara Poblete, Twitter Under Crisis: Can we trust what we RT?

The second [theorem] is about giving enough people’s attention. Here are some results of a paper that checked to see how much misinformation—rumors—about a potential tsunami and earthquake problem in Chile in 2006, I think it was [the earthquake studied was in 2010], propagated and how far they propagated. The bottom part are the false rumors that were proven false eventually. The top part are the confirmed truths. These ones were questioned much more often, as you can see, and here is the number of different questions. It’s not like the truths were not questioned, just not as much by and large.
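The comparison described here can be sketched as a simple ratio: for each class of story, what share of the tweets about it question or deny it? The following is a minimal sketch under stated assumptions. The stance labels, the function name, and the sample tweets are hypothetical and invented for illustration; they are not the paper's data or its coding scheme.

```python
from collections import defaultdict


def questioning_share(tweets):
    """Return, per veracity class, the share of tweets that question or deny.

    `tweets` is a list of (veracity, stance) pairs, where veracity is a label
    such as "false_rumor" or "confirmed_truth" and stance is one of
    "affirm", "question", or "deny" (hypothetical labels).
    """
    totals = defaultdict(int)
    questioned = defaultdict(int)
    for veracity, stance in tweets:
        totals[veracity] += 1
        if stance in ("question", "deny"):
            questioned[veracity] += 1
    return {v: questioned[v] / totals[v] for v in totals}


# Made-up labeled tweets, only to show the shape of the computation.
sample_tweets = [
    ("false_rumor", "affirm"), ("false_rumor", "question"),
    ("false_rumor", "deny"), ("false_rumor", "affirm"),
    ("confirmed_truth", "affirm"), ("confirmed_truth", "affirm"),
    ("confirmed_truth", "question"), ("confirmed_truth", "affirm"),
]
print(questioning_share(sample_tweets))
```

A tool of the kind described in this talk could treat a high questioning share as one signal among several, not as a verdict on its own.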

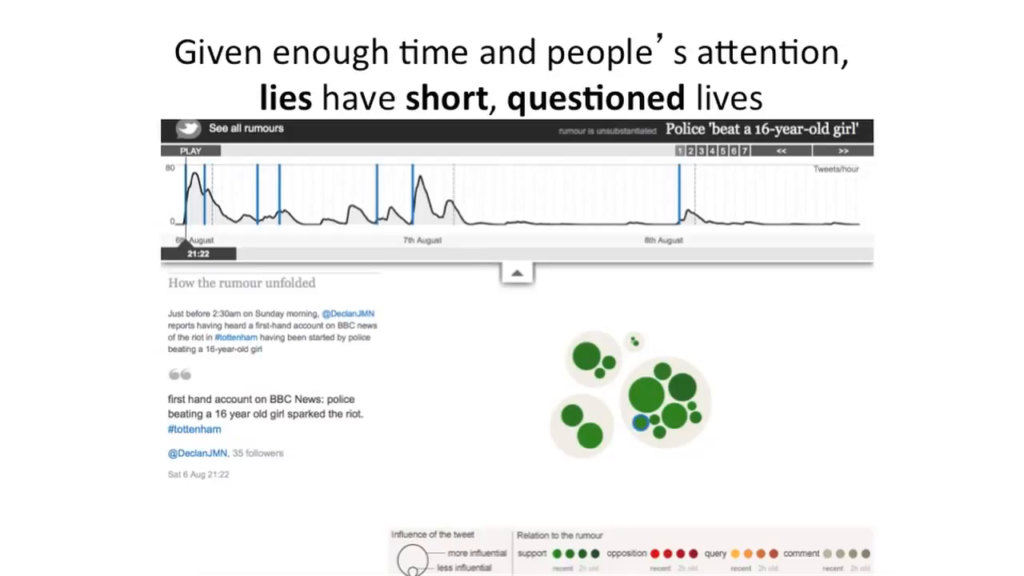

Another very interesting example is the London riots rumors that propagated. The guys at The Guardian have an amazing tool—you should visit and play with it. The pics there show how some attempts at propagating false information eventually died. They tried to revive but they did not live for long.

Wisdom from the ages. Here is “Time Saving Truth from Falsehood and Envy.” So we’re going along the same ways.

I need to stop, I know, so a little thing about open minds. The third part, the critical thinking. It’s not that everybody will actually be beaten by every piece of information. How does it go? You cannot fool all the people all the time, right? That’s something that happens. You cannot expect you will have a tool that you will just click and will give you the answer. What you really want is to be able to have technology that will give that information, but you will need to figure out the answer using your own brain. So education is darn important there.

Here is Rigas Feraios, I mentioned in the morning. And notice that he said whoever thinks freely. You want to be free in your thinking, but you want to be able to think as well. Thank you.

Further Reference

Misinformation and Propaganda in Cyberspace and Three Social Theorems by Panagiotis Takis Metaxas, and When the News Comes from Political Tweetbots by Eni Mustafaraj, on the Truthiness in Digital Media blog

Truthiness in Digital Media event site