Susan Crawford: Thank goodness Fil Menczer is here. He is a professor of informatics at Indiana University and was recently awarded a $2 million grant to build systems that can detect online persuasion campaigns.

Filippo Menczer: Alright, hi. I’m Fil Menczer from Indiana University, and I’m here to tell you a little bit about a few examples of truthy memes that we’ve uncovered with the system we have online. Please go and play with it: it’s truthy.indiana.edu. Mike Conover and Bruno Gonçalves are sitting over there, and they’re among the developers of this system. It’s a web site where we track memes coming out of Twitter, and we try to see whether we can spot some signatures based on the networks of who retweets what and who mentions whom.

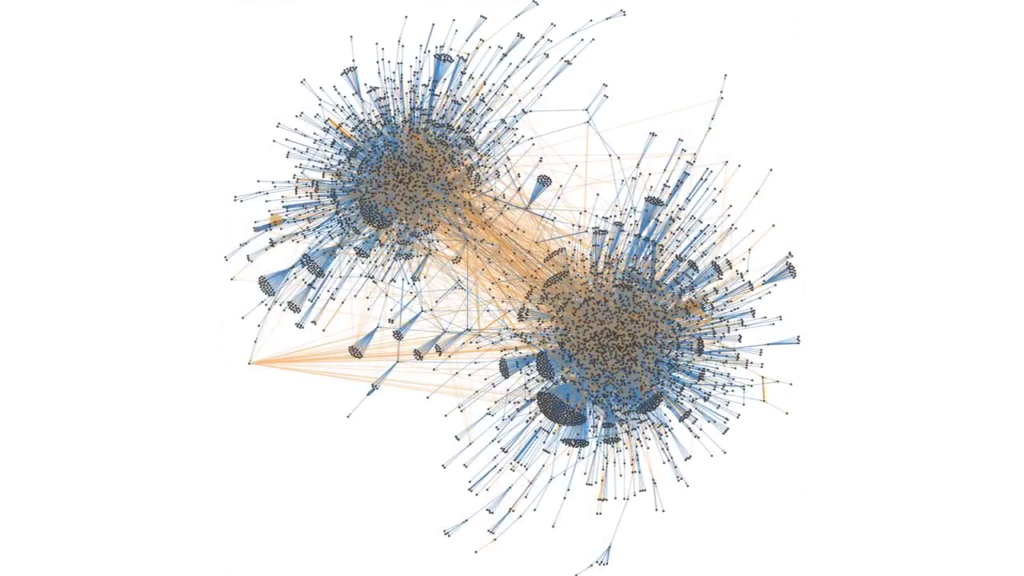

You can look at lots of different memes. For example, here’s GOP, which I want to highlight because it raises an issue that came up earlier: echo chambers. In a lot of memes that concern politics we observe this clearly bi-clustered, polarized structure, where people tend to retweet only other people they agree with. That’s interesting, but it’s not the theme of today.

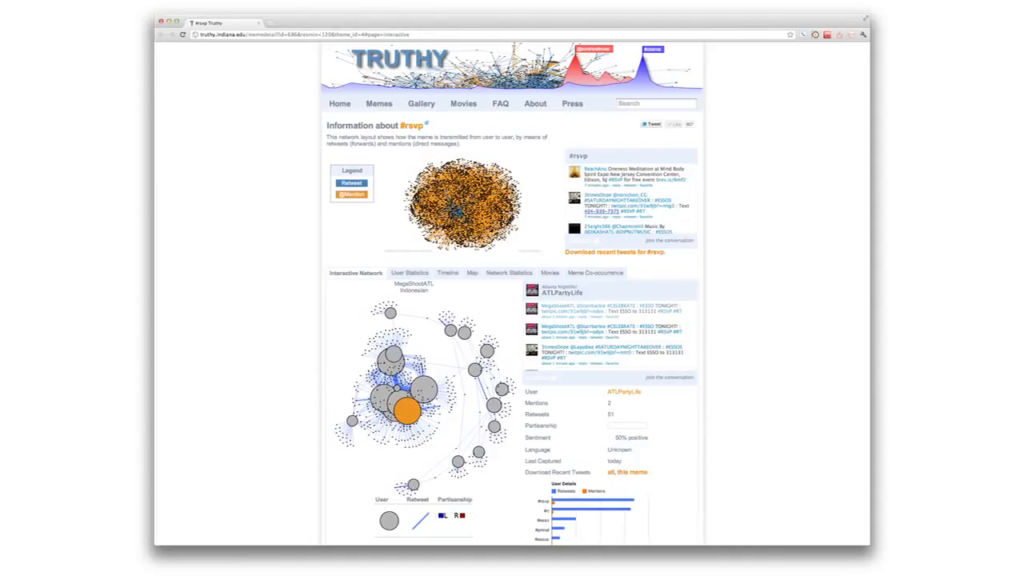

So let me just show you a handful of examples. Here’s one that Mike pointed out to me. It’s not in the realm of politics; we would probably put it in the spam category. It’s just a bunch of accounts promoting a particular club in Atlanta, I think. The dots are the people and the orange edges are the mentions. There’s a bunch of bot accounts that keep tweeting about events and mentioning other people. Eventually some of them retweet, and they use this to generate buzz about the club. So this is one kind of pattern that we observed.

Now let me show you a few patterns that we observed in the run-up to the last election, in 2010. Definitely truthy things. This one looks very different. It’s just two accounts, and that huge blue thing is one very thick edge between them, which means these two accounts kept retweeting each other. They were bot accounts that generated tens of thousands of tweets, all promoting one particular candidate: posting about this person’s blogs, articles in the press, web site, and so on.
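The “one very thick edge” signature can be approximated by counting retweets in each direction between every pair of accounts and keeping pairs that are heavy both ways. The sketch below is a minimal illustration, not the Truthy system’s actual method; the input format (a list of `(retweeter, original_author)` pairs), the function name `mutual_retweet_pairs`, and the threshold `min_each_way` are all hypothetical.

```python
from collections import Counter
from typing import Iterable


def mutual_retweet_pairs(retweets: Iterable[tuple[str, str]], min_each_way: int = 100):
    """Return (a, b, total) for account pairs that retweet each other at least
    min_each_way times in BOTH directions, heaviest pairs first.

    retweets: hypothetical (retweeter, original_author) pairs.
    """
    counts = Counter(retweets)  # (retweeter, author) -> number of retweets
    pairs = []
    for (a, b), ab in counts.items():
        if a < b:  # visit each unordered pair once; also skips self-retweets
            ba = counts.get((b, a), 0)
            if ab >= min_each_way and ba >= min_each_way:
                pairs.append((a, b, ab + ba))
    return sorted(pairs, key=lambda p: p[2], reverse=True)


# Two hypothetical bots amplifying each other, plus one ordinary user.
log = [("bot_a", "bot_b")] * 150 + [("bot_b", "bot_a")] * 120 + [("alice", "bot_a")] * 3
print(mutual_retweet_pairs(log))  # [('bot_a', 'bot_b', 270)]
```

Requiring volume in both directions is the design choice here: a popular account retweeted heavily by fans is one-sided, whereas the pattern described above is two accounts feeding each other.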

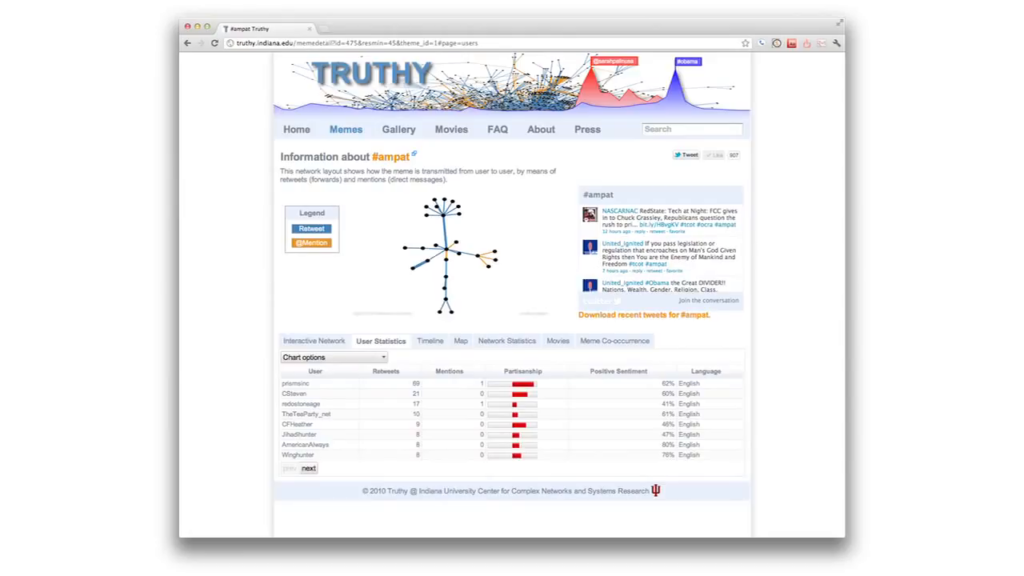

Here’s another one that is not as hugely successful, but I think it is very scary, and it keeps going. It’s #ampat, a tag used to post very scary material: very graphic videos of beheadings, and posts promoting the idea that Obama is trying to push Sharia law in the United States, things of that sort. So, very interesting.

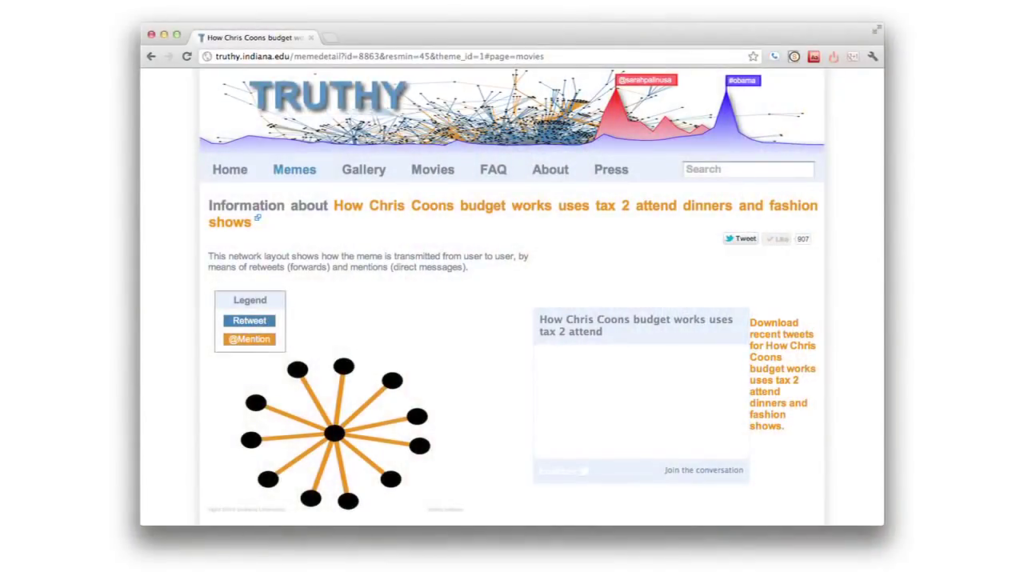

Here’s another example. This is a bunch of bot accounts that all revolve around this @freedomist account. Freedomist.com is a web site that posts fake news, and it was extremely active, and still is, in posting all sorts of… This one is also right wing, but we found a few left-wing examples too, if you’re interested.

But anyway, during the last elections this was very active in smearing certain candidates and promoting others. There were about ten bot accounts, or accounts controlled by this one person, who is also the person who owns and manages the web site. And of course all of them would be retweeting. There was an interesting pattern in which they would all, at the same time, target one influential user, hoping to get that person to believe the story because it looked like it was coming from different sources. If that person retweeted it, there was a chance of creating a cascade.
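The coordinated-targeting pattern (many accounts mentioning one influential user at the same time) can be flagged with a sliding time window per target. This is a minimal sketch under assumed inputs, not how the Truthy system actually detects it: the `(timestamp_seconds, sender, target)` tuple format, the function name `coordinated_bursts`, and the `window` and `min_senders` values are hypothetical.

```python
from collections import Counter, defaultdict


def coordinated_bursts(mentions, window=60.0, min_senders=5):
    """Flag targets mentioned by at least min_senders distinct accounts
    within any span of `window` seconds.

    mentions: hypothetical iterable of (timestamp_seconds, sender, target).
    Returns {target: timestamp of the earliest mention in the first
    window that reached the threshold}.
    """
    by_target = defaultdict(list)
    for ts, sender, target in mentions:
        by_target[target].append((ts, sender))

    flagged = {}
    for target, events in by_target.items():
        events.sort()
        in_window = Counter()  # sender -> mentions currently inside the window
        start = 0
        for ts, sender in events:
            in_window[sender] += 1
            # Drop events from the left until the window spans <= `window` seconds.
            while ts - events[start][0] > window:
                old = events[start][1]
                in_window[old] -= 1
                if in_window[old] == 0:
                    del in_window[old]
                start += 1
            if len(in_window) >= min_senders:  # distinct senders in the window
                flagged[target] = events[start][0]
                break
    return flagged


# Five hypothetical sock-puppet accounts hit one user within five seconds.
events = [(t, f"sock{t}", "@influencer") for t in range(5)]
events += [(0, "alice", "@friend"), (500, "bob", "@friend")]
print(coordinated_bursts(events, window=60, min_senders=5))  # {'@influencer': 0}
```

Counting distinct senders rather than raw mentions matters here, because the point of the tactic is to make one message look like it comes from many independent sources.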

It was very effective, and they got around Twitter’s spam detection by adding random characters to the end of Bitly-shortened URLs, so that they looked like different URLs even though they all pointed to the same sources. A reporter actually contacted this guy, and he freely admitted it: “Yes, of course I’m doing it. Everybody’s doing it.” He is a Republican activist in Pennsylvania, and he’s still there. Twitter has shut down some of those accounts, but several are still active. So he’s still doing it.
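One way to see through the “random characters” trick is to normalize each link before comparing, so that disguised variants collapse into one group. The talk does not say exactly where the characters were added; this sketch assumes they were appended as a query string or fragment that the shortener ignores. If the junk were in the path itself, this normalization would miss it, and one would instead need to resolve each redirect to its final destination. The function names `canonical_url` and `group_disguised_links` are hypothetical.

```python
from collections import defaultdict
from urllib.parse import urlsplit


def canonical_url(url: str) -> str:
    """Keep scheme, host, and path; drop query string and fragment.

    ASSUMPTION: the appended junk lives in the query or fragment.
    The path is kept as-is because short codes may be case-sensitive.
    """
    parts = urlsplit(url)
    return f"{parts.scheme.lower()}://{parts.netloc.lower()}{parts.path}"


def group_disguised_links(urls):
    """Map each canonical URL to the set of distinct raw variants seen,
    keeping only canonical URLs that appeared in more than one disguise."""
    groups = defaultdict(set)
    for u in urls:
        groups[canonical_url(u)].add(u)
    return {k: v for k, v in groups.items() if len(v) > 1}


links = [
    "http://bit.ly/abc123?x9k",  # hypothetical disguised variants
    "http://bit.ly/abc123?q2z",
    "http://bit.ly/zzz999",
]
print(group_disguised_links(links))
```

Here the first two links are grouped under `http://bit.ly/abc123`, and the third is left out because it appears only once.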

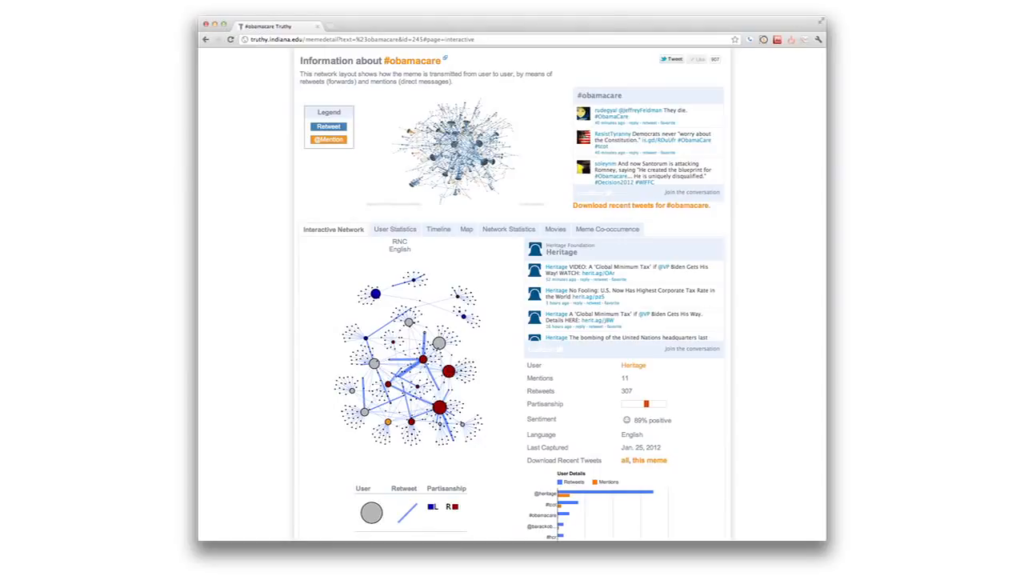

The last example is more current: the hashtag #obamacare, which is used quite actively. We have a new tool that we just released, a sort of interactive visualization. It’s a little hard to see here because not all the edges show up on this monitor. But basically you can see which users are the most influential, meaning retweeted the most, and what the patterns of propagation are, and you can explore, dig down, and play a little with the data.
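“Most influential” in the sense of “retweeted the most” amounts to ranking accounts by their weighted in-degree in the retweet network. The sketch below is a minimal illustration with a hypothetical `(retweeter, original_author)` input and a hypothetical function name `most_retweeted`; it is not the tool’s actual scoring. The optional `distinct_retweeters` flag counts each retweeter once per author, which dampens the effect of a single bot retweeting the same account over and over.

```python
from collections import Counter


def most_retweeted(retweets, k=3, distinct_retweeters=False):
    """Rank authors by how often they are retweeted (weighted in-degree).

    retweets: hypothetical (retweeter, original_author) pairs.
    distinct_retweeters=True counts each (retweeter, author) pair only once.
    """
    pairs = set(retweets) if distinct_retweeters else list(retweets)
    return Counter(author for _retweeter, author in pairs).most_common(k)


# Three different users retweet "h"; one bot retweets "y" ten times.
log = [("a", "h"), ("b", "h"), ("c", "h")] + [("bot", "y")] * 10
print(most_retweeted(log, k=2))                            # [('y', 10), ('h', 3)]
print(most_retweeted(log, k=2, distinct_retweeters=True))  # [('h', 3), ('y', 1)]
```

The two rankings disagree on purpose: raw counts reward sheer volume, while distinct-retweeter counts reward reach across independent accounts.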

The account that is most active on the Obamacare meme happens to be the Heritage Foundation. We have a few additional tools that let you see what other memes a particular account is actively promoting or discussing. We also try to automatically detect language, do sentiment analysis, and a few other things. So you’re welcome to play with it. These were just a handful of examples to get us discussing. And of course the fundamental issue is: can we detect these early?

Of course, as we saw with the previous speaker, and as Takis Metaxas has shown in his work, if you go back afterwards and have the time to do some real legwork, you can perhaps find out that there was this group behind this ad, or a paid consultant, or this corporation, or this particular organization. But by then the damage is very often already done, as Takis has shown. So the trick is: can we detect it early, before a lot of damage is done? That’s what we’re trying to do, but we’re just at the beginning.

Further Reference

Truthiness in Digital Media event site