Hello. My name is Mike. I work at the University of Falmouth. I’m going to tell you a couple of stories today which will hopefully be a bit about Twitter bots and a bit not. And they’re going to seamlessly merge into a finale where I’m going to say something which I thoroughly encourage you to ignore.

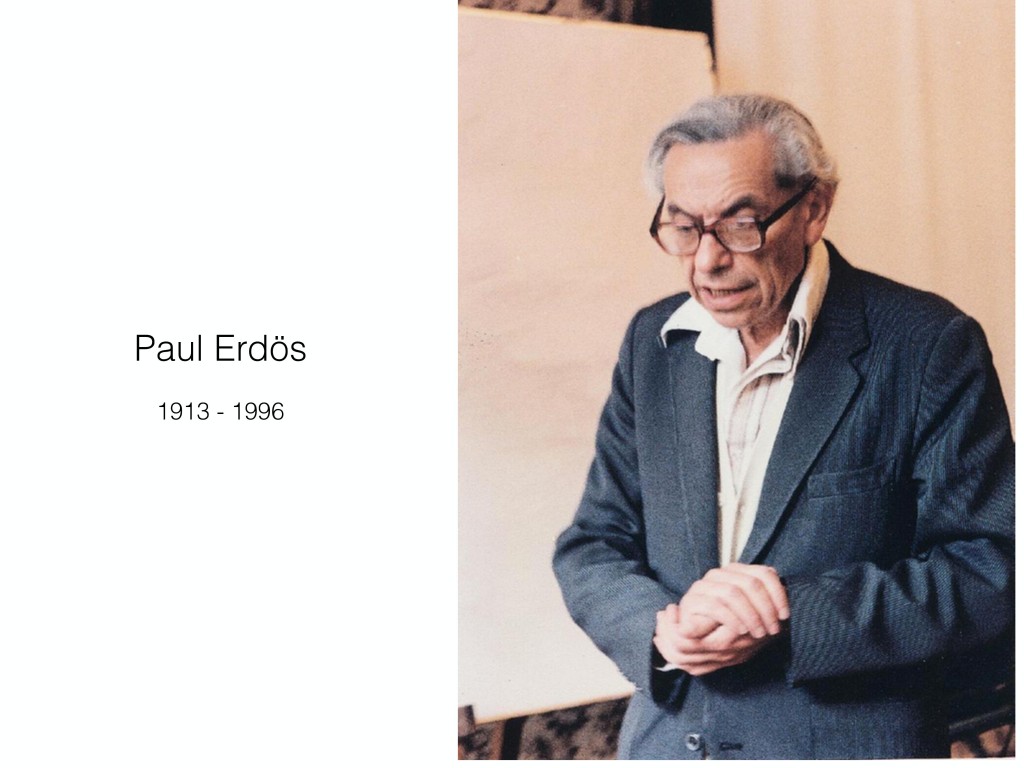

First I want to tell you about something called The Book. I’m going to be talking about a book and then the Book, so it’s going to get a bit [?] and I apologize. But about ten years ago, towards the end of my secondary school career, a friend of mine lent me a book. It was called The Man Who Loved Only Numbers, and it had converted her to mathematics, basically.

It was about this guy, Paul Erdős. He was an eccentric, but legendary, Hungarian mathematician who was incredibly well-known for traveling throughout America and other countries visiting his colleagues in the dead of night and just turning up. And as soon as they opened the door he would walk through and immediately start talking about a mathematical problem. He would publish hundreds of papers a year with people. He was incredibly prolific. You may have heard the term Erdős Number. It’s the number of degrees of separation you have between yourself and Paul Erdős, based on authorships, basically. People strive to have the lowest number possible.

“I’m not qualified to say whether or not God exists,” Erdős said. “I kind of doubt He does. Nevertheless, I’m always saying that the SF has this transfinite Book—transfinite being a concept in mathematics that is larger than infinite—that contains the best proofs of all mathematical theorems, proofs that are elegant and perfect.” The strongest compliment Erdős gave to a colleague’s work was to say, “It’s straight from the Book.”

Paul Hoffman, The Man Who Loved Only Numbers

He contributed many things to mathematical culture. This is my understanding as someone who’s certainly not a mathematician. But my favorite one is his concept of something called the Book. This is a quote from uh, not the Book but the book about the Book. You don’t need to read the whole quote, but there’s a bit where he says he has this concept of God, which he doesn’t believe in, having this transfinite book. And in this book, all the best proofs from mathematics, all the most elegant and beautiful ones are written. The greatest compliment he could give you was he looked at something you did and said, “That is from the Book.” As if you’d tapped into something that was so fundamental to the universe, you’d seen something that it couldn’t be better. It couldn’t be perfect, you know. In heaven there is a tome that has your proof written in it.

I love this concept. I don’t do maths, though, so I get jealous of things like this that are very romantic. And I think there’s a concept of Twitter bots having a Book. And if I have time at the end I’ll talk about another book about Twitter bots, but I realize we’re pressed for time. So, I think a lot of Twitter bots are about patterns and templates. This is Cheap Bots Done Quick; you may have heard of it. So, a lot of bot-making relies on templates. It’s really hard to write in actual language, so often we cut things up and leave holes and insert words in, which is great. I use it in almost all of my bots. And a lot of bot-making also relies on patterns. Often linguistic bots, other bots also.
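As a rough illustration of the cut-up-and-fill approach described above, here is a minimal Python sketch. The template string, the word lists, and the `fill_template` helper are all invented for this example; they are not taken from Cheap Bots Done Quick or from any of the bots mentioned in this talk.

```python
import random
import re

# Hypothetical template and word lists, invented purely for illustration.
TEMPLATE = "I saw a {adjective} {animal} at the {place} today."
WORDS = {
    "adjective": ["sleepy", "enormous", "suspicious"],
    "animal": ["heron", "badger", "squirrel"],
    "place": ["museum", "harbour", "library"],
}

def fill_template(template, words, rng=random):
    """Replace each {slot} in the template with a random word from that slot's list."""
    def pick(match):
        # match.group(1) is the slot name between the braces.
        return rng.choice(words[match.group(1)])
    return re.sub(r"\{(\w+)\}", pick, template)

print(fill_template(TEMPLATE, WORDS))
```

Each run prints one sentence with the holes filled in. Most of the charm of a bot built this way comes from the choice of template and word lists, not from the code.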

Understanding patterns in data or language or the way humans use language…and the last talk by Esther was really on-topic for me, as well. Because understanding some of those patterns, even in a small way, is quite magical when you get it right. Because there are so many of them. And understanding language is really really hard. And when you do it, there’s something really wonderful about it.

@paulcbetts i choose my words carefully

— how 2 sext (@wikisext) May 5, 2015

This is a bot called @wikisext by Thrice. And I’m no Paul Erdős, but I feel like @wikisext could be said to be from the Book of Twitter bots. It understands something about the data it’s using. It understands something about the tropes that it’s trying to insert that into. And the result is something really wonderful. I really like this interaction between the bot, but you should go and look at the bot and see its other tweets, because they’re wonderful.

As I said, @wikisext in particular takes data and there’s a structure that Thrice has understood, and they’ve woven it into this new form, and that is exciting. I get an excitement feeling when that happens. And I feel like it’s akin to how I see mathematicians talk about number theory, which is what Erdős wrote.

.@MuseumBot The most important idea at work here is that of the grape in which a bowl exists. This really makes me feel optimistic.

— AppreciationBot (@AppreciationBot) April 7, 2016

This is one of my bots replying to one of Darius’ bots. Darius wrote a bot called @MuseumBot, which posts an exhibit from the Met every six hours. And I wrote a bot called @AppreciationBot, which pretends to say something intellectual about it. There’s a tiny grain of intelligence in there, and then the rest of it is bluster and bluff, and posturing and weasel words and phrases that don’t really mean anything but they sound like they might. This is what I do when I walk around museums. I wouldn’t cast any aspersions on creators. This is definitely me and my own insecurities.

But the thing is actually quite a bad result from the bot, and people get disappointed when it goes wrong. So this one, the bot’s misunderstood. It thinks that bowls exist inside grapes, but it’s actually that you would expect grapes to be in bowls, right? And what I’ve noticed is that when I try and have bots act in a very human way, especially when they’re talking in a flowery sense here, the result is a real clash when people realize that it got it wrong.

Now I’m going to digress on another tangent briefly. But we’ll come back. Honestly.

The Painting Fool, “The Dancing Salesman Problem”, 2011

ANGELINA is the name of a piece of software that I made over the last six years for my PhD. I work in a field called Computational Creativity, where we’re interested in building software that engages in creative domains, either as a creator or assisting a creator. This is a piece of art painted by a piece of software.

When I started out in this field, I thought that there were lots of objective truths that we were going to find, there was going to be some checklist of what constituted computational creativity, or creativity in humans. There was some formula that I could plug stuff into, and we’d get a good result out on the other side. And over time, obviously, I was taught by wonderful people, one of whom is in this room, who I’ll introduce you to briefly later, who made me realize that that’s not really what it’s about. Because there is no definition of creativity, really, and there’s certainly not one that we would all agree on.

Similarly, I thought that maybe there was a Turing Test vibe going on here, where we’d show people things that our software had created but not tell them that it was software, and if they couldn’t tell it apart from a human creation, then we would pull off the sheet and point and laugh at them and we’d say, “Haha! It was creative because you couldn’t tell the difference.”

And that doesn’t work either, because what computational creativity is really about is about perception. It’s not about me, and it’s not even really about the software I write. It’s about what people think of the software. So once you’ve pulled the sheet off, that person still isn’t staring at your software. And if you have made them feel like there was some trickery involved or anything like that, their perception of the software, their appreciation of it, drops.

I was reading the Guardian website today when I came across a story titled “Obama to urge Afghan president Karzai to push for Taliban settlement”. It interested me because I’d read the other articles that day already, and I prefer reading new things for inspiration. I looked for images of United States landscape for the background because it was mentioned in the article. I also wanted to include some of the important people from the article. For example, I looked for photographs of Barack Obama. I searched for happy photos of the person because I like them. I also focused on Afghanistan because it was mentioned in the article a lot.

ANGELINA, description of Hot NATO, 2012

ANGELINA was designed to make games. I am interested in games, so ANGELINA made games. And one of the really important things to make people feel like ANGELINA is engaging in a creative activity and engaging in a creative field is to get it to talk about its works. So this is some wall text that it produced to justify things that it was doing. This was template-based, like many of my Twitter bots are. And it would insert real pieces of data. So, it was using Barack Obama in this particular game, so it was telling you that so that you would hopefully believe in its creative decisions.

What does this image make you think of? Single words are best, of any kind. Thanks everyone! #t96 pic.twitter.com/8XNnbBDfKK

— ANGELINA (@angelinasgames) March 7, 2014

I also made my first Twitter bot with ANGELINA, because I thought it would be good if it engaged people in its creative process, and showed that it was learning and reusing information. So ANGELINA would post images and ask people to give it word associations so it could then use that knowledge when it wants to use this as a texture in a game, for instance.

And there are other parts of the perception of software that I didn’t have any control over, and that was things like the press. So, the press really liked the narrative of ANGELINA as a headline. So things like “Angelina is an AI that loves Rupert Murdoch.” This had come out in an interview with me, and then out of context in a headline it sounds really great. And I included this slide because I wanted to show that the perception of software is not just governed by the people who write it. Once you put it out there, and I think George was getting at this with tools as well, it’s out of your hands. You can’t control it anymore.

And that’s really important, because initially people were interacting with ANGELINA in a very standard way. Many of these people are my friends. They were behaving. They were doing very nice things. And then people said to me, “Well, when ANGELINA’s asking for help, what if I…lied to it?” And I said, “Well, you can do whatever you want. It’s okay.” They were kind of cautious about it. But the reason I said yes is because as you know, Twitter users are unpredictable, and if this Twitter bot ever got bigger it would receive unusual and maybe malicious responses. So I said we might as well practice that now.

https://twitter.com/kadhimshubber/status/441911974004547584

So my friends kind of nervously started giving it incorrect responses. Kadhim now works at the Financial Times, but I assure you he’s a very serious gentleman.

Say, am I right in thinking a squirrel is bigger than a tree?

— ANGELINA (@angelinasgames) February 4, 2015

And the thing is that I encouraged people to interact with it as naturally as they wanted. I encouraged them to make jokes with it, or to be a little playful in their responses. But in doing so, you’re encouraging them to develop a relationship with ANGELINA that could never really be aspired to. Like, ANGELINA couldn’t respond in a playful way, because it didn’t understand what was going on. And that is fine. Lots of bots are like that. I’ve had conversations with @wikisext, and it doesn’t really understand me on a personal level. But the difference between @wikisext and ANGELINA is that ANGELINA was trying to present itself as a creator, and I was trying to do that. And that meant that I was trying to present it as something that people could engage with.

And that kind of led to disappointment, because once you raise someone’s expectations, falling from those raised expectations is way more painful, and you fall far further than the original point where you started at. As soon as you start to promise things, once you can’t deliver on them, as you may have experienced in other aspects of your life or your Twitter bot making, people’s reactions end up being more negative sometimes. And before I click “next slide,” I’m going to bring everything together now, but I have to apologize because I didn’t want to inflict this on you but it was just right for the talk. Okay?

So. Now. I looked up the definition of “hot take.” And it explicitly says “written.” And this is a talk, so it’s fine. I’m talking to you. This is no longer— It’s okay for me to do this, okay. And it’s brief, I promise you it’s brief.

So, as you may know, and I really apologize if you don’t, Microsoft put a chatbot onto Twitter. It was a bit of a mistake. And various mistakes were made. Including one of the things that it did was sort of repeat verbatim things other people had told it, which is not great. And after the fact, there was lots of talk about whether this could’ve been prevented with better AI. And lots of people turned to the people in this room and said, “What could we have done?” We could’ve used filtered word lists, and we could’ve just not listened to humans.
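To make the "filtered word lists" idea concrete, here is a minimal Python sketch of a bot checking a candidate reply against a blocklist before posting it. This is an assumption-laden illustration only: the `BLOCKED_WORDS` entries are placeholders, and `is_safe_to_post` and `maybe_reply` are hypothetical helpers. It does not describe how Tay or any other bot was actually built.

```python
import re

# Placeholder blocklist, invented for illustration.
# A real list would be far longer and carefully curated.
BLOCKED_WORDS = {"badword", "slur"}

def is_safe_to_post(text, blocked=BLOCKED_WORDS):
    """Return True if none of the words in the text appear in the blocklist."""
    # Lowercase the text and split it into simple word tokens.
    tokens = re.findall(r"[a-z0-9']+", text.lower())
    return not any(token in blocked for token in tokens)

def maybe_reply(candidate):
    """Return the candidate reply if it passes the filter, otherwise None."""
    return candidate if is_safe_to_post(candidate) else None

print(maybe_reply("What a lovely painting."))
print(maybe_reply("This contains a BADWORD."))
```

A filter like this only catches exact word matches, so misspellings and harmful sentences built from harmless words pass straight through.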

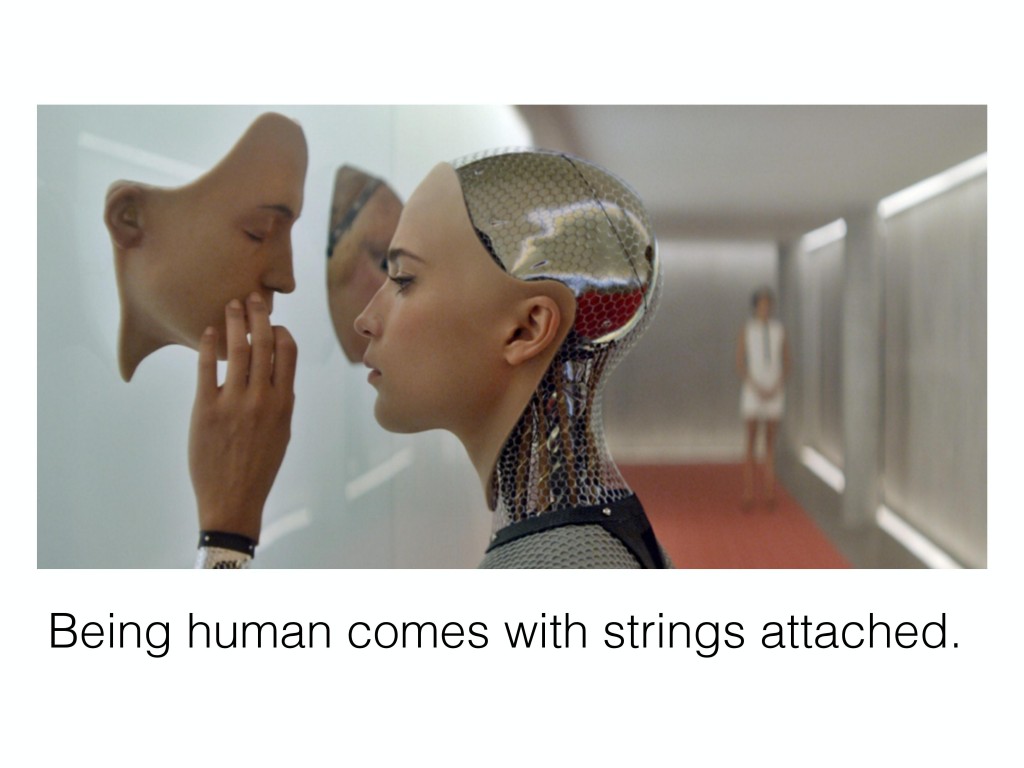

And I think all of those solutions were good, but there was a bigger problem here that people didn’t really acknowledge. And that was that Tay was trying to be human. It was presenting itself on the same level as humans. It wasn’t saying, “I’m made of flesh and blood and I walk down the street,” and things like that. But it was saying, “Treat me as you would treat someone else who is human.”

And the problem is that being human comes with a lot of strings attached. There’s a lot of implications there. When @AppreciationBot starts to use words that refer to “sometimes I think this” or “get a feeling of” (that’s one of the phrases that @AppreciationBot uses), you’re promising things that you can’t back up. You’re raising people’s expectations. Once I encourage people to lie to ANGELINA or get playful with ANGELINA or read things into its games that it then can’t justify, you raise people’s expectations, which can be great, and in my field of computational creativity it’s actually very valuable to raise people’s expectations, I think. Because they engage more with people as a creator. And often, we trust artists to justify what they’ve done. We actually trust them. We don’t press them and try and figure out if they were lying. People do that to ANGELINA a lot, and a lot of other computationally creative software.

But the thing is with Twitter bots and a lot of AI in pop science, it’s kind of like staying up late with your parents. Once you ask to be treated like a human being, you have to [abide] by a different set of rules. You have to be extra good. You have to be on your best behavior if you want to stay up late and watch the grown-up TV, like I used to get to sometimes.

And the second you misbehave, you get sent to bed. Because you didn’t play by the rules that you were agreeing to be judged by. And I think that sometimes we do this intentionally, like Tay did. And sometimes we do it unintentionally like things like @AppreciationBot does, where you go so far in on the bluffing or the playfulness that we forget, I think, the most important thing about AI and its interaction with society, which is I am very fortunate I have met a lot of wonderful people over the last five years. And talked to them about ANGELINA, and many of them are in this room. And the reaction to ANGELINA is wonderful, and people love ANGELINA and they love AI. And they want to believe in technology. They want to talk to bots. They want to believe in AI.

But they are also very easily heartbroken. And I think we have a duty to be very careful with what we let our bots say, what we let our bots do, because people get attached to these things, and we can’t stop them from being let down.

So, this is the lesson I want to leave with you, and I thoroughly encourage you to ignore me. But the next time you try and make your bot as human as possible, think about whether you could take another tack entirely.

Before I stop, I just want to say that I talked about The Book earlier. I am writing with Tony Veale, who’s in the room right now, a book about AI and about Twitter bots and about computational creativity. And I’d love to speak to people in this small but lovely community about who they are and why they do what they do. So, we would love to chat sometime, if not here maybe email me or tweet it.

Thank you very much for having me to talk.

Further Reference

Darius Kazemi’s home page for Bot Summit 2016.