I’m going to talk about translation and bots, and how thinking about translation can help you think about bots. And before I start, I’d like to tell you a little bit about myself, so you know where I’m coming from. But I don’t have that much time, so I’m going to condense this very shortly into three hashtags, as I’ve learned. The first hashtag is #ComputationalLinguistics. That’s what I do. That’s my job, and I’m studying it. My second hashtag is #GermanLanguage. That’s one thing that especially interests me, and it’s also my excuse if I screw up something during my talk. And my third hashtag is #academia‽, which I’m having very conflicted feelings about, so I added a little interrobang there. You now know pretty much everything there is to know about me. So I think I’ll start with the talk.

My talk has three sections. The first one is about bots and languages. The second one, I thought I’d talk about humans and languages. And the third section is about the Turing Test and about diversity.

Bots and Languages

I’ll start with telling you what inspired me to do this talk. It was an article in a German paper that I read a few weeks ago about two bots talking to each other. The authors made the bots talk to each other by copying and pasting the output of the bots into each other’s input fields, which is a pretty good idea. But the commenters of the article were very unhappy with it. Something was off. They weren’t content with the phrases that the bots used, and they thought the bots were just saying random stuff.

I was really confused about this, because the bots that were used for the article were very famous. They were Rose and Mitsuku, and they’ve both won prizes, the Loebner Prize. Probably some of you are familiar with that. So I thought it was a bit off that people were so unhappy with those bots. And I thought about why this was. And I think I have an idea what caused this negative reaction.

As I see it, the bot was designed like this. Some humans were training the bot with some data, with a corpus maybe, and language rules, and they always had a specific type of human in mind, or reader who reads the output of the bot. And they were always assuming that the person who reads the output is the same type of person as the developers. I think that’s a very easy thing to assume. Everyone’s nodding yes, thank you.

And the reason this didn’t work in the article that I read is that I’m a German speaker, and the bots weren’t programmed to speak German. They were programmed to speak English. So when I read the article, it looked a bit different. It looked like this:

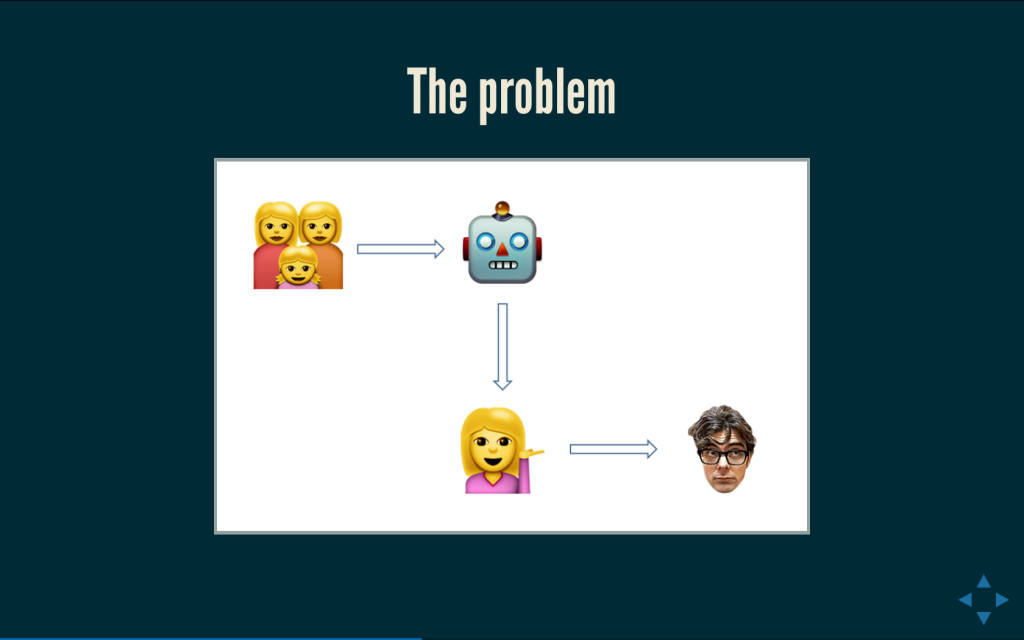

There was something in between. There were not just humans training the bot and humans reading the output, there was a translator in between. And in case you can’t tell, this is a different type of person than this one. And it’s someone from a different culture and from a different language community. And so everything felt a little bit different.

And one reason it felt different was that the people who trained the bot, with this picture in mind, had added some idiomatic phrases to the database. So for example, one of the bots said something about the president having shoplifted the election. That’s what it sounded like in German. Of course, the original phrase was “stealing the election,” and that doesn’t exist in German. So everyone who read the German article was just confused by this. Why would the bot talk about shoplifting and elections? That doesn’t make any sense.

So that’s a problem. I’m not really sure how to avoid this. Maybe we can just do without all these cultural references and idiomatic phrases, and just use normal words for your bots. So your bot will just be understood by everyone. Bad idea, right? It’s not going to be that easy. Sorry.

Navigating Languages

So let’s talk a bit about how humans navigate languages. You probably know this quote by Wittgenstein, “The limits of my language mean the limits of my world.” I’m not a big fan of this idea in particular, and I know many linguists aren’t fans of this. But I’m interested in the other way around. So let’s switch this and talk about how the limits of my world mean the limits of my language.

I’m a human being, as most of you are. And I have some personal experiences, and I have a cultural background that always influences what I’m saying. And they always inform my linguistic choices. I’m always going to use language that harmonizes with my version of reality as I experience it. And that’s not a big problem when we’re talking just about mistranslations of idioms, because it doesn’t really harm anyone. It’s not hurtful, or anything. But of course there are different situations where that might be a bit more critical, and I brought you some examples of how that might be dangerous.

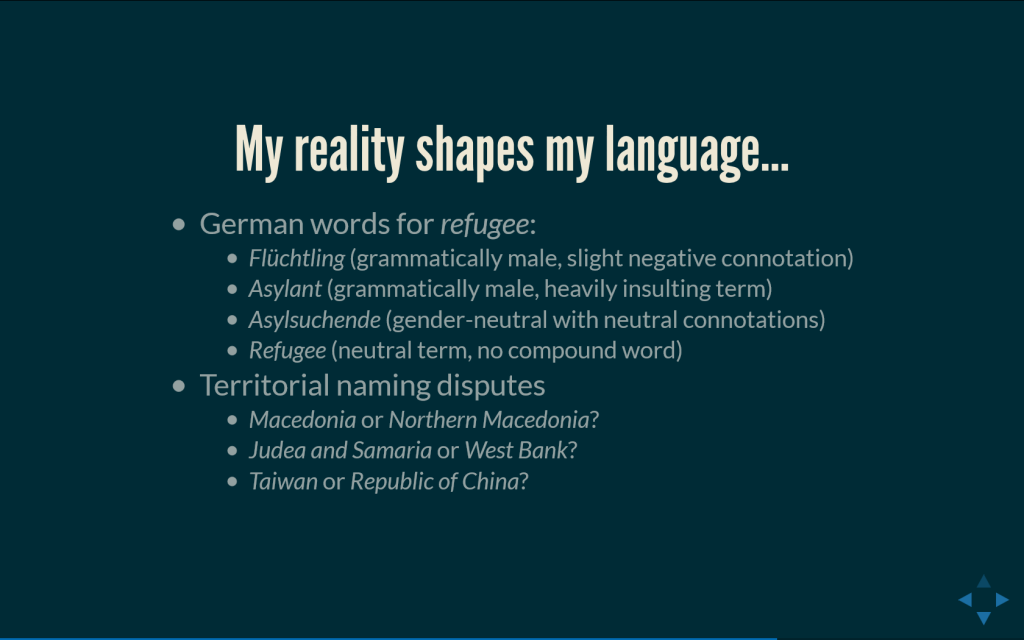

The first one is a set of German terms for the word “refugee.” There are many other terms for it. I just brought you four of them. They have very different connotations. A very neutral one is “Flüchtling,” but if you want to, you can use it with a slight negative connotation. And the next one, “Asylant,” is just an insulting term; it just came to be, historically. Then we have some more neutral ones like “Asylsuchende” or “refugee.” Refugee is actually the most positive word when you’re talking about refugees in German. It’s mostly collocated with the word “welcome.” So “refugees welcome” is the standing phrase. And I’m always going to talk about this topic informed by my own feelings and opinions about this topic. So I can’t really talk about refugees in German without transporting these feelings and opinions, because I choose one of those words.

Another example that I brought is also political. That’s about territorial naming disputes. I think Martin was talking about this a few weeks ago. Which of those terms would a bot use to talk about these geographic areas? They always have the same referent, the words that are on the slide, but they have different connotations, and for the territorial naming disputes, it’s just informed by a political reality. What you believe, whose land is this, really?

So, I’m a human and I have opinions, and I use language that fits my opinions and my feelings. A bot isn’t a human, so a bot can’t choose. But my language will shape my bot. So, I have a feeling about a particular political topic, I’m going to use language that fits this topic, and I’m going to teach my bot to use that same type of language. Might be a problem, right? Because if a bot talks about refugees, what type of opinion or stance do I want to communicate? It’s not that great. And you can’t really use “normal” words, like I suggested, because there are no normal words, because humans are different and have different opinions.

The Turing Test

Now I’m going to talk about what all of this has to do with the Turing Test. You all know the Turing Test. It’s always in the media. Whenever anything happens with bots, you’re always going to read this question: “Does the bot sound human enough?” It’s the essential question of the Turing Test. And I have a problem with this because it implies that being a human or sounding human is objective in some way. There is a default human being, and a bot just has to imitate this human being. And I think that’s not a good way of looking at it.

So, the commonly asked question is, “Does this bot sound human?” And the question that I think is a little bit more interesting is why do so many bots that win the Loebner Prize sound pretty much exactly the same? They’re really similar to each other. Maybe they all have a particular type of default human being in mind, the people who design these bots. But if so, who is this particular mysterious default human being? Has anyone met them, maybe?

Let’s have a look at the profile of one of the bots from the article. This is Rose, and the profile is taken from chatbots.org. And this says, “Rose is a twenty-something computer hacker, living in San Francisco.” Is this a default human being? It probably is for very many people who design chatbots, because the tech community isn’t really that diverse. It’s getting there slowly, but there’s still this idea of a default human being, and people transport this when they build bots. And I’m unhappy with this.

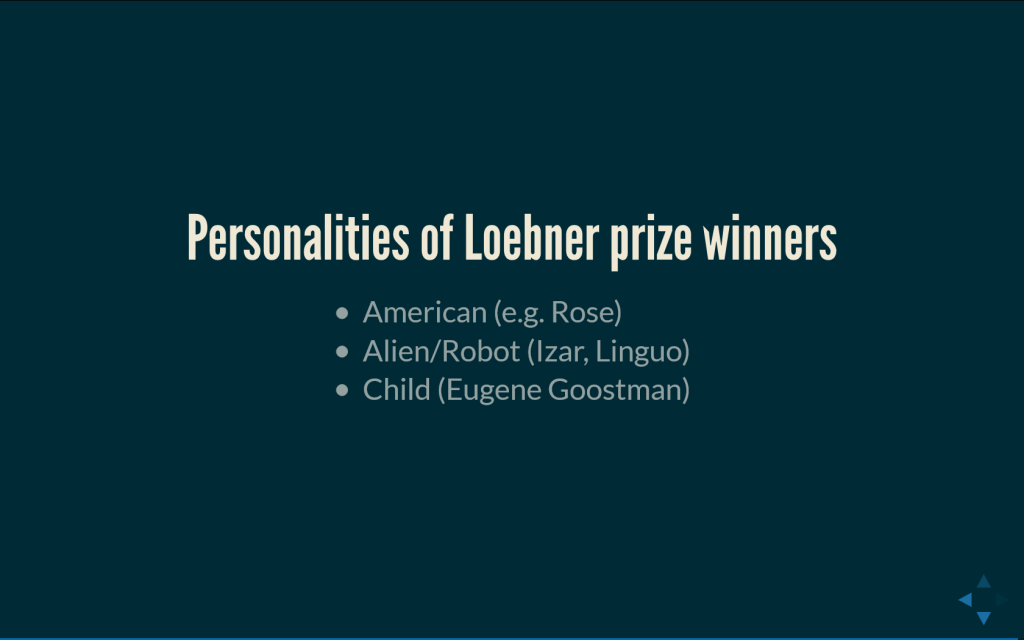

I had a look at the recent winners of the Loebner Prize, and I noticed that there were three types of personalities that the developers gave their bots, because personalities always score more points. The people who pick the winner of the prize actually ask the bots about their personality. Where they come from, who their parents are, and so on. And the personalities that occurred in the last few years were American; aliens or robots, so not human at all; and then I found one single instance of a bot who wasn’t American or alien, and that was a bot presented as a thirteen-year-old Ukrainian boy. And I think that’s a bit shocking. If you have a look at this list, people are either American, not human, or children. Not really, right? So I oppose this idea of a default human being, and I oppose this idea of making bots sound like Americans, aliens, or children.

So I’m going to ask every one of you who makes bots to think about what different types of personalities you might give your bots. Maybe you know some slang words. Maybe you know Yiddish or Arabic or whatever. And I think it’s worth it to make the bot landscape more diverse. So I’m going to leave you with this message: make more diverse bots.

Thank you.

Further Reference

Esther has posted a summary of this presentation at her web site, as well as her slides.

Darius Kazemi’s home page for Bot Summit 2016.