Could we make a moral machine? Could we build a robot capable of deciding or moderating its actions on the basis of ethical rules? Three years ago I thought the idea impossible, but I’ve changed my mind. So, what brought about this u‑turn?

The first thing was thinking about simple ethical behaviors. Imagine someone not looking where they’re going: looking at their smartphone, say, and about to walk into a hole in the ground. You will probably intervene. Now, why is that? It’s not just because you’re a good person. It’s because you have the cognitive machinery to predict the consequences of their actions.

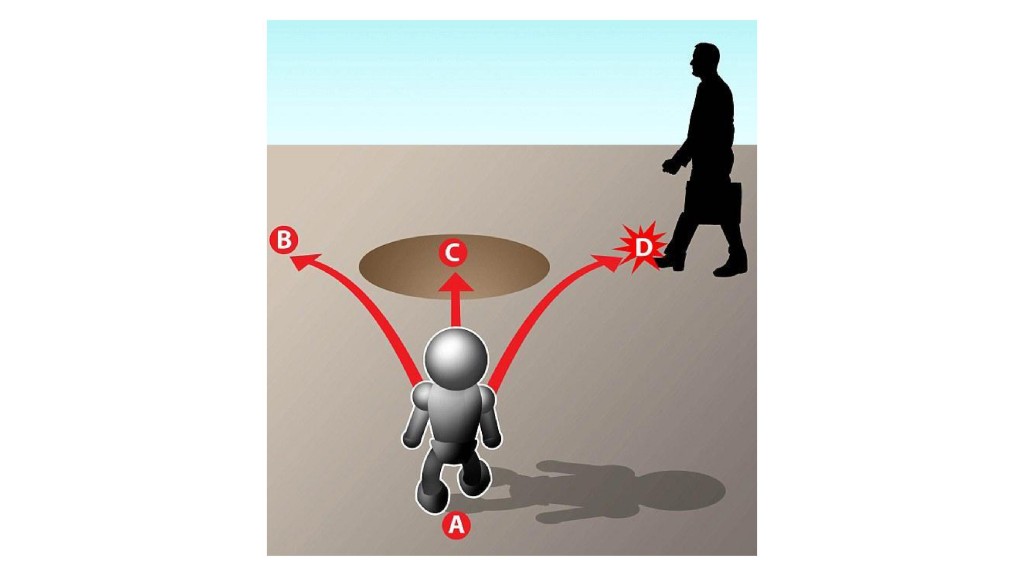

Now imagine it’s not you but a robot, and the robot has four possible next actions. From the robot’s perspective, if it stands still or turns to its left, the human will come to harm: they will fall in the hole.

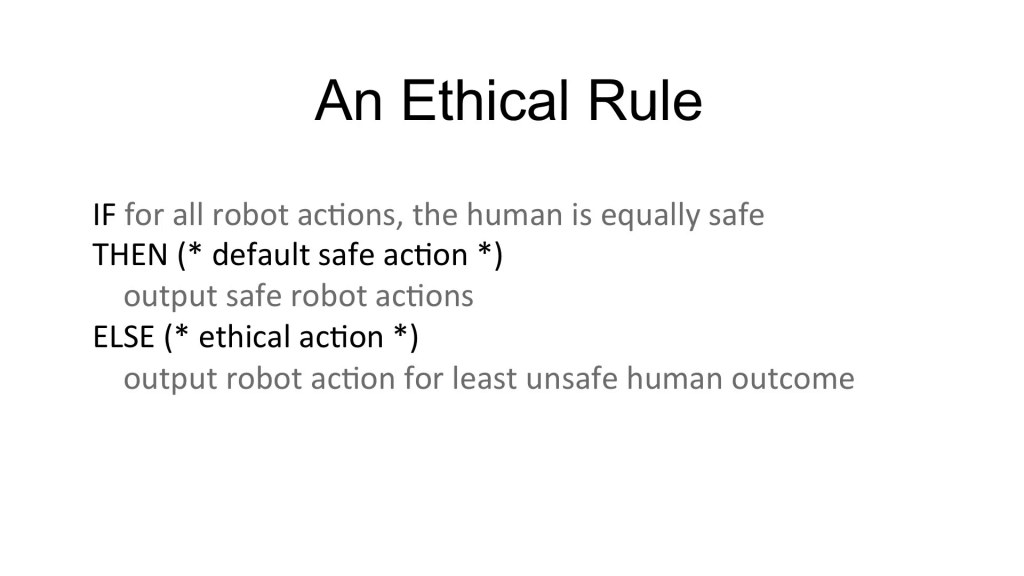

But if the robot could predict the consequences of both its own and the human’s actions, then another possibility opens up. It could choose to collide with the human to prevent them from falling in the hole. And if we express this as an ethical rule, which you see here, it looks remarkably like Asimov’s First Law of Robotics: a robot may not injure a human or, through inaction, allow a human to come to harm.

So the idea emerged that we could build an Asimovian robot. We would need to equip the robot with the ability to predict the consequences of both its own actions and those of others in its environment, plus the ethical rule that I showed you in the previous slide.
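The combination just described (a way of predicting consequences plus an ethical rule) can be sketched in a few lines. This is a minimal illustration under stated assumptions, not the speaker’s implementation: the action names, the `Outcome` fields, and the priority ordering (human safety first, then the robot’s own safety, then progress toward its goal) are hypothetical choices made to mirror the Asimov-style rule. The `predict` argument stands in for whatever internal model produces the predictions.

```python
from dataclasses import dataclass
from typing import Callable, Iterable


@dataclass(frozen=True)
class Outcome:
    """Predicted consequences of one candidate action (illustrative fields)."""
    human_harmed: bool     # would a human come to harm?
    robot_harmed: bool     # would the robot itself come to harm?
    goal_progress: float   # how far the action advances the robot's own task


def consequence_engine(actions: Iterable[str],
                       predict: Callable[[str], Outcome]) -> str:
    """Predict each action's outcome, then pick one using an Asimov-style ordering.

    Sort key (smaller is better; False < True):
      1. no human harmed, whether by the robot's action or its inaction;
      2. robot not harmed;
      3. most progress toward the robot's goal.
    """
    predictions = {a: predict(a) for a in actions}
    return min(
        predictions,
        key=lambda a: (predictions[a].human_harmed,
                       predictions[a].robot_harmed,
                       -predictions[a].goal_progress),
    )


# Hypothetical predictions for the hole scenario: only intercepting the human keeps them safe.
scenario = {
    "stand_still": Outcome(human_harmed=True, robot_harmed=False, goal_progress=0.0),
    "turn_left": Outcome(human_harmed=True, robot_harmed=False, goal_progress=0.2),
    "ahead": Outcome(human_harmed=True, robot_harmed=False, goal_progress=1.0),
    "turn_right": Outcome(human_harmed=False, robot_harmed=False, goal_progress=0.3),
}
print(consequence_engine(scenario, lambda a: scenario[a]))  # turn_right
```

When no human is predicted to come to harm, the same ordering simply falls back to whichever action best serves the robot’s own goal.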

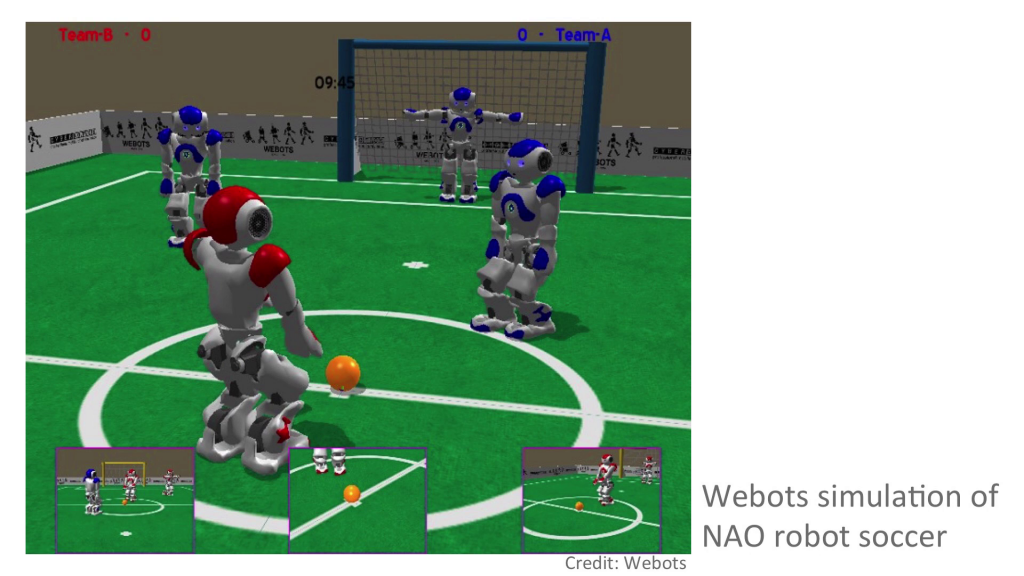

Image: Webots

In fact, the technology we need to do this already exists: it’s called the robot simulator. We roboticists use robot simulators all the time to model and test our robot code in a virtual world before running that code on the real robot. But putting a robot simulator inside a robot, while not a new idea, is tricky, and very few people have pulled it off. In fact, it takes a bit of getting your head round. The robot needs to have, inside itself, a simulation of itself, its environment, and others in its environment. And that simulation has to run in real time.
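To make the idea of a simulator inside the robot concrete, here is a deliberately tiny stand-in: a 2-D constant-velocity model rather than a physics simulator such as Webots. Everything in it is an illustrative assumption: the human walking in a straight line, the circular hole, the contact distance, and the toy rule that a touched human stops. For one candidate robot velocity, it answers a single question: is the human predicted to fall in the hole?

```python
import math
from dataclasses import dataclass

Vec = tuple[float, float]


@dataclass(frozen=True)
class World:
    """Snapshot of the robot's internal model of its surroundings (illustrative)."""
    robot_pos: Vec
    human_pos: Vec
    human_vel: Vec       # assumption: the human keeps walking in a straight line
    hole_centre: Vec
    hole_radius: float


def dist(a: Vec, b: Vec) -> float:
    return math.hypot(a[0] - b[0], a[1] - b[1])


def human_falls(world: World, robot_vel: Vec, steps: int = 50,
                dt: float = 0.1, contact: float = 0.3) -> bool:
    """Roll the internal model forward for one candidate robot velocity.

    Returns True if the human is predicted to end up in the hole within
    steps * dt seconds. The robot's own safety is not modelled here.
    """
    rx, ry = world.robot_pos
    hx, hy = world.human_pos
    blocked = False
    for _ in range(steps):
        rx += robot_vel[0] * dt
        ry += robot_vel[1] * dt
        if dist((rx, ry), (hx, hy)) <= contact:
            blocked = True  # toy rule: once touched, the human stops walking
        if not blocked:
            hx += world.human_vel[0] * dt
            hy += world.human_vel[1] * dt
        if dist((hx, hy), world.hole_centre) <= world.hole_radius:
            return True
    return False


world = World(robot_pos=(2.0, 2.0), human_pos=(0.0, 0.0), human_vel=(1.0, 0.0),
              hole_centre=(4.0, 0.0), hole_radius=0.5)
print(human_falls(world, (0.0, 0.0)))   # stand still            -> True
print(human_falls(world, (1.0, 0.0)))   # head off to the right  -> True
print(human_falls(world, (0.0, -1.0)))  # move down to intercept -> False
```

Each candidate action costs one call to `human_falls`, and the results would then feed the ethical rule. A real consequence engine would also need to predict what happens to the robot itself, which this sketch leaves out.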

So, over the past two years we’ve actually tested these ideas with real robots. In fact, these are the robots. We don’t have a hole in the ground, we have a danger zone. And we use robots instead of humans. We use robots as proxy humans. So let me show you some of our latest experimental results.

Here the blue robot, the ethical robot, is heading towards a destination. This is its goal. But it notices right here that the red robot, the human, is heading toward danger. So the blue robot chooses to divert from its path and collide (gently) with the human, to prevent it from coming to harm. Here is exactly the same thing as a short movie clip. Again, the blue robot is the ethical robot and the red robot is the proxy human. Cute robots, aren’t they?

We also tested the same setup with an ethical dilemma. Here our ethical robot is faced with two humans heading toward danger. It dithers rather hesitantly, and of course it cannot save them both. There isn’t time. Ethical dilemmas are really a problem for ethicists, not roboticists.
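The dilemma shows up in the ranking itself. In this hypothetical sketch, each action is scored by how many humans are predicted to come to harm. The two interception actions tie, so the rule alone does not say which human to save. The action names and counts are invented for illustration.

```python
def best_actions(humans_harmed: dict[str, int]) -> list[str]:
    """Return every action that minimises the number of humans predicted to come to harm."""
    fewest = min(humans_harmed.values())
    return [a for a, n in humans_harmed.items() if n == fewest]


# Hypothetical predictions for a two-human dilemma: the robot can reach
# at most one of the two humans before they enter the danger zone.
dilemma = {
    "stand_still": 2,
    "intercept_A": 1,
    "intercept_B": 1,
    "head_to_goal": 2,
}
print(best_actions(dilemma))  # ['intercept_A', 'intercept_B']
```

Suppose the robot re-plans every control cycle, and small changes in its predictions keep reordering the tied actions. It could then switch back and forth between them. That is one plausible reading of the dithering, offered as an assumption rather than the speaker’s own analysis.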

So, how ethical is our ethical robot? Our robot implements a form of consequentialist ethics; in fact, we call the internal model a consequence engine. The robot behaves ethically not because it chooses to, but because it’s programmed to do so. We call it an ethical zombie. Our approach has a huge advantage: the internal process of making ethical decisions is completely transparent. So if something goes wrong, we can replay what the robot was thinking. I believe this is going to be really important in the future: autonomous robots will need the equivalent of the flight data recorder in an aircraft, an ethical black box.
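An ethical black box could be as simple as an append-only log of what the consequence engine predicted and chose on each cycle. The sketch below is hypothetical. The record fields and the JSON-lines format are illustrative choices, not a description of the speaker’s system or of any standard.

```python
import json
import time
from dataclasses import asdict, dataclass, field
from typing import Optional


@dataclass
class DecisionRecord:
    """One decision cycle: what the robot predicted for each action, and what it chose."""
    timestamp: float
    predictions: dict[str, dict[str, bool]]  # action -> predicted consequences
    chosen: str


@dataclass
class EthicalBlackBox:
    """Append-only log of decisions that can be written out and replayed later."""
    records: list[DecisionRecord] = field(default_factory=list)

    def record(self, predictions: dict[str, dict[str, bool]], chosen: str,
               timestamp: Optional[float] = None) -> None:
        ts = time.time() if timestamp is None else timestamp
        self.records.append(DecisionRecord(ts, predictions, chosen))

    def dump(self) -> str:
        # One JSON object per line, so a truncated log still parses line by line.
        return "\n".join(json.dumps(asdict(r), sort_keys=True) for r in self.records)

    @staticmethod
    def replay(log: str) -> list[DecisionRecord]:
        return [DecisionRecord(**json.loads(line)) for line in log.splitlines() if line]


box = EthicalBlackBox()
box.record(
    {"stand_still": {"human_harmed": True}, "turn_right": {"human_harmed": False}},
    chosen="turn_right",
    timestamp=0.0,
)
for rec in EthicalBlackBox.replay(box.dump()):
    print(rec.timestamp, rec.chosen, rec.predictions)
```

Storing the predictions alongside the choice is the point of the design. After an incident you can see not only what the robot did, but which outcomes it expected from each alternative.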

So, what have we learned? Well, the biggest lesson, in fact the thing that caused my u‑turn, is this: we do not need to make sentient robots to make ethical robots. In other words, we don’t need a major breakthrough in AI to build at least a minimally ethical robot. We don’t need to build Data from Star Trek.

I’d like to leave you with a question about the ethics of ethical robots. If we can build even minimally ethical robots, are we morally compelled to do so? Well, with driverless cars just around the corner, I think it’s a question that we’re going to have to face really quite soon. So thank you very much indeed for listening. Thank you.

Further Reference

Alan Winfield’s blog, with a follow-up post and slides, and his staff profile on the University of the West of England, Bristol website.