So here’s what happened. If you tell people you’re going to have this super-open, absolutely non-commercial, money-free thing, but it has to survive in an environment that’s based on money, where it has to make money, how does anybody square that circle? How does anybody do anything? And so companies like Google that came along were, in my view, backed into a corner. There was exactly one business plan available to them, which was advertising.

I’ve been trying to get as many weird futures on the table as possible, because the truth is there are these sort of ubiquitous futures, right: ideas about how the world should or will be that have become a mainstream, dominating vernacular. That vernacular is primarily about a very white, Western, masculine vision of the future, and it has kind of colonized our ability to think about and imagine technology in the future.

Victor’s sin wasn’t in being too ambitious, and not necessarily in playing God. It was in failing to care for the being he created, failing to take responsibility and to provide the creature with what it needed to thrive, to reach its potential, and to be a positive development for society instead of a disaster.

We have already changed the world a lot, not always for the better. Some of it’s for the better, as far as we human beings are concerned. But every time we invent a new technology, we like to play with that technology, and we don’t always foresee the consequences.

A lot of the science fiction I love the most is not about these big questions. You read a book like The Diamond Age, and the most interesting thing in it is the mediatronic chopsticks: the small detail where Stephenson says, okay, well, if you have nanotechnology, people are going to use it in the most pedestrian, ordinary ways.

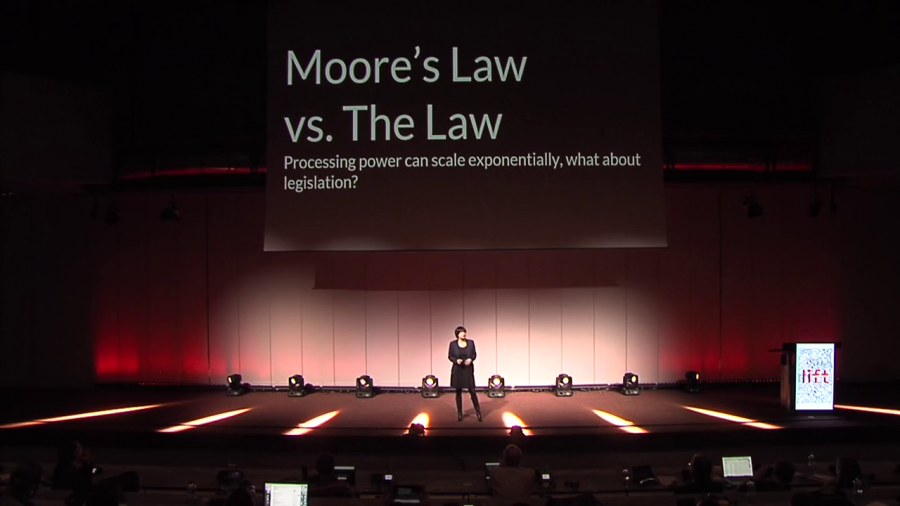

When we talk about technologies such as AI and policy, one of the main problems is that technological advancement is fast, while policy and democracy are very, very slow processes. That could be a very big problem if we think AI could be dangerous.

I don’t think it’s necessarily going to be a problem within the next five, ten, fifteen, maybe even twenty years. But my perspective on it has always been, because I am more philosophically focused on these things, why not try to address the issues before they arrive? Why not try to think about these questions before they become problems that we have to fix?