This is the second day of our conference on governing algorithms and we’ve already been treated to two outstanding presentations by Bob Tarjan and Claudia Perlich, with insights into what…well, a little bit into what algorithms do. And today we continue to explore the social, philosophical, ethical, legal significance of algorithms.

So how did this start? Actually, all of us (Solon, Sophie, and many other fellows and researchers, not just at PRG, the Information Law Institute, but also at MCC) have been studying computation, automation, and control in different forms for quite a long time. But it was only at the end of last summer really that we realized that there’s this new notion of the algorithm gaining currency.

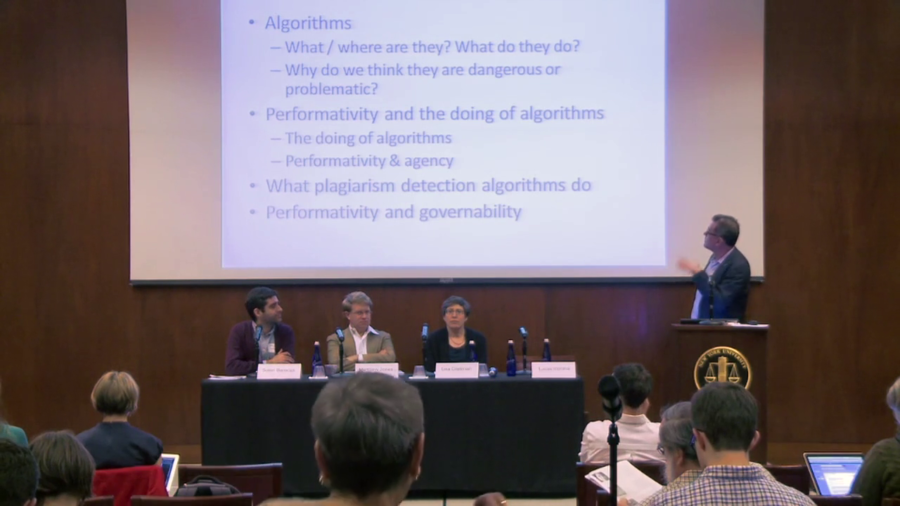

I just want to be clear that I’m not saying that the details of the algorithms are irrelevant. In a way they can matter very much, and you know, in a certain circumstance, in a certain situated use, it might matter significantly what the algorithm does but we can’t say that a priori. So we need to both open up the algorithms, we need to understand them as much as possible, but we must not be seduced to believe that if we understand them therefore we know what they do.

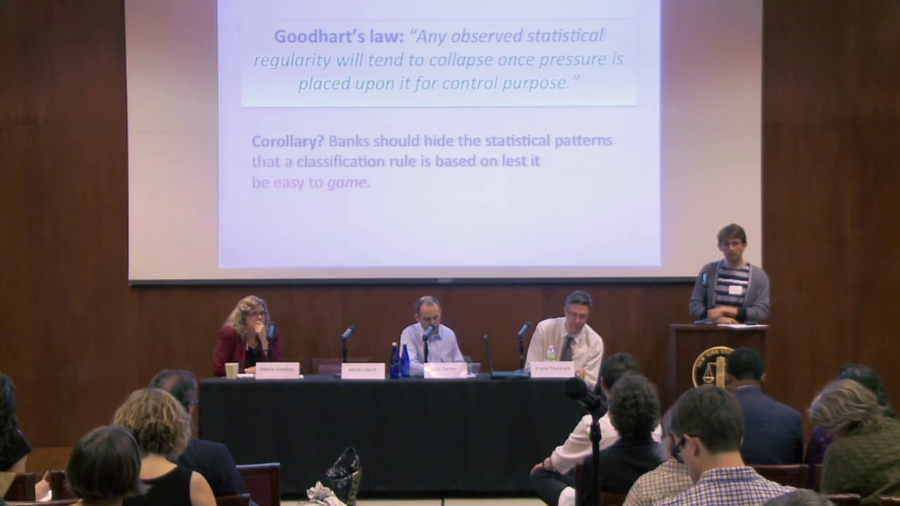

We can’t govern through knowledge, properly speaking. Even though many algorithms are trade secrets, Lucas and others have reminded us that nearly all would not be surveillable by human beings even if we had access to their source code. We have to begin whatever process from this fundamental lack of knowledge. We need to start from the same epistemological place that many of the producers of algorithms do.

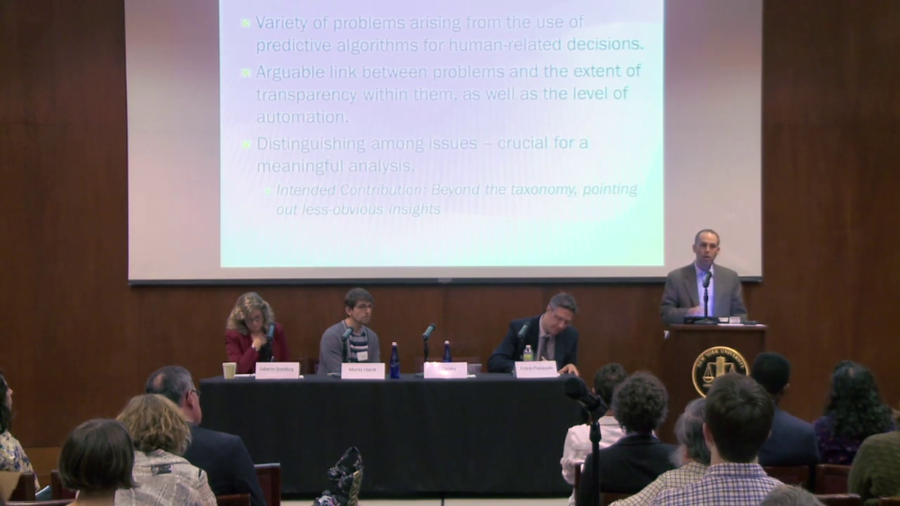

When you make a decision to opt for an automated process, to some extent you’re already, by doing so, compromising transparency. Or you could say it the other way around. It’s possible to argue that if you opt for extremely strict transparency regulation, you’re making a compromise in terms of automation.

More than sort of a discussion of what’s been said so far this is a kind of research proposal of what I would like to see happening at the intersection of CS and this audience.

The study of search, be it by people like David Stark in sociology, or economists or others, I tend to sort of see it in the tradition of a really rich socio-theoretical literature on the sociology of knowledge. And as a lawyer, I tend to complement that by thinking if there are problems, maybe we can look to the history of communications law.

How would we begin to look at the production of the algorithmic? Not the production of algorithms, but the production of the algorithmic as a justifiable, legitimate mechanism for knowledge production. Where is that being established and how do we examine it?