Moritz Hardt: So I’m a computer scientist, and I called this response “Occupy Algorithms: Will Algorithms Serve the 99%.” And more than sort of a discussion of what’s been said so far this is a kind of research proposal of what I would like to see happening at the intersection of CS and this audience.

So, what Frank is getting at in my opinion is sort of the question have albums in finance failed to deliver on the promise of greater efficiency, transparency, and fairness? And so what I want to address in this response is gee, what have computer scientist done about it, right, if anything? And I picked out three works that I think are very closely related to what Frank talked about.

The first one’s about ensuring fairness in classification as a pressing theme in Frank’s article. The second one is on thwarting gaming and manipulation. And gaming came up I think so far in every talk and every response, so I think this is also related. And finally I want to talk about the role of computational complexity in financial markets and financial products, and the difficulty that this introduces in the regulatory process and the pricing process. And I hope you all remember what computational complexity is from Bob’s talk and what the word intractable means.

So I should say this is not an exhaustive list. There are many more fastening papers in the CS literature. These are just some that I thought were really related. And yeah, each is just just a two-minute teaser on these papers. I just have a few minutes, so I probably won’t make any true statements. But you can look at the papers if you actually want to see the truth.

So, the first paper I want to mention, we called it “Fairness Through Awareness.” This was joint work with Cynthia Dwork, Omer Reingold, Toni Pitassi, and Rich Zemel from about two years ago. And the problem that we’re getting at from sort of a mathematical perspective was how do we prevent a classifier or classifying algorithm from discriminating based on protected attributes such as you know, race, or religion, or gender? And the running example in Frank’s article would be the credit loan application where you’re concerned about for example steering minorities into less-advantageous credit card offers. So this was also a motivating example for us, but also the targeted advertising example that we saw yesterday is really good here, and fitting.

So, let me just sort of recall of what an insufficient solution concept is, just because a lot of companies will actually tell you that that’s what they’re doing. And I call this blindness to the protected attribute. So, people will tell you, “Oh, we don’t even look at the race attribute. How could our algorithm be discriminating if race doesn’t show up anywhere in the data and we never looked at that attribute?” And as every machine learning person knows, there are lots of redundant encodings of race in the data. You don’t need to see the attribute to be able to reliably predict it. So this doesn’t work given our understanding of machine learning. It’s just not a solution.

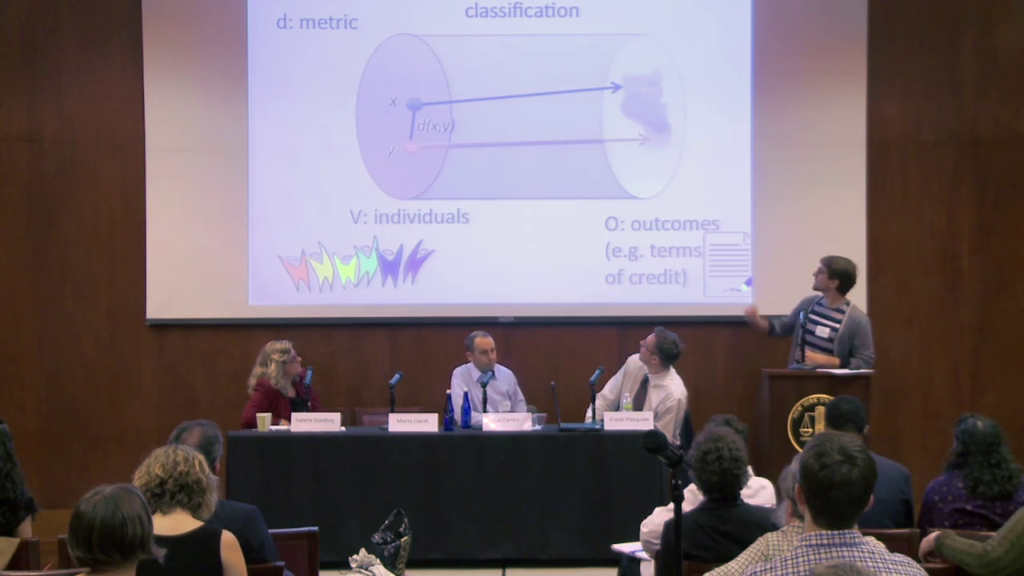

So what we ended up doing is we came up with a new notion which we called individual-based fairness. And it’s a formalization of this idea here, which says that any two individuals who are similar with respect to the specific classification task that you’re interested in should receive similar outcomes or other similar distribution over outcomes. And this is just an informal statement of what our formal notion says. But what’s important here, what should strike you as important, is that it requires the definition of a task-specific metric or task-specific similarity measure between individuals that allows you to compare individuals.

So this is really a central part of this definition, and so I want to emphasize this a little bit more. So what’s this metric? We think of it as externally imposed and open to the public. It’s something that people thought about, and as such it provides transparency. This is something that people who’re interested in the issue of fairness could discuss and influence. So this is something that Frank likes because it gives you a way to regulate the classification process.

On the other hand, the metric does not dictate classification. There could be many classifiers, many very different classifiers, that all consistent with the same metric. So in particular it still allows the bank to optimize their secret, proprietary strategy. And this is something that Tal really thinks is important for competition. So this metric gives you a way to go between these standpoints and get a tradeoff.

But one open problem that we always face and to me as a computer scientist I can’t fully solve is, in any concrete setting who gets to define the metric, and how? And this is really something very interdisciplinary where we need to rely on social insight and people from various disciplines here in this room. And that’s sort of an open problem I have for you. So this is where my work as a computer scientist sort of ends. If you give me something like this, if you can think about what it might be in various settings that you’re interested in, I can give you a useful algorithmic framework.

So just as a sort of a cartoon of this thing, there is the set of individuals. There could be two individuals, they are related by the metric, so they’re at a certain distance. And then the framework would make sure that the distribution over outcomes that they receive is similar to the extent that they’re similar with respect to the metric. This is just a cartoon. You can look in the paper for the actual definition.

So I want to move on, and I want to get to the second thing I mentioned. And this is about gaming classifiers and how you might be able to prevent it on an algorithmic level. And one thing that I really like is this wisdom from financial policymaking called Goodhart’s Law. And this started my interest in this question a long time ago. And Goodhart’s Law says that “any observed statistical regularity will tend to collapse once pressure is placed upon it for control purposes.” And if you translate this into machine learning jargon, what it says is if you have a classifier that relies on some statistical pattern, and you expose the classifier to the public, the pattern will go away. The patterns will change. And there’s sort of a corollary that banks always say is, “Well gee, because of this thing we need to make sure everything we do is very secret, because once we expose our statistical insight it disappears. It becomes useless and the classifier becomes easy to game.” So this is sort of a case they’re making for opacity. And it’s not entirely false.

What I’m interested in as sort of a math guy is well, is that really true, right? Is Goodhart’s Law a law as in you know, the law of large numbers. Something you can prove under some reasonable formalization. Or is it not actually reliably true? Can we somehow come up with classifies that you can expose to the public and they’re still hard to game?

And this is sort of an ongoing investigation with Brendan Juba and Omer Reingold. We sort of take a kind of a game theoretic point of view. We factor in the cost of changing one’s attributes in the classification process. For example getting, a college degree is much harder than buying a bunch of books. So we take the cost of changing one’s attributes into account as well as the individual’s budget for any given classification task. People are only willing to expend so much effort or money.

And once we have these sort of game theoretic ingredients we can talk about designing decision boundaries or classifiers in such a way that a typical negatively-classified person does not have the means or the budget to force a positive classification by any change of attributes. And this is true even if the classifier is exposed. So that’s an important point. So it allows you to investigate which classifiers are hard to game even if you expose them. I think this is a kind of starting point to get at some of the issues we’re talking about here from a formal perspective.

So finally I want to mention a fascinating paper that unfortunately I didn’t coauthor. But my adviser did and a bunch of other great people. So it’s by Sanjeev Arora, Boaz Barak, Markus Brunnermeier, and Rong Ge. And it’s about intractability in the financial derivative market. And the slides are actually by Sanjeev.

So here is a quick recap of the financial crash in 2008. So basically what happened is that the derivative market or it’s total value had become far greater than the world’s GDP. And so the hard lesson that we learned is that even small errors in pricing derivatives lead to huge effect on the economy just because the market is so enormous.

And so what these guys were interested in is the sort of intrinsic computational complexity of pricing financial derivatives. And here’s an informal statement of the fastening theorem that they proved. They showed that evaluating the fair value of a derivative—a financial derivative such as a CDO is an intractable problem. So I don’t want to quiz the audience on what intractable means, but it means according to Bob that you cannot solve it in polynomial time. So even with a significant increase in computational power, even in twenty years you cannot solve this problem.

Moreover, what’s even more oppressing here is that the designer of the CDOs can tamper the product in such a way that it is computationally hard to detect the tampering. And these two results rely on the computational intractability of the Dense Subgraph problem. So this is a beautiful, well-defined combinatorial optimization problem that people have studied for a long time and believe is quite hard.

23

And I want to just briefly show you where the Dense Subgraph comes in. So this a little bit of CS. So this is basically the point of view of the derivative designer. He has a bunch of asset classes; there are N asset classes. And he knows that a bunch of them are lemons. And because he’s the designer of the derivative he might have some information about which asset classes are lemons. And there’s the buyer and the regulator who’s like, “Well gee, which of these assets are lemons? I don’t know but I want to find out.”

Now what the designer of the derivative can do, he can create products on these CDOs which are basically just bundles of asset classes. So this is indicated by these vertices on the bottom of these vertices on the top. And now basically what the designer wants to do, he wants to pack as many lemons into as few products as possible, to get rid of the lemons. And of course this has a pretty devastating outcome on the expected default rate of these tampered products.

And this dense subgraph, this packing between lemons and products, that’s exactly what’s hard to find. So if the graph is large enough, then this dense subgraph that’s contained in this bipartite graph is actually hard to detect. So the regulator will say, “Oh no, it’s the Dense Subgraph problem. I can’t detect this.”

So this is just a little bit of combinatorial optimization, how it tells you something about how hard it is to price financial products. And not just sort of on an intuitive level, like fundamentally, mathematically there’s nothing you can do about it. You can’t figure out what the fair price is.

So let me wrap up. So here’s my proposal, again it’s Occupy Algorithms. And what I want to suggest is that you guys should help us computer scientists in finding formal definitions and models of the problems that you care about. Because as Bob said yesterday, we’re not going to solve a problem that’s not well defined, that’s not in a mathematical model. On the other hand finding models that’s you know, often most of the work. That’s extremely difficult and we need people who have the domain knowledge to to tell us what the right models might be. We can do the formal part but we need insight on what a good model should do. So this is an interdisciplinary thing.

And if we do that we can make social constraints a first-order problem in algorithm design. And this is actually not unrealistic. This has happened in the past, for example there’s lots of literature on privacy-preserving algorithm which I find quite fascinating. And on truthful mechanism design, where the goal is to cope with strategic behavior. So there are areas in CS where yes, social constraints a first-order constraints and we can create more of these cases. So with that I want to thank you for your attention.

Further Reference

The Governing Algorithms conference site with full schedule and downloadable discussion papers.