Some of the long-term challenges are very hypothetical—we don’t really know if they will ever materialize in this way. But in the short term I think AI poses some regulatory challenges for society.

Back in 1980, working with the artificial intelligence guys, we had this idea we were going to make smart machines. But they needed to read good books, don’t you think?

Quite often when we’re asking these difficult questions, we’re asking about things where we might not even know how to ask where the line is. But in other cases, when researchers work to advance public knowledge, even on uncontroversial topics, we can still find ourselves forbidden from doing the research or disseminating it.

We’ve already been through several situations where new technologies come along. The Industrial Revolution removed a large number of jobs that had been done by hand and replaced them with machines. But the machines had to be built, the machines had to be operated, the machines had to be maintained. And the same is true in this online environment.

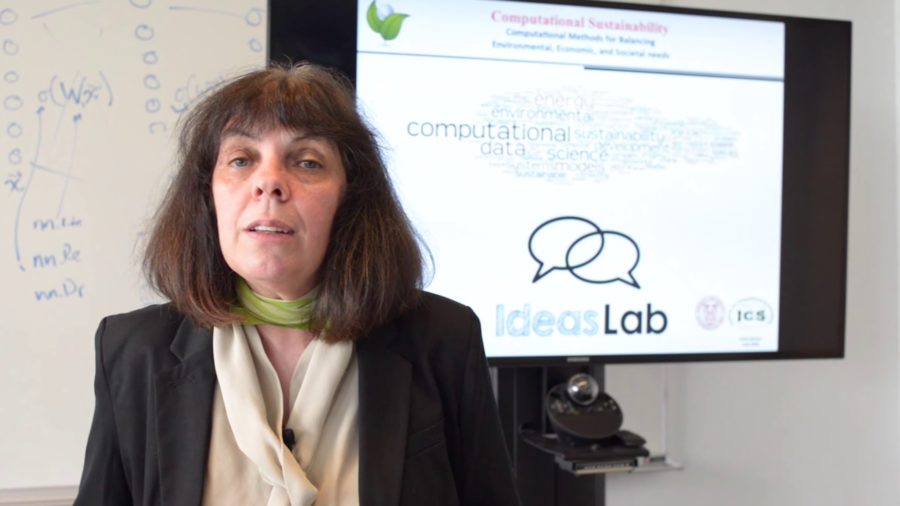

The smartphone is the ultimate example of a universal computer. Apps transform the phone into different devices. Unfortunately, the computational revolution has done little for the sustainability of our Earth. Yet, sustainability problems are unique in scale and complexity, often involving significant computational challenges.

When I go talk about this, the thing that I tell people is that I’m not worried about algorithms taking over humanity, because they kind of suck at a lot of things, right? And we’re really not that good at a lot of things we do. But there are things that we’re good at. And so the example that I like to give is Amazon recommender systems. You all run into this on Netflix or Amazon, where they recommend stuff to you. And those algorithms are actually very similar to a lot of the sophisticated artificial intelligence we see now. It’s the same underneath.

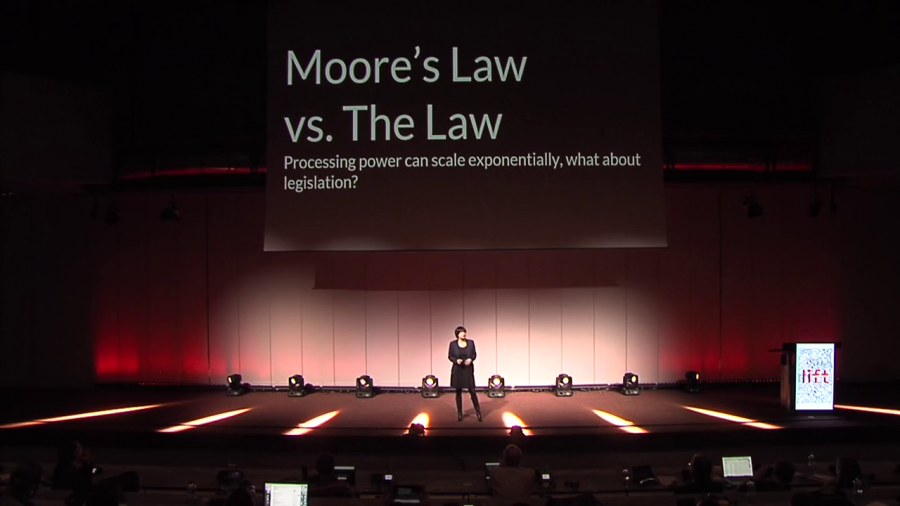

When we talk about technologies such as AI, and policy, one of the main problems is that technological advancement is fast, while policy and democracy are very, very slow processes. And that could be a very big problem if we think that AI could potentially be dangerous.

With Twitter bots and a lot of AI in pop science, it’s kind of like staying up late with your parents. Once you ask to be treated like a human being, you have to abide by a different set of rules. You have to be extra good. And the second you misbehave, you get sent to bed. Because you didn’t play by the rules that you were agreeing to be judged by.

We’ve got two paradoxical trends happening at the same time. The first is what I call in my book “the cult of the social,” the idea that on the network, everything has to be social and that the more you reveal about yourself the better off you are. So if your friends could know what your musical taste is, where you live, what you’re wearing, what you’re thinking, that’s a good thing, this cult of sharing. So that’s one thing that’s going on. And the other thing is an increasingly radicalized individualism of contemporary, particularly digital, life. And these things seem to sort of coexist, which is paradoxical and it’s something that I try to make sense of in my book.