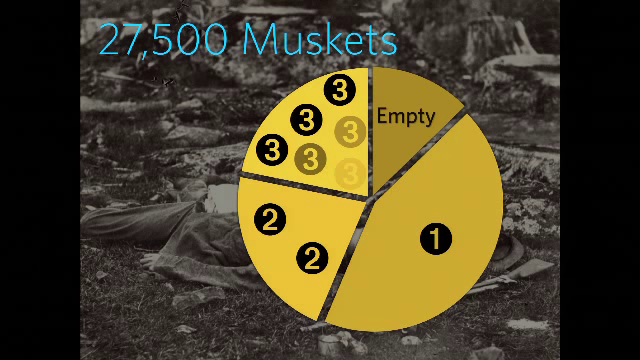

So, humans are actually remarkably averse to performing harmful actions. And paradoxically, one of the best examples of this is war. After the Battle of Gettysburg, 27,500 muskets were recovered, and the US Army expected to find almost all of them empty. That's because soldiers were trained to load a cartridge into the rifle and then, as soon as it was loaded, point it at the enemy and shoot. But when the Army analyzed the muskets that were collected, they found that only about 15% of them were empty. Maybe 45% or so were loaded with one cartridge. But amazingly, almost a quarter were loaded with two cartridges, one right on top of the other. And the remaining quarter or so were loaded with three or even more, sometimes up to twenty cartridges stacked one on top of the other.

And when the Army tried to figure out what was going on, what they realized was that the soldiers were loading and trying to shoot, but they couldn't pull the trigger. So they would pretend. And then they would load again, and pretend again, until finally somebody shot them.

The US military considers this an enormous problem, and you can understand why. It happens in conflict after conflict; it's not just the Civil War. Front-line GIs are really resistant to killing. But from my perspective, and I hope from yours too, this isn't a problem. It's an extraordinary opportunity to understand the emotional mechanisms that prevent humans from harming each other, and also to understand their limitations.

So in my lab we’ve been doing a lot of work on this. One of the most recent paradigms that we’ve used to try to get this under experimental control is to ask people to act out pretend harmful actions. So for instance, we’ll give them a disabled handgun. We’ll show them that it’s fake. That it couldn’t possibly harm a fly. We put it in their hands and then we ask them to shoot us in the head. Actually, most of the time it’s my graduate student.

Then we have them hooked up to measurement devices that record their blood pressure, heart rate, and so on. Those are reliable indicators of an aversive emotional response. And we find that even for a totally pretend behavior, people have a really dramatic emotional response.

But interestingly, if we ask them to just watch as one graduate student shoots another graduate student, the response is significantly weaker. And this teaches us something really important about the nature of our aversion to harm. Naïvely, you might think that the reason we don't like to shoot a gun is that we don't like the outcome: we don't like the fact that it's going to kill a person. But even though that's almost certainly true, it couldn't possibly explain the data I showed you. First of all, people knew that no actual harm was going to occur. And second, if they'd been worried about even an imagined outcome, they should have been just as worried when they were witnessing the shooting as when they were doing it themselves.

So it looks like part of our aversion to harm is really just an aversion to the physical action. It's an aversion to feeling the weight of the metal in your hand and wrapping your finger around the trigger. In a follow-up study, we created a new version of this where, instead of having people pull the trigger with their own finger, we tied a six-inch rope around the trigger and had them pull the rope. Under those conditions, the emotional response dropped by almost half. And this is really, really significant in an era when battlefield technologies are changing the kinds of physical actions that soldiers are called upon to perform, in some ways making them much more distant from their victims but, I think equally importantly, in other ways bringing them much, much closer.

And this isn't just a matter of war, either. All of you have technology in your pockets that in some ways makes you much, much more distant from other people and, in equally important ways, brings you much closer together. And this raises deep questions about how we should design and use that kind of technology.

But for me personally, what's most exciting about this research is that all around the world I have colleagues who are making great strides in understanding human learning: the neural and computational mechanisms that translate the experiences we undergo into the behaviors we perform. In my lab, we're trying to take these models and use them to understand moral behavior. And this presents us with a really big question, not just for me or for my lab but for the whole field of moral psychology: as we gain a better scientific understanding of human morality, is it possible that we can actually overcome some of its limitations?

Thank you very much.

Further Reference

This presentation at the PopTech site.