Anna Skelton: Hi everyone. Thanks for joining me. As he said, this is Deepfakes, Deep Trouble: Analyzing the Potential Impact of Deepfakes on Market Manipulation. My name is Anna Skelton and I am happy as heck to have y’all here with me today.

So, to start, a little bit about me. I studied global security and intelligence studies at a little school for nerds in the middle of the desert. I was picked up by GoDaddy initially, where I helped design their travel security program. And it was there that I was sent to my first DEF CON, which I went to as a complete and total infosec newbie. I met my mentor Mike, who gently guided me toward infosec and said maybe it wasn’t as scary as I thought it would be. I was then picked up by a large financial institution for an infosec generalist role, and have since transitioned into strategic cyber threat intelligence, which I absolutely adore. In my free time, I like hanging out in Harvey, my F‑150 pickup that I’ve converted into a camper, or snuggling up with my Siamese kitty cat Eleanor.

So, before we get started, I wanted to give a little bit of information on how I’m going to be delivering this talk. I believe that the barrier to entry for new topics in infosec should be lower. I know that some of you in this room definitely know more about this than I do, but some of you may not know anything at all. So when I explain things, I’m going to do so first in a very technical way, and then I’m gonna go over it again in a layman’s way. In my experience, even if you’re really comfortable with the material, hearing it explained in a different way can help shift and mature your perspective.

So you’ll find that this talk is very linear in the way it’s laid out. We’ll start with deepfakes, what are they? We’ll move into market manipulation and a little bit of an explanation of the markets. We’ll look at past examples of negative cyber influence on the stock markets. We’ll talk a little bit about my own misadventures and adventures in deepfake creation, then we’ll talk about future possibilities and wrap up by exploring some solutions.

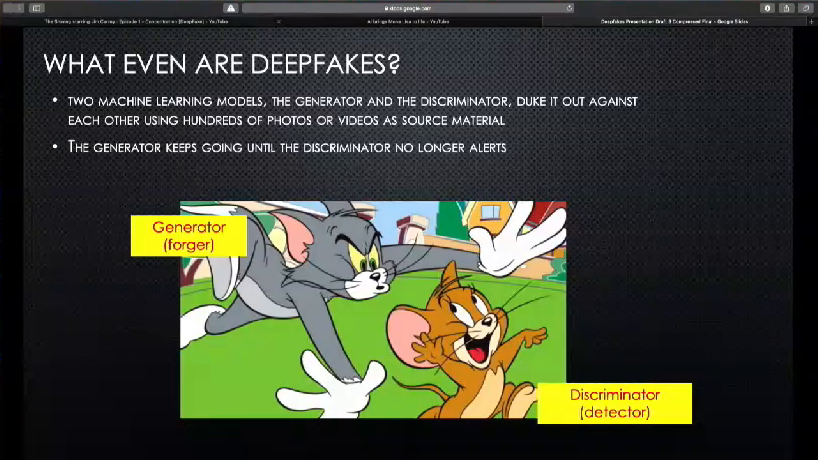

So let’s get started. Deepfakes! It’s quite the buzzword right now. The traditional deepfake model uses Generative Adversarial Networks (GANs) to exploit human tendencies. Basically, two machine learning models, the generator and the discriminator, go head to head. The generator produces the forged videos, and the discriminator tries to identify them as forgeries. They keep going and going and going, until the discriminator can no longer detect the forgery. So we’re gonna pop on over to YouTube here for a quick example of that.

https://www.youtube.com/watch?v=HG_NZpkttXE
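To make the generator-versus-discriminator loop concrete, here is a minimal toy sketch. It is not how deepfake tools are built: real generators are deep neural networks working on images. The assumptions here are all illustrative: the "real" data is just numbers drawn from a normal distribution centred on 1.0, the generator can only shift random noise by a learned offset `b`, and the discriminator is a single logistic-regression unit `D(x) = sigmoid(w*x + c)`. The function names and hyperparameters are hypothetical.

```python
import math
import random


def sigmoid(t):
    # Numerically stable logistic function.
    if t >= 0:
        return 1.0 / (1.0 + math.exp(-t))
    e = math.exp(t)
    return e / (1.0 + e)


def mean(values):
    return sum(values) / len(values)


def train_toy_gan(real_mean=1.0, start=-2.0, rounds=1000, d_steps=10,
                  lr_d=0.1, lr_g=0.02, batch=64, seed=0):
    """Toy 1-D GAN. Generator: fake = z + b with z ~ N(0, 1).
    Discriminator: D(x) = sigmoid(w*x + c), its belief that x is real."""
    rng = random.Random(seed)
    b = start        # the generator's only parameter
    w, c = 0.0, 0.0  # the discriminator's parameters
    for _ in range(rounds):
        # Discriminator turn: learn to score real samples high and fakes low
        # by descending the loss -log D(real) - log(1 - D(fake)).
        for _ in range(d_steps):
            real = [rng.gauss(real_mean, 1.0) for _ in range(batch)]
            fake = [rng.gauss(0.0, 1.0) + b for _ in range(batch)]
            d_real = [sigmoid(w * x + c) for x in real]
            d_fake = [sigmoid(w * x + c) for x in fake]
            grad_w = (mean([-(1 - d) * x for d, x in zip(d_real, real)])
                      + mean([d * x for d, x in zip(d_fake, fake)]))
            grad_c = mean([d - 1 for d in d_real]) + mean(d_fake)
            w -= lr_d * grad_w
            c -= lr_d * grad_c
        # Generator turn: shift b so the discriminator calls fakes "real"
        # by descending -log D(fake); its derivative w.r.t. b is -(1 - D) * w.
        fake = [rng.gauss(0.0, 1.0) + b for _ in range(batch)]
        grad_b = mean([-(1 - sigmoid(w * x + c)) * w for x in fake])
        b -= lr_g * grad_b
    return b, w, c
```

Calling `train_toy_gan()` should leave `b` close to 1.0, with the discriminator's output on real-looking inputs hovering near 0.5: the toy version of "the discriminator can no longer detect the forgery." Giving the discriminator several updates per generator update is a common way to keep this kind of toy training stable.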

So, I’ve never seen The Shining, and I probably will never see The Shining. But if you had told me that Jim Carrey was in The Shining and then showed me that video I would’ve been like yeah, okay. Like I can see it, sure.

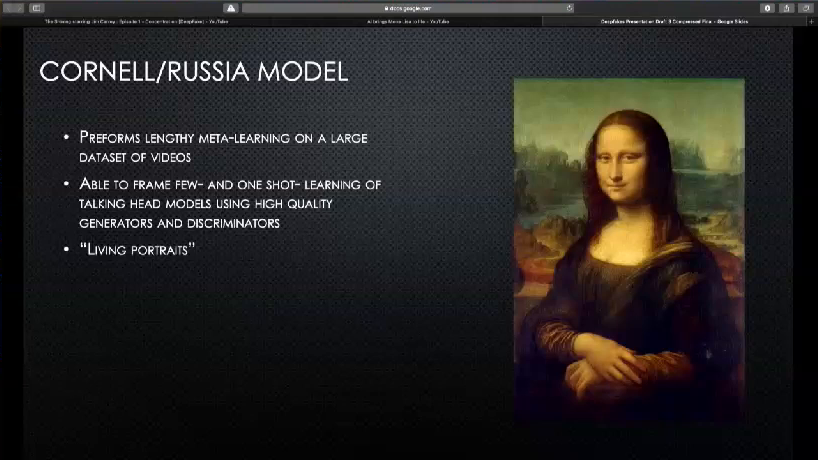

So there’s another model being developed that uses a few-shot capability. It’s coming out of a research team at Samsung’s AI Center in Moscow and the Skolkovo Institute of Science and Technology. It performs lengthy meta-learning on a large dataset of videos and is then able to frame few- and one-shot learning of talking head models as adversarial training problems, using high-capacity generators and discriminators. So in lay person’s terms, it watches a bunch of videos of people just talking, and then uses a few images of the actual target, or even just one, to create a living portrait.

Now, I bring this up because it shows that deepfakes, even as a concept, continue to grow and develop, right. What we know as deepfakes today is not where this stops; it’s going to keep developing as time goes by. Now we’ll pop back over to YouTube for another example of that.

https://www.youtube.com/watch?v=P2uZF-5F1wI

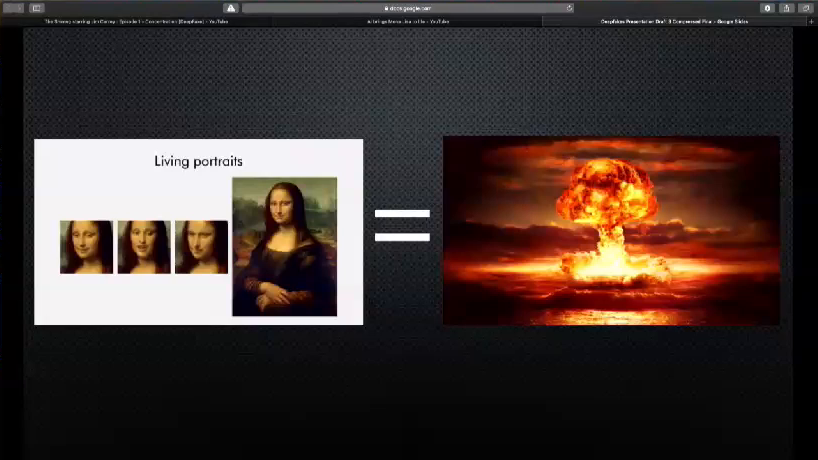

So that shows you how a single image of a painting done literally hundreds of years ago can be put into this format and come out as a living portrait that looks like, you know, she’s actually talking.
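The "meta-learn on lots of people first, then adapt from one or a few images" idea can be illustrated with a toy sketch. This is not the method from the talking-head research; it swaps in Reptile, a simple and well-known meta-learning algorithm, on a made-up family of tasks: fitting lines `y = a*x + c`, where each hypothetical "person" has slightly different `a` and `c`. The task family, step counts, learning rates, and function names are all assumptions for illustration.

```python
import random


def sgd_fit(params, points, steps, lr):
    """Plain gradient descent on mean squared error for y = a*x + c."""
    a, c = params
    for _ in range(steps):
        grad_a = sum(2 * (a * x + c - y) * x for x, y in points) / len(points)
        grad_c = sum(2 * (a * x + c - y) for x, y in points) / len(points)
        a, c = a - lr * grad_a, c - lr * grad_c
    return a, c


def sample_task(rng):
    # Hypothetical task family: every "person" is a line near y = 2x - 1.
    return rng.uniform(1.8, 2.2), rng.uniform(-1.2, -0.8)


def sample_points(rng, task, n):
    a, c = task
    xs = [rng.uniform(-1.0, 1.0) for _ in range(n)]
    return [(x, a * x + c) for x in xs]


def mse(params, points):
    a, c = params
    return sum((a * x + c - y) ** 2 for x, y in points) / len(points)


def reptile(meta_iters=1000, inner_steps=5, inner_lr=0.1, meta_lr=0.1, seed=0):
    """Meta-learning phase: learn a starting point that adapts quickly."""
    rng = random.Random(seed)
    init = (0.0, 0.0)
    for _ in range(meta_iters):
        task = sample_task(rng)
        adapted = sgd_fit(init, sample_points(rng, task, 10), inner_steps, inner_lr)
        # Reptile update: nudge the starting point toward the adapted weights.
        init = tuple(p + meta_lr * (q - p) for p, q in zip(init, adapted))
    return init
```

To see the few-shot payoff, take a new task, fit it from just three points starting at `reptile()`'s result and, separately, starting from zeros; the meta-learned start should give a much lower error on held-out points after the same handful of steps.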

And Washington’s starting to take notice of the deepfake threat, right. So Marco Rubio recently said that deepfakes were as dangerous as the threat of nuclear war, right. So we’re talking about some pretty serious stuff here.

As far as the laws go, the legality of deepfakes is kind of all across the board. In Virginia they recently passed legislation outlawing deepfakes as part of legislation combating revenge porn. In Texas there’s a law that goes into effect on September 1st that outlaws deepfakes used in elections. But if we just keep approaching this threat from a state-by-state legislative perspective, it’s going to be completely inadequate to cover the aggressive deepfake threat. There are two pieces of legislation about deepfakes in the House of Representatives right now, but neither of them is gaining much traction. And even if they do, we all know how long and arduous the federal legislative process can be, especially with a divided Congress.

So let’s take a minute now to introduce the market. So, within the market you have three different components. You have the currency markets, the equity markets—which is like the stock market, and bond markets, which are backed by the Treasury.

So, it would be hard for deepfakes to impact currency markets because they so intricately deal with the relationships between two different nation-states. So for the purpose of the talk, we’re just gonna go ahead and take currency markets out of it.

Within the equity markets you have the Dow Jones, which is very banking-heavy. You have the NASDAQ, which is very tech-heavy. And you have the S&P 500, which is kind of a mix of everything. It’s worth noting here that there is a trading curb rule, which is basically a fail-safe: if prices fall far enough, fast enough, it automatically halts trading. That’s important; we’ll come back to that.
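As a rough illustration of how a trading curb works, here is a sketch of the US market-wide circuit breaker levels, which are based on the S&P 500 falling 7% (Level 1), 13% (Level 2), or 20% (Level 3) below the prior day's close. Levels 1 and 2 pause trading for 15 minutes; Level 3 halts it for the rest of the day. Other markets use different thresholds. The sketch deliberately leaves out the time-of-day and once-per-day details, and the function name is hypothetical.

```python
from typing import Optional, Tuple

# US market-wide circuit breaker levels, based on the S&P 500's decline
# from the prior day's close: (level, minimum decline, what happens).
LEVELS = [
    (3, 0.20, "trading halted for the rest of the day"),
    (2, 0.13, "trading paused for 15 minutes"),
    (1, 0.07, "trading paused for 15 minutes"),
]


def circuit_breaker(prior_close: float, current: float) -> Optional[Tuple[int, str]]:
    """Return (level, action) for the most severe level breached, or None.

    Simplified sketch: ignores the 3:25 p.m. cutoff for Levels 1 and 2 and
    the rule that each of those levels can only trigger once per day."""
    decline = (prior_close - current) / prior_close
    for level, threshold, action in LEVELS:  # most severe level first
        if decline >= threshold:
            return level, action
    return None


print(circuit_breaker(3000.0, 2850.0))  # a 5% drop: None, no halt
print(circuit_breaker(3000.0, 2700.0))  # a 10% drop: Level 1
```

The thresholds are what make "sliding in under" the fail-safe possible: a manipulation that moves prices less than the first level never triggers a pause.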

So market manipulation is narrowly defined as artificially affecting the supply or demand for a security. So essentially this is manipulating, for example, a stock price using misleading information about a company, or an individual, or really anything at all. We can assume that some level of market manipulation happens every day, but deepfakes exacerbate the scale and risk of damage to the market because the market is so volatile. Today we’ll be looking at this from both a micro and a macro level. At a micro level, we’ll be looking at just impacting individual stock prices. And at a macro level, we’ll be looking at the potential for deepfakes to set off a domino effect that causes serious, widespread damage.

I’d also like to point out this like vaguely unsettling photo that comes up when you Google Image “market manipulation.” Every time I look at it it just makes me kind of uncomfortable.

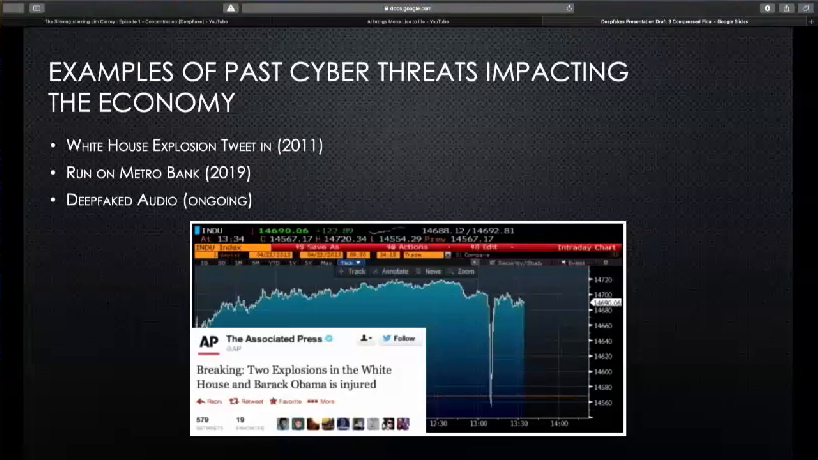

So, taking a quick look at past examples of cyber threats impacting the economy, you might remember in 2013 when the Associated Press’ Twitter account was hijacked and a tweet was published saying that there had been an explosion in the White House and the President was injured. Immediately, the Dow Jones plummeted, and the S&P 500 reportedly lost $136.5 billion in market capitalization, which is the value calculated by multiplying the number of shares by the price of those shares. As you can see, it quickly bounced right back up. But in this case a lot of the damage was caused by computerized trading algorithms that monitor social media and news sites and then automatically trade based on predetermined rules.
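Because market capitalization is shares outstanding times share price, a given percentage drop in the price erases the same percentage of market value. A quick worked example with made-up numbers for a hypothetical company (the function names are illustrative):

```python
def market_cap(shares_outstanding: int, price: float) -> float:
    """Market capitalization = number of shares outstanding x price per share."""
    return shares_outstanding * price


def value_lost(shares_outstanding: int, price: float, drop_pct: float) -> float:
    """Dollar market value erased when the share price falls by drop_pct percent."""
    return market_cap(shares_outstanding, price) * drop_pct / 100


# Hypothetical company: 2 billion shares trading at $50.
cap = market_cap(2_000_000_000, 50.0)        # 100 billion dollars
loss = value_lost(2_000_000_000, 50.0, 1.0)  # a 1% dip erases 1 billion dollars
```

Summed across every company in an index, even a brief dip of a few percent adds up to the kind of headline figure quoted for the S&P 500 above.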

You may remember in May of this year when there was a huge run on Metro Bank in the UK after a WhatsApp rumor said that Metro Bank was no longer liquid, and people were literally running into the streets, standing in line for hours waiting to pull all of their money out of this bank. It was completely a rumor, but this one turned physical. A lot of people who were leaving the bank were robbed of literally everything they had.

And even right now there’s deepfaked audio in which attackers are using the voices of executives of large financial institutions to basically bully lower-level employees into transferring money around into not-so-savory accounts. Already it’s been reported that this has taken three million dollars, and that was just at the end of June. And that’s just what has been reported, so you have to imagine that realistically, that number’s a lot higher.

So, I’m going to take a second here to talk candidly with you guys about my own adventures and misadventures in deepfake creation. So, when I started this project, I assumed the barrier to entry was like, here? [crouching down and indicating near the floor] Like, so low that literally like any Joe could like walk up to a computer and be like, “I made a deepfake!” right. And I was wrong. Now that I’ve gone through this process, I would say it’s probably somewhere more around like here, right? [indicating near waist level] So, it still is an accessible technology, especially if you have the time, the patience, and even just like an inkling of technical experience. I still think it’s a very viable threat vector.

So within my own research I uncovered two schools of thought. There’s DeepFaceLab, which is an active GitHub repository, and FakeApp, which was taken offline in early 2019 but is still available. Interestingly, both of these have Windows dependencies, and they both rely on Nvidia graphics cards. I also learned that if you’re using VMware, the VM cannot access the graphics card of the computer you’re using, which was interesting. So you need a system that, first of all, is running Windows, and then if you don’t already have an Nvidia graphics card, you’d have to use an external graphics card. That was actually really surprising to me.
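Given those two roadblocks, a quick preflight check can save hours of troubleshooting. A minimal sketch, assuming that a usable Nvidia GPU comes with the driver's `nvidia-smi` utility on the PATH and that it can list the card; a VM without GPU passthrough will typically not expose one. The function name is hypothetical and this is not part of either tool.

```python
import platform
import shutil
import subprocess


def preflight() -> dict:
    """Check the two roadblocks: a Windows OS and a visible Nvidia GPU."""
    report = {"windows": platform.system() == "Windows", "nvidia_gpu": False}
    smi = shutil.which("nvidia-smi")  # installed alongside the Nvidia driver
    if smi:
        try:
            result = subprocess.run(
                [smi, "--query-gpu=name", "--format=csv,noheader"],
                capture_output=True, text=True, timeout=10,
            )
            # A GPU counts as visible only if the query succeeds and names a card.
            report["nvidia_gpu"] = result.returncode == 0 and bool(result.stdout.strip())
        except (OSError, subprocess.TimeoutExpired):
            pass
    return report


print(preflight())
```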

I also ran into a couple of fun legal issues when I was creating my deepfakes. Got yelled at by a corporate lawyer. And he said, “You can only make a deepfake of yourself.”

And I said, “Lame.”

Like what, I could like literally be like, I could make a video saying like I really don’t like parmesan cheese. And then I could stand up and be like, “I didn’t say that. I would never say that.” So…kept pressing, and eventually convinced him to let me try to find somebody who works for the same financial institution I do who was willing to give like, written legalese permission that I could make a deepfake out of them. Some of you may recognize this guy on the screen here. This is David Mortman. He runs the CFP for BSides Las Vegas. He’s been around forever. Super cool guy. And super willing to let me make a deepfake of him. So that worked out really well.

So, as you can see here these are my… David’s no Kim Kardashian, right. But it was a very easy Google search to go in and find two videos to extract from that feature him from the head and shoulders up with nothing in front of the face. And actually this one down here would be considered subpar because it does have the microphone but look, I was just taking what I could get, alright.

So diving a little bit deeper into the two different schools of thought. DeepFaceLab, like I mentioned, is an active GitHub repo. It offers three different packages, which depend on your graphics card specs, and they’re available for download on Google Drive. It’s actively updated; the last update was just in July of this year. And it’s a lot less user-friendly, right. As you can see from this very blurry screenshot here, you’re essentially running your own commands on the video. So it’s a much more manual process, and that I think raises the barrier to entry considerably. The YouTube guides…subpar, right. Apparently, the guy who runs DeepFaceLab is just one guy in Russia? And his English is like nonexistent. So he epically Google-translated his entire readme. Which as you can imagine is…exceptional. Probably one of my favorite parts of this process. For instance, you can see down here kind of, “DeepFaceLab created on pure enthusiasm one person. Therefore, if you find any errors, treat with understanding.” Like…I love this guy already.
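For a sense of what running your own commands on the video involves: face-swap workflows generally begin by splitting each source video into still frames, before faces are detected and aligned. The sketch below builds (but does not run) an `ffmpeg` command for that first step. It is a generic illustration, not DeepFaceLab's own scripts, and the function name, file names, and frame rate are placeholders.

```python
import shlex
from pathlib import Path


def extract_frames_cmd(video: str, out_dir: str, fps: int = 10) -> list:
    """Build an ffmpeg command that saves `fps` frames per second as PNGs."""
    pattern = str(Path(out_dir) / "%05d.png")  # 00001.png, 00002.png, ...
    return ["ffmpeg", "-i", video, "-vf", f"fps={fps}", pattern]


cmd = extract_frames_cmd("source.mp4", "frames")
print(shlex.join(cmd))
# To actually run it (requires ffmpeg installed): subprocess.run(cmd, check=True)
```

Building the argument list rather than a shell string avoids quoting problems when file names contain spaces.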

But as you can imagine, the YouTube video he made to guide everybody through the process doesn’t have any audio. So if you’re coming into this as somebody who’s not used to following along with technical videos that move really fast, it would make things difficult. There are of course some where somebody has added audio, but overall I was not very impressed.

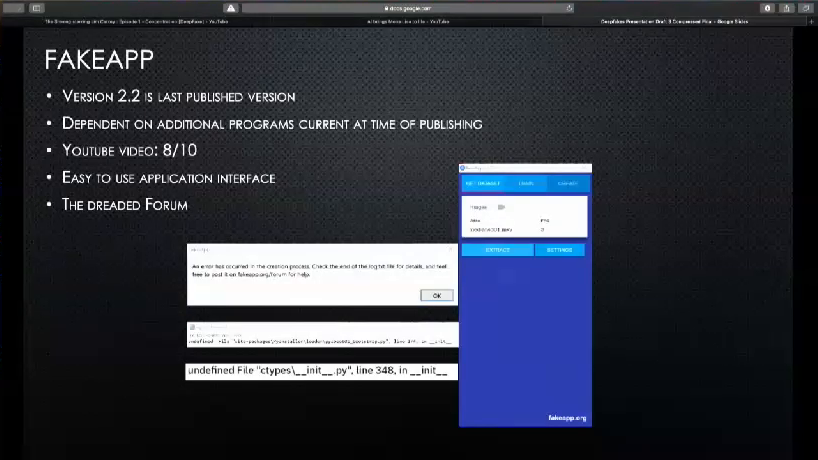

And then, there’s FakeApp. So, FakeApp was taken down in February this year. It was originally hosted on fakeapp.org, which has also since been taken down. The last published version is version 2.2. The majority of the issues I ran into with FakeApp are around this point right here. It has considerable dependencies that are very specific. So like I said, the last published version was from February of this year, and all of its dependencies require the exact software that was up to date in February of this year. So for instance, CUDA. It likes it, it needs it. CUDA’s now on version like 10.1.3 or something like that, and FakeApp requires CUDA version 9.7.1, with a specific patch set. It’s really annoying. Also Microsoft Visual Studio 2015. Exclusively.

So you can see… [laughs] Which is weird, right? Because you have to have a Windows license to get like older versions of Visual Studio. So, that’s where I ran into the majority of my issues. However YouTube videos…impressive. It’s just a guy, slowly walking you through everything step by step. Very easy to follow. And some of his videos rack up like 400- to 500,000 views, and they’re still getting views today, which means that I’m not the only one out here trying to get into FakeApp.
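Exact version pins like FakeApp's are easy to trip over once newer versions are installed. A minimal sketch of comparing installed versions against exact pins up front, so every mismatch shows up in one list instead of one cryptic error at a time. The component names and version numbers below are hypothetical placeholders, not FakeApp's real requirements, and how you discover the installed versions is left out.

```python
def parse_version(text: str) -> tuple:
    """'9.0.176' -> (9, 0, 176) so versions compare numerically, not as text."""
    return tuple(int(part) for part in text.strip().split("."))


def pin_mismatches(installed: dict, pinned: dict) -> list:
    """Return a message for every pinned component that is missing or differs."""
    problems = []
    for name, wanted in pinned.items():
        have = installed.get(name)
        if have is None:
            problems.append(f"{name}: not installed (need exactly {wanted})")
        elif parse_version(have) != parse_version(wanted):
            problems.append(f"{name}: found {have}, need exactly {wanted}")
    return problems


# Hypothetical pins and a toolchain that has moved on since they were set.
PINNED = {"gpu_toolkit": "9.0", "build_tools": "2015"}
INSTALLED = {"gpu_toolkit": "10.1"}
for line in pin_mismatches(INSTALLED, PINNED):
    print(line)
```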

The application interface is extremely easy to use, especially when compared with DeepFaceLab’s, right. So, you can see over here you essentially just direct it to where you want it to pull the video from, and then you click…“extract.” And then you go over to the video you want it to train from, and then you click…“train.” And then you get over to this tab where you create it, and you click…“create.” So it’s a lot more user-friendly, and even the application interface is so much more friendly than manually running the commands yourself.

However, there’s another issue with FakeApp. And that is…the forum. Okay, so apparently, I need to…figure out how to lower my voice to say that like more intimidatingly, like the forum. Something more like that. So apparently when fakeapp.org was still online, it had a page which was a forum where you could go and ask any and all of the questions that you ran into when you were using this. And I guess it was probably super helpful. So now, any time that you run into an error on FakeApp, it sends you this very polite message: “Check the end of the log file for details and feel free to post it on fakeapp.org/forum for help.” And you’re like no, I can’t.

So these are the two errors that I ran into approximately fifty times each, just trying to get through the extraction portion. Interestingly there are Reddit posts on both of these errors, but it’s literally like one guy being like, “Hey. I have this error.” And then another guy comes in and is like, “Dude! Me too.” And you’re just like come on guys, like where’d you figure it out? And then you remember that they probably used the forum.

So overall, FakeApp worries me way more than DeepFaceLab, because it’s way more accessible. What I’m concerned about is another FakeApp coming out that makes this accessible again, right. FakeApp isn’t online anymore; you have to go looking for it. But whatever comes out next is probably going to be even more accessible and easier to use.

So let’s take a second to talk about if you were able to create a really high-quality, sophisticated deepfake video. What could you do? So, earlier I mentioned the trading curb rule. It’s not enacted often, but it is enacted. So the last time it went into place was in China in 2016, when Chinese stocks fell 7% within twenty-seven minutes of opening.

So, in order to get around the trading curb rule, you’d either need to be sneaky enough to just slide right in under it, or you would need to create enough impact and enough damage that it just didn’t matter at all—even if it was triggered, it wouldn’t matter anymore.

So, to slide under the failsafe, you could perhaps make a deepfake video with super negative sentiment about a financial institution and then pass it around, even just on Twitter, even just among Twitter bots. When the trading algorithms connected to the Twitter API pull that data to adjust their trades based on predetermined rules, they would see that negative deepfake sentiment, and it could potentially impact the price of that stock. So that’s a way you could slide in right under that threshold, hopefully without raising too many red flags right off the bat.
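To make "predetermined rules" concrete, here is a deliberately naive, hypothetical rule-based signal: it looks at recent posts mentioning a ticker and flags a sell if enough of them contain negative keywords. Real trading systems are far more sophisticated and proprietary; the word list, threshold, ticker, and function name are invented for illustration. The weakness described above is visible even here: the rule has no way to tell whether the posts come from bots or whether the video they reference is real.

```python
import re

# Hypothetical lexicon; real systems use trained models, not a fixed list.
NEGATIVE = {"insolvent", "illiquid", "collapse", "fraud", "bankrupt"}


def sentiment_signal(posts: list, ticker: str, threshold: float = 0.5) -> str:
    """Return "SELL" if enough posts mentioning `ticker` use negative words."""
    mentions = [p.lower() for p in posts if ticker.lower() in p.lower()]
    if not mentions:
        return "HOLD"
    negative = sum(
        1 for p in mentions if NEGATIVE & set(re.findall(r"[a-z]+", p))
    )
    return "SELL" if negative / len(mentions) >= threshold else "HOLD"


posts = [
    "$ACME CEO says the bank is illiquid",  # e.g. a bot sharing the deepfake
    "Watch: $ACME collapse video",
    "$ACME earnings call at 5pm",
]
print(sentiment_signal(posts, "$ACME"))
```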

If you wanted to cause a lot more damage, though, you could take a slightly different route. So, let’s start with the Dow Jones, since it’s very banking-heavy. Say you release a deepfake video of the CEO of a large corporation. It’s high-quality, and he says, “Yo. My firm is no longer liquid.” We saw in the case of… probably not like that, though. We saw in the case of Metro Bank that this takes effect immediately, and even liquidity issues on their own are enough to cause people to run into the streets, pull out their assets, and cause lasting enterprise impact to that financial institution.

That’s just if it doesn’t take hold in the stock market. But let’s say it does. So, next you could— So you’ve already affected the Dow Jones. The next thing you could do would be to release another video, maybe even using the same CEO blaming a specific tech company for the damage caused by the first video. Now you’re affecting not only the Dow Jones but also the NASDAQ and the stocks that reside there. As you can see, it would not be hard for this to quickly spiral out of control and the trading failsafe rule wouldn’t even be able to stop it.

So, let’s talk about if you wanted to affect the bond market. This would be more of a long shot, because the bond market is backed by the Treasury. You’d need to call into question the ability of the United States to pay off its debts, right. So this would be perhaps a deepfake video of the Chairman of the Fed, or of a high-ranking member of Congress. And what you’re looking to do here is essentially undermine the belief of other nations, and even our own nation, that we can pay off our debts. And this might not sound too out of place, especially when you consider that the budget negotiations for 2020 are coming up this fall and they’re already raising a significant number of red flags. Especially with the extended government shutdown that happened the last time we did budget negotiations, it doesn’t seem out of place to think that serious damage could be caused with a high-quality, sophisticated deepfake video.

So let’s talk about solutions. By nature, right, deepfake technology exists to never be detected. It exists to make itself good enough, as do many other AI concepts, that it can’t be told apart from reality. So in that way, you really need a short-latency solution. But let’s start by discussing the longer-latency solutions, just because those are actually a little bit more built out.

So the University of Rochester recently released a study saying that they can use integrity scores to essentially grade the videos that you see. It would be a browser extension, and it would color-code the video based on how much of reality it really reflected. There’s a startup called Amber Authentication which is using cryptographic hashes to discern deepfake videos. There’s a very vague startup called New Knowledge that, for the low, low price of $500,000, says that it will protect your company from the spread of misinformation. But that’s as specific as it goes, and I’m convinced they’re not really sure how they’re going to do it, either. And of course we could not get through a talk about deepfakes without talking about blockchain. There are several different companies, Factom being one of them, using blockchain to discern between deepfaked and real videos, with varied levels of success.
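The cryptographic-hash idea can be sketched with Python's standard library: fingerprint a video file's bytes when it is recorded, then re-hash it later, and any edit, including a face swap, changes the digest. Chaining each record to the previous record's hash gives the append-only, tamper-evident log that blockchain-based approaches build on. This is a generic illustration, not Amber Authentication's or any other company's actual design; the record format and function names are assumptions.

```python
import hashlib
import json


def fingerprint(data: bytes) -> str:
    """SHA-256 digest of the raw video bytes."""
    return hashlib.sha256(data).hexdigest()


def _record_hash(body: dict) -> str:
    return hashlib.sha256(json.dumps(body, sort_keys=True).encode()).hexdigest()


def append_record(log: list, video_id: str, data: bytes) -> None:
    """Register a video; each record's hash also covers the previous record."""
    prev = log[-1]["record_hash"] if log else "0" * 64
    body = {"video_id": video_id, "digest": fingerprint(data), "prev": prev}
    body["record_hash"] = _record_hash(body)
    log.append(body)


def verify(log: list, video_id: str, data: bytes) -> bool:
    """True if the chain is intact and `data` matches the registered digest."""
    prev, registered = "0" * 64, None
    for rec in log:
        body = {k: rec[k] for k in ("video_id", "digest", "prev")}
        if rec["prev"] != prev or rec["record_hash"] != _record_hash(body):
            return False  # the log itself was altered
        prev = rec["record_hash"]
        if rec["video_id"] == video_id:
            registered = rec["digest"]
    return registered is not None and registered == fingerprint(data)
```

Note that this only proves a file is unchanged since it was registered; it says nothing about a video that was never registered in the first place.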

But like I said, these all need videos to be online for a longer period of time in order to actually be effective. And the market’s so volatile that we need something that is a short-term solution that can immediately come into place to stop that damage before it happens. So, as far as I’ve found the best option we have right now is just to monitor for the development and release of accessible deepfake software, on both above- and below-ground markets. Looking for the next FakeApp. Looking for the next thing that’s going to allow anybody with the time and the patience to just sit down at their computer and create a damaging video.

Ultimately, in order to reach these solutions, it’s gonna need a lot of different aspects. Human review, collaborative research, collaborative sharing platforms, a whole slew of things just to be able to start approaching the threat. But, I think we can do it, and honestly we really don’t have a choice.

So, throughout this project I was definitely too blessed to be stressed. And I was stressed anyway. John Seymour, who introduced me, was my BSides LV Proving Ground mentor. He’s awesome. David Mortman of course let me use his face, which I appreciate. And all of these other folks were just super, super helpful as well. At the bottom here is my Twitter handle. It’s the best way to reach me for questions, comments, qualms, or general existential tidbits, any and all of these things. And that’s my talk for today, so thank you all for joining me. Questions?

Audience 1: [inaudible]

Anna Skelton: No, I haven’t. So, one of the big issues with that type of solution is volume issues. So if you can imagine you know, trying to apply that to every video that exists or get the right metadata to actually reflect that and apply it to all the different videos that exist, that’s where I see that potentially running into complications. I haven’t done any research on that but that’s definitely something to look into for the future.

Anybody else?

Audience 2: [inaudible]

Skelton: So, that’s a really good question. On that topic, I am a glass half empty person. I genuinely believe that deepfakes will continue to evolve faster than our detection mechanisms can evolve to keep up with them. Yeah.

In the back.

Audience 3: [inaudible]

Skelton: I have not looked into that. I don’t know what goes into making any sort of chatbot. That’s definitely food for thought.

Audience 3: [inaudible]

Skelton: So, I was not successful in making my deepfake. I did run out of time. I definitely should’ve started earlier. And I definitely did not have the right technology going into it. Like I said the Windows dependencies and the Nvidia graphics card dependencies are weirdly like, big roadblocks. But I spent probably sixty to eighty hours working on it. A large part of that was just weird troubleshooting and making sure I had the right technology. If you walked in with a Windows computer with an Nvidia graphics card you could cut off sixty of those hours.

In the red shirt.

Audience 4: [inaudible]

Skelton: That’s a good question. I would assume to some extent, yes. But like I said with that guy’s question, at that point it’s a volume issue as well. And another point to bring up here is: even if you can detect that a video is fake, if it’s already been seen, does it even matter?

Yes, you in the glasses.

Audience 5: [inaudible]

Skelton: Well, I think we know, with FaceApp being sent directly to Russia, and this one guy out of Russia making DeepFaceLab, that they probably have their own deepfake software… That’s actually a really good question. So, when I originally started this project I had to decide if I wanted to look at it from the perspective of a nation-state, or from the perspective of perhaps a lower technical capability hacktivist group. And I ultimately decided to go at it from the lower capability hacktivist group. I deal with nation-states in my line of work now, and I have learned that you really can never exactly guess how capable they are, and they’re probably way more capable than you think they are. So yeah, that’s why I scoped it to a hacktivist group, but yeah, I’m sure they have their own technology.

You and then you. In order of seat row.

Audience 6: [inaudible]

Skelton: So, they’re using… The traditional model, like I said, uses the generator and the discriminator, the traditional adversarial model. I don’t know a lot about module encoders, so I couldn’t…

Audience 6: [inaudible]

Skelton: What?

Audience 6: [inaudible]

Skelton: Oh, auto encoder. I thought you were saying module encoders and I was like what is that?

Audience 6: [inaudible]

Skelton: Right. Yeah. Yeah I think to some extent the technology is similar. But I mean, I literally came into this knowing zero things about deepfakes, and all I know is what I learned. So yeah, still learning. Always.

Audience 7: [inaudible]

Skelton: Yeah, absolutely. I mean, I think, you know, that’s just it: is that the next step? Do they commercially release that to the public, or is it like… you know, when they recently released the BlueKeep POC and everyone was like, “Don’t do that,” and they were like, “Oh, it’s only for people that subscribe.” But yeah. I mean, I think it’s just waiting to see if they’re going to release that software. I absolutely believe it. I think we know that they have the capability to develop it. But yeah, whether they’ll make it commercially available is the question. Hopefully not.

Okay. I don’t see any more hands, so I’m gonna go ahead and say thank you all for joining me today, and I hope you enjoyed it.