When Darius asked me to speak, I had to think a little about what I would say to people who make software agents, which I think is really, really cool. In thinking about it, I asked myself: what is a bot to me? A bot is fundamentally a piece of software that involves personality. I’ve had a long-running interest in building physical robots with varying degrees of personality, so I proposed to give a talk about that, and I’m going to go from there.

So here’s me. In college I did research in a robotics group dedicated to building machines that interact with people in sociable ways. I’ll give a little overview of some of the things I worked on in that lab and some of the lab’s other projects, then talk about what personality is, and at the end say a little about how machines can perhaps generate it and what software robots can take from that.

So, these are the two main projects I worked on in this lab. On the top left is Jeff Lieberman, the grad student I worked under, wearing a shirt with markers that let you track his body. We then created software that used feedback to train you in how to move. The idea was to use it either in therapeutic cases or perhaps to help someone with their tennis game.

At the bottom, and far more relevant here, is Autom, a robot I worked on. It’s not a very tall robot; it’s about the size of a torso, and it sits on your desk. When you walk in, it looks at you, and its eyes track you. It speaks to you with a generated voice. The project started before me, but I worked on it in 2006 and 2007, so this was before Siri was a thing. It also had a touchscreen, which is an important part of something I’ll describe later: as it speaks to you, you respond by touching the screen on its chest.

This group was started by Cynthia Breazeal. (A quick shout-out to Professor Breazeal.) She built what is credited as one of the first socially interacting robots. That’s her with Cog, as a PhD student in Rodney Brooks’ lab.

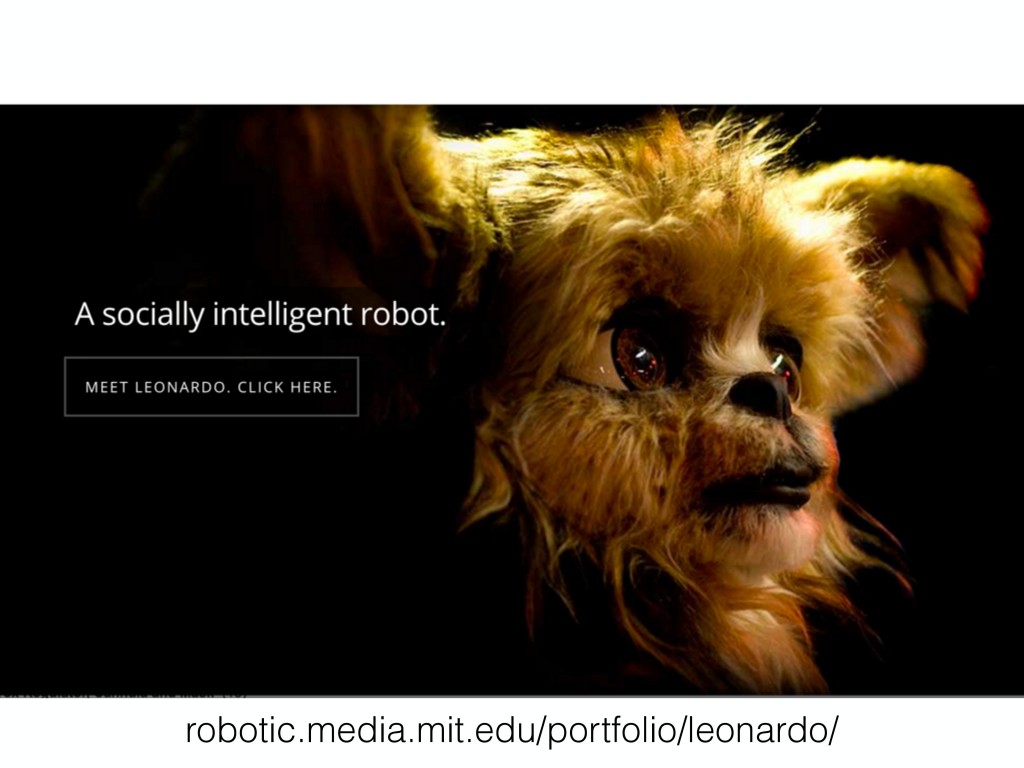

Here’s the first of three robots I’ll give as examples of work done in this lab. I think this is a really interesting robot. This is a robot called Leonardo. Leonardo was built in collaboration between this robotics group and a prop shop that builds creatures professionally; you can probably tell this was not the sole work of a bunch of MIT students. Leonardo lives in a room that is instrumented with cameras, so it can tell where people are. That let researchers use Leonardo to build a system in which the robot can appear to recognize objects.

So, one of the experiments they did was a shell game. You’d have an object and hide it, and the grad student would ask Leonardo where the object was. Leonardo could understand the spoken request and then point to where the object was. They developed this to the point where the grad student, Matt, could leave the room, put on a hood, and come back as a villain (someone Leonardo didn’t recognize, because his face was covered) and move the object. Then Matt would leave again, come back as himself, and ask Leonardo, “Oh, where’s the object? Is it still where I left it?” And Leonardo was capable of expressing, non-verbally, using only facial expressions, that it knew something Matt didn’t know. That’s pretty advanced. It’s considered roughly four-year-old-level cognitive development: understanding that I know something you don’t, and that we can have different impressions of what things are like. This is really cool.
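For bot builders, the shell-game setup can be sketched as a tiny belief tracker: the robot records where the object really is and where each person last saw it, so it can notice when someone’s belief is out of date. This is a hypothetical illustration, not Leonardo’s actual software; the class, its methods, and the location names are all invented, and the sketch assumes the robot itself observes every move.

```python
from dataclasses import dataclass, field
from typing import Dict, Optional, Set


@dataclass
class BeliefTracker:
    """Hypothetical sketch: the robot sees everything; people only see moves made while they are present."""
    true_location: Optional[str] = None
    beliefs: Dict[str, str] = field(default_factory=dict)  # person -> where they last saw the object
    present: Set[str] = field(default_factory=set)

    def enter(self, person: str) -> None:
        self.present.add(person)

    def leave(self, person: str) -> None:
        self.present.discard(person)

    def move_object(self, location: str) -> None:
        self.true_location = location
        # Only the people in the room witness the move.
        for person in self.present:
            self.beliefs[person] = location

    def has_false_belief(self, person: str) -> bool:
        believed = self.beliefs.get(person)
        return believed is not None and believed != self.true_location


# The shell-game scenario from the talk (location names are hypothetical).
tracker = BeliefTracker()
tracker.enter("Matt")
tracker.move_object("left box")
tracker.leave("Matt")
tracker.enter("hooded stranger")  # the robot does not recognize this person as Matt
tracker.move_object("right box")
tracker.leave("hooded stranger")
tracker.enter("Matt")
print(tracker.has_false_belief("Matt"), tracker.true_location)
```

The point of keeping a separate belief per person, rather than one shared world state, is that “I know something you don’t” only becomes expressible once the two can diverge.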

This next one went in a different direction. This is Ryan Wistort’s work, and I really liked these robots. If anyone’s familiar with Keepon, a cute little robot, they’re a little bit like that. I’m including them because Leonardo sits at one end of a spectrum: something built with extreme complexity and very detailed features. These robots, Tofu, are basically at the other end. They’re animatronic puppets, and they were an exploration in using techniques borrowed from animation. Basically they squash down and then stretch up, and all of their emotional expression comes from their eyes and that ability to squash and stretch. What I learned from these is that you can have very advanced interaction on the one hand, but you can also have quite a rich interaction with something far simpler.

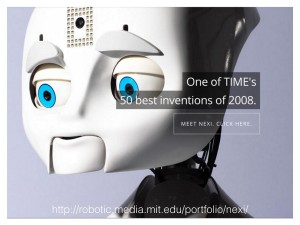

And lastly, this was the flagship robot of this research lab, a robot called Nexi. As you can tell, Nexi has a very advanced face, quite high-end for a research robot. It has a jaw that moves. Its eyes move left, right, up, and down; it can track your eyes, and it can blink. And it has actual robotic eyebrows with multiple degrees of freedom. So it’s capable of making just about any facial expression you could want, and it can appear to hold a real conversation. It’s also on a mobile platform and has hands, so it was called the mobile, dexterous, social robot. It can do all of these things.

That was really neat, because it opened up an avenue for more testing. This is research Jin Joo Lee did, which I find really, really funny. I think it has to be one of the more uncomfortable experiments you could run. Because Nexi has hands, they wanted to find out what it takes to make someone feel more trust, or less trust, in a robot. So this starts to get into building a personality. Jin Joo Lee worked with a team of psychology students at Northeastern University who had identified a few behaviors that seem to make people trust someone less, and they wanted to test whether those behaviors in fact inspire a lack of trust. Those are things like someone touching their face, leaning back, crossing their arms, or touching their hands.

Those are all behaviors identified as inspiring a lack of trust, so they had Nexi perform them in these psychology experiments. I’m merely an armchair psychologist, but to me the guy looks pretty uncomfortable there. This was seen as a really cool thing to do with a robot in a research context. You could have a grad student or someone you’ve hired, an actor maybe, come and do this experiment, but that person is going to have other things associated with them. Maybe they’re in a bad mood today and no one trusts them; you know, it’s a Tuesday. Who knows? But the robot can do exactly the same thing every time.

This is fascinating to me. And of course the result was that people did trust the robot less. That’s a mind-blowing sentence to me, but on another level it’s intuitive and makes perfect sense.

So this raises two questions for me. One is: how do you understand affect at all? The other is: how do you understand what the person you’re interacting with is feeling? On that second part, Nexi of course had a voice, and I think one of the earlier speakers talked about what tone of voice you use. We’ve also discussed gender in robotics. So I wanted to raise for this audience a related and very interesting study, not from the same lab but from a different one, where the researchers were building, basically, cars with a built-in software agent.

Drivers who interacted with voices that matched their own emotional state had less than half as many accidents on average as drivers who interacted with mismatched voices!

Matching In-car Voice with Driver State: Impact on Attitude and Driving Performance

In short, they wanted to add a voice to the car that you would interact with and that would tell you helpful, useful things. In doing this, they wanted to know whether this voice could influence people to be happier. Which sounds horrible, right? But could you make it an excited voice and have that make people feel more excited? How does that interaction work? What they found is that people preferred to interact with an agent whose voice matched their own affect. So if you were depressed, you’d feel better talking to a depressed robot, and if you were just a happy person, you would prefer talking to a happy robot. So affect detection became really important and interesting.

So that brings me back to Autom, the robot I worked on. What you see here is a later model: Cory ended up leaving the lab and selling them as a product. It has the same touchscreen on the front, and you can see the UI that was built for Autom. There are three buttons, and again, this is a touchscreen, so Autom speaks and the person responds. I thought this was extremely clever. The solution found here for figuring out how someone feels is to offer three responses. Autom says, “Hello. I see it’s late, so I’ll help you get through this quickly and start making progress toward your goals.” Autom is basically a really advanced food diary: you interact with it, tell it what you ate, and it helps you maybe lose weight or whatever.

And working out what sort of mood a person is in is done right here in this interface. These three answers are exactly equivalent; they all just take you to the next screen. The first one is like, “Hi.” The second one is “Good to see you.” And the last one just says “Okay.” Watching people use this was fascinating: people would select the one that matched their own internal voice. They wouldn’t even register the other possibilities. This was one of the lessons from building robots that has tremendously shaped my sense of how people perceive personality in robots.
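For a software bot, the same trick can be sketched in a few lines: offer replies that all lead to the same next step, read the choice as a mood signal, and pick a matching voice, in the spirit of the in-car study’s finding that people preferred a voice matching their own affect. This is a hypothetical sketch, not Autom’s code. The talk does not say which reply corresponds to which mood, so the mapping below is an assumption, as are the voice settings (`rate`, `pitch_semitones`) and the function name.

```python
from enum import Enum


class Mood(Enum):
    UPBEAT = "upbeat"
    NEUTRAL = "neutral"
    SUBDUED = "subdued"


# Hypothetical mapping: which reply signals which mood is an assumption,
# not something the talk specifies.
GREETING_TO_MOOD = {
    "Good to see you": Mood.UPBEAT,
    "Hi": Mood.NEUTRAL,
    "Okay": Mood.SUBDUED,
}

# Hypothetical voice settings so the agent can answer in a matching register.
VOICE_STYLE = {
    Mood.UPBEAT: {"rate": 1.1, "pitch_semitones": 2},
    Mood.NEUTRAL: {"rate": 1.0, "pitch_semitones": 0},
    Mood.SUBDUED: {"rate": 0.9, "pitch_semitones": -2},
}


def handle_greeting(choice: str) -> tuple:
    """Every reply leads to the same next screen; only the inferred mood differs."""
    mood = GREETING_TO_MOOD.get(choice, Mood.NEUTRAL)  # unknown input: assume neutral
    return "next_screen", mood, VOICE_STYLE[mood]


screen, mood, voice = handle_greeting("Okay")
print(screen, mood.name, voice)
```

The design choice worth copying is that the buttons never change the flow: the user is never asked “how do you feel?”, yet the bot still gets a signal it can match.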

So at this point I became sort of obsessed with the idea of how you put personality into a thing. The idea that people would feel less trust in a robot… it’s a pile of bolts and computers, right? Why would you feel one way or another? So I started trying to ask: what is personality? We describe some people as having a lot of personality. So what is that? What’s going on when someone has a lot of personality?

That led me (not directly, I promise) to Tom Waits, the musician. To describe how he relates to this: a variety of characters come out in his work, and you can tell from this picture that he’s very expressive. In the way that very exuberant people in their early twenties will, I would go around this robotics lab trying to shake people by the shoulders and say, “Why can’t we build a robot with as much personality as Tom Waits?” And my older friends, the grad students, were like, “Can we start with something a little easier?”

But nevertheless, I went on exploring. So what makes Tom Waits so full of personality? I think it’s a few things. First, he’s very expressive: he does things that are very emotional and that inspire emotion in others. Second, it’s generative: these things come from within him somehow, perhaps in response to an environment, but they’re definitely of Tom Waits. And third, they’re unexpected. If tomorrow one of us were to wake up and say, “I’m going to do Tom Waits today,” you couldn’t, right? You couldn’t even attempt it. What he does is not predictable.

https://www.youtube.com/watch?v=_RyodnisVvU

From there I want to share a little video. It’s short, but hopefully also amusing. This is my favorite robot, and the best robot I’ve ever found that incorporates personality in the sense I mean, with all three of those qualities. This is the Little Yellow Drummer Bot, made by a guy named Frits Lyneborg, who’s behind letsmakerobots.com. I’ll just go ahead and show you. It’s a musical robot.

So you can see this robot’s behavior doing a lot of those things. It’s very expressive, right? It’s very difficult to see that robot and, you know, I heard some people laughing [inaudible]. But it gives you a sense of joy, I think. It gives me one, anyway. It’s generative, so it’s doing something new every time: it plays and then listens, recording itself, and then plays against its own recording of the surface it’s drumming on. And it’s unexpected, right? It’s responding to its environment. It’s based on some rules, but you don’t really know what’s going to happen each time.
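The play-record-respond loop described above can be sketched to show how a handful of simple rules still yields output you can’t predict. This is a hypothetical illustration, not the Little Yellow Drummer Bot’s actual firmware: the 16-step bar, the opening pattern, and the probabilities are all invented, and audio is reduced to a list of hits (1) and rests (0).

```python
import random
from typing import List, Optional

STEPS = 16  # assumed: one bar of sixteen steps


def answer_bar(heard: List[int], rng: random.Random) -> List[int]:
    """Play a new bar (1 = hit, 0 = rest) in response to the last recording.

    Hypothetical rules: echo a heard hit with probability 0.5, and fill a
    silent step with probability 0.3. Simple rules, unpredictable output.
    """
    bar = []
    for step in heard:
        p_hit = 0.5 if step else 0.3
        bar.append(1 if rng.random() < p_hit else 0)
    return bar


def jam(rounds: int, seed: Optional[int] = None) -> List[List[int]]:
    """Play, record, then play against the recording, `rounds` times."""
    rng = random.Random(seed)
    recording = [1 if i % 4 == 0 else 0 for i in range(STEPS)]  # opening pattern on the beat
    bars = [recording]
    for _ in range(rounds):
        new_bar = answer_bar(recording, rng)
        bars.append(new_bar)
        recording = new_bar  # the robot "records itself" and listens to that next
    return bars


for bar in jam(4, seed=7):
    print("".join("x" if hit else "." for hit in bar))
```

Each bar depends on the one before it, so the performance is generative and responsive, while the randomness keeps it unexpected; fixing `seed` makes a run repeatable for testing.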

So for me, those are the answers: expressive, generative, and unexpected. Those are the things I would think about, and what I’d leave you with here at Bot Summit as you build your cool software, which I want to interact with and find out how it works.

Thank you very much.

Further Reference

Darius Kazemi’s home page for Bot Summit 2016.