So, when I think of the cyber state, I might be thinking of the usual stuff, which is governments of the world and their intelligence agencies gathering information on us, directly or indirectly, through relations or compulsion with private companies. But I want to think more broadly about the future of the cyber state, and think about accumulations of power both centralized and distributed that might require transparency in boundaries we wouldn’t be used to. Anything ranging from Google Glass or whatever its successors might be, so that we could find ourselves live streaming out of this room at all times and have that telemetry automatically categorized as to with whom we’re having conversations.

And imagine even the state then turning around and saying in the event of something like the Boston Marathon bombing or in Paris, “Anybody near this event is going to have telemetry that we will now request from patriots,” or demand from those who are a little chary, and see just how much data can be accumulated from a peer-to-peer kind of perspective.

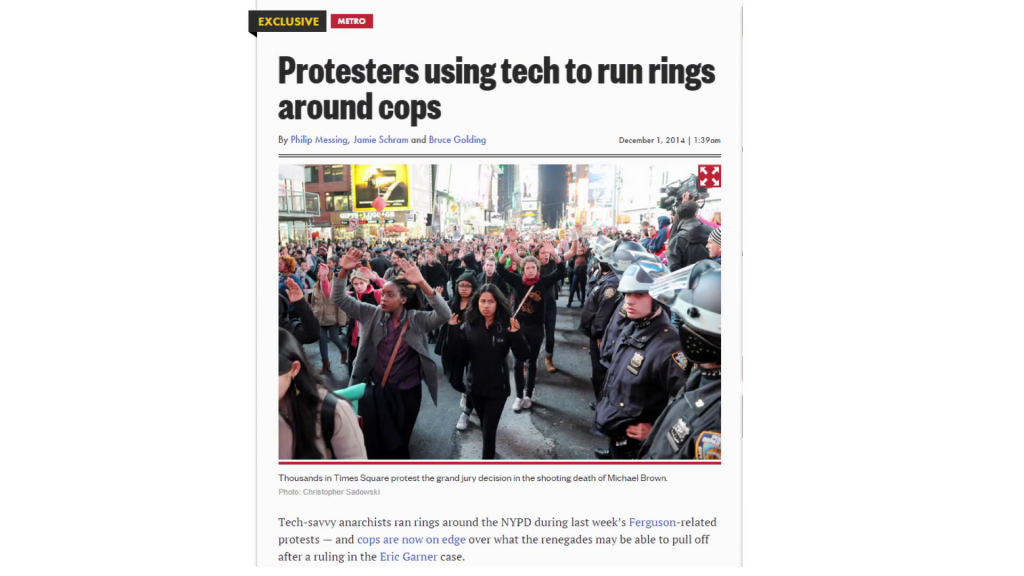

But I think the state won’t just be getting assistance from it; it’ll be challenged, too. This is an app called Sukey, in which demonstrators can put into the app where the police are as they’re demonstrating, and the app will then show you the still-open exits to wherever you are that the police haven’t gotten around to closing off yet. And that’s the kind of thing that worries the authorities.

But there’s a third dimension, too. And that is, how are these folks deciding to convene for a protest? It might be through something like Facebook or Twitter, or other social media. In this wonderful example, Facebook was able in the 2010 US congressional elections to get more people to vote by putting notifications in their news feeds of where the polling places were. Now, they could do it selectively, and they’ve got a lot of data by which to do that.

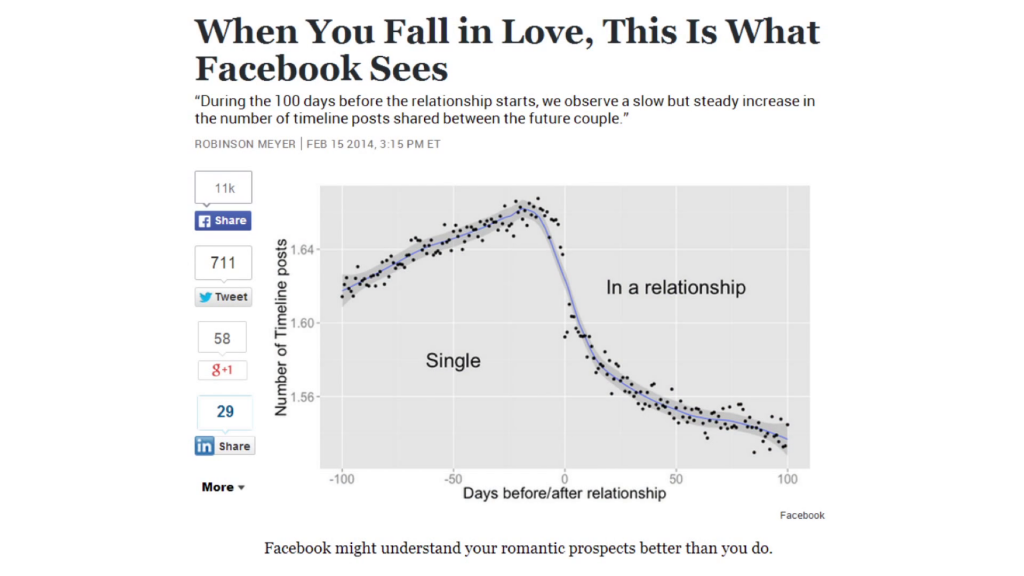

Here’s Facebook able to predict when two Facebook users are about to enter into a romantic relationship, before either of the people apparently knows. And you could imagine Facebook, hypothetically, tweaking the feeds to encourage the people to come together by showing their good sides. Or maybe if they don’t belong together, pushing them apart, and they shall never be.

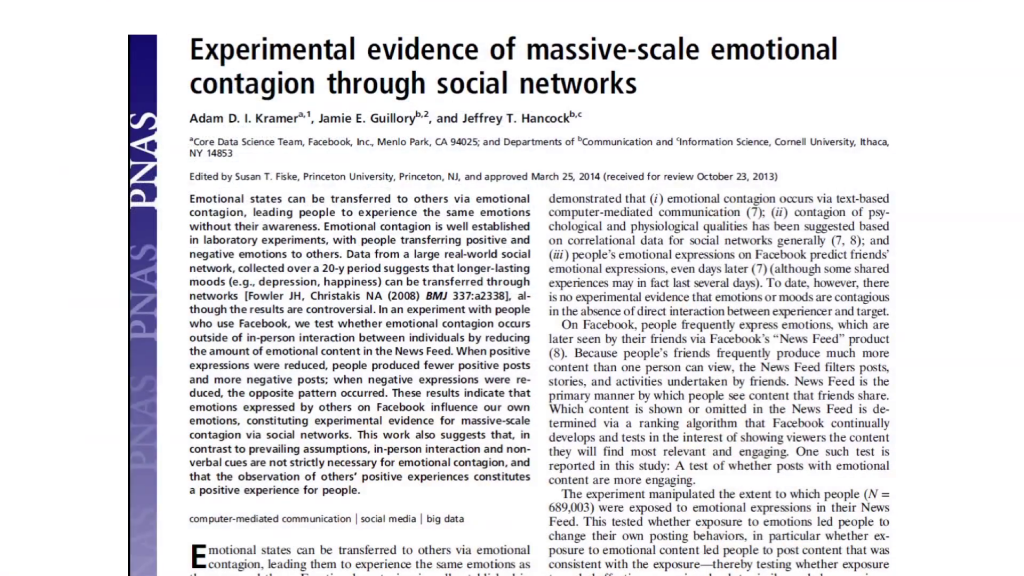

In this highly controversial study, there was evidence, maybe not surprising, that if your feed is filled with happy things you get happier, and if it has unhappy things you get unhappier. Which, if you think about it, especially for those in a younger generation who are getting their information from Facebook, Twitter, et al., means their happiness level, whether they feel the world is falling apart or coming together, can be determined through these sorts of recondite algorithms.

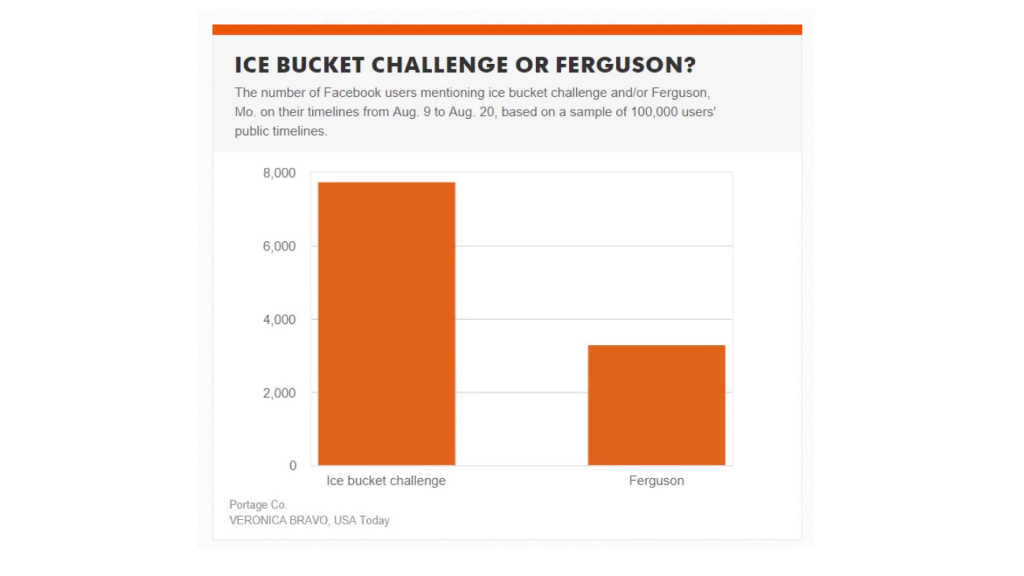

And you could see, some day, Facebook being challenged: maybe we should put in more Ice Bucket Challenge and a little bit less of the stuff coming from Ferguson, Missouri, if there’s unrest there, in the name of public safety. We should be thinking about how that should be dealt with.

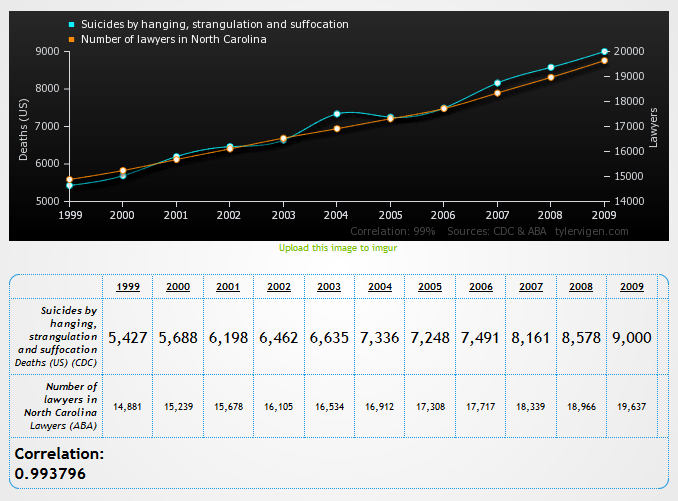

Image: Spurious Correlations

Now, the data that will be used to do this isn’t always solid. Here you can see a very high correlation between suicides by hanging and the number of lawyers in North Carolina, which as far as I can understand are not related at all.

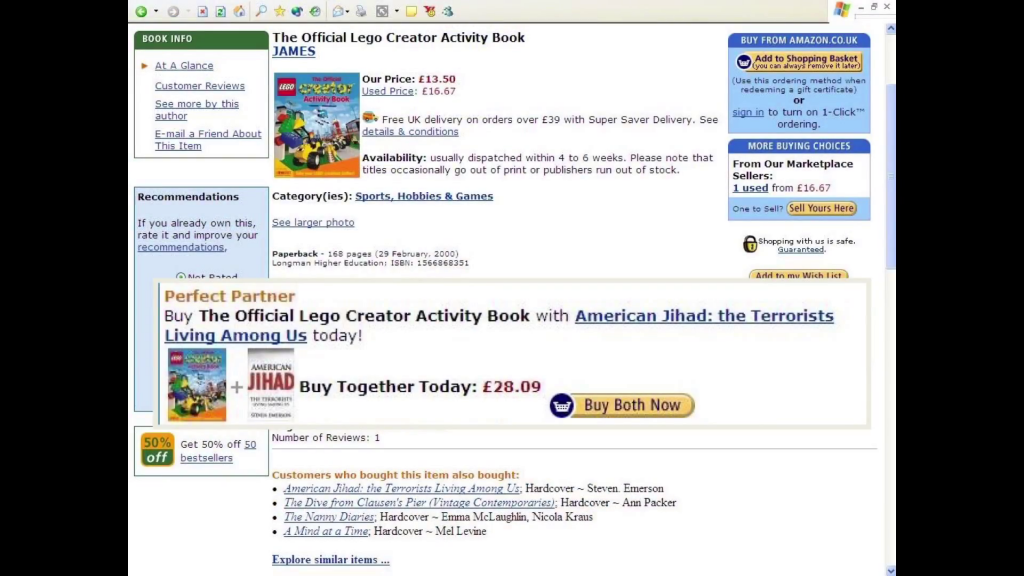

And these kinds of mistakes can lead to funny things, like here, the Lego activity book paired with American Jihad: The Terrorists Living Among Us.

And other mistakes can happen when algorithms collide. In this wonderful example from Amazon, a regular pedestrian book was selling for 1.7 million dollars because two sellers got into an algorithmic battle, unbeknownst to each other, where each had a formula for calculating its price based on the other’s that didn’t stop until it was seven million dollars for the book.
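To make that feedback loop concrete, here is a minimal sketch in Python. It is only an illustration under stated assumptions: the talk does not give the sellers' actual formulas, so the function name `simulate_price_war`, the two multipliers (`undercut`, `markup`), and the starting prices are all hypothetical. The point it shows is that when one seller prices just under the other and the other prices at a markup over the first, the prices spiral upward whenever the product of the two multipliers is greater than 1.

```python
def simulate_price_war(start_a, start_b, undercut=0.998, markup=1.27,
                       ceiling=7_000_000.0, max_rounds=1000):
    """Simulate two repricing bots that each price off the other.

    All parameters are hypothetical illustrations, not the real sellers' rules.
    Returns a list of (price_a, price_b) pairs, one per round, starting
    with the initial prices.
    """
    price_a, price_b = start_a, start_b
    history = [(price_a, price_b)]
    for _ in range(max_rounds):
        # Seller A reprices slightly below seller B's current price.
        price_a = undercut * price_b
        # Seller B reprices at a markup over seller A's new price.
        price_b = markup * price_a
        history.append((price_a, price_b))
        # Stop once the price passes the ceiling (nobody intervened earlier).
        if price_b >= ceiling:
            break
    return history


if __name__ == "__main__":
    runaway = simulate_price_war(40.0, 40.0)
    print(f"rounds until ceiling: {len(runaway) - 1}")
    print(f"final prices: {runaway[-1][0]:,.2f} and {runaway[-1][1]:,.2f}")
```

Each round multiplies seller B's price by `undercut * markup`. With the assumed values that product is above 1, so the price grows geometrically; if the product were below 1, the same loop would drive prices down instead. Neither bot is doing anything unreasonable on its own, which is what makes the collision hard to spot.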

Or in this case, a shirt that actually says “keep calm and rape a lot” that nobody ever bought, nobody ever sold. It was algorithmically generated “keep calm” and random words, all put up for sale. A million shirts on Amazon, waiting to see who might buy one, at which point it would be made.

These kinds of algorithms are going to be more and more common, so when I think of something like the European right to be forgotten, I see this eventually being all automated. The request to be forgotten will be automatically filed on your behalf, Google will think about it automatically, or Bing. And then they’ll make a decision. So the whole line, from soup to nuts, will be done without any human intervention.

Now, of course search engines themselves may be old-fashioned. It might be more like Siri, who’s just a concierge, and will tell you before you even know to ask, where you should go, what you might do. And thinking about where that advice will come from, what ingredients are going in, is it always being given in your best interest? Whether it’s advice or the temperature of your home, all very good questions to ask.

It really calls to mind the Harvard/Yale game of 2004, in which members of the Harvard pep squad went up and down the aisles on the Harvard side of the field distributing colored placards to hold up like a North Korean rally at just the right moment, to say something to the Yalies on the other side.

It turns out that was the Yale pep squad in disguise who distributed the placards. So, when they held them up it spelled a rather unusual message, which is a great example of a miniature polity being created with a message so big and powerful they cannot read it or understand that they’re part of it.

But the key word there is “we.” “We” is usually what we think of with a state. It’s meant to represent us. But representing us by dint of geographical happenstance, with the sovereign imposed over it, that’s not what the cyber piece is bringing us. What it’s bringing us instead are lots of different we’s, mediated in lots of ways, through lots of intermediaries, worthy very much of study, reflection, and review.

Thank you very much.