Star Trek’s vision of a voice interface to computing was and remains incredibly compelling. So much so that about three years ago, Amazon added “Computer” as a wake word for the Echo so that we can pretend to talk to the first mass-market voice assistant as if we’re on a spaceship in the 24th century.

Archive (Page 1 of 3)

Many of the concerns of the cyberpunk genre have come true: the rise of corporate power, ubiquitous computation, and the like. Robot limbs and cool VR goggles. But in many ways, it’s far, far worse.

Cyberpunks, they’re out pirating data and uploading their brains into video games. Solarpunks are revitalizing watersheds, mapping radiation after disaster or war, and bringing back pollinator populations. And since all great speculative fiction is really not about the future but about the present, cyberpunk is about the politics of the 1980s, right? It was about urban decay and corporate power and globalization. In the same way, solarpunk is really about the politics of right now. Which means it’s about global social justice, the failures of late capitalism, and the climate crisis.

AI Policy Futures is a research effort to explore the relationship between science fiction around AI and the social imaginaries of AI, and what those social imaginaries can teach us about real technology policy today. We seem to tell the same few stories about AI, and they’re not very helpful.

This is going to be a conversation about science fiction not just as a cultural phenomenon, or a body of work of different kinds, but also as a kind of method or a tool.

When data scientists talk about bias, we talk about quantifiable bias that is a result of, let’s say, incomplete or incorrect data. And data scientists love living in that world; it’s very comfortable. Why? Because once it’s quantified, if you can point out the error, you just fix the error. What this does not ask is: should you have built the facial recognition technology in the first place?

What I hope we can do in this panel is have a slightly more literary discussion to try to answer, well, why were those the stories that we were telling, and what has been the point of telling those stories even though they don’t now necessarily always align with the policy problems that we’re having?

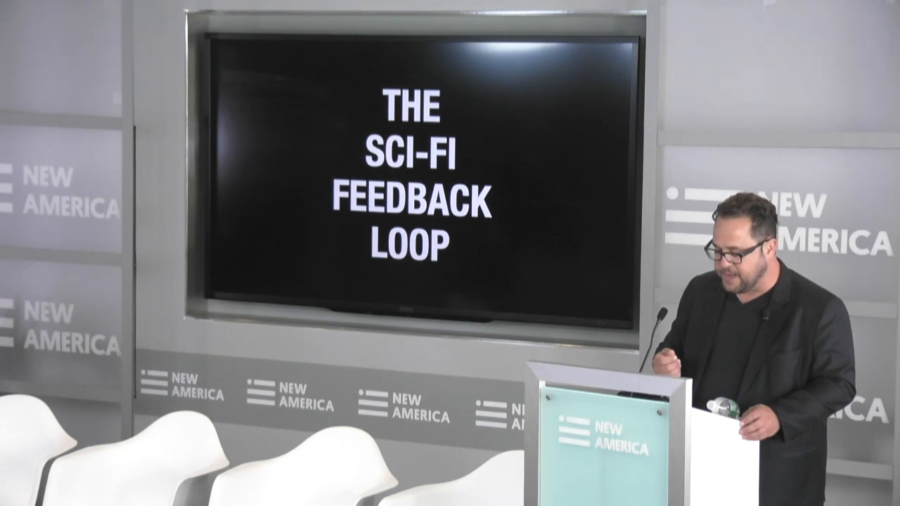

We’re here because the imaginary futures of science fiction impact our real future much more than we probably realize. There is a powerful feedback loop between sci-fi and real-world technical and tech policy innovation, and if we don’t stop and pay attention to it, we can’t harness it to help create better futures, including better and more inclusive futures around AI.

I vacillate…between thinking that we’re doomed because we have given ourselves over to a stupid system that’s now backed up by guns. And then a much more utopian view that we’ve always lived in stupid systems and that we’re always making them better.