Of course we’re avid, avid watchers of Tucker Carlson. But insofar as he’s like the shit filter, which is that if things make it as far as Tucker Carlson, then they probably have much more like…stuff that we can look at online. And so sometimes he’ll start talking about something and we don’t really understand where it came from and then when we go back online we can find that there’s quite a bit of discourse about “wouldn’t it be funny if people believed this about antifa.”

We are immersed in a hyperpartisan media ecosystem where the future of journalism is at stake, the future of social media is at stake. And right now I’m really worried that the US democracy might not survive this moment.

The key thing that Congress realized…was that if you want platforms to moderate, you need to give them both of those immunities. You can’t just say, “You’re free to moderate, go do it,” you have to also say, “And, if you undertake to moderate but you miss something and there’s, you know, defamation still on the platform or whatever, the fact that you tried to moderate won’t be held against you.”

The question also does come up, you know, is there anything really new here, with these new technologies? Disinformation is as old as information. Manipulated media is as old as media. Is there something particularly harmful about this new information environment and these new technologies, these hyperrealistic false depictions, that we need to be especially worried about?

I’m just going to say it, I would like to completely blow up employment classification as we know it. I do not think that defining full-time work as the place where you get benefits, and part-time work as the place where you have to fight to get a full-time job, is an appropriate way of addressing this labor market.

Where did this evil stuff come from? Are we evil? I’m perfectly willing to stipulate you are not evil. Neither is your boss evil. Nor is Larry Page or Mark Zuckerberg or Bill Gates. And yet the results of our work, our best, most altruistic work, often turn evil when it’s deployed in the larger world. We go to work every day, genuinely expecting to make the world a better place with our powerful technology. But somehow, evil is sneaking in despite our good intentions.

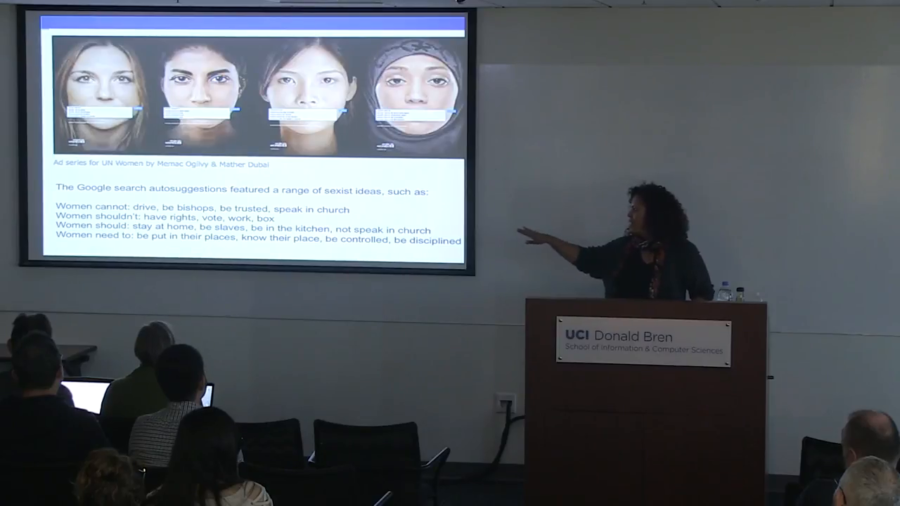

One of the things that I think is really important is that we’re paying attention to how we might be able to recuperate and recover from these kinds of practices. So rather than thinking of this as just a temporary kind of glitch, in fact I’m going to show you several of these glitches and maybe we might see a pattern.

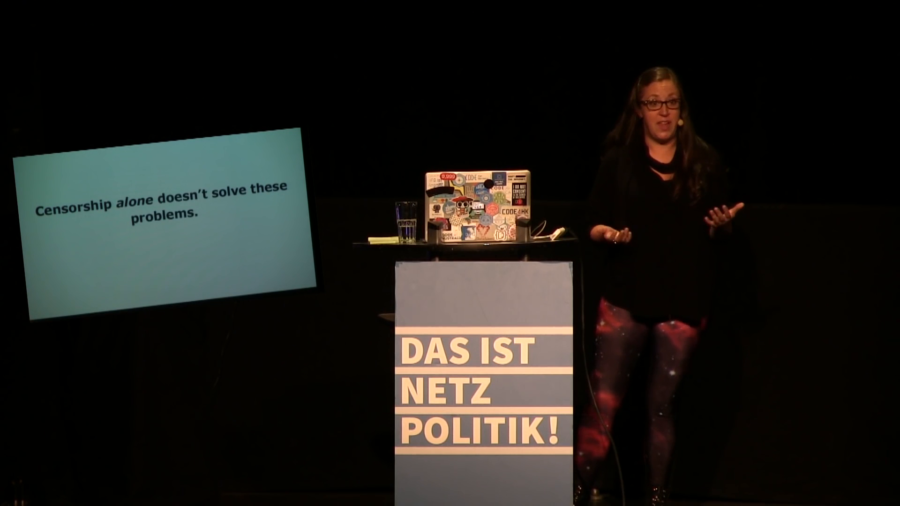

A lot of the topics that we’re trying to “tackle” or trying to deal with on the Internet, we’re not actually defining ahead of time. And so what we’ve ended up with is a system whereby companies and governments alike are working, sometimes separately, sometimes together, to rid the Internet of these topics, of these discussions, without actually delving into what they are.

Dangerous speech, as opposed to hate speech, is defined basically as speech that seeks to incite violence against people. And that’s the kind of speech that I’m really concerned about right now. That’s what we’re seeing on the rise in the United States, in Europe, and elsewhere.