How people think about AI depends largely on how they know AI. And to the point, how most people know AI is through science fiction, which sort of raises the question, yeah? What stories are we telling ourselves about AI in science fiction?

We came up with the idea to write a short paper…trying to make some sense of those many narratives that we have around artificial intelligence and see if we could divide them up into different hopes and different fears.

When data scientists talk about bias, we talk about quantifiable bias that is a result of, let’s say, incomplete or incorrect data. And data scientists love living in that world; it’s very comfortable. Why? Because once it’s quantified, if you can point out the error, you just fix the error. What this does not ask is: should you have built the facial recognition technology in the first place?

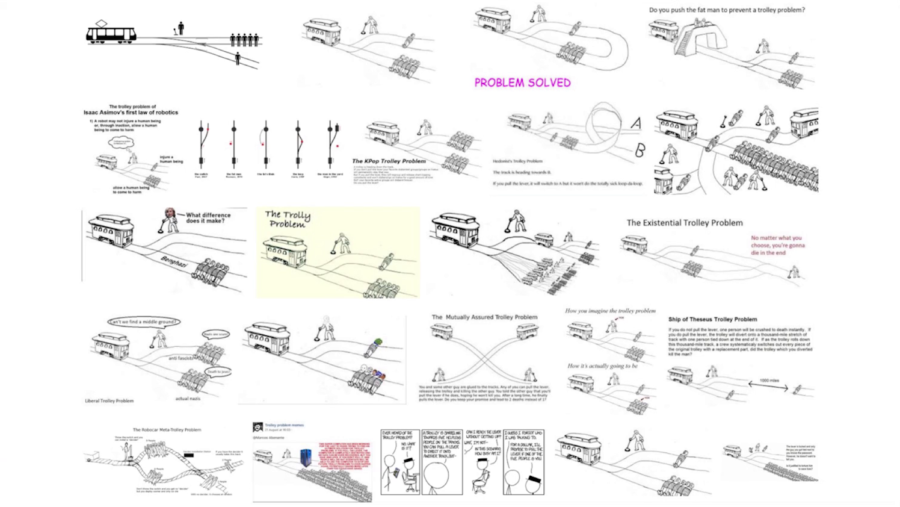

What I hope we can do in this panel is have a slightly more literary discussion to try to answer, well, why were those the stories that we were telling, and what has been the point of telling those stories, even though they don’t now necessarily always align with the policy problems that we’re having.

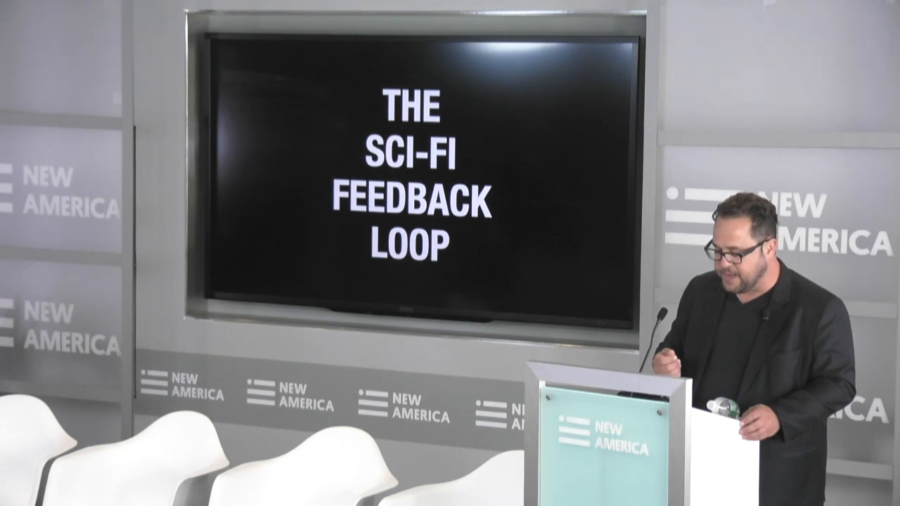

We’re here because the imaginary futures of science fiction impact our real future much more than we probably realize. There is a powerful feedback loop between sci-fi and real-world technical and tech policy innovation, and if we don’t stop and pay attention to it, we can’t harness it to help create better futures, including better and more inclusive futures around AI.

The Markkula Center for Applied Ethics at Santa Clara University has some really useful thinking and curricula around ethics. One of the things they point out is that what ethics is not is easier to talk about than what ethics actually is. And some of the things that they say about what ethics is not include feelings. Those aren’t ethics. And religion isn’t ethics. Also law. That’s not ethics. Science isn’t ethics.

What I want to talk to you about is this idea of machines governing things. And one particular thing that I see governed by the machines is the radio spectrum. That’s an example where I see this kind of regulation can work, and I guess the most radical thing about it is that I think a robotic regulator can actually bring more human values to its regulation.

BJ Copeland states that a strong AI machine would, one, be built in the form of a man; two, have the same sensory perception as a human; and three, go through the same education and learning processes as a human child. With these three attributes, similar to human development, the mind of the machine would be born as a child and would eventually mature into an adult.

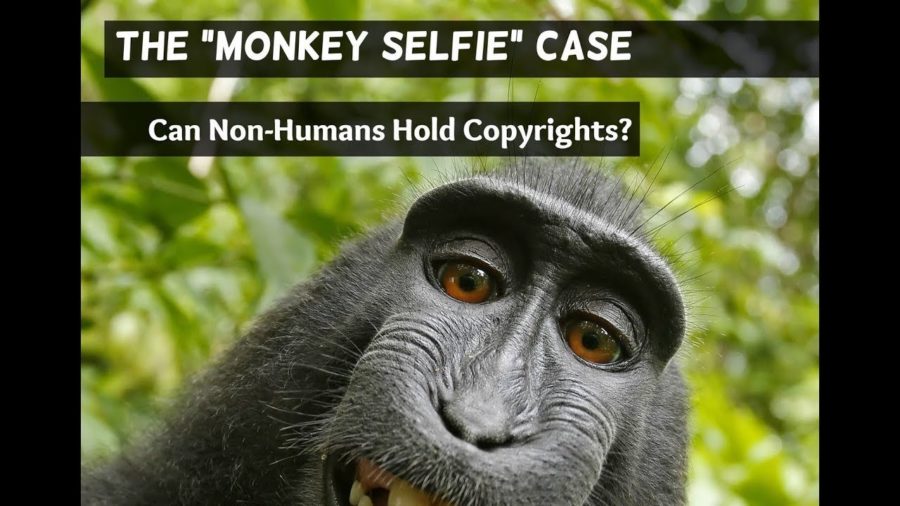

Naruto, then 3 years old, came up and picked up one of his cameras and started looking at it. And he made the connection… By Mr. Slater’s own admission he made the connection between pushing the shutter release button and the change to his reflection in the lens when the aperture opened and closed.