The premise of our project is really that we are surrounded by machines that are reading what we write, and judging us based on whatever they think we’re saying.

Archive

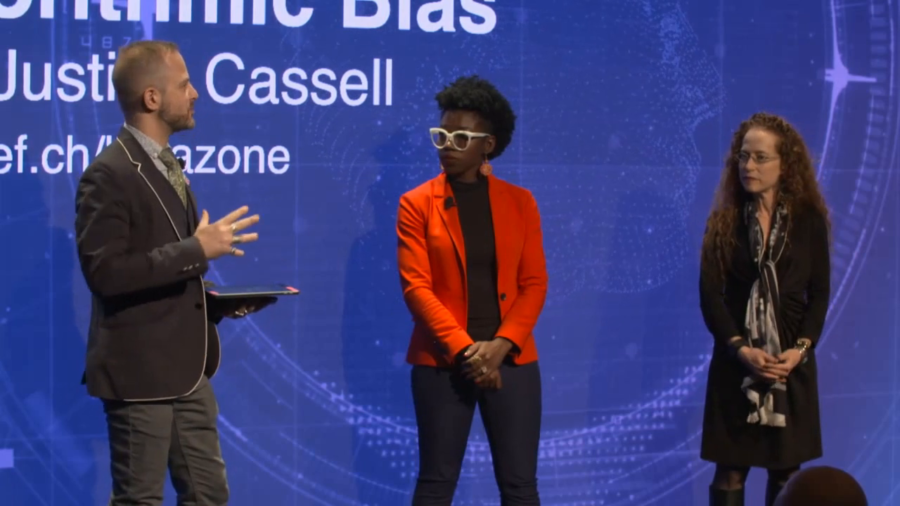

I think the question I’m trying to formulate is, how in this world of increasing optimization where the algorithms will be accurate… They’ll increasingly be accurate. But their application could lead to discrimination. How do we stop that?

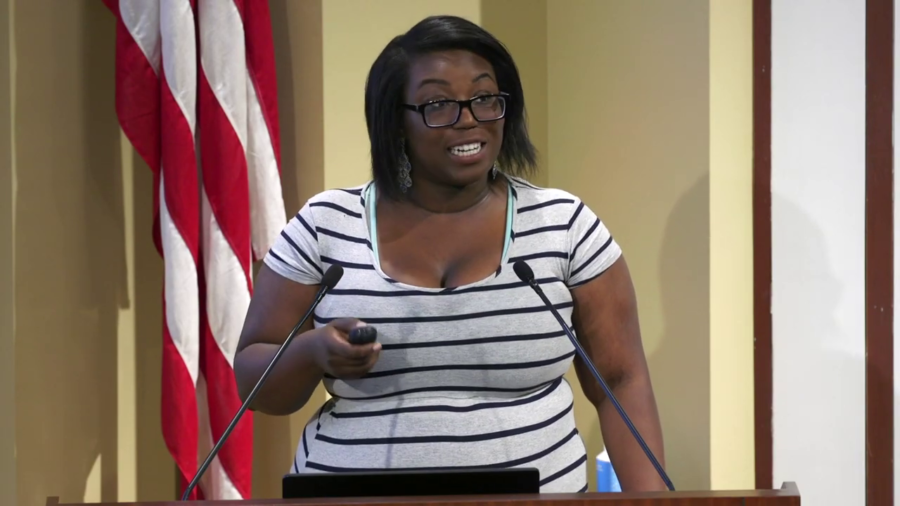

I consider myself to be an algorithm auditor. So what does that mean? Well, I’m inherently a suspicious person. When I start interacting with a new service, or a new app, and it appears to be doing something dynamic, I immediately begin to question what is going on inside the black box, right? What is powering these dynamics? And ultimately what is the impact of this?

The question is what are we doing in the industry, or what is the machine learning research community doing, to combat instances of algorithmic bias? So I think there is a certain amount of good news, and it’s the good news that I wanted to focus on in my talk today.

Quite often when we’re asking these difficult questions we’re asking about questions where we might not even know how to ask where the line is. But in other cases, when researchers work to advance public knowledge, even on uncontroversial topics, we can still find ourselves forbidden from doing the research or disseminating the research.