Chris Mooney: It’s great to be with you to discuss the science of why we deny science and reality. In other words what is it about our brains that makes facts so challenging, so odd and threatening? Why do we sometimes double down on false beliefs? And maybe why do some of us do it more than others? That’s the next book. I’m not going to talk about it that much. I’m going to try to be somewhat down the middle here, although it’s difficult.

But I’ve been writing about political and scientific misinformation for a decade and I’m going to confess. I got a lot of the big picture wrong initially. The first book was called The Republican War on Science and we did not notice at the time the echo visually with another book that was out. And it was all about people denying the science of global warming. You know, denying evolution. Getting it wrong on stem cells.

What was wrong about my analysis was that I was wedded to an old Enlightenment view of rationality. And what I mean is that I had this vision you know, if you put good information out there and you use rational arguments, people will come to accept what’s true. Especially if you educate them, in places like this. Teach them critical thinking skills. And a lot of people believe or want to believe that this is true.

The problem is that rather awkwardly, there is a science of why we deny science. There are facts about why we deny facts. There’s a science of truthiness. I was going to actually title the book that, but The Republican Brain is better. But there’s a science of truthiness. And the upshot is that paradoxically, ironically, the Enlightenment view doesn’t describe people’s thinking processes so if we’re actually enlightened, we have to reject the Enlightenment view. And I want to tell you first how I realized that.

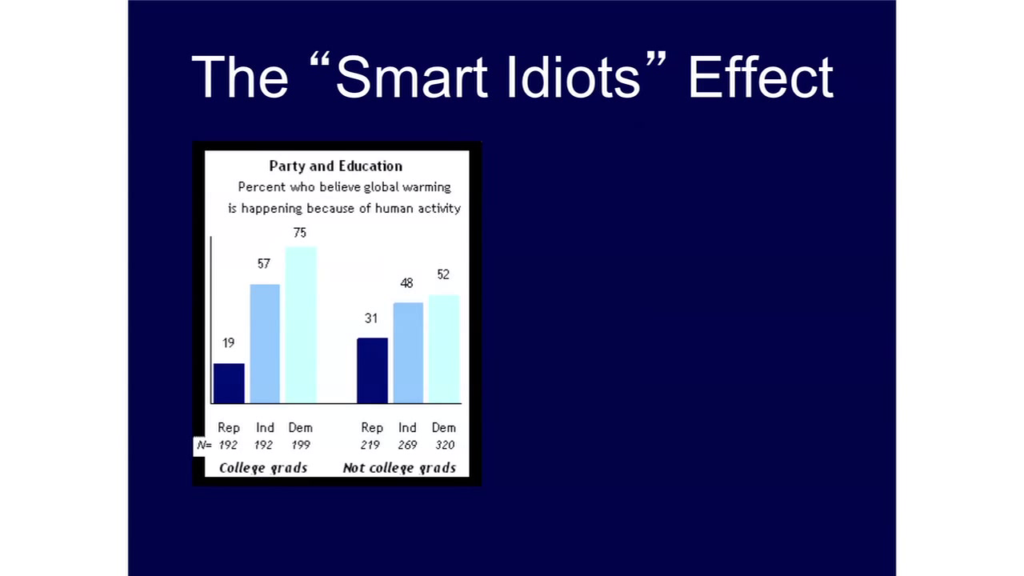

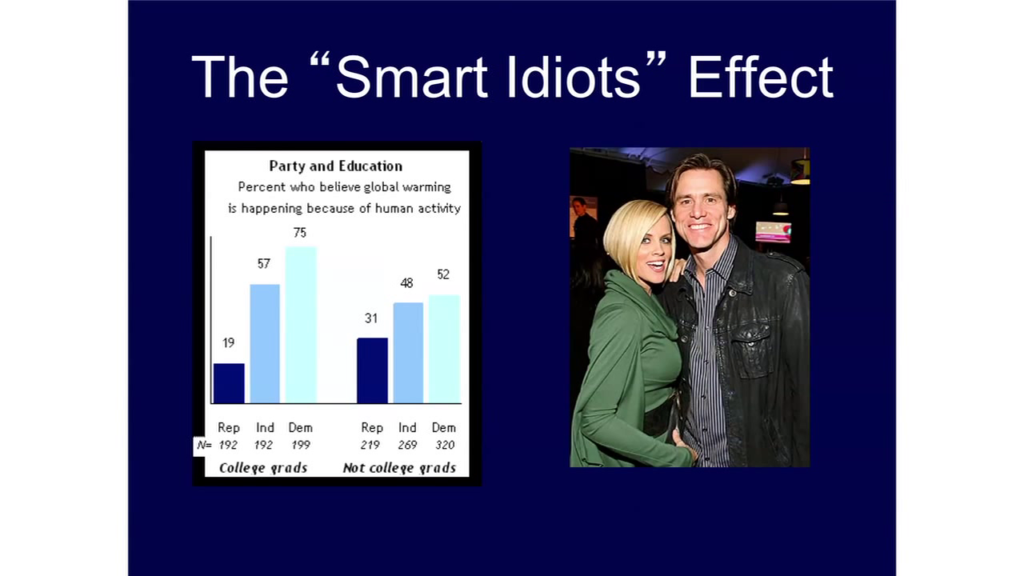

The key moment came in the year 2008 when I stumbled upon something that I call the “Smart Idiot” Effect. What is a smart idiot? This was data from Pew, and it was a poll on global warming. And it was showing the relationship between political party affiliation, level of education, and belief that global warming is caused by human beings.

And I don’t know if you can see it very well because of the color contrast, but what it shows is that if you’re a Republican, the higher your level of education, the less likely you are to believe in scientific reality. So these are the college grads, these are the non-college grads, and you’ve got less belief among the college grad Republicans in what’s true than you do among the non-college grad Republicans. Whereas for Democrats and independents, the relationship between education and believing in reality is the opposite: they believe it more. So these people, the 81% that don’t believe it, those are smart idiots.

And I’m trying not to be partisan, so I will show you liberal smart idiots. You could call this dumb and dumber.

Now, the people who deny the science of vaccines, which is that they do not cause autism, it turns out that the New England Journal of Medicine studied who these people are and they tend to be white, well-to-do, and the mother has a college education. And they tend to go online, what Jenny McCarthy called “the university of Google,” and inform themselves about this. And so by empowering themselves they end up more wrong, and end up believing that vaccines are dangerous.

Education and intelligence therefore do not guarantee sound rational decisions, nor do they ensure that people accept science or facts. And I want to give you an even crazier, more fun example of the smart idiot effect.

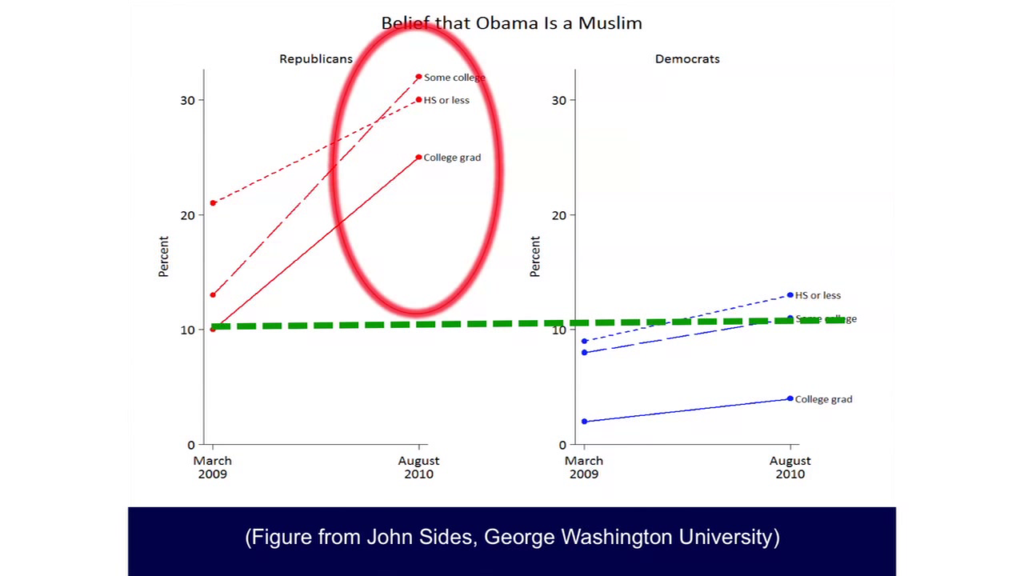

So this is from the political scientist John Sides of George Washington. He unpacked the data on why belief that President Obama is a Muslim increased, or in whom it increased, between 2009 and 2010. So here’s where the increase is, and again we’ve got a higher slope for Republicans with some college or college grad than for those who have less education. So again, smart idiot effect. And this is pretty frustrating. You’re like, why do they do this? How could this possibly be?

It seems that the more capable you are of coming up with arguments that support your beliefs, the more you’ll think you’re right. And the less likely you will be to change. And if this is true we have a pretty big problem. It would explain a lot of the polarization in America.

The good news is that there is a way of understanding why we do this, and it’s quite relatable. The science behind it is pretty easy to understand because we all know from our personal lives, we all know from our relationships, how hard it can be to get someone we care about to change their minds. And we know from great works of literature like Great Expectations. I actually have seen the black and white Great Expectations.

You know, there’s so many famous characters who fall for self-delusion, who fall for rationalization, Pip being one of them. He wants to believe that he’s destined to marry Estella. He wants to believe that Miss Havisham is not this manipulative old crone, that she actually has his best interests at heart. He believes this so strongly that he essentially ruins his life. That’s the story of Great Expectations.

What Dickens grasped about people in a literary way we are now confirming based on psychology and even neuroscience. And what this leads to is a theory called “motivated reasoning.” And what it demonstrates is that we don’t think very differently about politics than we do about emotional matters in our personal lives, at least if we have a strong investment or commitment.

To understand how motivated reasoning works, you need to understand three core points about how the brain works. The first of them is that the vast majority of what all of our brains are doing we are not aware of. The conscious part, the us, the self—just a small percentage of what it’s up to.

The second one is that among the things it’s up to that we’re not aware of, the brain is having rapid-fire emotional or “affective” responses to ideas, images, stimuli, and it’s doing it really fast, okay. So fast that we don’t even know it’s doing this.

And then the third point is that these emotional responses guide the process of retrieving memories into consciousness—thoughts, associations that we have with the stimuli. Emotion is the way that those things are pulled up for us to think about.

And so what this means is that we might actually think we’re reasoning logically. We might think we’re acting like scientists. But in fact we’re acting like these guys. And I love being at Harvard Law School putting up lawyers. When we actually become conscious of reasoning, we’ve already been sent down a path, by emotion, retrieving from memory the arguments that we’ve always made before that support what we already think. So we’re not really reasoning, we’re rationalizing. We are defending our case. And our case is not just a part of who we are, it’s a physical part of our brains.

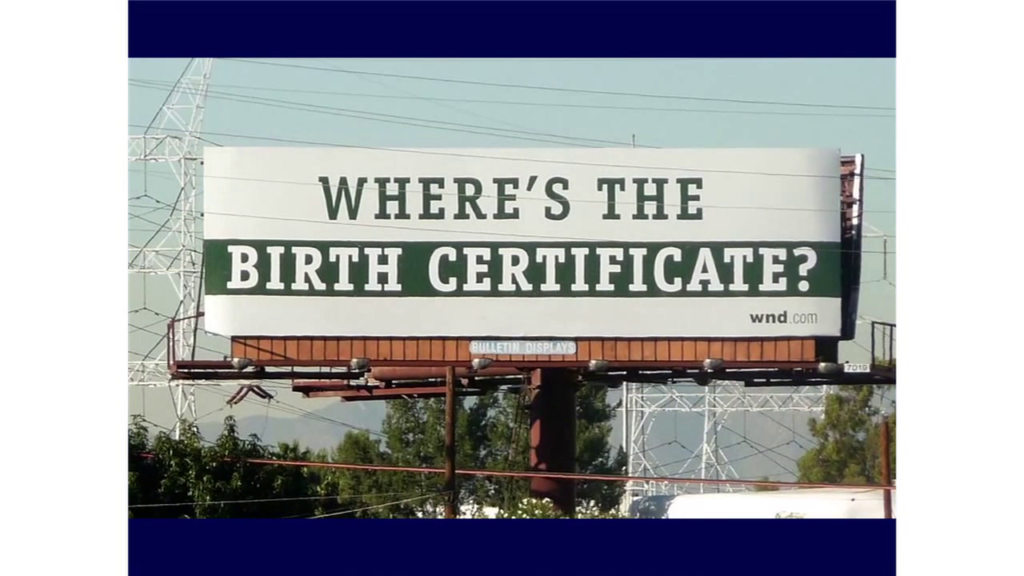

And this explains so many things about why reasoning goes awry. It explains goalpost shifting, for instance. So you know, for years the birthers wanted the birth certificate. The long-form birth certificate. Last year they got it. So did they stop being birthers? No. Did they change their minds? No. The new science of why we deny science can predict that they would double down on their wrong belief and they would come up with new reasons to distrust the new evidence that they’ve been given.

And the same goes for all manner of logical errors, fallacies, hypocrisies. The answer used to be hey, just learn critical thinking and you’ll avoid these. And that might be true to an extent but it seems like reasoning is designed to help us see these problems in others way better than to see them in ourselves. And it’s designed this way. It manifests first when we’re quite young.

I want to tell you about a really funny motivated reasoning study involving the biases of adolescence. You might remember that in the 1980s there was this big battle about labeling rock albums. And then there was this fear that music corrupted kids, led them to Satanism, what have you. And one side in the debate was the PMRC and Tipper Gore. And here she’s got an album, the title is Be My Slave. I don’t know if you can see the whips and chains. But this was dominatrix metal so. Some moms didn’t think little Johnny should get this record. And on the other side there was Frank Zappa, here pictured in the “Phi Zappa Krappa” picture—that’s so awesome. And as he testified before Congress, I love this quote, “The PMRC’s demands are the equivalent of treating dandruff by decapitation.” Such was the debate, okay.

So in comes a psychologist named Paul Klaczynski and he does a motivated reasoning study dovetailing with it all. He took ninth and twelfth graders who were either fans of country music or fans of heavy metal and he asked them to read arguments that he had constructed. All of the arguments contained a logical fallacy. All the arguments were about the effects of listening to a particular kind of music upon your behavior. So here’s an example of a fallacious argument they might have gotten, something like this: Little Johnny listened to Ozzy Osbourne, then he killed himself. Heavy metal is dangerous stuff. Okay, everyone see the fallacy there?

And so you would get the same kind of flawed arguments about country music. So what happens? Adolescent country fans can see logical fallacies when they are in an argument suggesting that listening to country leads to bad outcomes, bad behaviors. But they do not detect the fallacies in pro-country arguments. The same thing for heavy metal fans. They’re all biased, and it doesn’t get better between ninth and twelfth grade. More education doesn’t somehow let you see what’s wrong with your point of view.

And it is not just problems or fallacies of basic reasoning that motivated reasoning explains; it also explains all the science debates that I write about. The “my expert is better than your expert” problem gets straight to the heart of why some people deny science. They think their science is better.

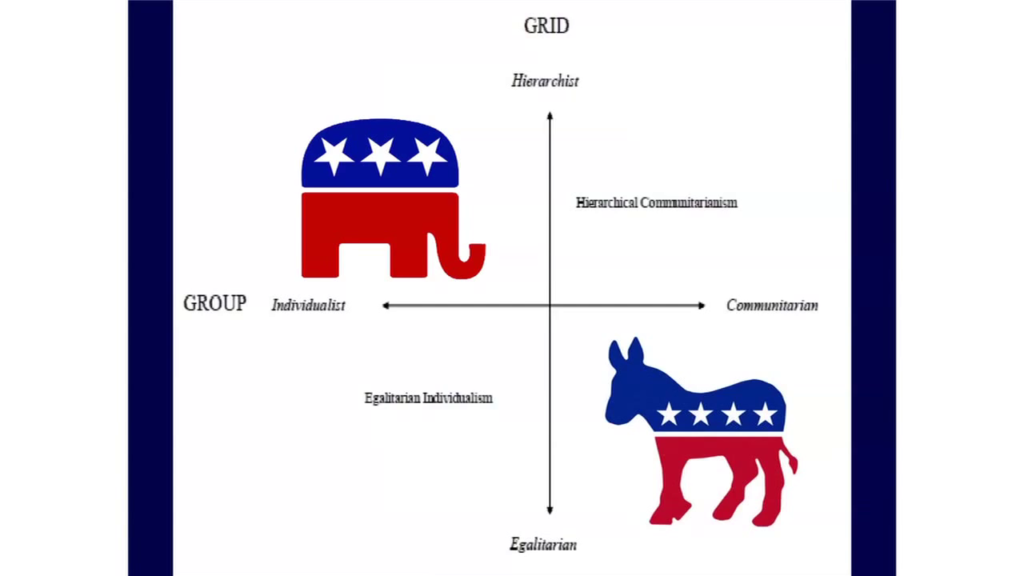

So this is from Yale’s Dan Kahan. He’s also here at the Harvard Law School sometimes, I think. And he divides people’s moral values up along two axes. What’s important about this is your moral values push you emotionally to believe things, and so you start tagging things emotionally based upon what your values are. So we’re either hierarchical or egalitarian, that’s up and down. We’re either individualistic or communitarian.

I think people basically know what these words mean. But in case you don’t, Republicans live up here, Democrats live down here. Republicans tend to be hierarchical and individualistic, supporting more inequality and supporting keeping government out of life. And Democrats more communitarian, supporting the group—it takes a village—and egalitarian, supporting equality.

Okay, the point is that this determines who you think is a scientific expert. Because then he shows people a “fake scientist” who either supports or doesn’t support the consensus that global warming is caused by humans. And if you’re up in the quadrant where the Republicans live, then only 23% agree that the scientist is a trustworthy and knowledgeable expert if the scientist says that global warming is caused by humans. But down here, 88% agree that the scientist is an expert.

So in other words, science for many of us is whatever we want it to be, and we’ve been impelled automatically, emotionally, into having that kind of reaction to who an expert actually is.

This is bad enough, but the double whammy is always going to come when you combine these flawed reasoning processes with selective information channels (which brings us to the Internet) of the sort that the Internet makes so possible. But this is only a difference of degree. It’s not a difference of kind, because we already see what happens with the selective information channel that is Fox News.

So, lots of research showing that Fox News viewers believe more wrong things. They believe wrong things about global warming, wrong things about healthcare, wrong things about Iraq, and on and on and on. And we can document that they believe them more than people who watch other channels.

Why does this occur? Well, they’re consuming the information, it resonates with their values and then they’re thinking it through and arguing it, getting fired up (motivated reasoning), and then they run out and they reinforce the beliefs. But also, and this is where I’ll get into some possible left/right differences, there’s some evidence to suggest that conservatives might have more of a tendency to want to select into belief channels that support what they believe to begin with. Right-wing authoritarians are a group that has been much studied and they are part of the conservative base, and there are some studies showing that they engage in more selective exposure, trying to find information that supports their beliefs.

There was a study by Shanto Iyengar at Stanford of Republicans and Democrats consuming media. And what he found was that Democrats definitely didn’t like Fox, but they spread their interests across a variety of other sources. But Republicans were all Fox, almost, and as they wrote, “The probability that a Republican would select a CNN or NPR report was about 10%” in this study. So the Fox effect, believing wrong things, is probably both motivated reasoning and also people selecting into the information stream to begin with.

So how do you short-circuit motivated reasoning? That’s what Brendan Nyhan is going to talk about. I’m not going to talk about it, but I will say you don’t argue the facts. There are approaches that are shown by evidence to work. For my part let me just end with some words of eternal wisdom from a truly profound philosopher, Yoda. Yoda said you must unlearn what you have learned. And when it comes to the denial of science and facts, that is precisely the predicament we’re in. So thanks.

Further Reference

Inside the Political Brain, Chris Mooney on the Klaczynski study

Truthiness in Digital Media event site