I’d like to start with two of my favorite quotes. Arthur C. Clarke said, “Any sufficiently advanced technology is indistinguishable from magic.” And William Gibson said, “The future is already here; it’s just not very evenly distributed.” And when I synthesize those two quotes, my takeaway is that there are magicians amongst us.

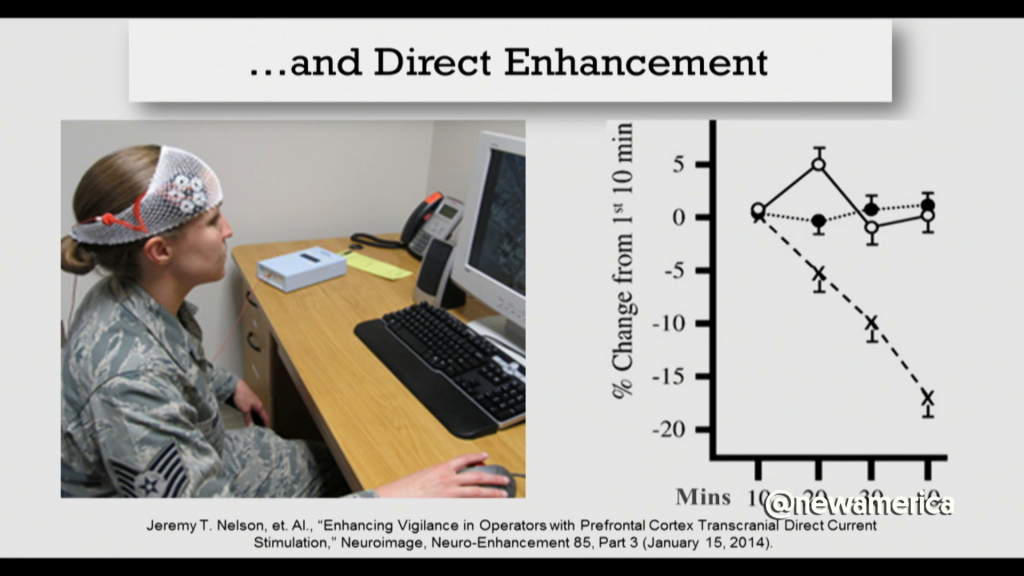

So, what does magic look like today? It looks like me putting an electrode on my forehead, controlled by an iPhone in my right hand. Three minutes into the program, with that iPhone sending electrical impulses into my skull to relax me, I had so much trouble putting words together that I felt like I was about a six-pack deep into drinking.

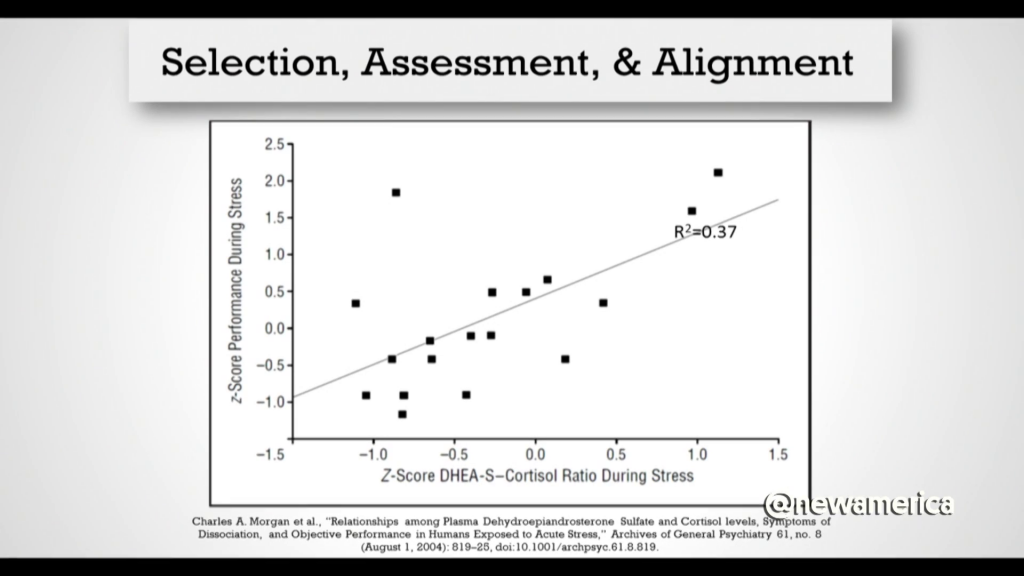

But this is about the future of war. I want you to imagine being able to take a few drops of someone’s blood, combine that with some cognitive and physical testing, and figure out where that person is going to be most effective in your military. And by most effective I don’t just mean how well they perform. I mean they’re going to be happier there, too, because if they’re not happy and they leave, you lose a lot of effectiveness. And once you’ve put that person in a place where their skill set is optimal, you’re going to be able to enhance it beyond what they came in with.

So why do I believe this? Here’s data showing the correlation between performance and the ratio of two physiological variables: DHEA sulfate, a steroid hormone precursor, and cortisol, a stress hormone. The ratio between those two factors alone accounts for 37% of the variability in performance during a special operations task.

And here is that same technology I was using in the first picture, now on an airman. The graph on the left shows that people receiving transcranial direct current stimulation (AKA electricity at a very low level going through your skull and changing the activation pattern of the neurons in your brain) were able to stay focused on a fairly challenging task, watching and tracking aircraft on a screen, without losing any performance for forty minutes, while people given the placebo lost 5% of their performance every ten minutes. What would that mean for someone driving down a street trying to scan for IEDs?

But today we only think about someone sleeping a little less, or somebody running a little longer without fatigue. I want to take this a lot further. I’m thinking about using pharmaceuticals and electroceuticals (electricity, like I was talking about before) to enhance learning, and to build unit cohesion, to such a degree that you could train a military force to high levels of capability within weeks or months instead of years or decades. What would that do for strategic surprise?

I’m thinking a lot about research in autism and other areas. Autism, by the way, is a disorder in which people have tremendous difficulty understanding why others think and act the way they do. Now imagine if we, or one of our adversaries, were able to use that same type of research to enhance the ability to think like your adversary. What would that do for psychological operations, for deception, for influence?

And I’m thinking a lot today about how you could improve the general purpose force to match SOF (special operations forces) levels of performance. But if you just used a better general purpose force in the same way, I don’t think it would get you that far. If you add new CONOPS and organizational structures, such as breaking it into smaller units and using swarming tactics, I think you might end up with something like an order of magnitude improvement in performance.

And General [Mark] Milley mentioned this morning that it’s not just the size of a force that matters; a smaller force could smoke a bigger force if it’s better equipped or better trained. Well, part of the corollary to that may be a question of what they’re smoking.

And I’m thinking about direct brain-to-brain communication. There are current DARPA programs using brain/machine interface technology to try to replace the memory of our injured warfighters, which is a tremendous goal and undertaking.

But if you can replace someone’s memory, that means you can store ideas and transmit them into their brain. And that means I can take those ideas and transmit them into someone else’s brain. Imagine taking a plan and being able to give it whole hog to someone else, who could think through it, iterate on it, and send it back to me. What would that do to the rate of innovation?

But anybody should ask: why should we believe any of this is going to come, when for the last twenty years people have been saying human enhancement technologies are really going to matter? I think there are three trends that mean we need to take a serious look at what’s going to happen in the next two to three decades.

So on the bottom there, neuroscience. Our scientific knowledge has grown enormously: in the past ten years alone, we’ve seen a new field, epigenetics. It has taught us that chemical modifications to your DNA that don’t change the letters in the code can not only change the way you think and act but can also affect your children. We also have new tools. Staying on the neuroscience theme, we’ve developed optogenetics, which allows brain cells in animals to be activated or deactivated using light. And we have new scanning and imaging technologies.

And then move up to the top there. These are two sort of fake street signs at a startup in Boston that has tens of millions of dollars in funding and is developing human enhancement technologies for commercial applications, not for medicine. So money’s flowing in, too.

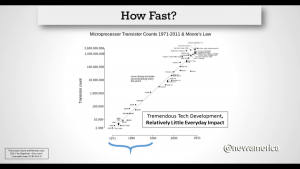

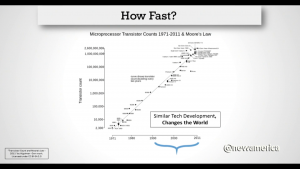

So, I want to take you to an analogy with Moore’s Law, which we’ve heard mentioned a couple of times today. In the first two decades of real microprocessor and solid-state transistor development, we saw about a 1000x improvement in the number of transistors you could put on a chip. But frankly, the impact of that advance on society was relatively small. In the second twenty years, you only had about another 1000x improvement from where you were at the midpoint, but of course it changed the world. Microprocessors drove the Internet and, in this context, C4ISR, the precision strike complex, and so on.

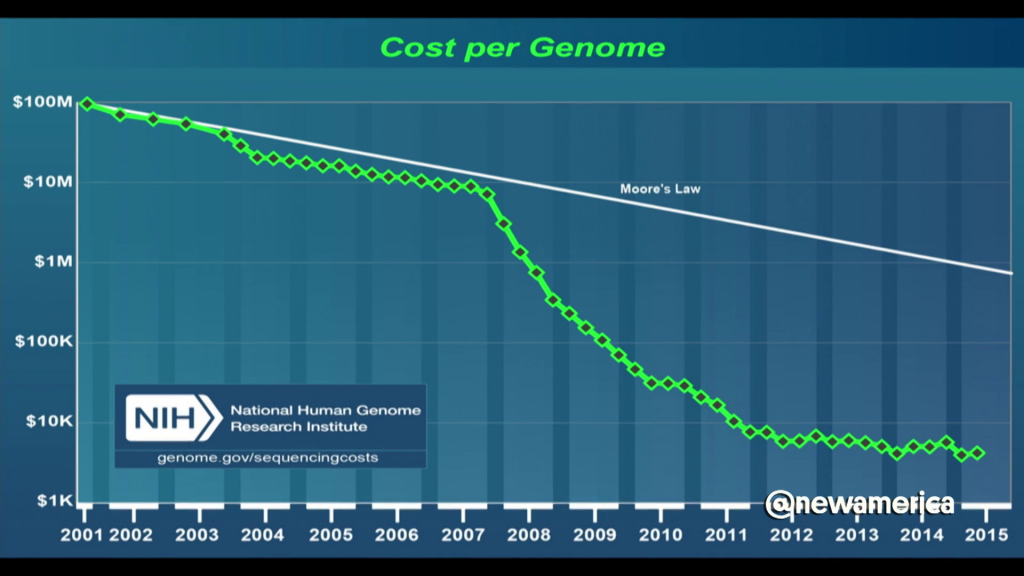

So I think what we need to take away is that not only is technology development nonlinear, but its impact can be even more nonlinear. What might this mean when we think about human enhancement technologies? Well, one of the other things that happened as those transistor counts increased is that the cost of each of those chips decreased. So here’s the rate of decrease in the cost to sequence a human genome, all of its roughly three billion base pairs. And you can see it’s dropping faster than Moore’s Law.

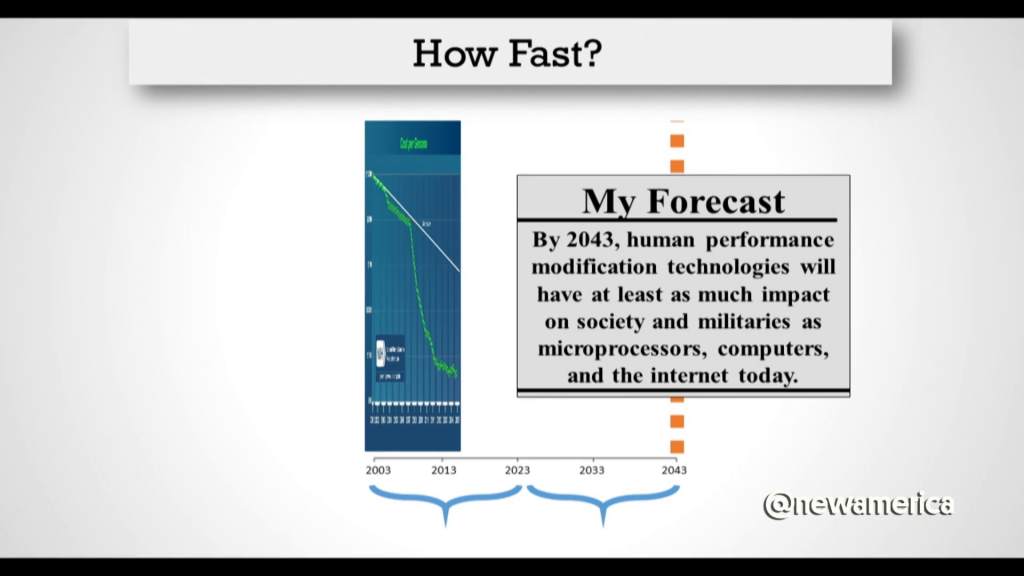

But now I’m going to put that on the same forty-year x-axis I showed you before, and we’ll start at 2003, which was the end of the Human Genome Project and the first sequenced human genome. If you take that date as a start, we’re not even thirteen years into that first twenty years.

But why should the timeline be similar to computers? Well, I’d suggest that biology is a lot more complex than computing…but now we have computers to help. So I think the timescale is roughly similar. I’m willing to make a fairly falsifiable projection and put my name on it. I think by 2043 (and this is my conservative forecast), human enhancement technologies are going to have about the same level of impact as computers did five years ago. And frankly, I think it’s coming in the 2030s.

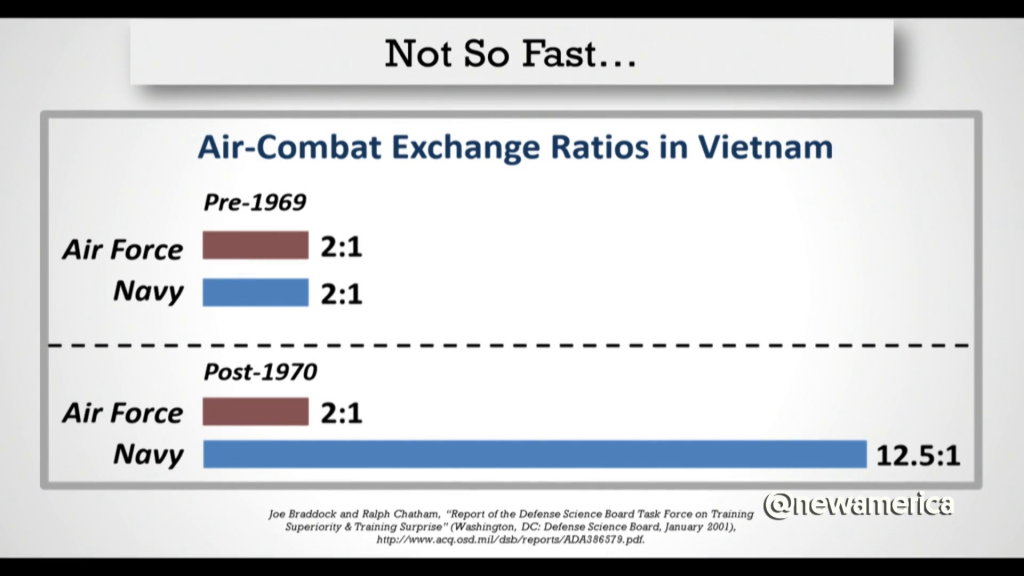

Of course, beyond asking why we should believe it, there’s a second question: will we matter? Here I want to take you back to the last time humans were obsolete. The F‑4 fighter was built without an internal gun because we were in the missile age. And in the missile age, humans couldn’t dogfight well enough for it to matter. Pilots were just going to use sensors to see the other aircraft, fire a missile, and be done.

Well, then we went into the Vietnam War, and it’s an amazing experiment, because the Air Force and the Navy were both flying the F‑4. In the first two to three years of the air war over North Vietnam, both had about a 2:1 exchange ratio. So, they’re shooting down two North Vietnamese aircraft for every one we lose.

And this was a real surprise. We expected to do a lot better. So the Air Force goes back to the drawing board, decides the missiles aren’t tracking properly, and works on them. And the Navy goes back to the drawing board and also says the missiles aren’t tracking properly, but about halfway through that study, a gentleman named Captain Ault says, “Actually, a huge part of the problem is that our pilots don’t know how to use them effectively.” We had stopped realistic air combat training because missiles were going to do it all for us and humans didn’t matter.

So, the Air Force goes back into the air war in 1970, after about a year’s break while we’re in negotiations with North Vietnam, and it is only able to maintain its 2:1 exchange ratio. But with the implementation of TOPGUN in ’69 and ’70, with its realistic air combat training and two people per unit sent through it to go back and train their units, the Navy goes to 12.5:1, almost an order of magnitude improvement in tactical outcomes, because of human performance. And to anyone who doesn’t think training is a true human performance technology, I suggest looking at the research on neuroplasticity showing that training changes the structure of your brain.

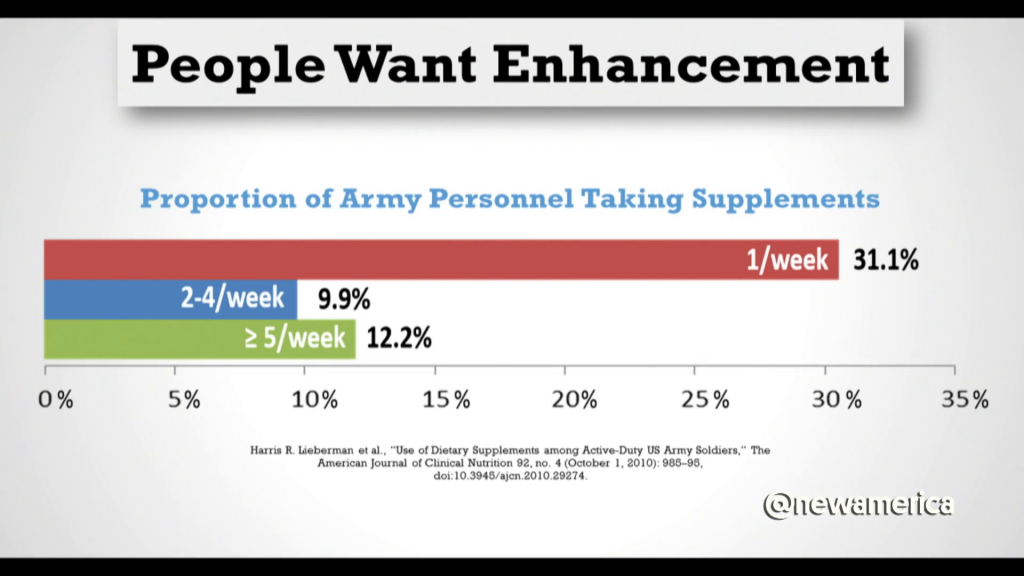

This field is often discussed with a lot of concern in venues like this because of the ethical issues, and this fear that, God forbid, somebody would force our military personnel to use these technologies: what happens if someone gets hurt? Well, let me tell you something. They want these technologies. My clients in Silicon Valley and in Boston pay good money for enhancement because they see it as a competitive advantage. And when I give these talks in environments with a lot of junior military personnel, the typical response is that somebody comes up to me at the end and says, “If you ever need a research subject, I promise I won’t tell anyone.” Now, that’s not how we do it. The military actually has the strictest ethical and bureaucratic restrictions on doing this type of research anywhere in the United States. It is harder to do this research in the military than anywhere else.

This is data on Army personnel taking supplements. You can see that more than 50% of Army personnel in this data were taking at least one supplement per week. And what’s really interesting here is that you’d expect the numbers to drop off, with fewer and fewer people taking more and more supplements. But actually more people take five supplements a week than take two to four. That tells you there’s a superuser group. And anyone who spends time around military personnel knows who these people are.

And on the ethical issue, I would suggest we consider reframing it. We require our military personnel to go onto the battlefield with thirty, maybe even forty pounds of body armor. And we don’t just think or worry, we know that it’s severely damaging their knees and backs, and these are lifelong injuries. At the same time, it’s decreasing their mobility and therefore parts of their operational performance. But we have human enhancement technologies, ranging from drugs to stimulation, that are safer and enhance their operational capability. So to anyone who says we shouldn’t give those to people, I would say that’s a PR issue, not an ethics issue. Thank you very much.