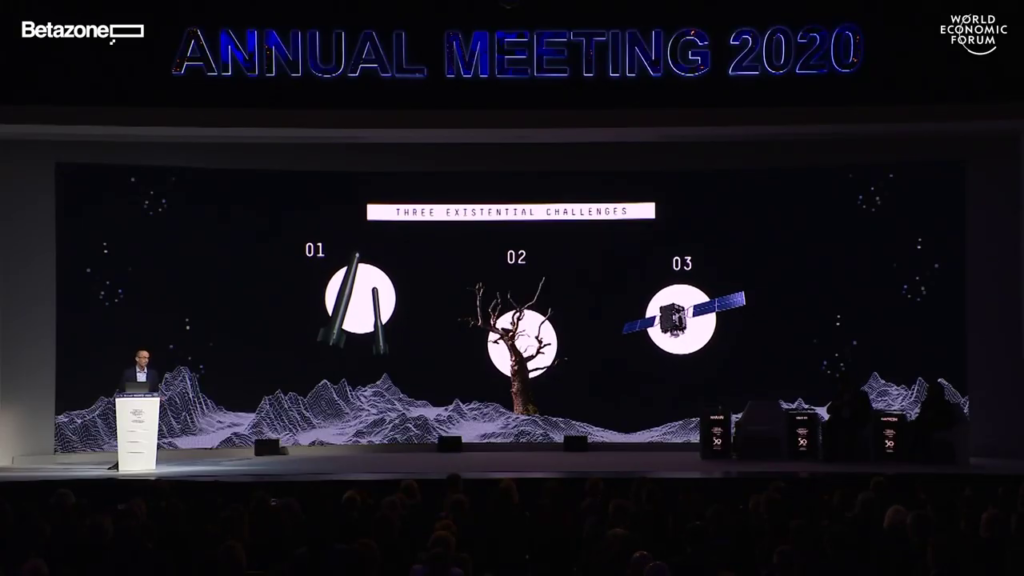

Yuval Noah Harari: Of all the different issues we face, three problems pose existential challenges to our species. These three existential challenges are nuclear war, ecological collapse, and technological disruption. We should focus on them.

Now, nuclear war and ecological collapse are already familiar threats, so let me spend some time explaining the less familiar threat posed by technological disruption. In Davos, we hear so much about the enormous promises of technology. And these promises are certainly real, but technology might also disrupt human society and the very meaning of human life in numerous ways ranging from the creation of a global useless class to the rise of data colonialism and of digital dictatorships.

First, we might face upheavals on the social and economic level. Automation will soon eliminate millions upon millions of jobs. And while new jobs will certainly be created, it is unclear whether people will be able to learn the necessary new skills fast enough. Suppose you’re a 50-year-old truck driver, and you just lost your job to a self-driving vehicle. Now, there are new jobs: in designing software, or in teaching yoga to engineers. But how does a 50-year-old truck driver reinvent himself or herself as a software engineer or as a yoga teacher?

And people will have to do it not just once but again and again throughout their lives, because the automation revolution will not be a single watershed event following which the job market will settle down into some new equilibrium. Rather, it will be a cascade of ever bigger disruptions, because AI is nowhere near its full potential. Old jobs will disappear. New jobs will emerge. But then the new jobs will rapidly change and vanish. Whereas in the past humans had to struggle against exploitation, in the 21st century the really big struggle will be against irrelevance. And it’s much worse to be irrelevant than to be exploited.

Those who fail in the struggle against irrelevance will constitute a new useless class: people who are useless not from the viewpoint of their friends and family, of course, but from the viewpoint of the economic and political system. And this useless class will be separated by an ever-growing gap from an ever more powerful elite.

The AI revolution might create unprecedented inequality not just between classes but also between countries. In the 19th century, a few countries like Britain and Japan industrialized first, and they went on to conquer and exploit most of the world. If we aren’t careful, the same thing will happen in the 21st century with AI. We are already in the midst of an AI arms race, with China and the USA leading the race and most countries being left far, far behind. Unless we take action to distribute the benefits and power of AI among all humans, AI will likely create immense wealth in a few high-tech hubs, while other countries will either go bankrupt or become exploited data colonies.

Now, we aren’t talking about a science fiction scenario of robots rebelling against humans. We are talking about far more primitive AI, which is nevertheless enough to disrupt the global balance. Just think what will happen to developing economies once it is cheaper to produce textiles or cars in California than in Mexico. And think what will happen to politics in your country in twenty years, when somebody in San Francisco or in Beijing knows the entire medical and personal history of every politician, every judge, and every journalist in your country, including all their sexual escapades, all their mental weaknesses, and all their corrupt dealings. Will it still be an independent country, or will it become a data colony? When you have enough data, you don’t need to send soldiers in order to control a country.

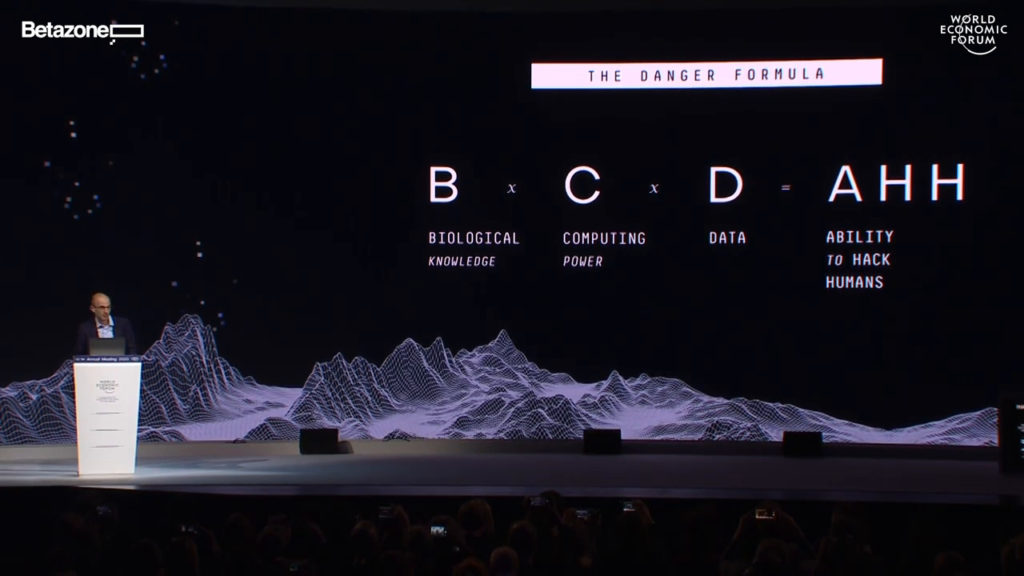

Alongside inequality, the other major danger we face is the rise of digital dictatorships that will monitor everyone all the time. This danger can be stated in the form of a simple equation, which I think might be the defining equation for life in the 21st century: B times C times D equals AHH [pronounced “ah”]. Which means: biological knowledge, multiplied by computing power, multiplied by data, equals the ability to hack humans, “ahh.” If you know enough biology, and you have enough computing power and data, you can hack my body and my brain and my life, and you can understand me better than I understand myself. You can know my personality type, my political views, my sexual preferences, my mental weaknesses, my deepest fears and hopes. You can know more about me than I know about myself. And you can do that not just to me but to everyone. A system that understands us better than we understand ourselves can predict our feelings and decisions, can manipulate our feelings and decisions, and can ultimately make decisions for us.

Now, in the past, many tyrants and governments wanted to do this, but nobody understood biology well enough, and nobody had enough computing power and data to hack millions of people. Neither the Gestapo nor the KGB could do it. But soon, at least some corporations and governments will be able to systematically hack all people. We humans should get used to the idea that we are no longer mysterious souls. We are now hackable animals. That’s what we are.

The power to hack human beings can of course be used for good purposes, like providing much better healthcare. But if this power falls into the hands of a 21st-century Stalin, the result will be the worst totalitarian regime in human history, and we already have a number of applicants for the job of 21st-century Stalin. Just imagine North Korea in twenty years, when everybody has to wear a biometric bracelet that constantly monitors your blood pressure, your heart rate, and your brain activity, twenty-four hours a day. You listen to a speech on the radio by the Great Leader, and they know what you actually feel. You can clap your hands and smile, but if you’re angry, they’ll know, and you’ll be in the gulag tomorrow morning.

And if we allow the emergence of such total surveillance regimes, don’t think that the rich and powerful in places like Davos will be safe. Just ask Jeff Bezos. In Stalin’s USSR, the state monitored members of the communist elite more than anyone else. The same will be true of future total surveillance regimes. The higher you are in the hierarchy, the more closely you will be watched. Do you want your CEO or your president to know what you really think about them?

So it’s in the interest of all humans, including the elites, to prevent the rise of such digital dictatorships. And in the meantime, if you get a suspicious WhatsApp message from some prince, don’t open it.

Now, even if we do prevent the establishment of digital dictatorships, the ability to hack humans might still undermine the very meaning of human freedom. As humans rely on AI to make more and more decisions for us, authority will shift from humans to algorithms. And this is already happening. Already today, billions of us trust the Facebook algorithm to tell us what is new. The Google algorithm tells us what is true. Netflix tells us what to watch. And Amazon and Alibaba algorithms tell us what to buy. In the not-so-distant future, similar algorithms might tell us where to work and whom to marry, and also decide whether to hire us for a job, whether to give us a loan, and whether the central bank should raise the interest rate. And if you ask why you were not given a loan, or why the bank didn’t raise the interest rate, the answer will always be the same: because the computer says no.

And since the limited human brain lacks sufficient biological knowledge, computing power, and data, humans will simply not be able to understand the computer’s decisions. So even in supposedly free countries, we humans are likely to lose control over our own lives and also lose the ability to understand public policy. Even now, how many humans really understand the financial system? Maybe one percent, to be very generous. In a couple of decades, the number of humans capable of understanding the financial system will be exactly zero.

Now, we humans are used to thinking about life as a drama of decision-making. What will be the meaning of human life when most decisions are taken by algorithms? We don’t even have philosophical models to understand such an existence. The usual bargain between philosophers and politicians is that philosophers have a lot of fanciful ideas, and politicians patiently explain that they lack the means to implement these ideas.

Now we are in the opposite situation. We are facing philosophical bankruptcy. The twin revolutions of infotech and biotech are now giving politicians and businesspeople the means to create Heaven or Hell. But the philosophers are having trouble conceptualizing what the new Heaven and the new Hell will look like. And that’s a very dangerous situation. If we fail to conceptualize the new Heaven quickly enough, we might easily be misled by naïve utopias. And if we fail to conceptualize the new Hell quickly enough, we might find ourselves trapped there with no way out.

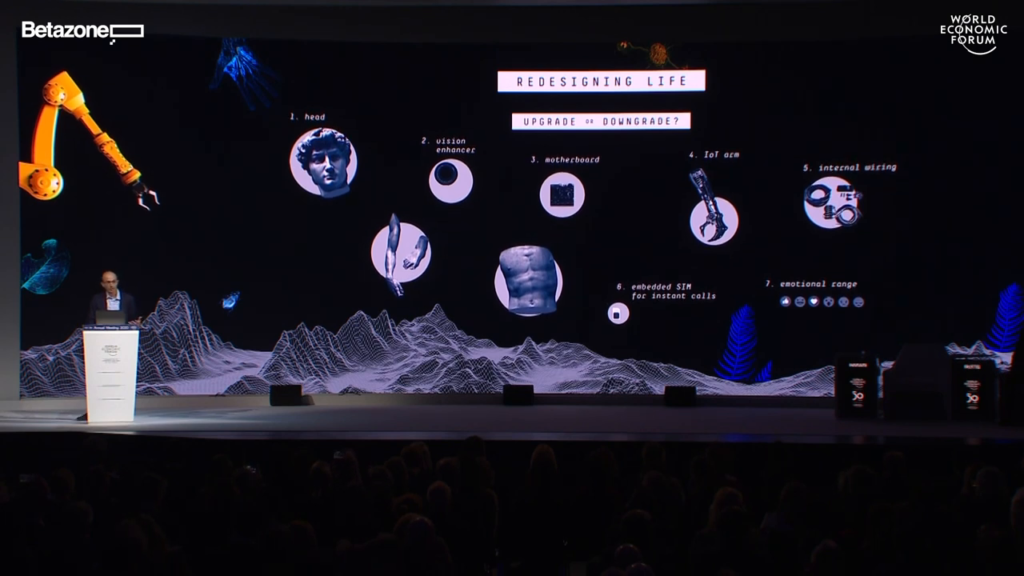

Finally, technology might disrupt not just our economy and politics and philosophy, but also our biology. In the coming decades, AI and biotechnology will give us god-like abilities to reengineer life and even to create completely new lifeforms. After four billion years of organic life shaped by natural selection, we are about to enter a new era of inorganic life shaped by intelligent design. Our intelligent design is going to be the new driving force of the evolution of life. And in using our new divine powers of creation, we might make mistakes on a cosmic scale. In particular, governments, corporations, and armies are likely to use technology to enhance human skills that they need, like intelligence and discipline, while neglecting other human skills like compassion, artistic sensitivity, and spirituality. The result might be a race of humans who are very intelligent and very disciplined but lack compassion, artistic sensitivity, and spiritual depth.

Of course, this is not a prophecy. These are just possibilities. Technology is never deterministic. In the 20th century, people used industrial technology to build very different kinds of societies: fascist dictatorships, communist regimes, liberal democracies. The same thing will happen in the 21st century. AI and biotech will certainly transform the world, but we can use them to create very different kinds of societies.

And if you’re afraid of some of the possibilities I’ve mentioned, you can still do something about it. But to do something effective, we need global cooperation. All three existential challenges we face are global problems that demand global solutions. Whenever any leader says something like “My country first,” we should remind that leader that no nation can prevent nuclear war or stop ecological collapse by itself. And no nation can regulate AI and bioengineering by itself.

Almost every country will say, “Hey, we don’t want to develop killer robots or to genetically engineer human babies. We’re the good guys. But we can’t trust our rivals not to do it, so we must do it first.” If we allow such an arms race to develop in fields like AI and bioengineering, it doesn’t really matter who wins the arms race. The loser will be humanity.

Unfortunately, just when global cooperation is needed more than ever before, some of the most powerful leaders and countries in the world are now deliberately undermining global cooperation. Leaders like the US President tell us that there is an inherent contradiction between nationalism and globalism, and that we should choose nationalism and reject globalism. But this is a dangerous mistake. There is no contradiction between nationalism and globalism, because nationalism isn’t about hating foreigners. Nationalism is about loving your compatriots. And in the 21st century, in order to protect the safety and the future of your compatriots, you must cooperate with foreigners. So in the 21st century, good nationalists must also be globalists.

Now globalism doesn’t mean establishing a global government, abandoning all national traditions, or opening the border to unlimited immigration. Rather, globalism means a commitment to some global rules. Rules that don’t deny the uniqueness of each nation but only regulate relations between nations. And a good model is the football World Cup.

The World Cup is a competition between nations, and people often show fierce loyalty to their national team. But at the same time, the World Cup is also an amazing display of global harmony. France can’t play football against Croatia unless the French and Croatians agree on the same rules for the game. And that’s globalism in action. If you like the World Cup, you’re already a globalist.

Now hopefully, nations could agree on global rules not just for football but also for how to prevent ecological collapse, how to regulate dangerous technologies, and how to reduce global inequality. How to make sure, for example, that AI benefits Mexican textile workers and not only American software engineers.

Now, of course, this is going to be much more difficult than football, but not impossible. Because as for the impossible, well, we have already accomplished the impossible. We have already escaped the violent jungle in which we humans have lived throughout history. For thousands of years, humans lived under the law of the jungle in a condition of omnipresent war. The law of the jungle said that for every two nearby countries, there is a plausible scenario that they will go to war against each other next year. Under this law, peace meant only the temporary absence of war. When there was peace between, say, Athens and Sparta, or France and Germany, it meant that now they were not at war, but next year they might be.

And for thousands of years, people had assumed that it was impossible to escape this law. But in the last few decades, humanity has managed to do the impossible, to break the law, and to escape the jungle. We have built the rule-based, liberal global order that despite many imperfections has nevertheless created the most prosperous and most peaceful era in human history. The very meaning of the word “peace” has changed. Peace no longer means just the temporary absence of war. Peace now means the implausibility of war. There are many countries in the world which you simply cannot imagine going to war against each other next year. Like France and Germany.

There are still wars in some parts of the world. I come from the Middle East, so believe me, I know this perfectly well. But it shouldn’t blind us to the overall global picture. We are now living in a world in which war kills fewer people than suicide, and gunpowder is far less dangerous to your life than sugar. Most countries, with some notable exceptions like Russia, don’t even fantasize about conquering and annexing their neighbors. Which is why most countries can afford to spend just about 2% of their GDP on defense while spending far, far more on education and healthcare. This is not a jungle.

Unfortunately, we’ve gotten so used to this wonderful situation that we take it for granted, and we are therefore becoming extremely careless. Instead of doing everything we can to strengthen the fragile global order, countries are neglecting and even deliberately undermining it. The global order is now like a house that everybody inhabits and nobody repairs. It can hold on for a few more years, but if we continue like this, it will collapse and we will find ourselves back in the jungle of omnipresent war. We’ve forgotten what it’s like, but believe me as a historian, you don’t want to go back there. It’s far, far worse than you imagine. Yes, our species evolved in that jungle, and lived and even prospered there for thousands of years. But if we return there now, with the powerful new technologies of the 21st century, our species will probably annihilate itself.

Of course even if we disappear, it will not be the end of the world. Something will survive us. Perhaps the rats will eventually take over and rebuild civilization. Perhaps then the rats will learn from our mistakes. But I very much hope that we can rely on the leaders assembled here and not on the rats. Thank you.