Leonardo Flores: Hi, everyone. I’m here to talk about publishing and preserving bots. This is both a few ideas, and an invitation. So, let’s quickly get to it.

Just a quick thing about my work on bots for those who might not be familiar with me. You can see me on Twitter. During the summer, I put together this WordPress site that’s a bot forum. You all have an invitation to join it. It’s a space to have conversations about bots. So I invite you to do this if you like. I’m mostly not so much a bot maker but a scholar of bots and electronic literature. I’ve reviewed and compiled resources on bots. Many of you I’ve reviewed and, not everyone, but I’m always interested and I’m always wanting to continue reading and reviewing and appreciating bots, and spreading it to the world.

The project I really want to talk about is, I’m part of the Electronic Literature Collection editorial collective, and this means that we’re putting together this collection of electronic literature. The ELO, the Electronic Literature Organization has published two of these collections in the past, in 2006 and 2011. They are wonderful resources for studying, teaching, experiencing electronic literature. You might ask yourselves “What is electronic literature?” and I’m going to borrow a little note from Nick Montfort, who used this distinction very well a couple of years ago in a presentation. First of all e‑lit is not e‑books. E‑books are the sort of industry-driven representations of the book in digital media. They’re top-down, they’re really about selling books in devices. Selling devices as well.

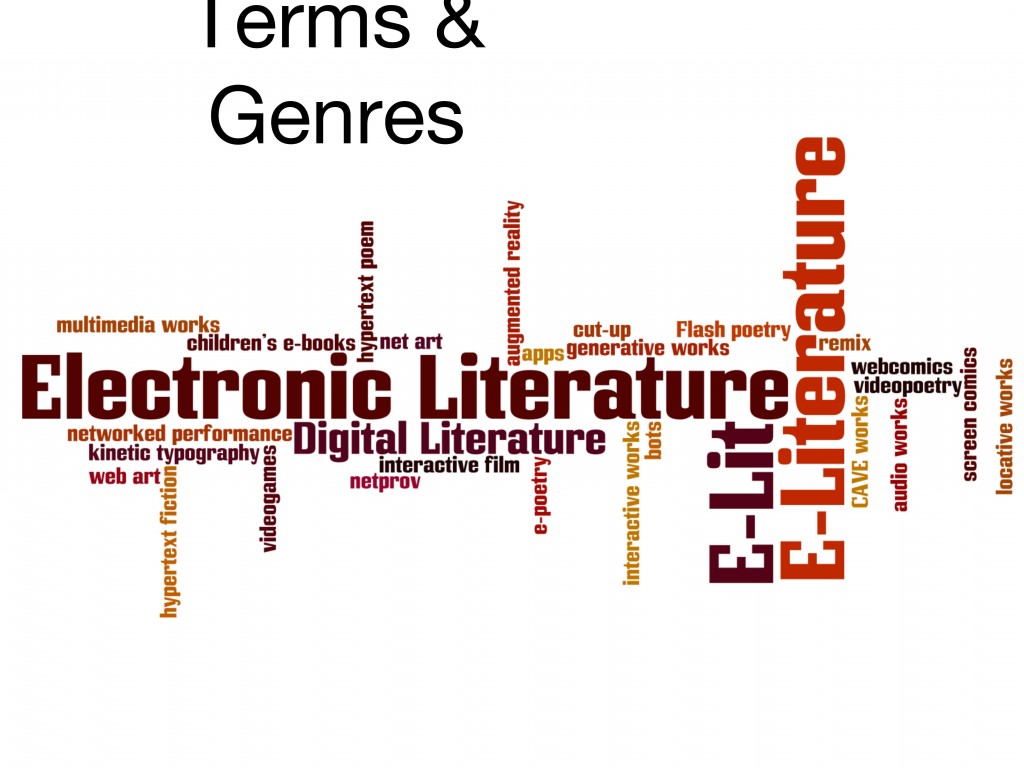

But e‑literature is this set of grassroot experimental practices that embrace the potential of digital media technologies to create innovative engagements with language. It’s what you’re doing. It’s essentially just people using digital media to create and be creative, and to engage language with those technologies. So there’s a ton of different genres that have developed around this. E‑lit is also known by many different names, e‑lit, e‑literature, digital literature, electronic literature, but you can see a bunch of different genres that have developed over the years, and bots are one of those genres and I think a very interesting and vital one.

And it’s digital context, right? They have these material dependencies. In this case, we see a lot of social network use. Twitter, Tumblr, Instagram, others have been mentioned. And these platforms are necessary but also productively creative spaces for us to mess around with. The work with the Electronic Literature Collection volume 3 is we’ve had this open call for submissions which ended on November 5 [2014]. I sent a lot of invitations out there to get some bots to be considered, to be submitted. And I think the question of why should we publish a bot? Aren’t bots already published on Twitter? I think the idea of publishing a bot in the ELC3 aims to do more. We want to contextualize the bots for the audience of the ELC3, people who study and are interested in electronic literature. To frame bots as a kind of electronic literature. To link to the live bot on Twitter. But we also want to offer materials so those bots can be studied. We want to preserve it for future generations. So what does this mean, exactly?

When we say we want to publish a bot, we want to publish an introduction to the bot; I mentioned that already. And we want to link to the live Twitter bot, but also I think it’s important to publish the bot’s source code. That way people can see how it works, they can remix it if they like, or replicate the engine, or perform code readings on that source code. I want to publish, and we want to publish, a snapshot of the bot’s activity. So the Twitter archive that’s downloadable. We can provide the raw CSV file, but we also would want to produce a nice interface to see the data. It might end up just being a big link to the tweets, and links to the individual tweets’ URLs, because I think that’s really interesting as well. Whenever a bot tweets something it is this digital object that exists on Twitter, and people can interact with that digital object. They reply to it, they favorite, they retweet. It gains a life of its own, so I want to provide access to those objects on the web.
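[Editorial sketch, not part of the talk.] The step described above, going from the raw CSV archive to a browsable list of links to individual tweets, could look roughly like the following. This is a minimal sketch under stated assumptions: the column names `tweet_id`, `timestamp`, and `text`, the permalink pattern, the account name `example_bot`, and both function names are illustrative and not confirmed by the speaker.

```python
import csv
import html
import io

# Hypothetical sample standing in for a downloaded archive CSV.
# The column names are an assumption about the archive layout.
SAMPLE_CSV = (
    "tweet_id,timestamp,text\n"
    "1001,2014-11-01 12:00:00 +0000,first generated line\n"
    "1002,2014-11-02 12:00:00 +0000,salt & pepper\n"
)


def tweet_links(csv_text, screen_name):
    """Return one record per tweet, each with a permalink built from its ID."""
    records = []
    for row in csv.DictReader(io.StringIO(csv_text)):
        # Assumed permalink pattern; it only resolves while the platform
        # keeps honoring these links.
        url = f"https://twitter.com/{screen_name}/status/{row['tweet_id']}"
        records.append(
            {"timestamp": row["timestamp"], "text": row["text"], "url": url}
        )
    return records


def to_html_list(records):
    """Render the records as a plain HTML list of links, escaping all text."""
    items = [
        '<li><a href="{}">{}</a> ({})</li>'.format(
            html.escape(r["url"]), html.escape(r["text"]), html.escape(r["timestamp"])
        )
        for r in records
    ]
    return "<ul>\n" + "\n".join(items) + "\n</ul>"


records = tweet_links(SAMPLE_CSV, "example_bot")
page = to_html_list(records)
```

Keeping the tweet text in the page alongside the link means the list still reads as a record of the bot's output if the links themselves stop resolving.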

I also want, and I’m thinking we might want to scrape some data on that individual tweet. If Twitter were to suddenly crash and burn, we want this to survive. We want to have at least a sense of, at the moment of publication, how was that tweet perceived? Just to kind of gather that data and make it part of what we publish. And of course, as long as Twitter’s there, as long as they honor and maintain those links, wonderful. You can just follow the link and see the updated version, the live version. But again, if it crashes and burns, we still have a record of it. I think that’s important as well. I’m thinking long-term preservation here.
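[Editorial sketch, not part of the talk.] One way to picture the per-tweet record described above is a small snapshot structure that stores how a tweet was received at the moment of capture, together with the capture time. Everything here is hypothetical: the `TweetSnapshot` class, its field names, and the helper functions are illustrative, and the counts are passed in by hand rather than fetched from any real service.

```python
import json
from dataclasses import asdict, dataclass
from datetime import datetime, timezone


@dataclass
class TweetSnapshot:
    """How one tweet looked at the moment it was captured (hypothetical shape)."""
    url: str
    text: str
    favorites: int
    retweets: int
    replies: int
    captured_at: str  # ISO 8601 timestamp of the capture, in UTC


def make_snapshot(url, text, favorites, retweets, replies, now=None):
    """Build a snapshot, stamping it with the capture time."""
    if now is None:
        now = datetime.now(timezone.utc)
    return TweetSnapshot(url, text, favorites, retweets, replies, now.isoformat())


def to_json(snapshot):
    """Serialize a snapshot so it can be published alongside the work."""
    return json.dumps(asdict(snapshot), sort_keys=True)
```

Recording `captured_at` explicitly matters because the counts are only meaningful as of that moment; the live tweet, for as long as it exists, will keep changing.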

Some concerns. Attribution and permission are concerns. For example a bot with copyrighted source materials. Can we publish that without getting the permission, or paying the copyright owners, for that material? I’m not sure about some of these things. The question has already been raised about what constitutes Fair Use, and whether something is being changed enough. Also do we need to contact and get permission of all of @pentametron’s and @haikud2’s attributed retweets and tweets? They’re retweeting other peoples’ tweets, isn’t that their property? Can we publish that? I want to, and my inclination is yes we must. But at the same time, it might be complicated. So it’s something worth thinking about. And of course the other concern is if Twitter crashes, or if there’s another botpocalypse, and it all comes crashing down. I do want us to have a record that this happened, even if the live bot doesn’t work anymore. Even if Twitter itself doesn’t work anymore. I would like for there to be a record in the ELC3 that these bots existed, and that people interacted with them, and they responded to them, and they produced things, and here’s a sampling of that, here’s a snapshot of that.

So I want to make a special invitation to you all. The call for submissions closed on November 5 [2014] but between us (And don’t tell anyone please; pretend this is not streaming live on the Internet.) the form is still open, which means you can still submit your bot, if you’re interested. If your bot kind of fits this idea of e‑lit, of this sort of engagement with language, there’s the link. Go and submit the bots, and we will consider them. This window, we will eventually shut down the submission form. We’ve already received over 400 submissions, and we’re thinking to publish about sixty works. So this will be competitive. However I think these bots can compete, and can compete very well. So I’m very interested in this, and we can have a conversation about this. If you have questions, comments, ideas, even beyond the scope of this particular bot summit, here’s all my contact information. Get in touch with me. Ask me the questions. Submit more than one bot. Give us some material to think about. And I’ll be very grateful. Thank you all.

Darius Kazemi: We did have a question from Matt Schneider, asking about preservation. This is sort of a mechanical question about preservation and concerning bots that use media, and rich media essentially, and that capturing the tweet is often not enough. Or even if a bot links to a web site and expects the user to visit that web site. You might want that web site in that context as well.

Leonardo Flores: Yeah, we can’t copy the whole Internet. We do have some space constraints. However, we’ll try to archive the things that are sort of in the purview of that bot however much we can. We’ll try to do as much as we can. But of course it’s a concern.

Darius: Allison can you talk about the excellent point you brought up in the chat?

Allison Parrish: This is something I feel gets left out of a lot of these discussions of preserving technology, like it’s kind of a big sub-field in electronic literature stuff, in particular. But I think the important thing (This isn’t a question but Darius is making me say it.) I think an important and interesting thing to do would be to do some ethnographic work in addition to archiving work, and interviewing people about their experience of reading or following or using a bot. And so that we capture a little bit of... because like you say you can’t capture the entire Internet, but we can have a record of somebody’s experience of doing that particular thing. I wonder what you think of that idea of including a little bit of ethnographic work in addition to the technical work of actually doing this archiving.

Leonardo Flores: Absolutely. I think if you’ve seen the Electronic Literature Collections, they all have a nice little introduction to each work. And I think this is a good space to include that kind of material. This Electronic Literature Collection can be what we make of it, and I’m game. I’m game and interested in considering any kind of additional material that enriches the experience of the work, and the documentation of the work, but we can document experiences of the work as well as the work itself.

Darius: Other questions, or comments on preservation?

Leonardo Flores: Do you think this might work? I think it seems sound, right? You can download the archive, you can get the source files, so at least that we can do.

Darius: I think it definitely seems sound. Then there’s just, how far do you take it? There’s an infinite amount of work that you can do in archiving, and I think it’s a matter of drawing lines, and maybe that’s a line that expands where necessary. Maybe it’s up to a bot creator to decide, “Oh well, I want ethnographies, and I want to scrape all the pages that I’m referencing” and that sort of thing as well. That’s my thought on that. I guess Nick and then Joel and then Eric.

Nick Montfort: I’m just going to mention we have in the second volume of the Electronic Literature Collection already documentation of installations. Like work that was done in a cave in the ground, and various places where we don’t have the work itself there. But we have information about it to show you some of what it was like. So we haven’t done this with bots, but we’ve already done similar types of work in the making that material available alongside computer programs that run, and multi-media pieces that work and so on, so that people do get this richer idea, what creative activity’s going on.

Allison: To be clear, I wasn’t talking about some perceived problem with the Electronic Literature Collection. I was just thinking, my point is that we could have a perfectly-preserved Commodore 64 or something, but it doesn’t mean anything to have just that artifact sitting there unless we also know what people did with it.

Darius: Joel?

Joel McCoy: I was just going to say that you’ve from the, minimum viable idea of what those archives are going to be… Ever since the @horse_ebooks situation, a lot of people are very interested in having the archive for that account, because at this point if you go by what’s still available in its archive, and what Favstar has got in most engagement, it’s always the content since it was taken over by a human being. It’s always been very interesting, even at a very basic level of “Alright, let’s bisect this archive into when it was script and when it was an art project.” So even just the raw tweet content, at least in that case, would be a very interesting piece of history to use. We don’t have it.
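[Editorial sketch, not part of the talk.] The bisection Joel describes, splitting an archive into the tweets before and after a known changeover, is a simple date partition once the raw tweets are available. This is a minimal sketch: the timestamp format, the `timestamp` key, and the function name are assumptions, and the cutoff date is supplied by the caller rather than taken from any real account's history.

```python
from datetime import datetime, timezone

# Assumed timestamp layout for archived tweets, e.g. "2014-11-01 12:00:00 +0000".
ARCHIVE_TIME_FORMAT = "%Y-%m-%d %H:%M:%S %z"


def bisect_archive(tweets, cutoff):
    """Split tweets into those posted before the cutoff and those at or after it.

    `tweets` is an iterable of dicts with a "timestamp" key;
    `cutoff` must be a timezone-aware datetime.
    """
    before, after = [], []
    for tweet in tweets:
        posted = datetime.strptime(tweet["timestamp"], ARCHIVE_TIME_FORMAT)
        if posted < cutoff:
            before.append(tweet)
        else:
            after.append(tweet)
    return before, after
```

The two halves can then be studied separately, for example to compare the generated portion of an account with the human-performed portion.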

Leonardo Flores: I would love, if anyone knows a way to contact the @horse_ebooks person. I’ve been trying, I’ve been asking around, but I don’t know how. I haven’t been able to get a hold of, I forget his name, but I haven’t been able to get a hold of him. I think it’s an important phenomenon.

Darius: Which part, though? The Russian who ran a spam account, or the artist who ran the not-bot, I guess?

Leonardo Flores: The one who bought the bot. I think it’d be interesting to include that piece. It was wildly popular. And to do a study of the generated part versus the performative, the human performance part, I think would be interesting.

Darius: Eric, you had a comment.

Eric: One thing that comes to mind if we’re going to be archiving source code for bots, is I feel like with any of these software preservation projects there’s always the problem of, you have source code but can anyone actually run it or use it or get it to do anything? And especially since Twitter controls the API to their service, a lot of the bot source code, at least in my experience, is often dealing with these types of APIs that change over time. So I was wondering A, what your thoughts on that are and then B, is if we’re going to start archiving bots in a serious way, is there some kind of—I almost want to say like a Twitter virtual machine or something we could program that will be stable. That in thirty years or something you could actually fire it up and run a Twitter bot and get it to do something, as opposed to like, the service may not exist anymore, the operating system might be different. It just seems very ephemeral right now.

Offscreen 1: I think that’s called [Archer?].

Leonardo Flores: I would love to have a small Twitter just running inside of the Electronic Literature Collection volume 3. As a matter of fact last night I was having a conversation with Susan Garfinkel of the Library of Congress. I’m at the American Studies Association conference right now. She was suggesting even creating this sort of mini Twitter-like space where someone could go interact with the bots, and read, like to publish ten or twelve or twenty bots in the ELC3, and have a little space where someone could potentially interact with the bots. But then that would require all kinds of additional programming. It would be a different kind of experience. So I think things break, but if you have the original source code, maybe twenty years from now someone can say, “Well all we need to do is reconstruct this, this, this, and this and [bring] the bot back to life, kind of fix it.”

Darius: I think that really depends on the nature of the bot, too. If it’s a bot that sources from people saying they’re lonely on Twitter, for example, unless you sample Twitter for six months and just run it on a constant loop, even then you’re going to get this weird scenario where it’s like, this is frozen in time. One of the things I like about bots is, like green bots, as Mark Sample would call them (Second Mark Sample citation!) is that they evolve with the world. So as slang evolves on Twitter, as memes evolve on Twitter, as news comes out, they stay topical. And I think it’s interesting, like I would be interested in making a closed time loop of Twitter that could be sampled or something and made a source. I think it would be imperfect, but also like emulating a Nintendo is imperfect, too. I’m certainly glad I live in a world where we can emulate Nintendos versus never playing Nintendo games ever again.

Joel: It’s like the weird idea of resurrecting the dead machine from thousands of years ago versus translating the content of old Amiga or Tandy manuals or whatever, and being like, “Here’s what this thing did. Here’s what it looks like to be brought alive in our world that we’re living in.” And I don’t know if resurrecting the ancient machine the way that our wise forebears left it to us is any better than recreating it in the world that we’re now in. So the idea of getting this “Stasis Twitter” seems less engaging than the idea of simulating a Twitter stream with RSS or some other system. Or whatever the modern network is, or porting it to whatever social network is popular [cross-talk]. That seems more fruitful as the procedural versus content [emulating?] that he was talking about.

Darius: I think that almost touches on some of the points Nick talked about in the translation work, where you want to just translate the sense of the work rather than shoot for a mechanical idea.

Leonardo Flores: And I think that’s why it’s important to have that sort of snapshot of the moment. Because it is a performance. Right now what we can document is the source code, but also the performance of the bot’s life, up to the moment in which, as late as we can before going to press so to speak, with the collection. And therefore that can survive.

Darius: You think like, happenings or Situationist performances and things like that. We have archives of them, but I’m never going to know what it was like in Paris in the 60s so I’m just not going to have that context, and the best I can do is read what people wrote about it, either from that time or people who were there or have studied it a lot. Nick.

Nick Montfort: I just want to make a bit of a case for keeping functioning artificial artifacts around. Because think about something like ELIZA, to speak of bots. Fifty years later, we still have psychotherapy. You can understand what that is, even if we didn’t understand exactly as in the 60s. Computers are certainly at a different stage, and development of natural language interfaces is different, and so on. But it’s not just a matter of thinking about how did that work on a teleprinter, back in 1965, and what was the office like and what was that experience. If we become too obsessed with trying to recreate that, we don’t give ourselves the permission to have our own experiences today with the same computational work, the same piece of art or literature that happens to be a computer program. You don’t go to the art museum, you go to the Met, you don’t say, “Okay let’s experience these exactly as they did in Egypt 2000 years ago.” We recognize that we live in the world today, and we’re looking at works that have been maintained and exist now. So I think it’s sensible to consider preserving things from an ethnographic standpoint and considering how people use them, but not all this stuff will only be of the moment. Some of it might be interesting in fifty, a hundred, or more years. And having it around, having source code around to have it run is part of that.

Darius: Yeah, it’s a “yes, and” type situation. I don’t think anyone’s saying we should throw the source code out the window. Although I could take that approach.

Nick: Or on the command line.

Further Reference

Darius Kazemi’s home page for Bot Summit 2014, with YouTube links to individual sessions, and a log of the IRC channel.

Leonardo has posted the slides for his presentation at his site.