Ethan Zuckerman: Our next act, and please come onto the stage, is titled “Messing with Nature.” And this is the first of two Messing with Nature panels. This one is on genetic engineering. And I’m going to hand you over to your moderator. Megan Palmer is a bioengineer turned policy wonk. She is now a Senior Fellow in international security over at Stanford, and she’s going to lead us through this conversation.

Megan Palmer: As the video here provokes us to ask, “messing with nature.” When it comes to a field as fast-moving and as high-stakes as genetic engineering, how do we proceed wisely? How do we balance our own wildness and civility as we develop increasingly powerful ways to interact with the living world?

Today we’re joined by three exceptional panelists, who are asking questions, pursuing lines of research, that are considered by some to be wrong, forbidden, sacrosanct. They’re really pushing the limits of our ability to engineer our environment and our selves. I’m looking forward to the chance to discuss with them how they grapple with that type of responsibility. Are there types of questions they don’t ask?

But this is a very personal issue for me, because I did train as a biological engineer right here at MIT. And there, I really only considered the constructive uses of the technology. Now, instead of engineering at the microscopic scale, at the molecular scale, I consider how we are shaped by and shape our social world at a global scale by being able to engineer living systems. More on that in a minute. First I want to introduce our panelists.

We’re first joined by Kevin Esvelt. He’s right here at the Media Lab. And he leads a group called Sculpting Evolution. And that group is really inventing new technologies to engineer ecosystems in really powerful and profound ways, but also asking how can we change the process by which we develop these technologies to ask the right questions at the beginning.

We also have Ryan Phelan. She leads Revive & Restore, whose mission is to increase biodiversity but specifically through genetic rescue, looking at species that are either on the brink or are already extinct, and how we can use new genetic tools to bring them back.

And lastly we have George Church, who is really a leader, a pioneer, and a visionary in genetics and in genetic engineering, who’s developed new tools and started major projects like the Human Genome Project and the Personal Genome Project.

I’ve asked them to each share about five minutes about their work, and then we’ll get into a discussion about why they do what they do. But I thought I would first share a little bit about my path and why I’m here today.

So, for the last five years I’ve participated in a multi-university research center developing the field of synthetic biology. MIT was one of those universities. And I helped to codirect part of that center that was focused on policy and practices, really asking difficult questions about the safety, the security, the ethics, the governance of getting better at engineering biology.

We confronted a number of very difficult questions, and we worked with both leaders in genetic engineering, but also experts in law, in the social sciences, in science and technology studies, and political science, to ask these complex questions all through the process of developing the technologies. Some of the questions that we came across were around does getting better at engineering biology necessarily increase our thriving, our security, our economic prosperity? Or might it imperil it? It’s the reality that microscopic organisms are trying to kill us all the time, unconsciously. So are our conscious efforts going to be more of a risk through either accidents, or through unintended consequences, or the intentional misuse of the types of technologies we create?

A second and often less appreciated set of questions had to do with, if we’re giving these tools to all the people in a distributed manner, first of all can we do that? Will it increase social inequities or will it decrease them? And does access to the tools necessarily mean access to the benefits and access to the risks?

And it’s easy to get caught up in paralysis when you’re asking these questions. Some of my colleagues call it the “half-pipe of doom.” We’re simultaneously destroying and saving the world all at the same time. So what we did was rather take a really case-based approach and really focus on what are the particular projects, and who are the particular people we’re working with?

One of the most successful places we looked at this, I think, is in the context of the iGEM competition. Some of you may be familiar with the FIRST Robotics Competition, where you get a kit of parts and get to build a robot over the summer and show it off and compete against your friends. Here at MIT, they started the International Genetically Engineered Machine Competition. Same idea. You get a kit of genetic parts. And then you give them to undergraduates to figure out what are they gonna make? They don’t compete them against each other; that would be a bad idea. But rather, they ask “what is the most useful thing we can do with this technology?”

And one of the things we can do with something like an undergraduate competition where people don’t know how to do science yet, is you can give them incentives and rewards to ask those difficult questions all along the way. So they actually show us how should we think about these questions. What should the rules be? Because in many cases, the rules and laws around this don’t apply.

The other thing they learn is something that I did not learn when I was in undergraduate or graduate studies, which is around norms that used to exist about the destructive use of biotechnology. They learned that the US, for instance, had an offensive biological weapons program that was considered at one point to be something that we would do. And since the 1970s, this is not what we do. We have an agreement that we are not going to do it. But they also learned that they have to uphold that norm over time.

Now, the difficult thing comes not with the kids, but with the adults. And as we are seeing these types of technologies that really push the boundaries of what we can do, that limit between what’s constructive and what is destructive gets really fuzzy. But that doesn’t stop us from developing new policies. So we already have policies around ethics and safety when it comes to the research in these labs. As of last September we now have new policies around dual-use research of concern, meaning all of biotechnology is dual-use, but some of it is potentially…concerning, more concerning. Things like work on pathogens.

But when it comes to applying those rules, we can’t really quite figure out in what cases should it apply. And when we develop the data to figure out okay, can we balance the benefits and risks? The jury is out. We actually can’t quantify some of those risks. So we’re left with questions about who should decide in the midst of uncertainty.

I don’t have answers but I hope that we can discuss today what that might look like. I’m going to allow Kevin now to go on and tell us a little bit about his work.

Kevin Esvelt: Thank you, Megan. So, I’m interested in the question of when we engineer life, what does that say to other people? And what are the repercussions, not necessarily to the living systems that comprise our world? Living systems that—let’s not doubt it—that we depend upon. That is, ecosystems are the life support systems of the planet. And it’s not unreasonable to say that we tamper with them at our peril. And of course, we’re doing that all the time.

But people are unusually sensitive to altering life. And we need to recognize that there’s a difference between altering a single organism in the laboratory and altering many. Because when we alter one organism, we’re tinkering with something that evolution has optimized for reproduction in the wild. And in general, when we mess with it, we break something. That means that when we alter an organism, it’s not going to survive and proliferate very well in the wild. Our change is not going to spread.

But there are some natural genetic elements that will spread in the wild, even if they don’t advantage the organism. And the dawn of CRISPR, which is a molecular scalpel that lets us precisely cut and therefore edit any gene in any organism, can also be used to build one of these gene drive systems that lets us spread that change through entire wild populations. That is, biology has always been of course a self-replicating technology. But when natural selection weeds out your changes, that’s not real self-replication. That’s self-replication in an environment you control.

With gene drive, we can spread those changes to the wild. This has immense potential to do good because this is a way to solve ecological problems using biology, not bulldozers and poisons. We could alter or remove the mosquitoes that spread malaria. We could solve schistosomiasis, known as snail fever. The Aedes mosquitoes that spread other diseases. We could control invasive species. We could, instead of spraying poisons to kill insects that eat our crops, we could tweak the pests so they don’t like the smell.

But what is it going to do if someone messes up? That is, we’re treading on the boundary of what many people consider to be fundamentally unwise or even profoundly morally wrong. We are messing not just with a single organism in the lab, or even ones that we might grow in our fields, but entire natural ecosystems. What if someone makes a mistake? What would the public backlash be, and how much harm would that do to this field and its potential to do good to save lives and species? Or to everything else we might do in biotechnology. Or even to biological research in general. You can’t ban CRISPR-based gene drive without banning CRISPR, and CRISPR is now one of the four pillars of biotechnology.

So how do we address this? Well, we are working to ensure that all work is done in the open. Because we have to ask, do we have the right to run experiments that could end up affecting everyone if we make a mistake behind closed doors? In medical research we demand informed consent before experiments can be run. But right now, there’s nothing stopping me or any other scientists from simply building a gene drive system in the lab that could impact the lives of everyone here. That’s a problem. Because we’re not always careful. There are laboratory accidents. So, some things are forbidden and arguably shouldn’t be. Other things, perhaps we should set up some more barriers.

But does that mean that laws are the solution? We’ve heard about the flaws of laws and regulations. So perhaps instead what we need to do is change the incentives so that research like this, that would have shared impacts, is always done in the open. That would actually accelerate progress. Because right now, it’s very hard to know what other people are doing, what other people can do. It’s very hard to find the other piece of the puzzle. It’s very hard to learn whether or not your efforts are really wasted because other people are doing the same thing. Science would be more efficient, and it would be safer, if done in the open. Because then everyone can keep an eye on one another.

So we’re working to ensure that this is possible by in part leading through example. We’re not only developing technologies that would let us alter local ecosystems, not just global ones, but we’re also pioneering an approach wherein you test the alteration, in the wild, without a gene drive system, and you do it in a local grassroots manner.

And we’re doing this by working with local communities. Just yesterday we went to Martha’s Vineyard, an island off the coast here, to introduce the idea of releasing mice engineered to be unable to carry the pathogen that causes Lyme Disease. Most cases of Lyme come when ticks bite these mice and get infected, and then bite a person. If we can prevent the mice from being infected, then we can disrupt the cycle of transmission in the ecosystem. No infected ticks, no infected kids.

But we’re doing it by approaching the communities at the earliest possible stage, before we’ve done anything in the lab, to ask for their guidance. And the response was remarkable. Martha’s Vineyard is known as a sort of crunchy nuts and granola community. People are very concerned with genetically-modified foods. But when we emphasized we’re coming to you first; we’re doing this in the open; this is a nonprofit; it offers obvious benefits; we’re very concerned about safeguards; we are not starting with a gene drive system; and we want independent monitoring set up by you to make sure that everything goes well and that you’re confident; we want to build in points where the project stops unless you give explicit permission to proceed. And out of the hundred people who attended this meeting, every single one supported moving forward.

So this is a way that we can proceed, that we can walk this line with research that people are very nervous about, by making it safer and increasing public confidence in this. Because education alone will not do it. You cannot simply explain what you want to do and how it works and trust that people will go along. You have to show that you understand their concerns. You need to invite their concerns. Because trust is not a given. It must be earned each and every time. Thank you.

Ryan Phelan: Extinction is a familiar concept to everyone in this room. But it might surprise you to know that just over a hundred years ago, no one really believed that you could actually wipe out a whole species off of a continent. And we did that with the passenger pigeon in 1912. It was really the first time the public really dealt with that concept, and yet it’s ingrained in all of us in this room today that extinction is forever.

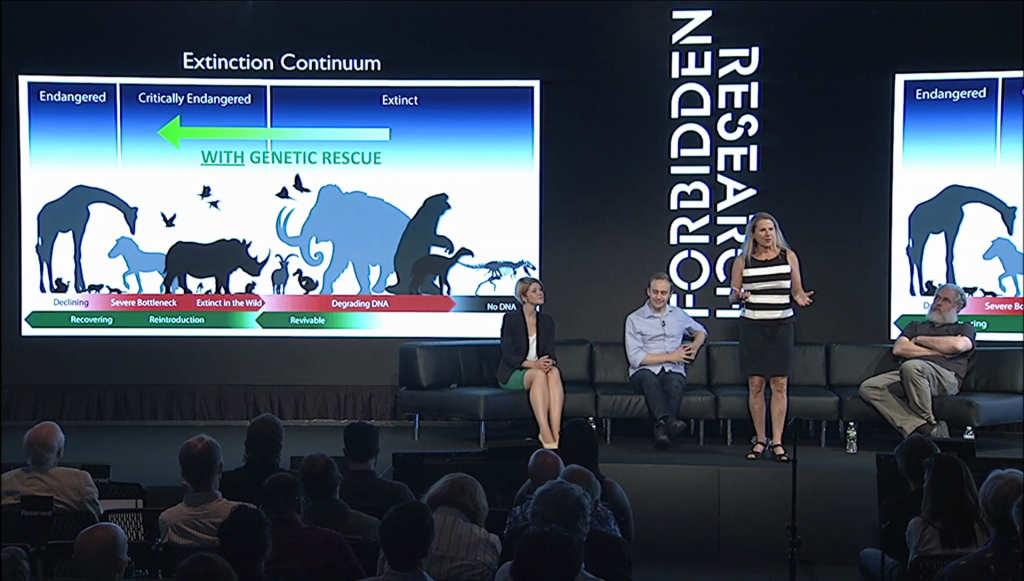

Slide: Revive & Restore Extinction Continuum

Well, on the left hand side of your screen, you’ve got those species that are walking into that line in the center, crossing over into extinction. And with the help of George Church and others, we have started to think about if there’s recoverable DNA, that it changes the game. And I’m going to share a little bit about that with you.

For the last four years, our organization Revive & Restore, which I started with Stewart Brand as part of The Long Now Foundation, has talked about the concept of using recoverable DNA for the woolly mammoth, for the passenger pigeon, and other extinct species, to actually bring them back to a healthy ecosystem someday. Now, this is a long-term project. And one of the things I want to share with you is the fact this idea of de-extinction has been applauded and it created an incredible media interest and a lot of public interest. But it’s actually strangely taboo for a lot of the academicians who have wanted to get involved with this project; for funders, who have been apprehensive about funding de-extinction and yet very supportive of the work that we do on that other side of that continuum slide, which is using the same tools of genomics to help endangered species, many of which are far along the line on that brink of extinction.

So it’s interesting to us that we’ve been able to see this change where we’ve been able to secure government sponsorship with US Fish and Wildlife, with corporate potential partners, with funders, for the kind of work that we might do with the black-footed ferret that’s an endangered mammal. Or for using genetic rescue to save the birds of Hawaii that are being plagued with avian malaria brought in by an invasive mosquito. There’s a lot more interest in that even though, as Kevin says, we might drive that mosquito to extinction. So you know, imagine how complicated that is for all of us. One side talking about de-extinction, another side potentially even talking about extinction.

And I think part of the problem with de-extinction, and it’s the argument that is raised by many conservationists and environmentalists, is it will take away from the concept that extinction is forever. That that slogan has been a rallying cry for environmentalists for the last fifty, sixty years that has made us motivated as citizens to protect those endangered species. And that’s a good thing.

On the other hand, if these technologies that others are pioneering can actually take specimens like the passenger pigeon from the toe pad and extract that DNA from over 1,500 specimens that are in museums all over the world of passenger pigeons— If we could actually bring that bird back, we could potentially recreate that ecosystem.

So their concern is taking money away from conservation, which is understood. But they see it as sort of a zero-sum game. And we want to make sure that we can attract more money for conservation and bring new awareness to these endangered species, like the birds of Hawaii with avian malaria. But the bottom line is they’re really also concerned about something that is referred to as the moral hazard. That we in the current generation may unleash a problem for future generations. That problem could be that de-extinction doesn’t really work. We’ve made it look easy, and people will take pressure off of protection, and they’ll inherit the problems that scientists today will be unleashing on those future generations. And that’s something that we all have to take very seriously, and I welcome this conversation today.

Thirty years ago today, literally, the Frozen Zoo at UC San Diego was viewed as being incredibly rogue with the idea of taking in and cryopreserving endangered species. And they raised that moral hazard argument with them. They said you know, “You’re gonna take the pressure off of protection if people think you can just reconstitute these species.” But thirty years later, we’re now taking those cells out and using them to increase genetic variation in the very same black-footed ferret that is suffering now from disease susceptibility because of the lack of genetic variation. Today, the Frozen Zoo is viewed as being prescient and thinking about the future for other generations. And I can only wonder, thirty years from today, what future generations will think of genomic technologies and the opportunity that we have had to explore bringing back, adapting, engineering, species to give them a greater pathway into the future.

George Church: So, when we talk about forbidden, we worry about things like commandments; hurting, or stealing…individuals. It’s even more problematic when we talk about hurting classes of individuals as we’ve heard already today. And even more problematic when we’re talking about impacting the entire world. And that’s why we get concerned about self-modification. About systems that can write their own code, that can evolve at alarming rates. We worry about things like artificial intelligence, but this is kind of a placeholder for actually changing ourselves. We’re hybrids. We’ve been hybrids. We’ve been augmented for a long time. We reassure ourselves that oh well, we’ve banned augmentation in sports, but most of our life is not about sports. It’s about… we’re quite willing to change our minds and change our bodies.

And many people will even do this well before FDA approval. There are now individuals that I know personally that are doing gene therapy on themselves, way in advance of animal and FDA testing. We’ve seen gene therapy fail in the past, but gene therapy is definitely with us. There are 2,200 gene therapies in clinical trials right now. Fully approved processes. Many of them are getting into practice.

This ability to self-modify is quite prominent, and we can’t say oh, it’s all about DNA, it’s all about genes. Because you know, there are many things that are heritable—in fact, most things that are heritable like this thing here. [holds up his cell phone] In my family we have generations that have used this. And when we talk about genes, you have this kind of genetic exceptionalism where you’re talking about irreversibility. This is ridiculous. Genetics is perfectly reversible. The things that are hard to reverse are the other things we inherit, which are things like a culture, and our technology. It’s very hard to pry this loose from our society, our computers and our phones.

What we’re worried about is something that we do that could be very attractive in the short term and have some triggering mechanism or some slow events that occur far in the future. And that’s why we worry about changing ourselves, changing our environment in such a way that we could have unintended consequences.

But doing nothing is also extraordinarily risky. We have a population which is easily twice what we thought was the carrying capacity of the globe, and it’s probably half of what we will eventually have. So there’s no magic number, but the thing is we need to be very thoughtful about unintended consequences, which means we need to have an open system such as Kevin has outlined.

And this has been a core theme for many of the people in this room, in this meeting today, and in my own lab. Over a decade ago, three examples of the making open… How people are involved in medical research so they can get access to their own data, whether it’s medical records or medical research. And then how they can safely understand what they’re getting into and share that information. A second example is the rise of synthetic biology. All of these methods of reading and writing genomes are an exponential curve which is faster than any technology has ever been. We’re measuring half-lives and doubling times not in decades but in months in these fields. And so you need to pay attention. So I argued for surveillance of the uses of synthetic biology, which is now fairly widespread among the companies that provide synthetic DNA.

So, what we need going forward is not just a reaction to what we think is yucky. I mean, for example germ line modification may be more acceptable to some people than abortion or in vitro fertilization. If you have a recessive disease in your family 25% of the embryos are at risk. With IVF it might be 80%. While with sperm modification it could be no embryos are at risk. You change the alleles back to the normal version of DNA that’s present in 99.9% of the population; it’s very well tested. We need to think a little bit out of the box rather than immediately rejecting some of these new technologies.

Altering our minds. We certainly do do this with chemicals. We are doing it increasingly. There are hundreds of thousands of people who have various electrodes implanted for sensory issues, for depression, for epilepsy, and so on. We will continue to do that, and it will become more and more biological. That is to say the compactness and the programmability of biological systems will enable this. And this will go much faster than anything we can do in the germ line. We can modify our selves real-time. It’s obviously going to be a faster revolution. It could spread as fast as the Internet does, while germ line takes on the order of twenty years to see impact. Thank you.

Palmer: So we’ve heard about potentially, technologies that can make species extinct, technologies that can recover them from extinction, and technologies that can alter us in ways we may not even imagine. What I’d like to talk about is—because there’s so many different issues that genetic engineering and genetics brings up—is to really bring it back to the personal.

So for you, in your work, in your labs, how do you actually reconcile whether or not you should do what you’re doing? Are there steps that you’ve had to put in place, or advisors you’ve had to put in place to help you wrestle with these questions over time? And I’ll start with George, because perhaps you have the largest group of potential advisors right now.

Church: Yeah. Well, step one is openness to being advised, to listening. Not just educating the public, but listening very carefully. And of course that can come from the government, industry, lay audiences, religious groups, and so on. And you need to hear what’s important to them, whether or not it makes sense to you immediately, scientifically, it makes sense in some larger global senses. So I think that’s number one where I can deal[?].

Also, just making a special effort to make our information public in a one-sided way, ignoring possible competitors that might take advantage of us and so forth, that’s I think an important part of making sure we don’t do the wrong thing. Because we need lots of input. It’s not just like some super-genius is going to figure it all out.

Palmer: Kevin, for instance, in your work is there a point at which with this meeting that you had at Martha’s Vineyard, what if the answer had been not 100% yes? What if it had been 75 or 50 percent? What would you do with that? What would be your next step?

Esvelt: In terms of proceeding, that’s a question for the community, and it’s an issue of governance. It’s up to the community to decide do they need a two-thirds majority to proceed, do they need unanimous consent, or what.

I was a little bit disappointed that it was unanimous, because I was hoping that we might have some skeptics. Because skeptics are actually the ones that we need. Because if we’re doing everything in the open and we’re inviting scrutiny, the people I trust to be the most careful in checking everything that we’re proposing, to find any kind of error that could sink the project— And make no mistake, we’re setting it up such that the project stops unless there is affirmative community consent to proceed.

Phelan: So, this question of community is important, because it’s which community. So you know, Martha’s Vineyard is a wonderful island. But imagine it’s not an island, or the species that is being engineered can go off-island, the same way that many of these invasive species came on-island; they came by boat, they come by plane. And which community is going to actually give permission for other communities that may be vulnerable. I think that just really changes the game for us in this field.

Palmer: Are there situations where in the work you’ve not had consensus? You have not been able to necessarily define a community, and yet still have made the decision to proceed? Or, areas where you haven’t.

Phelan: Well, I think for Revive & Restore, we certainly don’t have consensus that what we’re doing is a grand idea. What we have are people with varying opinions. And I think what we’ve found is much greater comfort on genetic rescue for endangered species. And it was partly through that public dialogue, George, that we had from when we put on TEDxDeExtinction with National Geographic. We got a lot of media, a lot of interest. But we had people say to us, “Can you help with some short term gains here with species that are endangered?”

So we learned from the public, we adapted from the public response. But we haven’t given up on the fact and the idea that de-extinction and the work that George and others are doing that is bleeding edge will help impact things for other species. So I think we don’t want to say you need consensus in order to do science. We wouldn’t move anywhere. But I think the way we need to do it, as Kevin you’re suggesting, is with a lot of public input and with a lot of safeguards.

Palmer: So, you guys have all made strides to develop innovative ways to get input, dialogue, with your communities. Not all of our community takes those same precautions. How do you interact with other people who are in the biological engineering, in the genetics community, to shape that culture that you discussed? The things that might be harder to pry away. Are there things that worked, or will there always be activities on the fringe that will just you know, not necessarily say this is a good idea? In particular the case of gene drives, where one actor alone could lead to a large consequence.

Esvelt: I have nightmares about fruit flies. Imagine an expanding wave of yellow moving from city to city, nation to nation, continent to continent, accompanied by headlines every time dramatizing the point that scientists cannot be trusted to deal with this technology.

So in dealing with that, we decided early on, on realizing what CRISPR-based gene drive could do, that we needed to tell the world first, before demonstrating it live. That’s just not done. You show that it works first, before you waste everyone’s time telling them. Now, it turns out we were right, because it’s the same mechanism used for genome editing and gene drive. But we got a lot of professional criticism from that.

And in a lot of other fields, that makes sense. But we’re entering a new era of increasingly powerful technologies, and the scientific enterprise incentivizes us to do everything behind closed doors, as George was talking about. And that’s hard because we can often get away with it. But what about our students? We’re responsible for helping them in their careers. If we’re open about their projects and a competing lab that is working in the dark scoops them, publishes first, that could be it for our students’ career.

And that’s a hard decision because it means that yes, we do have to pre-commit to force ourselves to do the right thing in the future in moments of moral weakness, but we’re not the only ones bearing that price.

Phelan: So, there’s one other example of where research is becoming quite open, and that is where human health issues are at an emergency crisis point like AIDS in the past, today with Zika. And I don’t know if you saw the recent editorials on this, really calling for open research approaches. And I think the more that this is surfacing, and you guys are really leaders in this, it’s going to impact the way science is done across the board. I feel like you guys are game changers for this.

Palmer: Can I dig a little bit more into what we mean by openness? We have lots of different ways that we can tell people about what we do, and that doesn’t necessarily mean that they are consenting to it. And it doesn’t even mean they’ll pay attention to it, or they’ll read it, or they will engage critically in the work that you put out there. So, one of the things that this reminds me of is in the regulatory review, or any type of review, we inform people of what we do and then we expect public comments. And I don’t know if you’ve read some of the public comments, but they’re not always there, and they’re not always good. They’re not always informative.

So as you’re thinking about projects that are bigger and bigger in scale, are there types of things, steps that you would like to take to make that a more engaging dialogue, to make that openness step-wise? What are the meaningful things we need to share, and what are the things that maybe are not worth sharing, or not efficient to keep sharing?

Esvelt: It’s hard because you can’t simply share your research results. We can’t simply have an electronic lab notebook and make it open and expect people to follow what you’re doing. That’s just too much information. There’s a reason why we write things into stories and share the stories. That’s what makes people pay attention.

And yet at the same time, we had a very near miss with fruit flies. And it was really sheer accident that we heard about the work and were able to contact the authors immediately and share our concerns. And they actually revised their paper days before it came out in the journal, in response to those concerns. And then we gathered together, worked with them and a bunch of other scientists, to come up with recommendations for laboratory safety of gene drive studies to make sure there wasn’t an accident.

Palmer: Can you elaborate a little bit on the fruit fly for those that don’t know?

Esvelt: So, one of the problems with a technology is sometimes it’s inevitable. So, we may have realized the implications of CRISPR for gene drive first, but even after we had published on it and told the world before doing anything, and then we demonstrated in the lab and so forth, another group independently realized that they could essentially teach the genome how to edit itself, and that this would be good for knocking out both genes in an organism. Because if you insert the CRISPR system, make it self-inserting to disrupt a gene, if it goes in once it’s certainly going to cut the other version and copy itself over. That’s exactly how a gene drive works.

But they weren’t thinking about a gene drive as in altering the wild population. They were thinking about, “We want to knock out both copies in one step very easily and reliably.” And so they developed it as a tool to do that, without really thinking about the implications certainly for any other species, but even really for in the wild and what would happen if they publish this as a method for many many other groups to use. And they weren’t the only ones. It’s an example of how even the best experts in a field, no matter how good their intentions, can’t reliably anticipate the consequences of what they’re doing on their own. The world is too complicated.

Palmer: So, what are any other types of things that we might be able to do, knowing that there will be mistakes? That there will be things we do wrong. What are some of the things you’re doing, either in your labs or with others, to help anticipate and perhaps have a more proactive response?

Phelan: Well, I think monitoring is going to be key in all of this, and how monitoring gets handled is— I think it’s way beyond the community level. I think anytime you’re introducing a species that’s been engineered in any capacity, there are known protocols for this. And I think part of what these new technologies have to borrow from are the existing safeguards that conservationists, environmentalists, have been using for decades now before they reintroduce wolves to Yellowstone, or reintroduce beavers to Scotland. They know how to do captive breeding, and they know how to do release in the wild, and I think we need to learn as scientists moving from the lab into the wild how to draw upon that expertise.

Church: So, there are various ways that we can get broad adoption that’s even larger than the small consensus that we have. So for example, we can aid synthetic biology companies to do computer monitoring of all of their orders, and then that can spread from company to company. And then that can be a very small bottleneck through which tens of thousands of researchers have to go, whether or not they want their DNA to be monitored. So that’s an example where you identify a bottleneck and you get consensus among the small numbers of DNA providers.

Esvelt: And sometimes you can create a bottleneck. So, right now we’re working to develop technology that would make transgenesis, which is editing the genome of an organism in the first place where you could insert a gene drive… Right now that’s limiting. If we can—oddly enough—accelerate that, make it easier, but require specialized equipment, we can make it like DNA synthesis. So, it will be easier for everyone to outsource their orders to a company that uses specialized equipment to do it, thereby reducing the incentive for lots of people to train their laboratory techs in the key techniques that are now limiting for building a gene drive system.

Palmer: I’m struck by the difference also in some of the narratives when it comes to talking about how we monitor and centralize to safeguard the technology in certain cases in this field, following up from the discussions around how we actually ensure we don’t centralize other activities in other fields, including in the types of technologies we use to communicate elsewhere. And so, how do we sort of reconcile the rhetoric around the “democratization” of those tools, of the tools that we’re [developing?] versus what it actually means to democratize the decisions and the monitoring and surveillance around them?

Church: So, we have another opportunity for getting broader engagement without necessarily huge consensus via new technologies. We have new technologies to do sequencing that are getting to be handheld. They will probably be parts of our cell phone or cell phone network soon, wearable sequencing, where we can do surveillance of microorganisms, not just the macroorganisms that we might be introducing in a de-extinction project. And that could be very helpful.

We have to get outreach, though, get people engaged. Do-it-yourself biology should be the ultimate in citizen science, really. It’s about yourself and your immediate environment. You should be able to monitor the allergens, pathogens, and so on. So there are efforts to try to get information out to the public, again through sort of passive methods. So, pged.org, for example, has outreach through screenwriters in TV and films, outreach to Congressional aides to the individual districts, so that they are genetically literate and they know what the options are. Things like How To Grow Almost Anything is something that’s a worldwide capability, builds on maker and fab society and so forth.

Palmer: I’d love to take some questions from the audience. I see we have one, and if others would like to ask questions please just use one of the mics, and definitely keep them to questions not comments.

Audience 1: I have a question about informed consent. Maybe you know that in the EU, informed consent cannot be given, per 2018, for algorithmic decision-making, for example. And you say that you have unanimous consent by 100 people in Martha’s Vineyard, which sounds good. But what is that consent? What are they consenting to? As researchers, we also don’t know what is to pass from the research that we execute, so what are we informing people of and how are they consenting to it, and how do you shape it in your research?

Esvelt: It’s a great question, and a challenge because all they heard was a single presentation on a technology that was admittedly imperfect in conveying the depth of information that really would be necessary to give truly informed consent. What they were consenting to was to move forwards with doing basic laboratory experiments that would eventually lead to a question to them, should we proceed at this particular stage? So it really cost them nothing to say yes here. We’re not going to be introducing mice because they said yes. And how we overcome that and make it truly informed, I don’t know. I think that’s the question before us, is determining how can we extend our admittedly imperfect mechanisms of governance to technology. Because now we’re at the stage where technologies developed by even individual researchers can have an impact as great as a law passed by duly elected representatives.

Church: One thing that we can do—have done—is to, when we’re getting consent for personal genomics or for biomedical research, we ask them to take a multiple choice question exam to simultaneously educate them and test their understanding. I think that’s one thing. And then better yet is having them do experiments, mostly analytic experiments rather than altering the environment, so that they get a feeling for it. So this is the power of do-it-yourself biology, and testing for knowledge.

Stewart Brand: Another question is, it sounds like career development for scientists and maybe engineers has a lot to do with the difference between sequestering and sharing. And if you get rewarded for keeping your stuff tight until it’s published in a prestigious and usually expensive scientific publication, that’s how careers go forward. But if what you’re shooting for is openness, and with urgency— If you’re trying to get the data out to everybody as fast as possible even though it’s not perfect yet—like happened with GenBank way back at the beginning—can career development move forward in a way so that people’s careers are rewarded for sharing rather than just for sequestering information?

Church: Well, one of the protections there is fear of being scooped. And so now the preprint archives that were started in physics and now are quite widespread in biology as well, is one way of preventing yourself from getting scooped. And I’m seeing more and more examples where that is reported on by journalists. They don’t wait for it to come out in a fancy journal. The fancy journals don’t hold it against you. You can eventually…essentially publish it twice, once in preprint form. But the preprint form is something that we can transact on and discuss. So I think that is a way of getting this more open. And the patent system was another way of doing that. I think the alternative to patents was trade secrets, and I think that causes obfuscation, and I think obfuscation in biomedicine is a very bad combination.

Esvelt: But it is hard because preprints are still too late. If what you want is to invite public opinions and concerns (getting back to that informed consent question), we need to ensure that people have a voice in decisions that will affect them. And that decision may be, “Do you even begin this experiment?”

So we need the openness to be at the earliest stages. That is, your grant proposals need to be public. So what we’re hoping is that we can ensure that this is true in the field of gene drive, where that should at least to me seem self-evident that you really need to at least inform people of what you’re going to do if your experiment could get out of control and affect them. At a minimum.

And if we can show that that works in gene drive, and change the incentives by talking with journals and funders and possibly even utilizing IP to ensure that that is the optimal course of action for individual researchers in that field, then maybe we can move it on from there. Say well, what about other shared-impact technologies? And maybe if this system is more efficient and leads to faster scientific progress, because I believe that this is a rare opportunity where we can, if we can overcome this collective action problem, accelerate progress and make it safer, then maybe that model can spread to the rest of the science.

Palmer: It’s always those collective action problems, right? We just need to overcome the collective action problem.

Audience 2: So in computing, we have open source software, but it doesn’t mean much without being able to verify that you’re running the open source software that you think you’re running. Are there other mechanisms for determining that the genetic modification, the bag of seed that you buy or the banana that you buy or the mouse that you’re deploying, is running the modification or has been modified in the way you think it has, and it hasn’t been kind of subverted in any way?

Palmer: When we talk about, as George said, “surveillance” in this field, we often mean just trying to understand what are the modifications that exist in the environment. So, environmental surveillance, trying to understand what are the microbes around us, what are the organisms around us. But when it comes to research on developing those types of tools, to understand the effectiveness over time, it’s a pretty underfunded area of work, often. And even knowing who are the researchers that might do work in that intersection between say, ecology in the environment and genetic engineering, that’s still an area we have to develop. And so I’d say in most cases no. But we’re trying. Maybe there’s… [gestures to panelists]

Church: Yeah. I mean, sequencing technology has come down about three million-fold in cost over the last few years. So in a way, that is the way we program these systems. There are parts of some genomes which are very hard to sequence, and if you hid your information in those parts— But the technology is also improving in making those dark regions brighter and brighter. So I think in a few years it will be very inexpensive to read the code of any organism and every single base pair of that organism. But we’re not quite there yet. Human genome is about a thousand dollars now.

Audience 3: There’s obviously a lot of applications that come out of genetic engineering and genetic drives. And I’m wondering about who’s going to be making decisions about the ideal human form, especially as it’s applied to humans and traits that might only be expressed in 2–3% of the population eventually becoming extinct and not being expressed any longer. And so I think there’s a potential threat to misdiagnose the problem and erase it from the expression of human diversity that we currently see. And not just human diversity, but also cultural richness that comes from natural forms of what we currently call disability.

So where do you draw the line and who’s going to be making the decisions of what gets expressed and what doesn’t get expressed? And even if we’re going to be looking at the ideal form as being the dominant form, what happens to the forms that aren’t expressed as often? And so whose hands are going to be involved in the pot?

Church: I just have to say that I think there’s increasing discussion of how we can increase diversity rather than decrease it. I think this is incredibly important. And with many of our technologies, we do see an increase. There is not the ideal mode of transportation. In the same sense, there won’t be the ideal of a human being or animal or plant.

I think that we can, each of us, discuss this broadly, what we mean by the advances of diversity. But the lesson that we’ve learned time and again with natural evolution, and in engineering, is that diversity is an extremely good thing. We’re not talking about just diversity of culture and color, but actual biological diversity, neural diversity. Many, I think, people in academia are extreme values in things that could be classified as deleterious under the wrong circumstances. So I don’t think that the technology itself necessarily prescribes one or the other. We need to do that as a community discussion.

Phelan: And you mentioned the idea of gene drives I think creating the ideal, limited, diverse human population. This is already going on today with preimplantation genetic diagnostics, where couples or individuals are making selections to reduce embryos that are carrying deleterious mutations. So I think this is something that is unleashed, it’s in current medical practice, and is only going to increase with or without gene drives. Don’t you think, George?

Church: Well, yes. I mean, in fact in America 80% of gender selection is for female, so we might all be female someday soon. Which might not be so bad. But the point is that to the extent that we can culturally encourage people choosing a diversity, not some life-threatening disease that causes pain in their child for years until they die, but there’s many other things that are subthreshold. It’s not clear that everybody would pick the same traits, but I think it’s a very important discussion. It’s good that we’re having it in advance of some things, but we’re certainly not in advance of in vitro fertilization.

Palmer: We’ll have the chance to take these last two quick questions, and then we’ll have a closing comment from our panelists.

Audience 4: Thank you. So, with regard to the de-extinction project, obviously there’s a whole globe of ethical questions around genetic engineering. Is any consideration being given to the availability or lack of availability of habitat for certain kinds of species?

Phelan: Yes. The International Union for the Conservation of Nature, IUCN, is the organizing body worldwide that deals with the Red List (that’s how people will refer to it) to determine whether or not a species gets put on that list. With Revive & Restore, we have helped shape de-extinction guidelines. And one of the very first questions, or one of the very first criteria—and there’s like twenty-five items listed—is whether or not there is appropriate habitat for a reintroduced species to flourish. It’s absolutely fundamental.

Other criteria I’ll just mention include ensuring that the purpose of de-extinction is to actually encourage the flourishing of the species in its natural habitat, not to be a zoo specimen or a curiosity, that the underlying justification is there. And other criteria include that the original source of the extinction, the cause of the extinction, whether it was hunting or poaching or pollution, has been removed and is not going to be an ongoing concern.

And then all the issues of animal safety and welfare and monitoring are part of that. But habitat has been the primary cause of much of the conservation movement, its focus, and I think rightly so. It’s been an issue, but increasingly we now have new challenges for species which are wildlife diseases, invasive species, and I should say alien wildlife diseases that are taking down in many cases bottleneck populations. And so that’s why these new technologies are so awesome.

Palmer: One last question, then we’ll give you guys a chance to—

Audience 5: I have a tremendously plebeian question. All the things you’re discussing are exciting and scary in a good way and in a bad way. But there is something that we haven’t talked about, which is regulation of things that are going on. There’s been a CRISPR product introduced on to the market. There’s a mushroom. It was edited in that way. There was no regulation, no discussion. Now, intellectually I understand that. They’re not changing genes, they’re not introducing genes from outside. But if you’re worried about the public adopting a new technology, there’s a lot of people who are not going to be happy that we’re suddenly just introducing this kind of thing without any discussion whatsoever anywhere. I don’t know what the regulatory framework should be, but maybe you do.

Palmer: I think this is a… I’m really glad you brought it up. You know, we have a biotechnology regulatory framework, the Coordinated Framework. It was developed in the 80s. A lot of things have changed since then. But it’s been kind of a political hot potato to look at it and re-question whether or not the assumptions and the way we broke down the rules at that time still apply today.

But actually, right now, the OSTP in the White House as well as others are reconsidering the framework for the first time, and trying to ask some of the same questions that you are. And that’s a perfect example of where the comment thread is either underpopulated or overpopulated when we go to public comment. So this is actually a perfect opportunity where there are some people who are trying to take the next steps. Where your opinion should be part of that discussion, to figure out how do we not necessarily demonize a specific technology, but focus on what do we want that technology to do, and how can we ensure that it’s safe enough, and applying in all the other aspects that we might want.

So, I want to thank you for your questions. I want to leave the panelists to have one last final comment. So you can make a comment or, what I would encourage you to do in the spirit of disobedience, is tell me what’s the one rule you love to break. Or, is there something that’s even more forbidden than the work you told us about that you are thinking about, and can you share it? And I’ll start with George.

Church: Yeah, well I suppose that the main rule that many of us in this room break is being silent scientists that just do our job. I saw my colleague say that it’s not their responsibility to point out something that they see that might be problematic. Especially if it could cause repercussions on their funding. So I guess that’s a—you’ve got to admit that’s a perfectly justifiable rule. I don’t feel particularly edgy stating that.

Yeah, I think that…just one comment on the GMOs. I think that the mushrooms and many other applications of CRISPR agriculturally have been discussed quite extensively. And in fact there’s a great deal of excitement that CRISPR causes mutations without introducing foreign genes from other organisms, and hence is different and more embraceable by the public than ever before. So I think there’s been a lot of discussion, but maybe not as much as we should have.

Phelan: You know, I’ve been in health care for three decades. And part of my MO has always been to challenge the medical authority and to give consumers access to genomics, access to medical information. And I feel like this change for me in the last five years into conservation is really to help give a voice to those endangered species and ecosystems. Because we really are stewards, and I’m going to go back to the film we started with. Do we have a responsibility to think beyond the human implication of these genomic technologies which are leading the way, and to start to share some of that technology with species that can’t do it on their own?

Esvelt: Well, one of my favorite paraphrasings of a prominent member of the National Academy… So, my friend and collaborator Ken Oye here at MIT was at a National Academy meeting discussing gene drive and our decision to go public before demonstrating it. And this eminent scientist who shall remain nameless stood up, and Ken’s paraphrasing of what he said was, “Don’t tell the Muggles.” We believe it’s important to tell the Muggles.

But that’s hard, because I’m also interested in correcting what I see as the fundamental flaw of evolution; we’re the Sculpting Evolution group. But evolution is amoral. And that’s hard because that means that nature is amoral. If what we believe is morally optimal is human or animal flourishing, wellbeing, evolution hasn’t optimized for that. Do we need to do that? Should we begin building organisms where we’ve engineered them for optimal flourishing and wellbeing? Do we understand enough to do that? Because if we do, then there’s certainly a moral argument that we should. But I’m not certain that the moral arc of progress, such as it were, has bent far enough to have that discussion yet. And that directly conflicts with the need to tell the Muggles.

Palmer: I want to thank all of our panelists. I can’t possibly tell you about all the forbidden things that I’m thinking about. But apparently we’ve already broken the rule of going a little bit over time, so thank you for your time.

Further Reference

Session liveblog by Willow Brugh et al.