Trevor Paglen: Well, thank you guys so much for being here, and thank you to the World Economic Forum for inviting me. I’m Trevor Paglen and I do lots of preposterous things. Really. I’m an artist, and you know, one of the things I really want out of art, what I see the job of the artist to be, is to try to learn how to see the historical moment that you find yourself living in, right.

I mean that very simply and I mean it very literally. How do you see the world around you? This is often harder to do than it might seem. The world around us is a complicated place. There are all kinds of structures and forms of power that are very much a part of our everyday lives that we rarely notice.

And one of the things I’ve been working on for fifteen years or so is looking at the world of sensing. Looking at, you know, the kinds of planetary-scale structures that we’ve been building that facilitate telecommunications but are at the same time instruments of mass surveillance. It’s something I’ve taken a really close look at over the years.

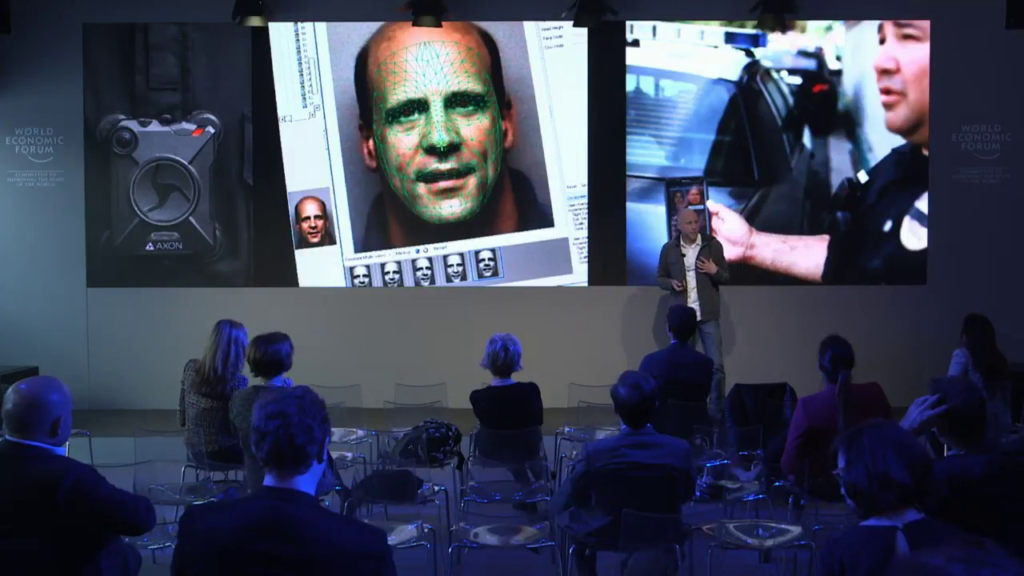

When we talk about surveillance, I think a lot of us have the idea that there are security cameras and somebody sitting behind all the monitors, watching what’s going on. That image is over. It doesn’t work anymore. Right now the cameras themselves are doing the operations. In other words, you have a traffic camera; that camera can detect if somebody is doing something wrong and automatically issue a ticket. So we’re building these autonomous surveillance systems that actually intervene in the world.

And a lot of people are saying that by 2020 there’ll be a trillion sensors on the surface of the Earth that are able to do this kind of thing. So this is something that’s very much transforming not only the surface of the Earth but our everyday lives as well.

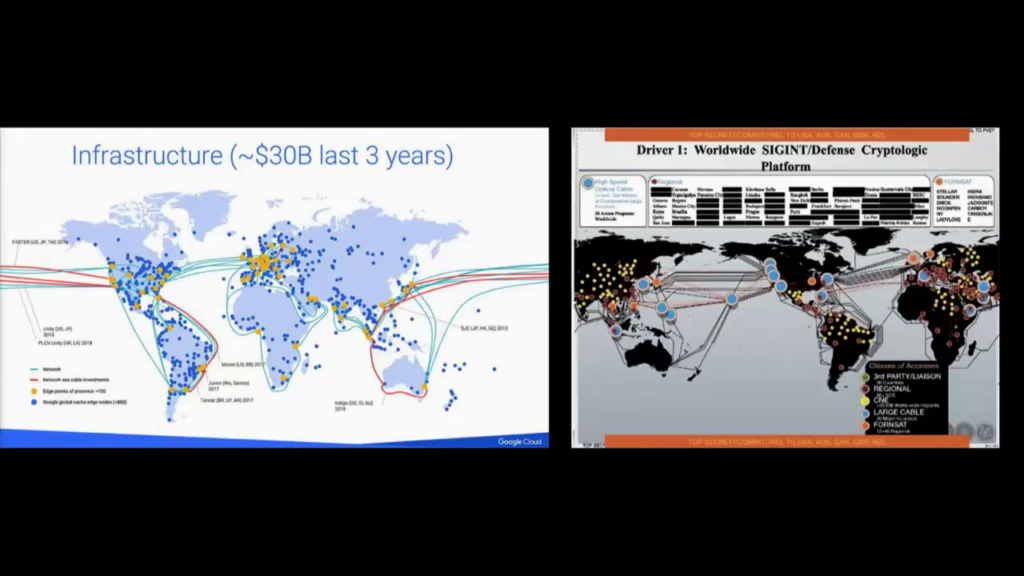

When we look at what these planetary infrastructures look like, on the left we see an image of Google’s global infrastructure; on the right we see the National Security Agency’s global infrastructure as of 2013. The point is that these are literally technological systems that envelop the Earth.

So one project that I’ve been doing is just trying to go to the places where these systems come together. Where does this infrastructure congeal in very specific places? A really important part of global telecommunications is choke points, places where transcontinental fiber-optic cables come together. Where are the continents connected to one another? These places are really important to telecommunications but also, obviously, very important to surveillance: if you sit on these places, you can collect most of the data that’s going through the Earth’s telecommunications systems.

What do these look like? Well, this is one of these sites, on Long Island. One in northern California at Point Arena. The west coast of Hawaii. Guam is really important to this kind of thing. Marseille, in France. And what do you see in the images? Nothing, right. The point of these images is that these are some of the most surveilled places on Earth. These are literally core parts of global telecommunications and surveillance infrastructure, and there’s no evidence whatsoever of that in the photos of these places. So what does that tell us, allegorically, about how some of these infrastructures and systems work?

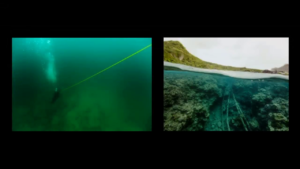

I started pushing this a little bit further. I thought, well, theoretically there should be these conjunctions of cables in the bodies of water in these images. And so I learned how to scuba dive in a swimming pool in suburban Berlin, as you do, and started studying nautical charts and undersea maps to try to find places on the continental shelf where I could maybe see these.

Going out with teams of divers, if you do everything right, you find images like this. As you can see, there are dozens and dozens of Internet cables moving across the floor of the ocean.

These are cables that connect the East Coast of the United States to Europe.

Now, when we’re talking about planetary surveillance systems, AKA planetary telecommunications systems, they’re not only enveloping the Earth in a series of cables and hardware and infrastructure. They’re also in the skies above our heads. Every minute of every hour there are hundreds and hundreds of satellites over our heads. One project I’ve been doing, again over many, many years, is trying to track and photograph all of the secret satellites in orbit around the planet, all the unacknowledged satellites.

This is done using data from amateur astronomers. Amateur astronomers go out, they see something in the sky, they look it up in a catalog, and if it’s not there, they know they’ve seen a secret satellite, usually an American military or intelligence satellite. They write down what they saw. What I can do is take that observation, model the orbit, make a prediction about where the object will be, and then, using telescopes and computer-guided mounts, pinpoint the place in the sky where I think it’ll be. And if you do everything right, which is rare, you get an image like this.

And this line here is the streak of something called the X‑37B, for example. This is an American secret space drone that’s currently on its fourth mission.

I also get into the culture of these things a little. This is from the crew patch of the guys that fly this thing. And this is the program office that controls it, an outfit called the Rapid Capabilities Office, which has this motto here in Latin, Opus Dei blah blah blah, “doing God’s work with other people’s money.” So this is a glimpse into the culture of this kind of stuff.

So the point is, we have surveillance systems that exist at the scale of the planet, that literally envelop the surface of the Earth and the heavens above it. But these scale down in various ways. They are also articulated, of course, at the scale of cities, down to the scale of living rooms, down to the scale of our bodies, even down to the scale of our thoughts and the questions that we ask.

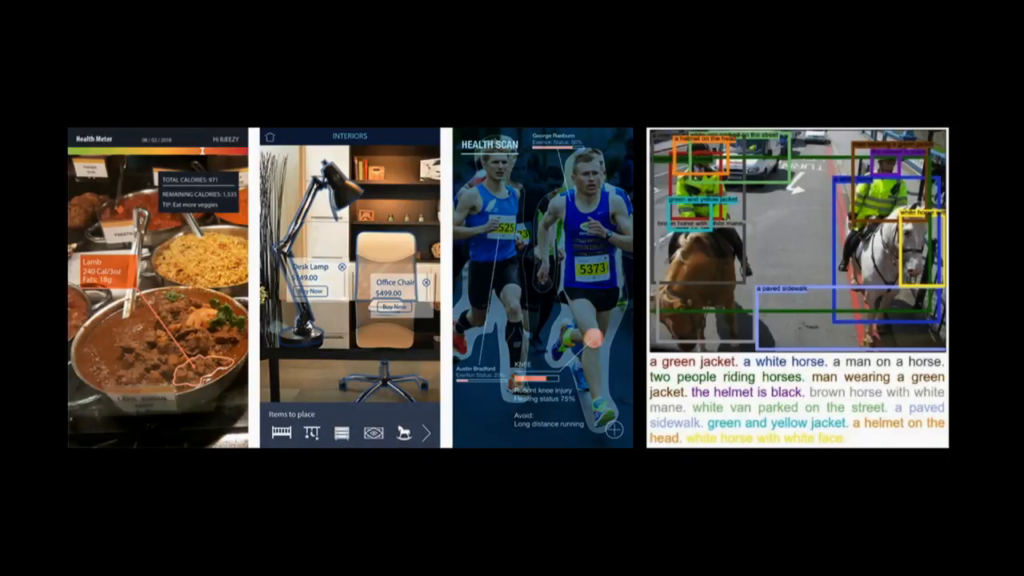

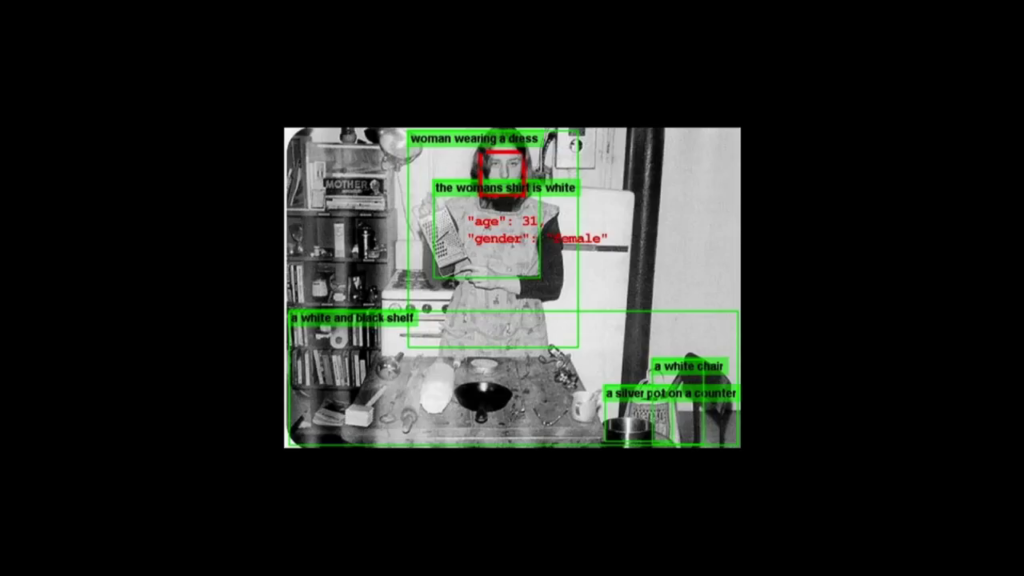

One of the things I’ve been developing in the studio for the last couple of years is a set of tools that allow us to make images showing what a computer vision system might see as it looks at the world, what a neural network might see as it looks at the world. In other words, tools that make images showing us what autonomous sensing systems are actually perceiving when they look out at the world.

This, for example, is an image of the US/Mexico border. And for those of you who don’t know, there is already a wall. What we’re seeing is the border, and overlaid on top of it, a vision of what the border looks like through the computer vision systems that are used to detect motion and detect anomalies. You know, we’re seeing the border through the very systems that surveil it.

A phenomenon that’s been going on for a while is ALPR, automatic license plate recognition. These are systems that take pictures of every single car that drives by on a city street, autonomously read that car’s license plate number, and either put it in a database that the police or law enforcement have access to or, again, issue things like traffic citations based on it. All without human intervention.

The same thing is starting to come online with police body cameras, which are now being outfitted with facial recognition technology to do something similar.

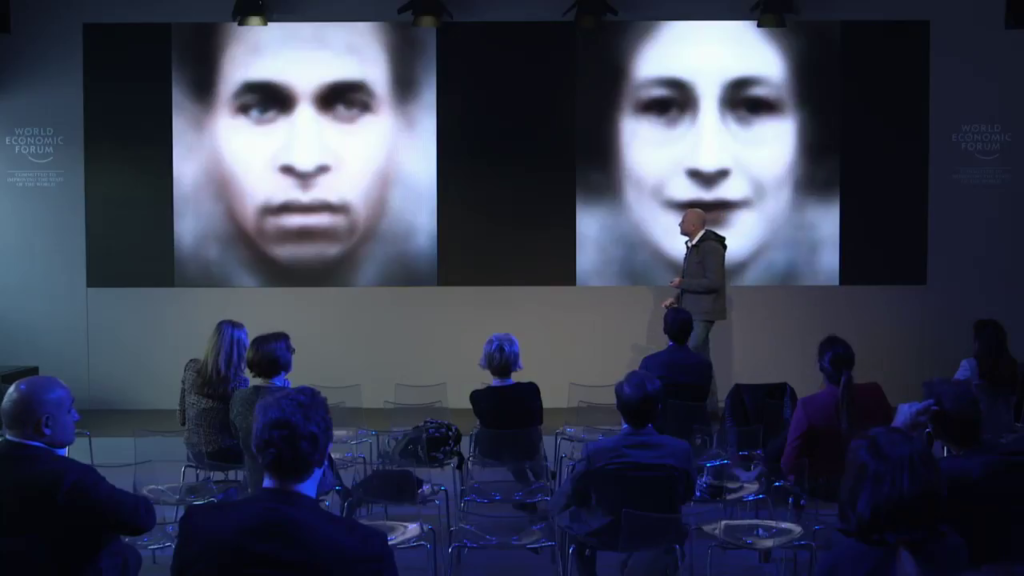

One of the tools that we have in the studio is the ability to make portraits of what people look like as they are seen by facial recognition software. And we’ve been running these on portraits of revolutionaries and philosophers from the past. On the left is the great post-colonial philosopher Frantz Fanon as seen through facial recognition software. On the right is Simone Weil.

This is also obviously happening in the commercial space. You go to a modern supermarket, there are autonomous systems identifying you, trying to understand when the last time you were there was, how much money you spent. What are you looking at? What’s your emotional state? What’re you interested in?

And they’re getting more and more intimate. Sensing systems are looking at what kind of food we eat. Are we going to the gym? Are we in good health? How are we behaving? Are we drinking too much? Do we smoke cigarettes? What kinds of objects are in our houses, and what does that say about who we are?

We’re at a point now where Google or Facebook or Amazon literally knows more about me and my history than I know about myself. And what are some of the implications of that? How do we think through that? What does that mean? What do we see when we actually look through these kinds of sensing systems?

Well, one thing that becomes very obvious when you spend some time with it: there’s a popular idea out there that technology is neutral, that it’s just how you use it. And I want to counter that. I want to say there’s no such thing as technology detached from how you use it. When you use it, when you deploy these kinds of systems, any kind of sensing technology sees through the eyes of the forms of power that it’s designed to amplify, the forms of power that it’s designed to exercise, whether that is military power, or law enforcement, or commercial power, etc. I think that’s one thing that you start to see.

And this brings up a lot of concerns for me. I worry about the future of these kinds of planetary autonomous sensing systems. I worry that they have a tendency to reproduce the kinds of racism, and patriarchy, and inequality that have characterized so much of human history. I’m also concerned that they represent enormous concentrations of power in very few places.

I said at the beginning of the talk that one of the things I want out of art is things that help us see the historical moment that we live in. How do we learn to see the world? But there’s something else that I want out of art as well. I want something that helps us see a world that we want to live in. And if you want to see that world you have to ask yourself what do you want? And so I spend a lot of time looking at these technologies and asking myself how would I want them to be different? What world do I want to live in?

I want to live in a world in which artificial intelligence has become decoupled from its military histories, from its commercial histories, from its law enforcement applications, and so on and so forth. So I started building very irrational neural networks. Neural networks that, instead of analyzing your face and detecting what emotion you might be feeling, look around at the world and see literature. In this case this is an AI that sees omens and portents, and this is an image it synthesized of a comet, or a rainbow.

This is an image that it synthesized of a vampire. This was an AI that was trained to see monsters that have historically been allegories for capitalism.

Histories of warfare or fantastic images.

I want an Internet…I want global telecommunications systems that are not the greatest instruments of mass surveillance in the history of the world. What would that look like? So I started building communications hardware for museums in the form of sculptures that create open WiFi networks. But instead of tracking all the data of all the people that connect to them, they do the exact opposite. They anonymize the identities of people connecting to the network and encrypt all the traffic so that nobody can see what people are doing when they’re using the Internet in a museum.

I want space flight that’s decoupled from the histories of nuclear war and the military histories associated with it, even the colonial imaginations associated with it. So I started building a satellite. This is a project called Orbital Reflector that’s due to launch this summer, between July and August. We just got our official launch letter yesterday. This was commissioned by the Nevada Museum of Art. All it is is a satellite that goes up into space and deploys a giant mirror about thirty meters, or a hundred feet, long. That mirror reflects sunlight down to Earth to create a new star in the sky. It lasts about two months and then burns up harmlessly.

So, to this question of what kind of world we want to live in: I want to live in a world that has more justice, that has more equality, and that has more beauty in it. And that is why I try to do these preposterous things. Thank you guys so much for coming.

Nicholas Thompson: So I will start with one thing that struck me while I was watching. When do you strive for beauty in your art, and when do you strive for banality?

Trevor Paglen: Yeah. That’s a really good point. You know, when I’m making art, I almost want to find out what something else wants to look like. I know that sounds really mystical, but usually the process I have is that I look at something and then I look at it again, and again, and again. And I wait for a kind of aesthetic language to emerge, almost as if it finds the form it wants to be.

And I don’t worry about that contradiction between beauty and, you know… People criticize me sometimes, saying, “Trevor, you take beautiful pictures of bad things,” right. And my counter to that is, you know, I’m not sure that’s how beauty works. I mean, I think we would all love to live in a world in which beautiful things were good and ugly things were bad. But I can tell you that if you go out with a telescope and look at the night sky, at the wonder of the cosmos, and then you see the tiny glint of a spy satellite flying through it, you will see nothing more beautiful in your life, even though the politics of that are something we could have a debate about. And so those kinds of contradictions are, I guess, what I try to inhabit.

Thompson: You stare at the cable or you stare at the surveillance station and then you think, “Does this thing want to be beautiful? Does this thing want to be…?”

Paglen: It’s not that conscious, you know. You just work with the materials until you find something that speaks to you.

Thompson: Right. Okay. We have some excellent questions here. First one from Allison Martin. “When can surveillance be good for us? Crisis response, for instance. How do we balance the good with the less good?”

Paglen: Yeah…

Thompson: So if there’s a disaster, we want to know where the building fell and find the people.

Paglen: No, absolutely. I mean, I think this is exactly the question I’m trying to ask, right, in the sense that we have these technologies. Technologies, I will insist, amplify the forms of power they’re deployed to serve. So what places in society do we want to optimize? What places do we not want to optimize? I would argue we want to optimize energy efficiency, for example. Nobody wants to argue with that; that’ll help the planet. We can do that.

Do we want to optimize for…extracting money out of people? If you do that, then you’re going to produce a system that does that. And so I think for me that’s the big kind of question.

Thompson: I believe some of those companies might be here in Davos.

Paglen: Yeah, that’s the whole entire premise—

Thompson: It’s a very successful business.

Paglen: Yeah, no. I mean, that’s the premise of software platforms. That’s the premise of a Google or a Facebook.

Thompson: But let’s hang with the question a little bit longer. As you think about surveillance technology developing, how would you want to push it so that it ends up being used more for good causes than for causes you see as bad?

Paglen: Yeah. You know, I think—

Thompson: How do you push tech… I mean, one way to push technology is to show art of it and have us think about it and have conversations.

Paglen: Of course.

Thompson: But how else do you try to push technology?

Paglen: You know, I think it really does come down to this question of what world do I want to live in, right? And when I think about the Internet, for example: I love the Internet. I use the Internet all the time. On one hand, I want an Internet that’s like a library. Libraries are very important institutions in democratic societies for two reasons. One, you can look up anything that you want and get any kind of information you want. Two, equally important, the police don’t get a record of the books that you checked out, right. And that’s not…you know, Google doesn’t get a record of…whoever.

And so those are the kinds of institutions that I want. I think the collective thing that we should all be doing as we are building this technosphere, or whatever it is, is asking ourselves what we want the world to look like. Where do we want to apply these kinds of technologies and sensing systems? And what kinds of places do we want to exempt from them? What are the places in society that we actually don’t want to optimize?

Thompson: This is a question that ties to that. We have a question now that came in through that feed from Zimbabwe. “Don’t you think that surveillance actually assists the way of life for us, although it inconveniences a few?” And there is an argument—we were talking about this before, that actually getting lots of data on lots of people helps bring people into the financial system. It helps lots of people, particularly in developing countries. Is there…is surveillance something we worry about too much in the West, but actually it’s beneficial for the world as a whole?

Paglen: Well, let’s think about what the implications of that are, right. So, today the most obvious use of data collection is like, selling us stuff. So maybe they want to sell you Crocs and sell me like, motorcycle boots or whatever it is. And what have you. And we can argue about whether we like that or not. But as business models evolve, the point of any business, and you’d be negligent not to do that, would be to extract as much money out of the data that you’re collecting as possible, in a kind of heavily capitalist system like the US.

And so what am I going to do with that data? Well, I’m going to figure out your lifestyle. Are you a healthy person or not? Then I’ll sell that information to your health insurance company, and they might say, “Oh, you drank a Coke this week. That’s going to cost you an extra five bucks on your health insurance premium.” And you can think about what the logical implications of that are. If you play that out, on one hand you are creating a society in which inequalities are going to become much more acute. And you’re going to create a society that ultimately is a lot more conformist, because there’ll be serious consequences for doing the kind of stuff I did as a teenager. Like, you screw up and do weird stuff.

So you’re going to fundamentally change what the culture is as well. And I worry that by not exempting parts of everyday life from surveillance or data collection, that the consequences are not going to really be a world that we all feel perfectly free in, honestly.

Thompson: One of the details I love about Trevor’s life is that he lives in Berlin next to the old Stasi offices.

Paglen: It’s true.

Thompson: We have another question, this one from Anonymous. “What is the one technology that worries you the most?”

Paglen: The one technology that worries me the most. I mean, I think right now it’s probably a combination of artificial intelligence plus capitalism, or plus state power. And what I mean by that is the ability to do stuff with massive data sets, right. So in the case of the US it’s what we talked about with credit or health insurance, the ways in which our everyday lives and our de facto liberties are actually modulated, and whereby companies are incentivized to draw as much money out of us as possible. Or you have a system like the one China’s setting up now, this kind of social credit score system, where the state becomes an institution that uses similar tools in order to regulate the docility or conformity of a population.

Thompson: Is that what worries you the most? The system in China, which was the cover story in Wired magazine last issue, if anybody’s not familiar with it. PSA. If you were to say, of all the major projects in the world, is the surveillance system, the social credit score being built in China, the one that worries you the most?

Paglen: I think to me the social credit score system is the clearest distillation of what is happening in all surveillance platforms in general. So for those of you that are not familiar, the Chinese social credit score system is a system that surveils everything that you do and gives you kind of good points if you’re kind of obedient and say nice things about the state and you pay your taxes, you go to work on time, whatever. A range—

Thompson: You lose five points if you interview Trevor Paglen on a stage…

Paglen: You lose social credit points, you know. And this has implications for what state services you’re able to access, what schools you’re able to go to, whether you can get a visa or not. You can get travel enhancements if you’re good, and you can get travel restrictions if you’re bad. And so it’s a very obvious way in which you can see a society with a strong centralized state, wanting to maintain a population a certain way, using these kinds of tools to do that.

Thompson: [indicating his event badge] It’s kind of like this, except the color keeps changing based on what you do here at the World Economic Forum.

Let me ask you about how the move towards AI affects your art. Because if your art is photographing specific things, that’s relatively discrete, right. You can photograph a satellite, a station, a cable. How do you deal with this new world we’re going into where everything is going to be inside of code? How will that affect your career as an artist?

Paglen: Yeah, it’s been really interesting. The last couple of years I’ve been working a lot with AI and computer vision, and trying to… It’s almost like going inside the cables themselves and trying to see what world lives in there. And obviously it’s a world that has no analogue to our lived experience, our everyday life. Even to the point where you can’t really understand what’s going on in a neural network even if you’re building it and you’re a computer scientist. I mean, they’re famously inscrutable to humans.

So I think the way I have been trying to deal with that question in particular is that you almost have to make a literary turn. You know, finding metaphors, finding allegories. That’s what I was doing with the AI, where I was training it to see images from Freud or Dante, or taxonomies of things that I would make up. You try to find allegories.

Thompson: That’s a way of seeing a new kind of AI. How do you explain, through art, how the inner AI of some of the surveillance companies works?

Paglen: I’m not sure that’s really what my job is as an artist, you know. I really think that my job isn’t so much to explain to you as it is to create a series of images that you can use as a kind of cultural vocabulary with which to try to have a conversation. So I think it’s an adjacent project to something like journalism or criticism. In order to understand the world, we need language; we have logic, we make arguments on one hand, we have evidence and data. But we also experience the world through images, through culture, through music. And we put all these together to form a worldview.

Thompson: And so why and how did you make this your job? How did you decide on explaining surveillance to people through art? Because it’s not like… You probably didn’t study that in college.

Paglen: Well, I did study that in college. I have degrees in art and I have a degree in geography as well. And I think that doing this kind of work is…you just do it or you don’t. I mean, I really feel like in terms of doing art, you either do it or you don’t, you know.

Thompson: But you started with one project on surveillance. When did you decide that was going to be the thing you would devote your life to?

Paglen: Yeah, I mean one project always turns into another one. So in the aughts I was doing a lot of work around the CIA and, you know, the war on terror, and trying to understand the visual landscape of that, as well as the relationship between secrecy and invisibility in it. And then as a result of that work I was brought on to do some work around the Edward Snowden project and the Citizenfour film, which turned into a project about the NSA and global infrastructure. Then at some point you look back and say, okay, this is what the NSA looks like, but wait a minute. There’s this bigger thing called Google; what’s going on there? And so I think one project just always turns into another.

Thompson: So we have another question that’s been upvoted. “What kind of technology do you use or avoid using as a private individual?”

Paglen: No, it’s a really good question. So I use the same technology—I’m on Twitter. I’ve got the smartphone. There are… A lot of us imagine, you know— One of my pet peeves is when people say, “Well, we just give all our information to Google,” or, “We just give all our information to Facebook. We just give all our information to Apple by having smartphones.”

These are not systems that you can opt out of, right? I mean, in practical life, if you want to have a job, you have to have a smartphone. If you want to stay connected with your friends, you’ve got to have a Facebook account, etc. So I don’t think that submitting to these kinds of systems, or having them be a part of your everyday life, is something you can easily opt out of. I don’t actually think we have much choice in that.

Having said that, if I’m doing something like Googling questions I might have about my own personal health or things like that, I’m going to use tools that encrypt that. So I’ll use Tor, for example, to do that. I’ll use Signal to communicate with other people. Just because I don’t necessarily want that kind of thing to be in my metadata signature. So I think a lot about what that metadata signature looks like, what I’m not so worried about, and what I think might have unintended consequences down the line.

Thompson: Right. One of my favorite tools to limit that is software that will Google thousands and thousands of random phrases just to confuse your metadata signal.

Here’s a pointed question. “Have you considered using your work to do good? Solve crimes and democratize access to technology, so it’s not only for those who can afford it.” So I think that’s sort of the software you’re creating, the tools you’re creating.

Paglen: No, absolutely. So, I think in terms of doing good, we always operate at the scale that we’re able to, using the institutions that we work with. So I’m an artist. I work with museums. That’s a place where I can make an intervention. The software that we use is all open source, and we publish the code and everything like that. But you operate within your sphere of influence, you know.

Thompson: I think you’re doing good. We only have about two minutes so I want to ask you, you’re about to launch a satellite!

Paglen: Yes.

Thompson: I think you might be the only guy in this room launching a satellite and we kinda just breezed past that. So please explain why you’re launching a satellite, what the satellite is going to do, and when does it go.

Paglen: Yeah. So the satellite is a project commissioned by the Nevada Museum of Art. It’s conceived of as an Earthwork. Earthworks are traditionally big art projects that are out in the middle of the desert. You drive out there, you see it, it’s a big artwork. And the Nevada Museum of Art, because there’s a lot of desert there, commissions a lot of these things.

So this is commissioned as an Earthwork. It’s a small satellite. It actually piggybacks on a spy satellite from Vandenberg Air Force Base in California. It goes into orbit, and then once it’s in a 575-kilometer orbit, it opens up and inflates a giant mirror, basically, in the shape of a diamond, about 100 feet long. Then as that mirror goes through space, it catches sunlight and reflects it down to Earth. There’s a window for a couple of hours after twilight and before dawn when, if you look up in the sky, you’ll see the sunlight reflected off of it, and it will look like a star about as bright as one of the stars in the Big Dipper. And there’ll be a web site and an app where you can say, “I want to see this project,” and it’ll say, “Oh, you’re in San Francisco. Go out at 8:15 tonight and look at this constellation; here’s the extra star.”
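For a sense of scale, the 575-kilometer altitude mentioned here can be turned into an orbital period with Kepler’s third law. The sketch below is a rough illustration under stated assumptions, not anything taken from the Orbital Reflector project itself: it treats the orbit as an ideal circle (two-body motion, no atmospheric drag, no correction for Earth’s oblateness), uses standard textbook values for Earth’s gravitational parameter and equatorial radius, and the function name `circular_orbit` is our own.

```python
import math

# Standard textbook constants (assumed values, not from the talk):
MU_EARTH = 3.986004418e14  # Earth's gravitational parameter, m^3/s^2
R_EARTH = 6_378_137.0      # Earth's equatorial radius, m


def circular_orbit(altitude_m: float) -> tuple[float, float]:
    """Hypothetical helper: return (period in seconds, speed in m/s)
    for an ideal circular two-body orbit at the given altitude."""
    a = R_EARTH + altitude_m  # for a circle, orbit radius = semi-major axis
    period = 2 * math.pi * math.sqrt(a**3 / MU_EARTH)  # Kepler's third law
    speed = math.sqrt(MU_EARTH / a)  # circular orbital speed
    return period, speed


period_s, speed = circular_orbit(575_000)
print(f"period: {period_s / 60:.1f} min, speed: {speed / 1000:.2f} km/s")
print(f"orbits per day: {86_400 / period_s:.1f}")
```

Under these assumptions the period comes out at roughly 96 minutes, so an object at that altitude circles the planet about fifteen times a day; a real spacecraft’s period would shorten slowly as drag lowers its orbit.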

Thompson: So the point is just for us to be able to see it?

Paglen: That’s the entire point. The point of this satellite was to build a satellite that, as much as possible, had no military, scientific, or commercial function, right. It was trying to build a satellite that, as much as you possibly could, was just an aesthetic object, and what’s more, to mobilize the ingenuity of people in the aerospace industry towards that goal. So in that sense I think of it as a preposterous object, or an impossible object. I’m trying to make an object that contradicts the logic of every other satellite that’s ever been launched.

Thompson: Do you worry that you’re going to…contradict some of the wonder we have when we look up at the sky? I think about my kids looking up at the sky, and you’re seeing stars but you’re also seeing Trevor Paglen’s Earth project…

Paglen: Well, I think the bet is that having Trevor Paglen’s art project in the sky gives you an excuse to take your kids out there and look at the sky.

Thompson: There you go. That’s even better. Alright, thank you very much. I’ve got to—speaking of surveillance, go to three events hosted by Facebook. Thank you Trevor Paglen. I think he’s awesome. I think his work is amazing. Thank you for being here. I very much enjoyed that conversation. Thank you for all the questions.