Malte Ziewitz: We thought that as we are waiting for the coffee to kick in, we should at least give you a brief introduction to the conference theme which mainly involves three things. One, to briefly recap how this conference came about, what was it that puzzled us initially and that led us to think about something like Governing Algorithms.

And then second, share five selected scenes from the provocation piece that some of you might have read. This provocation piece actually started as a record that Solon Barocas, Sophie Hood, and I just put together for ourselves initially, but then we thought it’s actually a nice thing to share. And now it’s become quite popular, at least by…when you count the downloads. So we thought while it is impossible to share really every detail and to give a comprehensive overview, we should at least share five themes, five algorithmic moments that will probably pop up at different stages during the day.

And then finally the third thing I’d like to do in the next fifteen minutes is to actually just walk you through the program, the plan for today and make sure that everybody’s comfortable and feels fine.

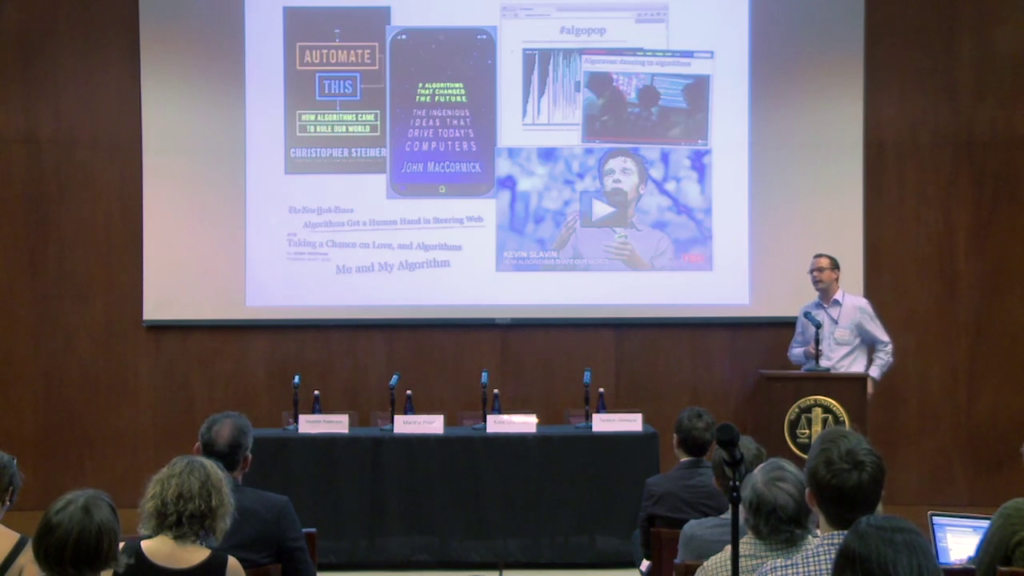

So how did this start? Actually all of us, Solon, Sophie, and many other fellows and researchers, not just at PRG, the Information Law Institute, but also at MCC, we’ve been studying computation, automation, and control in different forms for quite a long time. But it was only at the end of last summer, really, that we realized that there’s this new notion of the algorithm gaining currency, right. So at that point we noticed, well, there are trade books coming out, right, like Automate This: How Algorithms Came to Rule Our World by Christopher Steiner. There’s a book called Nine Algorithms That Changed the Future by John MacCormick. There’s hardly an edition of The New York Times, or of other newspapers, that doesn’t have the word “algorithm” in a headline. There are Tumblr blogs on the topic, like Algopop, where people just collect stuff that’s related to algorithms. Boing Boing has posts on stuff like algoraves, which is basically a very boring video of people dancing to electronic music, but it’s called an “algorave,” which is quite amazing. So why should this be called an algorave? And finally, maybe the ultimate harbinger of something becoming really hot, there’s a TED Talk: the one by Kevin Slavin, which some of you even suggested for the open reading list that we have on the web site and that Helen mentioned.

So, there seems to be this big buzz around algorithms. And of course that’s something that we as researchers are quite suspicious of, right? And so we felt increasingly uneasy about this. And the uneasiness came from a tension, which is the following. On the one hand, there are increasingly claims that algorithms govern, manage, control, select, sort, or otherwise shape our lives, as you can see in some of these titles here. But at the same time, it’s really difficult to pin down how they do it, what these algorithms are, how they work, and what the point actually is of focusing on algorithms when you could focus on so many other things.

And so this was the tension: on the one hand, the rather hyperbolic, or you could say cyberbolic, claims in these cases. And on the other hand, this uneasiness about, well, what is this thing, actually, and what work does this concept do?

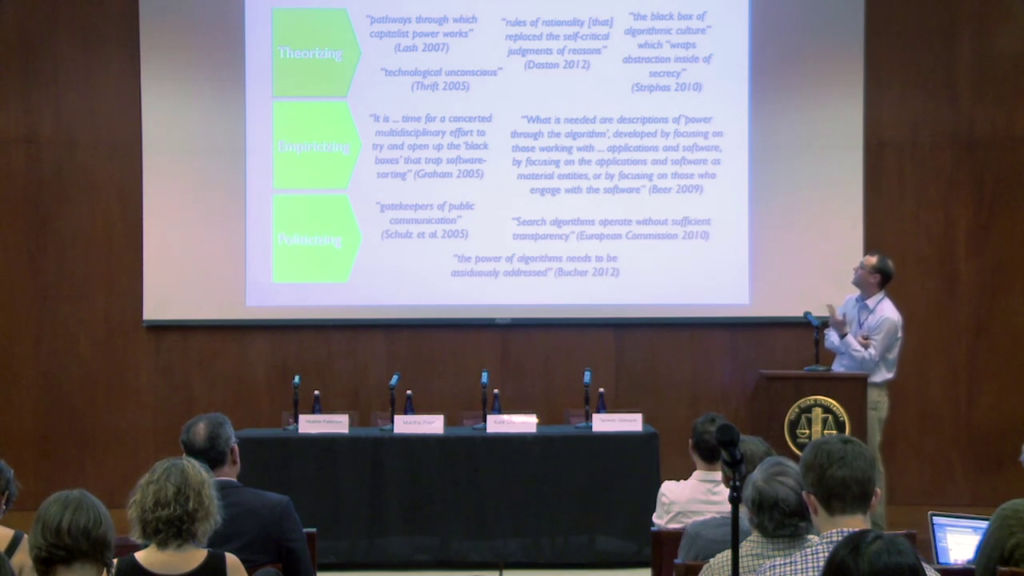

So then the next thing we did, which is the obvious thing to do when you do research in the social sciences, was to look at what other people had written about it at that point in time, which was last summer. And we thought a useful way to think about this is in terms of three strands.

So there’s a lot of people in sociology, for example, who started theorizing the phenomenon of the algorithm. Scott Lash, for instance, talked about algorithms as “pathways through which capitalist power works.” Grand claim.

Nigel Thrift has written about the “technological unconscious,” right. So all this stuff that is somehow hidden somewhere that does something, and we don’t quite know how it works but it is pretty important, apparently.

There’s Lorraine Daston, a historian of science, who’s talked about algorithms as “rules of rationality that replaced the self-critical judgments of reason.” So she takes a broad historical view to think about them and make sense of them.

And then there are scholars like Ted Striphas, who talked about “the black box of algorithmic culture,” which “wraps abstraction inside of secrecy.”

So then the next strand, and in many ways there’s a common dynamic here, is often a reaction to the first one: people start to ask, “Well, these are all grandiose claims. But how do these things work in practice?” That’s when they start empiricizing.

And then there are people like Stephen Graham, for instance, who said, “It is…time for a concerted multidisciplinary effort to try to open up the ‘black boxes’ that trap software-sorting.”

Then there’s an article that probably many of you know, a recent one by David Beer, which suggests, “What is needed are descriptions of ‘power through the algorithm,’ developed by focusing on those working with…applications and software, by focusing on the applications and software as material entities, or by focusing on those who engage with the software.”

And when you read this, it makes sense; it sounds like a good way to study algorithms. But then, on second thought, we wondered: what is this other than studying engineers, technology, and users? And what’s the point of focusing on algorithms there?

And then finally there’s a third strand, which is also quite common in these dynamics and often even detached from the other ones, a strand that has to do with politicizing. So people become concerned with algorithms as “gatekeepers of public communication.” The European Commission has been worried for a while about search algorithms operating without sufficient transparency, and there are calls for openness, disclosure, and so on. Or, more generally, there is a concern that “the power of algorithms needs to be assiduously addressed.”

So what we found interesting about these things is that in virtually all of these cases “algorithm” is used as what Ian Hacking once called an “elevator word.” It’s a word that you can use and it just takes you anywhere…you want, really. So, in many ways, algorithms here appear as a resource.

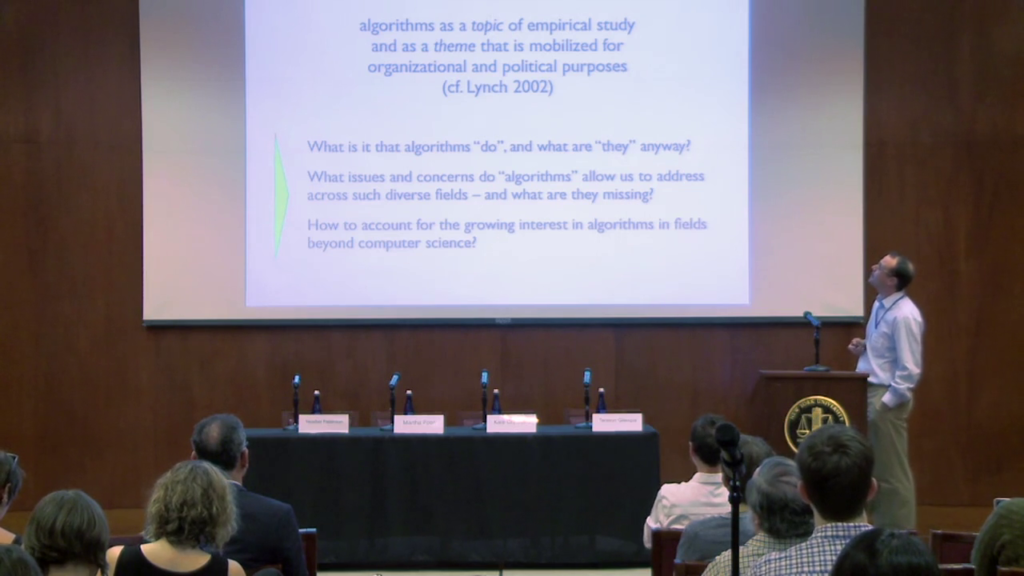

So we thought, well, what happens when we turn this into a topic, where we don’t make claims about how algorithms change our lives, the world, and so on? What happens when we turn this idea, this notion of an algorithm, into a topic, invite you all, and then have a great time for one or two days?

And so then the idea is to think about algorithms as a topic of empirical, or social scientific, study, and as a theme that is mobilized for organizational and political purposes. And at this point, you arrive at slightly different questions. You don’t necessarily ask anymore what an algorithm is, or what we need to do to understand one. Instead you ask, well, what is it that algorithms actually “do,” and what are “they,” anyway? You ask what issues and concerns “algorithms” allow us to address across such diverse fields. Which is quite amazing, because when you look at today’s program, we have papers on airport security, plagiarism detection, financial markets, and social media, I guess, right? So what is it that still makes this concept useful across these fields, even though it seems to be a bit of an elevator word?

And finally, how can we account for the growing interest in algorithms in fields beyond computer science? That’s something we started to discuss yesterday, I think in the exchange between Chris and the lady whose name I’ve unfortunately forgotten, who’s probably not here today. In short, the discussion already started yesterday.

So, just to get us in the mood we thought we’d just extract five very quick things from the provocation piece, and maybe sharpen your senses and also ask you to keep these in mind as we go through the day, through the presentations.

So the first theme that we struggled with was really to think about algorithms, the very idea of algorithms. And the example we’re going to use here, just for simplicity because everybody knows it, has to do with web search. It’s a bit lame [indistinct], maybe. But it’s just something that’s easy to explain.

So in the case of search there are often claims, as you all know, that the search algorithms rank and evaluate things and then determine relevance. So relevance is algorithmically developed and determined. Then the trouble starts when you think about how this actually happens, and topics come up that already surfaced yesterday. So what about the data, right? When you do a search, you don’t search the Web but an index of the Web. So there’s a huge database which is itself updated and evaluated all the time, a point that will also pop up in one of the responses today.

What about the material entities that are involved? What about stuff like data centers, which some of you, like Kate or Megan Finn, are studying? What about even the materialities involved when you use a computer, like a keyboard, a desk, the screen you sit in front of, right? What about the interface and its design? I mean, producing a top-ten list of links is just one way of doing this. In principle you could do it in many other ways, in many other colors, forms, arrangements. And finally, what about the user, right? When you do a search, you come up with a search query. You might not search just once but twice, or three times. And then what you do with the results also happens outside the algorithm. But still, when we talk about search there’s often this reflex to think about “the algorithm.”

And so we thought, well, what do we actually talk about when we talk about algorithms in these contexts? And what is it that we can understand by looking at these questions? So this is the weird thing: it’s easy to make a claim that involves the word “algorithm” in general, but the moment you try to be specific you kind of lose it. Which made us a bit nervous, and also caused some discomfort.

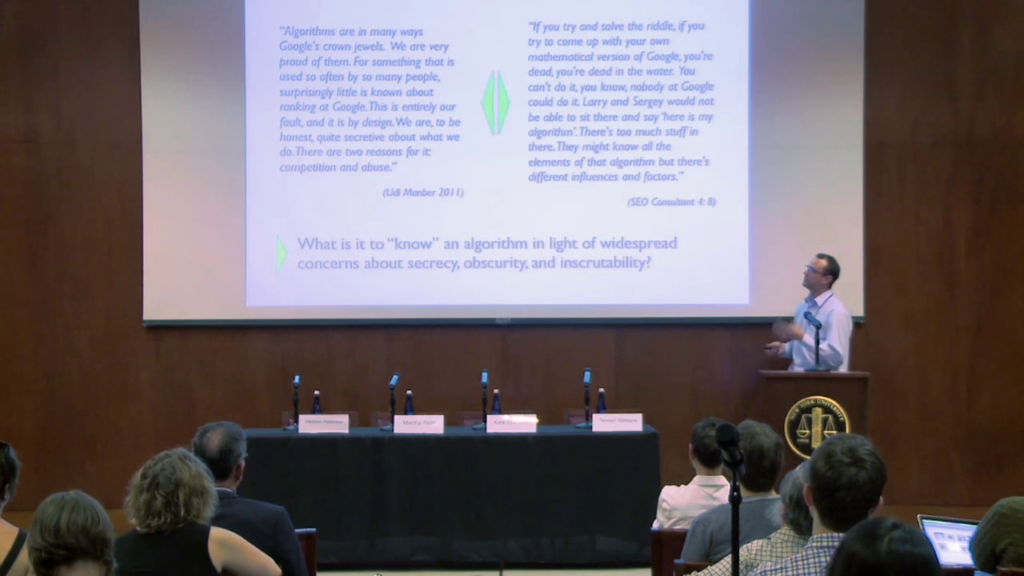

The second theme has to do with secrecy. That’s something that also comes up very quickly, right, when people talk about algorithms. Researchers especially are endlessly worried about trying to understand the inner workings of algorithms: how does this actually work? So they try to get access, try to talk to people, and try to have someone explain it to them. They turn to computer scientists, they turn to people who work at some of these companies. And to stay with the example of Google, what happens a lot of the time is this. This is a quote by Udi Manber. He’s a vice president of engineering at Google, and he writes in a blog post,

Algorithms are in many ways Google’s crown jewels. We are very proud of them. For something that is used so often by so many people, surprisingly little is known about ranking at Google. This is entirely our fault, and it is by design. We are, to be honest, quite secretive about what we do. There are two reasons for it: competition and abuse.

Introduction to Google Search Quality

So this is the common story you get, and most people know it. It needs to be secret because of competition and abuse, and so the algorithms are hidden. Which also suggests, actually, that there is something to learn.

Now, this is from fieldwork with the search engine optimization industry. And when you juxtapose what Udi Manber said with what a senior SEO consultant said, you kind of start getting into trouble because this guy said,

If you try to solve the riddle, if you try to come up with your own mathematical version of Google, you’re dead, you’re dead in the water. You can’t do it, you know, nobody at Google could do it. Larry and Sergey would not be able to sit there and say, “Here is my algorithm.” There’s too much stuff in there. They might know all the elements of that algorithm but there’s different influences and factors.

SEO Consultant 4; 8

So this then makes one even more nervous, right? On the one hand there’s widespread talk about secrecy, and we have to understand how these things work, and this might be a question of access. Some people discuss this as a question of expertise. This might be a question of knowing. But then it may turn out that the secret is that maybe there is no secret. Which then brings up all kinds of other interesting questions. That is, what work does this secrecy, this spectacular secrecy, do if there is not much to know? So what is it to “know” an algorithm in light of widespread concerns about secrecy, obscurity, and inscrutability?

A third theme, which I’m sure will also be discussed today, has to do with problems and solutions. And the reason is that, again, a lot of talk about algorithms is framed in this rhetoric. Algorithms are often conceived as technoscientific solutions to public problems. Here is an example, again staying with the Google search engine, from an explanation of how search works on Google’s corporate page. It says,

You want the answer, not trillions of web pages. Algorithms are computer programs that look for clues to give you back exactly what you want

I mean, already this phrase, that they give you back “exactly what you want,” is…you could write an article about that. Which is quite amazing. But what’s interesting here is that there’s a problem embodied in this description of the algorithm, right, which is an information overload problem, and that makes a lot of sense. But then again, when you start thinking about it, when was the last time you actually faced trillions of web pages? Well, you could say that you only face trillions of web pages because there is the search engine.

So, to what extent, you can ask, can algorithms “solve” problems, or are they maybe just performing them? And there are definitely a couple of people in the room who’ve tackled this specific issue. Evgeny Morozov has talked about solutionism, and Daniel Neyland actually has a project starting up which I think is about “can markets solve problems,” is that correct? So there are lots of relations, especially when you think about the market and the invisible hand, and the algorithm as the invisible hand. So there are lots of interesting parallels to think about.

Which might go into the direction of magic, to a certain extent.

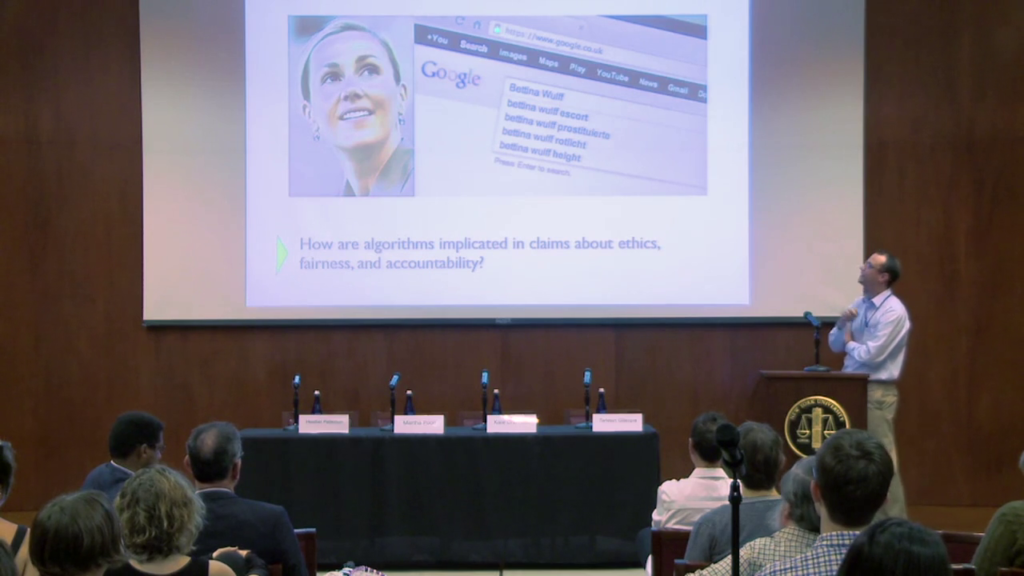

The fourth theme, of course, is a very important one: the theme of ethics, fairness, and accountability. There are a lot of considerations in the provocation piece; this is just a case we thought would be good to illustrate that. This is actually the ex-wife of the ex-president of Germany. And she actually sued Google Germany because whenever you typed in her name, which is Bettina Wulff, the autocomplete function would suggest “escort”; “Prostituierte,” which is the German word for prostitute; “Rotlicht,” which is “red light,” as in red light district; and “height,” which is how tall you are. And she was not very happy with... [audience laughs] I’d rather explain it.

And she was not very happy with it, so she actually sued Google. That case is still pending, but there’s another, very similar lawsuit, decided by the federal court in Germany just three days ago, where a company did exactly the same thing, because whenever you typed in the name of the company, the words “Scientology” and “fraud” would come up. And in this case the federal court in Germany actually decided that Google needs to sort this out. This is defamation, at least in the German context. This is libelous. And so they need to do something about it.

Which then brings up interesting questions. How are algorithms implicated in claims about ethics, fairness, and accountability? You could ask, well, what do algorithms have to do with this case? And actually what they have to do with it is that Google Germany said, “Well, it’s not... We didn’t do anything, right. It’s just the autocomplete algorithm. It’s what users search for. It’s the algorithm.” So this is a nice example of an algorithm becoming an object in the deferral of accountability. So you could say algorithms can become accountabilia, right. And we thought that thinking along those lines might be quite fruitful, and it opens up lots of other questions about what counts as an ethical or not-so-ethical algorithm.

And finally, the theme of the conference, Governing Algorithms: who, which, or what is actually governing what, which, or whom? And is “algorithmic governance” a useful project to engage in? As in the example before, is it the German federal court governing the algorithm by mandating that Google sort out its autocomplete results? Is it the engineers at Google governing the algorithm by doing this kind of evaluation? Or is it the “user experience,” this mysterious user experience, that is mobilized to optimize an algorithm and govern it? Or is it the other way around, that algorithms govern us, for example with a malware warning? This is a malware warning from two years ago which was on Yochai Benkler’s home page. He had a virus, and so when you wanted to go to his home page there was this virus warning. Or is it something like autocomplete, actually, that governs us? These are all interesting, incredibly difficult questions.

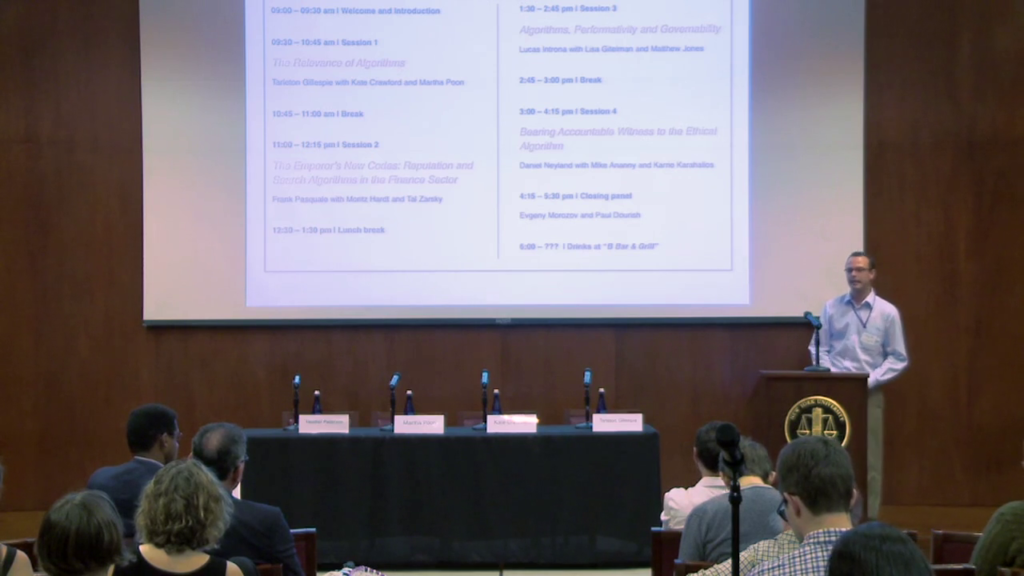

And the best thing to do when you face all these incredibly difficult questions is to ask incredibly smart people to answer them. And that’s what we did for today. So this is the plan for today.

As you can see, the day is organized around four sessions which are highlighted in orange on this slide. We asked four very brave scholars to reflect on some of the themes mentioned in the provocation piece and come up with their own take on some of the issues, some of the confusing aspects of algorithms. And fortunately they’ve done so and they’ve written papers which you can find on the web site under Resources->Discussion Papers.

In addition, we have asked, for each of these sessions, two even braver scholars to respond to these main papers. And you can also find their responses on the web site and they will also talk about this today.

And finally, we have something we call the closing panel, with Evgeny Morozov and Paul Dourish. And these two guys will listen very carefully all day to everything; they will write down everything you say. And then at the end of the day, they will basically reflect on themes that have come up, themes for future research, stuff they found weird, strange, interesting, or worth sharing. Then we’ll have a quick discussion at the end.

Another important thing to mention is that we will have lots of breaks in between the sessions. Many of them are coffee breaks; there will be coffee. And I think breakfast will still be outside for a bit longer, and then coffee and tea all day. There will be a lunch break where you’re on your own; we have prepared a list of places around here where you can go. Just make sure you’re back by 1:30, because we’ll get going again quickly.

And then finally, on the culinary side, if anyone is still up for anything tonight, we thought we could have drinks at the B Bar. We can tell you later today where it is and how this works.

One important thing, one last thing I forgot to mention, is that of course the format of the sessions follows the paper format. So the main presenters will speak for twenty minutes about their paper, making their point. Then the discussants will each have ten minutes to respond. And then the moderators will open it up. There are two ways to participate in the discussion, same as yesterday. One way is to send a tweet with the hashtag #govalgo and share your question, comment, or concern there. Dove, who’s standing in the back, will monitor these tweets and try to feed them into the discussion. The other way is to just line up behind one of the microphones in the room and make your comment here. The advantage is that you’re not restricted to 140 characters if you do it right here in the room, so you might take advantage of that. It’s also more personal because we can see you and know who you are, and we would like to ask you to introduce yourself.

With that I think that’s it. Have I forgotten anything? Solon, Sophie? Helen? No. Okay. So then, I think we can start with the intellectual fireworks we’re going to burn today.