Imagine your privacy assistant is a computer program that’s running on your smartphone or your smartwatch. Your privacy assistant listens for privacy policies that are being broadcast over a digital stream. We are building standard formats for these privacy policies so that all sensors will speak the same language that your personal privacy assistant will be able to understand.


We have to be aware that when you create magic or occult things, when they go wrong they become horror. Because we create technologies to soothe our cultural and social anxieties, in a way. We create these things because we’re worried about security, we’re worried about climate change, we’re worried about threat of terrorism. Whatever it is. And these devices provide a kind of stopgap for helping us feel safe or protected or whatever.

Social media companies have an unparalleled amount of influence over our modern communications. […] These companies also play a huge role in shaping our global outlook on morality and what constitutes it. So the ways in which we perceive different imagery, different speech, is being increasingly defined by the regulations that these platforms put upon us [in] our daily activities on them.

I wonder with all these varying levels of needs that we have as users, and as we live more and more of our lives digitally and on social media, what would it look like to design a semi-private space in a public network?

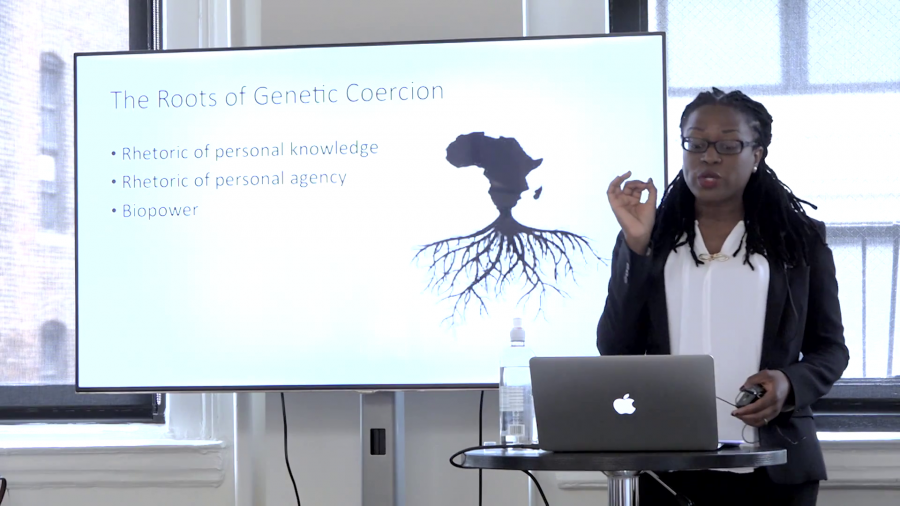

We’re trying to say it’s on you, it’s your responsibility, figure this out, download this, understand end-to-end encryption, when it’s a shared problem and it’s a communal problem.

What does it mean to be private when you’re in a place where you have no right to privacy but are ironically deprived of the thing that makes your privacy go away?

I think that privacy is something that we can think of in terms of a civil right, as individuals. […] That’s a civil rights issue. But I think there’s also a way to think about it in terms of a social issue that’s larger than simply the individual.

How do we take this right that you have to your data and put it back in your hands, and give you control over it? And how do we do this not just from a technological perspective but how do we do it from a human perspective?