One very interesting addition to the public space is how we are conditioning and defining it with regard to potential attacks. And it's changing the landscape radically. The very first knee-jerk reaction was concrete blocks in front of many institutions. Now they're trying to design these concrete blocks so they seem like part of the landscape, but their presence and robustness are still so violent that it's hard to hide the intention.

After a long while of not thinking about it, we all seem to be thinking about citizenship quite a lot these days. In the words of Hannah Arendt, citizenship is the right to have rights. All of your rights essentially descend from your citizenship, because only countries will protect those rights.

First of all, let’s recognize that the privacy of transaction records is not a brand new issue at all. We have many decades of experience, and I think it helps to understand that we have two types of consumer transaction records that we’re talking about.

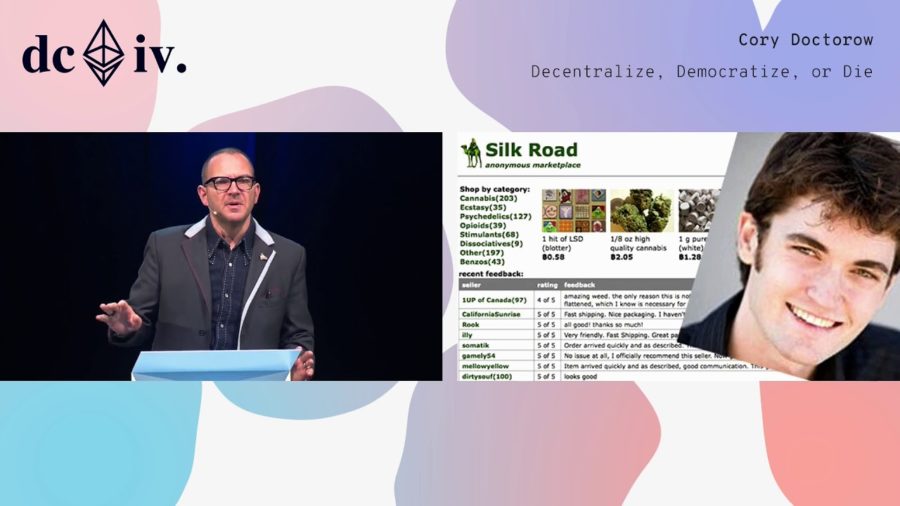

You might be more comfortable thinking about deploying math and code as your tactic, but I want to talk to you about the full suite of tactics that we use to effect change in the world. And this is a framework that we owe to this guy Lawrence Lessig.

As we've moved into increasingly digital spaces, such as online worlds, we're moving away from traditional physical spaces, where you have public streets, public squares, and places where people can go to protest, and into areas, if you could call them that, that are entirely controlled by corporations.

What we're trying to do is to see over the horizon, looking at essentially a five-year time frame, and identify what the cybersecurity landscape will look like in that period.

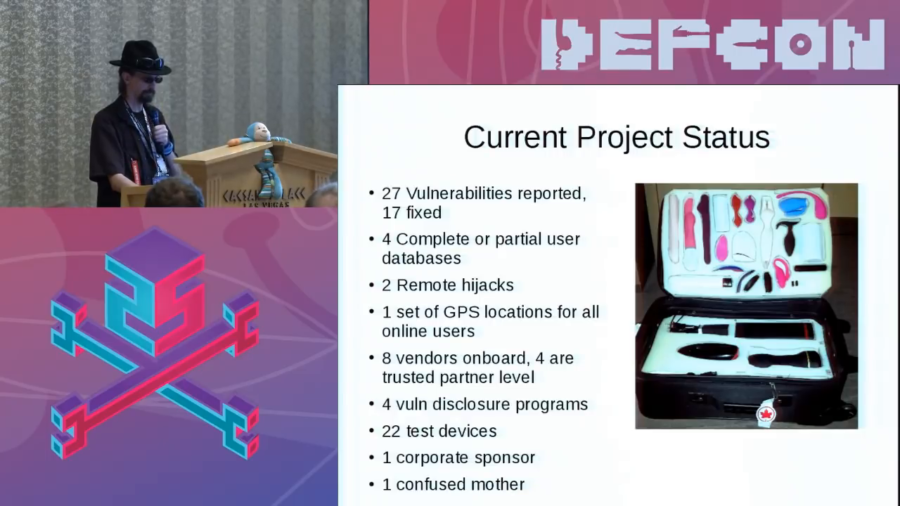

A large number of IoT research firms…yeah, they don't want to look at this, because there are stigmas around sex. We have a very weird thing in North America about sex. We'll watch all the violence we want on television, but you can't see two people have sex.

The big concerns that I have about artificial intelligence are really not about the Singularity, which, frankly, computer scientists say is… if it's possible at all, it's hundreds of years away. I'm actually much more interested in the effects of AI that we are seeing now.

You may have heard people come up to you and say like, “Hey, you’re young. That makes you a digital native.” Something about being born after the millennium or born after 1995 or whatever, that makes you sort of mystically tuned in to what the Internet is for, and anything that you do on the Internet must be what the Internet is actually for. And I’m here to tell you that you’re not a digital native. That you’re just someone who uses computers, and you’re no better and no worse than the rest of us at using computers.

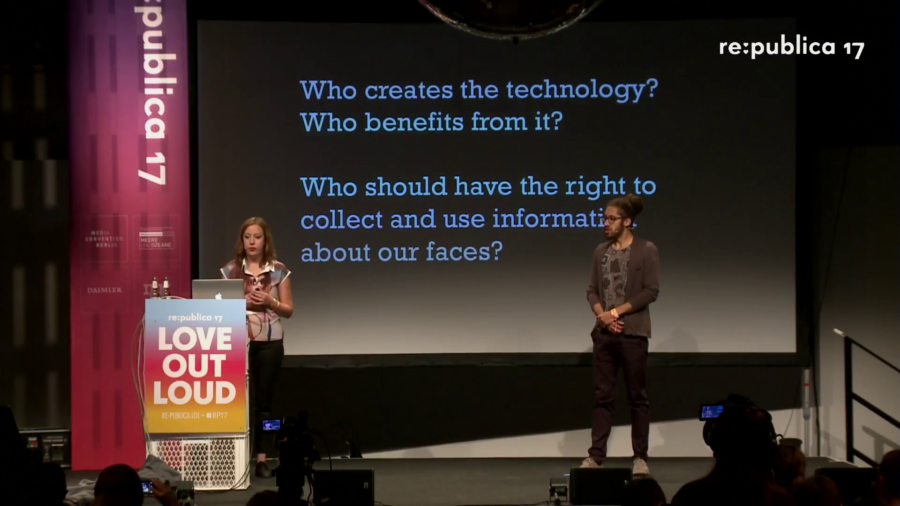

We have to ask who’s creating this technology and who benefits from it. Who should have the right to collect and use information about our faces and our bodies? What are the mechanisms of control? We have government control on the one hand, capitalism on the other hand, and this murky grey zone between who’s building the technology, who’s capturing, and who’s benefiting from it.