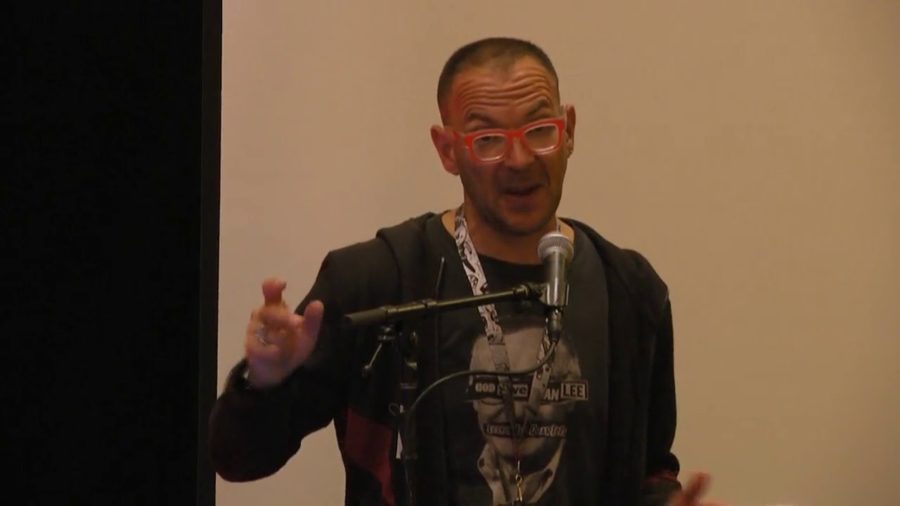

Cory Doctorow: Hi, I’m Cory Doctorow. I write science fiction novels for young people and I work for the Electronic Frontier Foundation. It’s a pleasure to be back here at DEF CON Kids at r00tz. And the talk today, it’s called “You are Not a Digital Native.”

So, you may have heard people come up to you and say like, “Hey, you’re young. That makes you a digital native.” Something about being born after the millennium or born after 1995 or whatever, that makes you sort of mystically tuned in to what the Internet is for, and anything that you do on the Internet must be what the Internet is actually for. And I’m here to tell you that you’re not a digital native. That you’re just someone who uses computers, and you’re no better and no worse than the rest of us at using computers. You make some good decisions and you make some bad decisions. But there’s one respect in which your use of computers is different from everyone else’s. And it’s that you are going to have to put up with the consequences of those uses of computers for a lot longer than the rest of us because we’ll all be dead.

So there’s an amazing researcher named danah boyd. She studies how young people use the Internet and computer networks. She’s been doing it for about twenty years. She was the first anthropologist to work with a big tech company. She worked at Intel, at Google, at a company called Friendster that’s like, no longer extant. She worked at Facebook. She worked at Twitter. And one of the things that she sees over and over again is that young people care a whole lot about their privacy, they just don’t know who they should be private from, right.

They spend a lot of time worried about being private from other kids they go to school with. From their teachers. From their principals. But they don’t spend a lot of time wondering about how private they need to be from like, the government in twenty years looking backwards to figure out who their friends are and what they were doing when they were in school and whether or not they’re the wrong kind of person. They don’t spend a lot of time worrying about what a future employer might find out about them. But they spend a lot of time worrying about what other people might find out about them, and they go to enormous lengths to protect their privacy.

So danah, she documents kids who are hardcore Facebook users. And what they do is every time they get up from Facebook, every time they leave their computer, they resign from Facebook.

And Facebook lets you resign and then keeps your account open in the background for up to six weeks in case you change your mind. And if you come back and you reactivate your account, you get all your friends back, you get all your posts back, and everyone can see you again. But while you’re resigned no one can see you on Facebook, no one can comment on you, and no one can read your stuff. So this is a way of them controlling their data, and that’s what privacy is. Privacy isn’t about no one ever knowing your business. Privacy’s about you deciding who gets to know your business. And so young people really do care about their privacy, but sometimes they make bad choices.

Now, that matters. Because privacy is a really big part of how we progress as a society. You know, there are people alive today who can remember when things that today are considered very good and important were at one point considered illegal and even something you could go to jail for.

So one of the great heroes of computer science, a guy named Alan Turing, the guy who invented modern cryptography and helped invent the modern computer, he went to jail and then was given a kind of bioweapon, hormone injections, until he killed himself because he was gay. So that was in living memory. There are people alive today who remember when that happened to Alan Turing. Today if you’re gay you can be in the armed forces, you can get married, you can run for office, you can serve all over the world.

The way that we got from “it was illegal to be gay, and even if you were a war hero they would hound you to death,” to “it’s totally cool to be gay and you can marry the people you love,” is by people having privacy. And that privacy allowed them to choose the time and manner in which they talked to the people around them about being gay. They could say, “You know, I think that you and I are ready to have this conversation,” and when they had that conversation, they could enlist people to their side. And one conversation at a time, they made this progress that allowed our society to come forward.

So unless you think that like now in 2017 we’ve solved all of our social problems and we’re not going to have to make any changes after this, then you have to think that like, at some point in your life people that you love are going to come forward and say to you, “There’s a secret that I’ve kept from you, something really important to me. And I want you to help me make that not a secret anymore. I want you to help me change the world so that I can be my true self.” And without a private realm in which that conversation can take place, those people will never be able to see the progress that they need. And that means that people that you love, people that are important to you, those people will go to their graves with a secret in their heart that caused them great sorrow because they could never talk to you about it.

So, we talk about whether or not kids are capable of making good decisions or bad decisions, whether effectively kids are dumb or smart. And I think the right way to think about this is not whether kids are dumb or smart, but what kids know and what they don’t know. Because kids can be super duper smart. Kids can be amazing reasoners. But kids don’t have a lot of context. They haven’t been around long enough to learn a bunch of the stuff that goes on in the world and use that as fuel for their reasoning. That’s why you hear about children who are amazing chess players or physicists or mathematicians. Because the rules that you need to know to be an amazing chess player you can absorb in an hour. And then if you have a first-rate intellect you can take those rules and turn them into amazing accomplishments.

But you’ve never heard of a kid who is a prodigy of history, or law. Because it just takes years and years to read the books you need to know to become that kind of amazing historian or legal scholar. And so when kids make choices about privacy, it’s not that they don’t value their privacy, it’s that they lack the context to make the right choices about privacy sometimes. And over time they get that context and they apply their reasoning, and then they become better and better at privacy.

But there are a couple of things that work against that privacy, against our ability to learn the context of privacy. The first is that our private decisions are separated by a lot of time and space from their consequences. So imagine trying to get better at kicking a football or hitting a baseball, and every time someone throws the ball you swing the bat. But before you see where the ball goes you close your eyes, you go home. And a year later someone tells you whether or not the ball connected with the bat and where it went. You would never become a better batter because the feedback loop is broken.

Well, when you make a privacy disclosure, it might be months or years or decades before that privacy disclosure comes back to bite you in the butt. And by that time it’s too late to learn lessons from it.

And if that wasn’t bad enough, there are a whole lot of people who wish that you wouldn’t have privacy intuition. Who hope that you won’t be good at making good privacy choices. And those people often are the ones who are talking about digital natives, who want you to believe that whatever dumb privacy choice you made last week you made because you have the mystical knowledge of how the Internet should be used and not because you made a dumb mistake.

And those people, they tend to say things like, “Privacy is not a norm in the 21st century. If you look around you’ll see that young people don’t really care about their privacy.” What they actually mean is, “I would be a lot richer if you didn’t care about your privacy. My surveillance platform would be a lot more effective if you didn’t take any countermeasures to protect your data.” So it’s really hard to get good at privacy, partly because it’s intrinsically hard, and partly because we have a lot of people who use self-serving BS to try and confuse you about whether or not privacy is good or bad.

Ultimately, privacy is a team sport. Even if you take on board lots of good privacy choices, even if you use private messengers, even if you use private email tools like GPG and PGP, even if you use full-disk encryption, the people that you communicate with, unless they’re also using good privacy tools, all of the data that you share with them, it’s just hanging around out there with no encryption and no protection.

And so you have to bring those people on board. And that’s where the fact that young people do care about privacy even if they don’t know exactly who they need to be private from, that’s where this comes in. Because young people’s natural desire to have a space in which they can conduct their round without their parents, without their teachers, without fellow kids looking in on them, that’s an opportunity for you to enlist them into being more private, into having better control over who can see their data.

So I’m going to leave you with a recommendation for where to look for those tools. Electronic Frontier Foundation, for whom I work, we have a thing called the Surveillance Self-Defense Kit, ssd.eff.org. And it’s broken up into playlists depending on what you’re worried about. Say you’re a journalist in a war zone, or maybe you’re a kid in high school, we’ve got different versions of the self-defense kit for you.

It’s in eleven languages, so you can get your friends... If you come from another country, you can get friends in other countries to come along with you. And that way you can all play the privacy team sport, and you can use your youthful curiosity to become better consumers of privacy and better practitioners of privacy. And then the Internet that you inherit, the one you’ll still be living with when we’re long dead, that Internet will be a better Internet for you. Thank you very much.

Oh hey. So, I just found out we’ve got free t‑shirts from the Electronic Frontier Foundation. They have these awesome designs. They have all the best things from the Internet. There’s a cat. There’s books. And there’s CCTV cameras. Which are all the things the Internet has. If anyone has a question, the thing I will redeem the question for in addition to an answer is a t‑shirt. So, anyone got a question? Yes. Oh, they’re smalls. But you may have a friend who could wear it. Or you could wear it as a hat. Go.

[Audience 1 question inaudible]

Cory Doctorow: How legal is it to decrypt Bluetooth packets? Well you know, r00tz has this amazing code of ethics, that you can see over here, that’s about what you should and shouldn’t do. And one of those things is you should be messing with your own data but not other people’s data. If you’re messing with your own data, if you’re looking at a tool that you own, like a Bluetooth card that you own, and analyzing it, then in general it’s pretty legal. But let me tell you, there are a couple of ways in which this can get you into trouble. And the reason I’m mentioning it is not to scare you but to let you know that EFF has your back.

So there’s a law called the Computer Fraud and Abuse Act of 1986, the CFAA. And under CFAA… Let me tell you a story of how CFAA came to exist. You ever see a movie called WarGames with Matthew Broderick? So after WarGames came out, people went like, “Oh my god, teenagers are going to start World War III. Congress has to do something.” So Congress passed this unbelievably stupid law called the Computer Fraud and Abuse Act in 1986. And what they said is if you use the computer in a way that you don’t have authorization for, it’s a felony, we can put you in jail. And so that has now been interpreted to say if you violate terms of service, which is the authorization you have to use some web site, you are exceeding your authorization.

But like, every one of us violates terms of service all day long. If you’ve ever read terms of service, what they say is, “By being dumb enough to be my customer, you agree that I’m allowed to come over to your house and punch your grandmother, wear your underwear, make long-distance calls, and eat all the food in your fridge, right?” And every one of us violates those rules all day long. And when prosecutors decide they don’t like you, they can invoke the Computer Fraud and Abuse Act.

Now, we have defended many clients who’ve been prosecuted under the Computer Fraud and Abuse Act. And we will continue to do so. We are agitating for a law called Aaron’s Law. It’s named after a kid named Aaron Swartz who was a good friend of ours. He was one of the founders of Reddit. Was an amazing kid. Helped invent RSS when he was 12 years old. And in 2013, after being charged with thirteen felonies and facing a thirty-five-year prison sentence for violating the terms of service on MIT’s network and downloading academic articles, he killed himself. So we’ve been fighting in his name to try and reform this law ever since.

We’re also involved in fighting to reform a law called the Digital Millennium Copyright Act, or DMCA. And the DMCA has this section called Section 1201. And it says that if there’s a system that protects a copyrighted work, that tampering with that system is also a felony that can put you in jail for five years and hit you with a $500,000 fine for a first offense. And one year and two weeks ago, we filed a lawsuit against the US government to get rid of that law, too. Because it’s being used to stop people from repairing their own cars, figuring out how their computers work, jailbreaking their phones, doing all kinds of normal things that you would expect to be able to do with your property.

Now, in general what you do with your Bluetooth device? That’s your own business. But laws like the Computer Fraud and Abuse Act and the Digital Millennium Copyright Act, they get in the way of that. And that’s one of the reasons EFF is so involved in trying to kill them. Thanks for your question. Here, take some textiles.

Yeah, go ahead. And I’m going to come down so I can hear you.

[Audience 2 question inaudible]

So the question was what’s my favorite science fiction subject to write about? And so I’m one of a small but growing number of science fiction writers who write what I call “techno-realism?” So, usually in science fiction for like fifty years, maybe even longer, whenever a science fiction writer wanted to do something in the plot, they’d just stick a computer in and say, “Oh, computers are able to do this thing, or computers aren’t able to do this thing,” to make the plot go forward, without any actual reference to what computers could do.

And you know, I think that like sixty years ago when computers were the size of buildings and like eleven people in the world had ever used one, that was like an easier kind of magic trick to play without anyone figuring out where you hid the card? But today I think a lot of people know how computers work. And so I like to write stories in which the actual capabilities and constraints of computers are the things that the plot turns on. And so you know, I’ve got books like Little Brother that are ten years old that people still read as contemporary futuristic science fiction, even though I wrote it twelve years ago, right. And that’s because the things that computers can and can’t do, theoretically those aren’t going to change. Maybe like, how we use them will change, but the underlying computer science theory is pretty sound. So that’s how I like to work in my science fiction.

Alright one over here, yes.

[Audience 3 question inaudible]

So the question was how do you know when it’s safe to share your information, when it’s a good idea? Well you know, that’s really hard. Like, I make bad decisions about that all the time. There’s been—like, many times I’ve gone and made a choice to give some data to a web site or whatever, and then I find out that that web site— Like Yahoo!, right? Yahoo! was compromised for five years before they told anyone that they leaked everyone’s data. And so it’s really hard to know for sure when to do that. I think that some of it is just trying to remember what you thought might happen when you made a disclosure, and then comparing it to what happened.

And some of it I think, though, is that we need companies to be more careful with our data. And one of the ways to do that is to hold them to account when they make bad choices. So right now, a company that breaches all your data, generally they don’t owe you any money. Like, Home Depot breached 80 million customers’ credit card records. And they had to give each of them about thirty-three cents and a six-month gift certificate for credit monitoring services. So like, if those companies actually had to pay what it would cost their customers over that breach, then I think those companies would be a lot more careful with their data. So some of it is us getting better at making the choice, but some of it is the companies having to pay the cost for being part of that stupid choice, right. Thank you for your excellent question.

Alright, we’ve got two more t‑shirts.

[Audience 4 question inaudible]

So, the question was how can other people get involved in fighting all these fights? So if you’re on a university campus, Electronic Frontier Foundation has this network of campus organizations. If you email info@eff.org and say that you’d like to get your campus involved, that’s a thing you can do.

Joining EFF’s mailing list is really useful. We will send you targeted notices when your lawmaker needs a phone call from their voters saying “get out there and make a good vote.”

Obviously like, you know, writing EFF a check is a nice thing to do. We’re a member-supported nonprofit. We get our money mostly from small-money donors like you.

Making good privacy choices yourself, and then helping other people around you make those choices. Having this conversation. You know like, people say, “Oh, my mom will never figure out how to use this stuff.” And you know, first of all it gives moms a super bad rap. Because people design technology for the convenience of bosses. Like, if a technology can’t be used easily by like someone’s boss? That person gets in trouble. If a technology can’t be used easily by someone’s mom, they’re like, “Oh, my mom’s dumb,” right? And so like, moms have to work like a hundred times harder than bosses. And so they’re like ninjas compared to bosses. So bosses suck at using technology, not moms.

But there are people in your life, the kind of people who might come to DEF CON, who haven’t really thought this stuff through. If you had this conversation with two people in the next month, you would triple the number of people in your circle who care about this stuff. Thank you. Have a t‑shirt.

Who’s got the last question? It has to be really good, but no pressure.

[Audience 5 question inaudible]

So the question was he just got here, can I repeat the entire speech all over again? I can. But it’s just the hash of the speech. So it’s really short. It’s like 0x117973798777. So you can just compare that to the recording and make sure that it hasn’t been tampered with. Alright.

[Audience 6 question inaudible]

So the question was are any of my stories going to be made into movies. I’ve had a ton of stuff optioned. I’ve got a thing under option at Paramount right now, Little Brother. There’s a Bollywood studio that has an option on my book For the Win. But most stuff that gets optioned doesn’t get made. That’s like a pull process, not a push process. The author doesn’t get to show up and say, “Now you make my movie!” It’s more like someone comes along and says, “Maybe we’ll make your movie,” and you’re like, “Okay, give me some money,” and then you wait. So I’m just waiting. Thank you.

Alright, thank you all very much. Have a great r00tz.