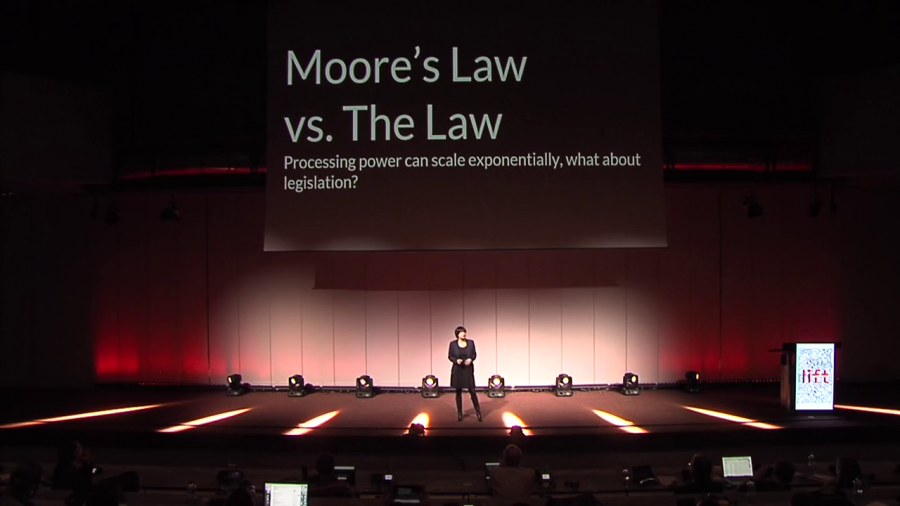

When we talk about technologies such as AI, and policy, one of the main problems is that technological advancement is fast, and policy and democracy are very, very slow processes. And that could potentially be a very big problem if we think that AI could be dangerous.


I came into doing work in an antidisciplinary space more or less by accident. Back when I was applying to university, the schools would send out these books talking about the different programs they offered and what each program was like. And for some reason I never read any of those books. I just applied to engineering school because I thought, “Oh, you know, I like to make things, and engineering school’s where you make things.”

The scientific method was perfected in the crucible of natural science, and physics in particular. And an old professor of mine once told me that a good theoretical physicist is intrinsically a lazy person. And so there are these heuristics: ignoring superfluous detail, simplifying the problem to its barest essentials, maybe even making a caricature out of it, solving that simpler problem. If you can’t solve that simpler problem, solve an even simpler problem. This actually works in physics, because the universe is intrinsically a lazy place.

What does it mean to be antidisciplinary? To me, it means struggle. Sometimes, working in interdisciplinary fields, I’ve felt like I tried really hard, working and working and working on a project, and I wasn’t seeing any difference. Sometimes people would look at me and be like, “What are you even doing?” So, to me antidisciplinarity means not only not working in one specific field, but also drawing from elsewhere to imagine something new.

Rammellzee […] considered graffiti to be viruses. And what he liked to do was to connect his production to military language. He was saying that the graffiti artists were in a kind of symbolic campaign against the standardization of the alphabet.

We have to be aware that when you create magic or occult things, when they go wrong they become horror. Because we create technologies to soothe our cultural and social anxieties, in a way. We create these things because we’re worried about security, we’re worried about climate change, we’re worried about the threat of terrorism. Whatever it is. And these devices provide a kind of stopgap for helping us feel safe or protected or whatever.