I think the question I’m trying to formulate is, how in this world of increasing optimization where the algorithms will be accurate… They’ll increasingly be accurate. But their application could lead to discrimination. How do we stop that?


I’m interested in data and discrimination, in the things that have come to make us uniquely who we are: how we look, where we are from, our personal and demographic identities, what languages we speak. These things are effectively incomprehensible to machines. What is generally celebrated as human diversity and experience is transformed by machine reading into something absurd, something that marks us as different.

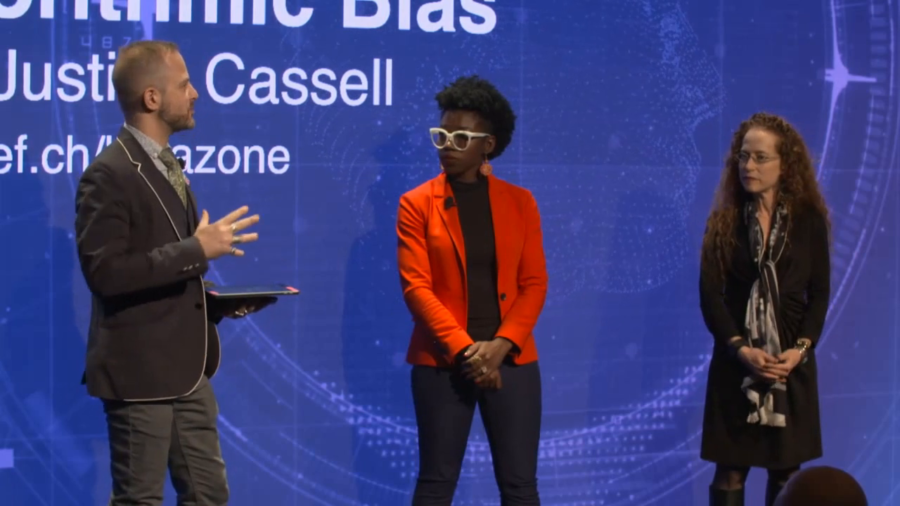

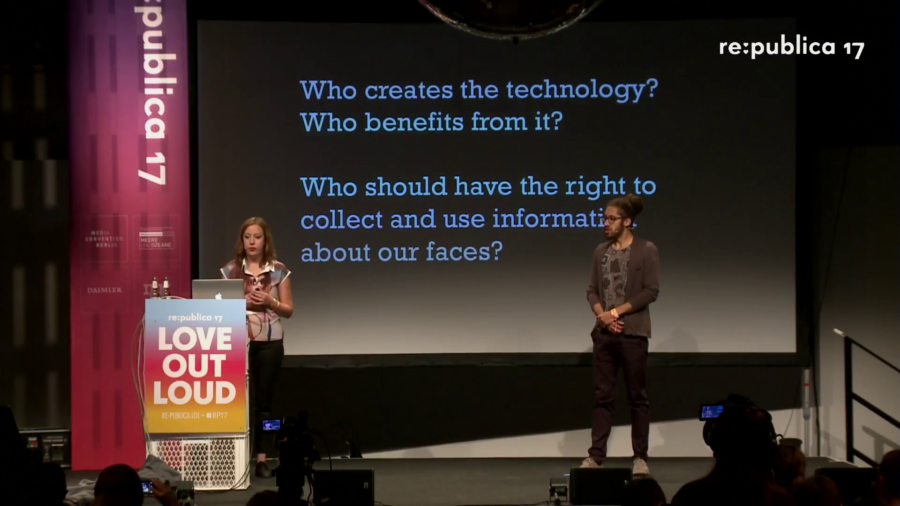

We have to ask who’s creating this technology and who benefits from it. Who should have the right to collect and use information about our faces and our bodies? What are the mechanisms of control? We have government control on the one hand, capitalism on the other hand, and this murky grey zone between who’s building the technology, who’s capturing, and who’s benefiting from it.

[The] question of what happens when blackness enters the frame can kind of neatly encapsulate the ways I’ve been thinking and trying to talk about surveillance for the last few years.

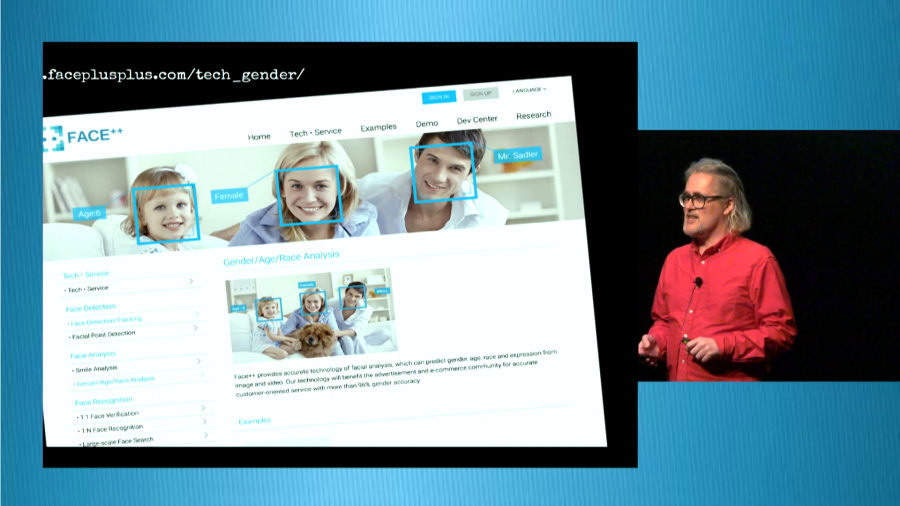

The face is a constant flow of facial expressions. We react and emote to external stimuli all the time. And it is exactly this flow of expressions that is the observable window to our inner self: our emotions, our intentions, attitudes, moods. Why is this important? Because we can use it in a very wide variety of applications.