Jillian C. York: We all know that hate speech is a huge problem online, but we don’t necessarily agree on what “hate speech” is. The same goes for harassment, and the same goes for violent extremism. A lot of the topics that we’re trying to “tackle” or deal with on the Internet, we’re not actually defining ahead of time. And so what we’ve ended up with is a system in which companies and governments alike are working, sometimes separately and sometimes together, to rid the Internet of these topics and these discussions without actually delving into what they are.

So, I work on a project called onlinecensorship.org, and what we do is look at the ways in which social media companies restrict speech. Now, you could argue that not all of this is censorship, and I might agree. We look at everything from the censorship of nudity, as you may have seen last year, which I firmly believe is censorship, to takedowns around harassment, hate speech, and violent extremism, some of which straddle the line between incitement and speech that may be lawful in certain jurisdictions.

So I looked at the different definitions of hate speech that the major social media platforms give. Twitter, for example, says that you may not promote violence against or directly attack or threaten other people on the basis of race, ethnicity, etc., along with all of the other categories you might imagine. They’re very similar to the categories we saw before.

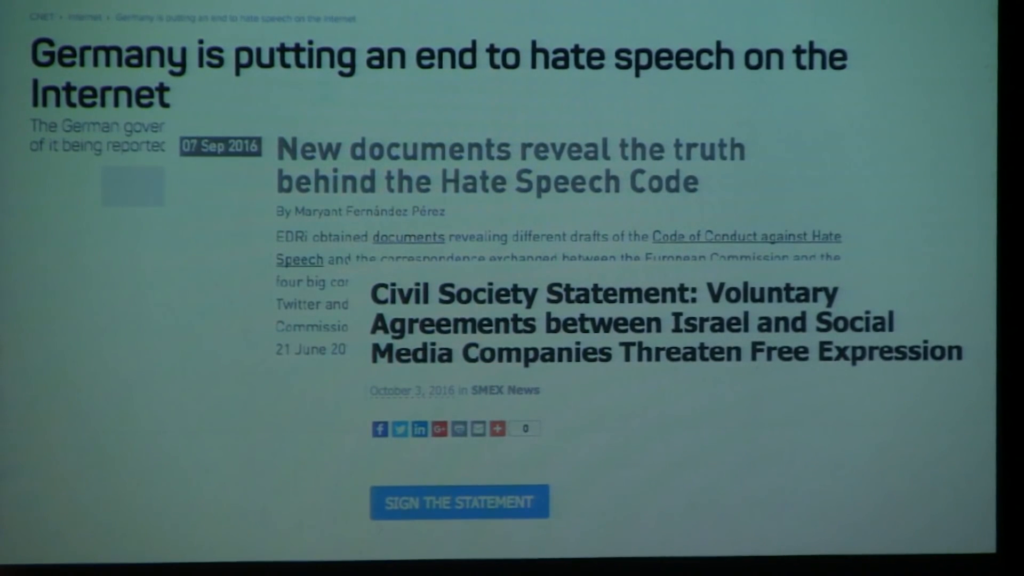

So we know that these companies, the spaces online where most of our speech takes place, are already committed, or at least they say so, to taking down or regulating that kind of content. But governments haven’t necessarily agreed, and I think Kirstin spoke about this a bit earlier today. Governments have felt that what the companies [recording skips] not enough. And so at the end of last year, we saw the German government form an agreement with the companies to take down hate speech, getting them to remove it within twenty-four hours of its being reported.

So essentially, how this works is that you already have these flagging mechanisms on Facebook. When I report content, there are a number of different categories I can report it under, and it’s actually quite granular when it comes to hate speech; I just looked.

But then the German government wanted them to go a step further and make sure that they’re doing it within twenty-four hours. We’re talking about 1.6 billion users, and content moderators numbering…some number. We don’t actually know how many people these companies employ to review that content, and hopefully we’ll have more transparency about that soon, fingers crossed.

But essentially, they’re asking for a twenty-four-hour turnaround on content from all 1.6 billion users. This is almost impossible. And it’s not just Germany. We saw the European Commission, of course, which I think was discussed earlier, as well as, more recently, a potential agreement between the Israeli government and Facebook to deal with incitement. Now, that one’s less clear. There’s some denial as to whether or not there is in fact an agreement, and it remains to be seen what kind of content will be taken down. So far, however, I would note that in the two weeks since this went public, we’ve seen two editors of popular Palestinian publications censored by Facebook for reasons unknown. Facebook apologized and said it was a mistake, but nevertheless, whenever additional scrutiny is put on a certain category of people or a certain geographic location, you’re bound to see more erroneous takedowns, as I like to call them.

And so then what do we do about this? Because if the governments feel that the companies aren’t doing enough, and we as a society have no input into that, then essentially what we’re seeing is this quick, reactionary attempt to, like I said before, get rid of all of the content without actually assessing what we’re looking at. What is it that we’re talking about? And I think that’s the first problem: we haven’t agreed on a definition of hate speech. My vision of it might be different from yours, and as we’ve seen, different governments have different visions. Now, I’m not saying that we shouldn’t tackle hate speech. We should absolutely tackle hateful speech. But if we want a free and open Internet where we all have equal access and equal opportunity, then the additional fragmentation that we’re seeing through these different privatized agreements is not the way forward.

And so first we need to find a definition that actually works for us, before we even talk about what to do about the speech itself. And then, of course, there are the voluntary backdoor agreements between companies and governments, governments that we’ve elected democratically in all of the examples I’ve given so far. We’ve also seen some…less-democratic governments try to strike deals with companies, and that’s another precedent that we might be setting with these agreements. But regardless, they deny us input. And by “us” I mean citizens: citizens of our countries and of the Internet, and “citizens” of these platforms, insofar as you could make that argument.

But we have no input into this. We’re not part of these conversations. Not only have civil society groups and NGOs been excluded from the table where these agreements are being decided, but the average user has no actual say in how these spaces are governed. Now, I’m not going to talk about nudity this year; I know I’ve talked about it the past two years. But I will throw it in as an example, because I think it’s really interesting that companies have just decided for us that this is unacceptable. Their reasons might be valid. It might be really difficult for them to tell the difference between pornography and nudity. There are all sorts of technical reasons why that might be a really hard question, and I respect that. But they’ve already made the decision to go beyond the law there, so how do we know they’re not doing that in this case, too?

And then I would also go a step further and say that censorship alone doesn’t actually solve the problem of hateful speech. It doesn’t. And I’ll give you a couple examples.

I was in Budapest a couple of years ago, just walking around in the summer. This was in the middle of the debate in the United States around the Confederate flag. For those who might not be as familiar, the Confederate flag was of course the flag of the separatist South, and it has now become largely known as a…well, at least where I’m from, it’s known as a hateful, racist symbol. In a lot of the South, it’s a symbol of pride in the Confederacy. But nevertheless, it’s pretty well known for what it is.

But when I see it internationally in another context, my reaction is oh, maybe they’re just you know, trying for some Americana. And so I saw this military shop in Budapest and I saw the Confederate flag, and I thought oh, well maybe it’s just like an Army Navy store. So I posted it on Twitter and I asked some friends in the country, and they were like, “No no no, that’s a Nazi symbol.”

I wouldn’t have known that. What happens when you censor some symbols is that others crop up in their place, and we’re starting to see that on Twitter and Facebook now, with secret codes to avoid censorship. I’m not going to get into the actual definitions, but if you look at this article, essentially you’ve got far-right communities online that are using innocent words like “Google,” “Skittle,” and “Skype” as substitutes for racist words.

We also see this in China, where people use substitutions to get around censorship, in more positive contexts. And if companies are building in algorithmic methods to filter or censor speech, it’s only a matter of time before people just come up with new substitutes. That’s how people have always gotten around censorship, and I don’t see why that won’t continue.

Lastly, I would also say that censorship isn’t the solution to hateful speech. It might be a solution, or a component of the solution; I don’t know. That’s something for democratic processes to decide. But it doesn’t solve the problem. To solve the problem, we have to get at its root causes. And this is why I find the title of this talk really challenging, because I’m not an expert on how we deal with hateful communities and hateful speech and all of the right-wing groups that are cropping up in my country and yours.

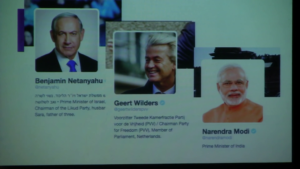

But I do know that we should be looking somewhere else, and I think we’re asking some of the wrong questions about the origins of this. I’ll just flip through these quickly to note that all of these are people who are verified on Twitter and who engage in hateful speech on a regular basis. These are our leaders. These are the people we should be looking at when we ask how to get rid of hateful speech. It’s not necessarily “let’s just strike it from the record”; it’s “let’s get to the root cause,” and then we can talk about what we do with it in our online communities. So thank you, and thank you for having me.