When my son was two years old, one day I showed him the character “E” on a piece of paper. The next day he would point to the different Es in the street, including this huge upside-down E painted on the football field.

I was amazed that he could learn and generalize from just one example. When my little daughter saw this Picasso painting, she screamed, “Face!” right away, even though she had never seen such a distorted face before.

Of course, being my children, they are naturally really smart, but how is that possible? Computers today aren’t this intelligent. Computers can only do things that they are trained for. For example, after the Boston Marathon bombing, human FBI agents had to come in to watch hours of surveillance tape to identify the bombers. Computers cannot do that because they don’t even know who or what to look for.

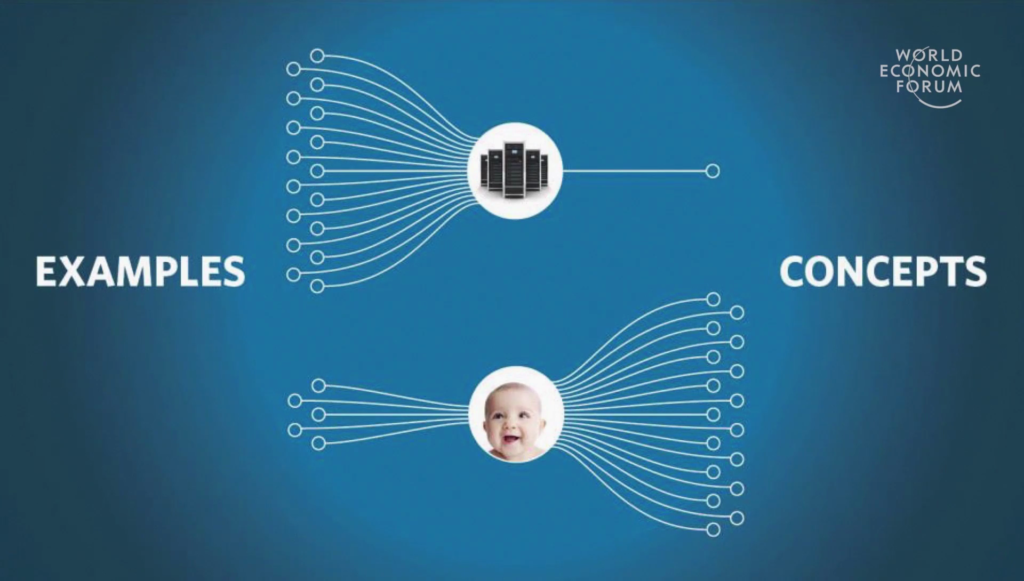

We can train computers to recognize objects by giving them millions of examples with the correct answers. A human baby, on the other hand, learns to recognize many concepts and objects all by itself, simply by interacting with a few examples in the real world.

My research at Carnegie-Mellon involves looking inside the brain to study what is going on at the levels of individual brain cells and circuits when the brain is seeing and learning to recognize objects. We want to use this knowledge to make computers see and learn like humans.

For humans, seeing is a creative process. There is a distinction between what our eyes take in, which are fragments of the world, and what we perceive. We rely heavily on our experience and knowledge to make up the image that we see in our mind.

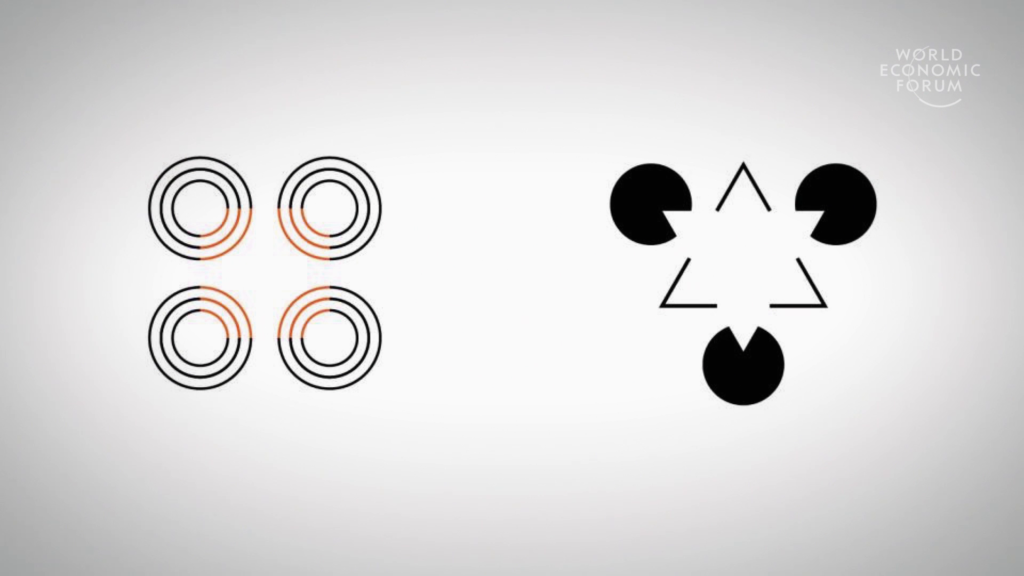

These pictures illustrate what I mean. On the left you see a red translucent surface, but really there’s no red surface. Only fragments of the black rings have been turned red. On the right you see a white triangle, but in reality it’s not there. It is an illusion.

What we see in our mind is our interpretation of the world. The brain fills in a lot of missing details to make up the most probable mental image that can explain what comes into our eyes. We see with our imagination, and we create the image that we perceive in our mind.

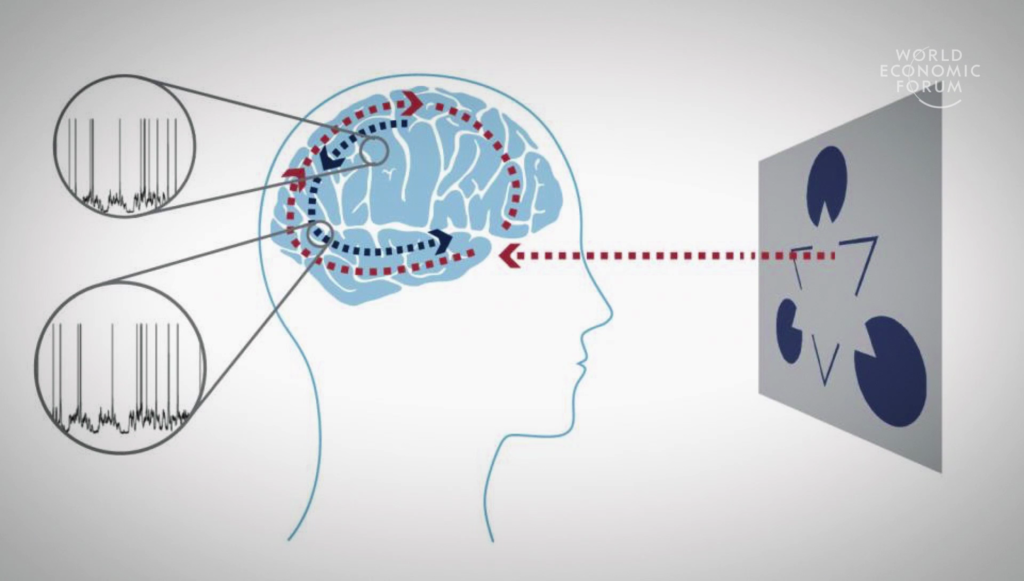

Creating this image in our brain involves the interactions of many levels of brain circuits in the visual cortex, the part of the brain that is responsible for processing visual information.

During perception, information flows up and down across the different levels to integrate global and local information. We have observed that at the high level, neurons can see the white triangle but only fuzzily.

Neurons at the lower level can see more clearly, but initially they only see a fragmented view of the world. But after a brief moment, they start to represent the white triangle as well. We believe that the brain created this image as a way to check whether its interpretation of the world is correct.

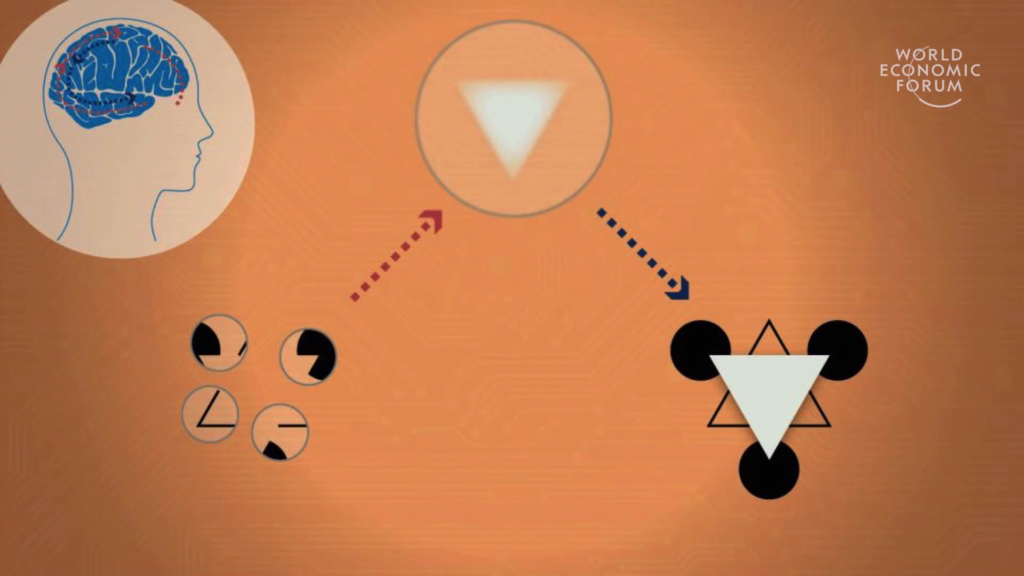

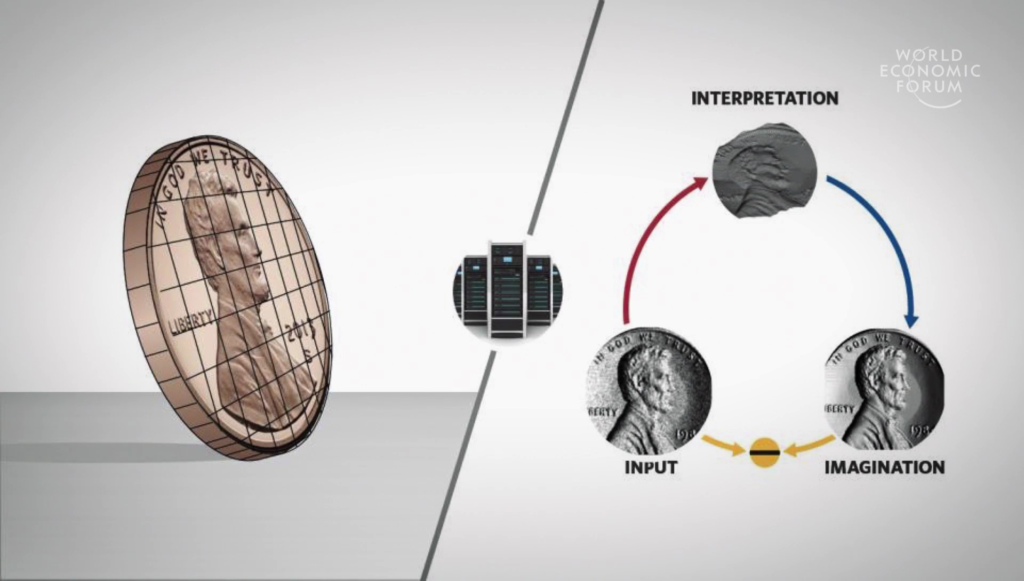

This is the result of a computer program we wrote based on the same principles. Given an image, the program has to interpret its 3D structure. For each interpretation, it can imagine what it expects to see. And if the imagined image matches the input image, that is, if it explains the image, the program knows it got the right answer.
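The interpret, imagine, and compare loop described above can be sketched with a toy example. This is only an illustration under stated assumptions, not the program from the talk: the “scene” is a hypothetical one-dimensional bar with an unknown position and length, and the names `render`, `mismatch`, and `interpret` are made up for this sketch.

```python
from itertools import product

WIDTH = 12  # number of pixels in the toy 1D "image" (assumed for illustration)


def render(start, length):
    """Imagine the image that a bar at `start` with `length` pixels would produce."""
    return [1 if start <= i < start + length else 0 for i in range(WIDTH)]


def mismatch(imagined, observed):
    """Count the pixels where the imagined image disagrees with the input."""
    return sum(a != b for a, b in zip(imagined, observed))


def interpret(observed):
    """Try every hypothesis and keep the one whose imagined image fits best."""
    hypotheses = [
        (start, length)
        for start, length in product(range(WIDTH), range(1, WIDTH + 1))
        if start + length <= WIDTH
    ]
    best = min(hypotheses, key=lambda h: mismatch(render(*h), observed))
    return best, mismatch(render(*best), observed)


# The input shows only fragments of a bar: one pixel in the middle is hidden.
observed = render(2, 6)
observed[4] = 0

best, error = interpret(observed)
print(best, error)
print(render(*best))
```

In this toy case the best-matching hypothesis is the complete bar, so the imagined image fills in the hidden pixel, loosely analogous to the white triangle that is perceived but not actually present.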

So when the brain is not interpreting and perceiving the world using this process, it can use the same circuit to imagine what an object might look like under different situations. In this way, it can actually generate a huge amount of data based on a few examples to train itself.
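As a rough illustration of generating many training examples from a few, the sketch below takes a single hand-drawn letter E and “imagines” it mirrored and rotated. The grid, the choice of transformations, and the function names are assumptions made for this example; it does not model the brain circuit discussed in the talk.

```python
# One example of the letter E, drawn on a small grid (assumed for illustration).
E = [
    "####",
    "#...",
    "###.",
    "#...",
    "####",
]


def flip_horizontal(grid):
    """Mirror the pattern left to right."""
    return [row[::-1] for row in grid]


def rotate_clockwise(grid):
    """Rotate the pattern by 90 degrees clockwise."""
    return ["".join(column) for column in zip(*grid[::-1])]


def imagine(grid):
    """Return the distinct variants reachable by rotations and mirror flips."""
    variants = set()
    current = grid
    for _ in range(4):
        variants.add(tuple(current))
        variants.add(tuple(flip_horizontal(current)))
        current = rotate_clockwise(current)
    return variants


examples = imagine(E)
for variant in sorted(examples):
    print("\n".join(variant))
    print()
```

Each variant could then be used as an extra training example with the same label, so that a learner shown one E also gets to see an E turned around 180 degrees, like the one on the football field.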

So our natural impulse to daydream, to imagine the future, and to do creative things, and our innate need for artistic expression and art-making, could actually all be byproducts of the same program and process running constantly in the brain. So these activities could be key to the development of our abilities to learn from a few examples and to recognize objects that we have never seen before. So we want to give this capacity of creativity and imagination to computers so that they can learn automatically like a baby.

As part of the recently launched, large-scale “Apollo Project of the Brain,” we at Carnegie-Mellon are studying the actual neural circuits that enable our visual systems to see and to imagine. And we want to put this to work in computers.

So we hope this work will not only help us to understand the brain better, but also to make more flexible and intelligent robots. And the question I want to leave with you is, can you imagine what machines could do if they had the power of imagination? Thank you.

Further Reference

Tai Sing Lee home page at Carnegie-Mellon University

2016 Annual Meeting of the New Champions at the World Economic Forum site