Ravi Shroff: So, here at Data & Society, my fellowship project is to understand the development and implementation of predictive models for decision-making in city and state government. So specifically I’m interested in applying and developing statistical and computational methods to improve decision-making in police departments, in the courts, and in child welfare agencies.

Now, the questions that I work on generally have a large technical component. But I want to mention that other aspects or policy concerns, ethical concerns, legal and practical constraints, are just as challenging to deal with. So in this Databite I’m going to briefly describe two examples, one from criminal justice and one from child welfare, along with some questions that’ve been inspired by my interactions with people at Data & Society over the past year.

So before I jump in I just want to talk about why an empirical approach to questions in these areas is hard. So first of all, there are often complicated ethical issues at play. So tensions between fairness and effectiveness. Tensions between parents rights and a child’s best interests. Racially discriminatory practices.

Moreover, tackling these issues generally involves extensive domain knowledge, in particular to understand the intricate processes by which data is generated. And so in practice this means that I generally collaborate with domain experts. The data that’s available is often observational, and simultaneously highly sensitive and of poor quality. And so this can make evaluating solutions particularly challenging. And in the context of working with city and state agencies, there are often external pressures like request from the Mayor’s office, and practical constraints that can make implementation difficult.

So I work with a variety of city, state, and nonprofit agencies. Here are some of them. And most of them are headquartered in or around New York City.

So I’m going jump into the first example, which is pretrial detention decisions made by judges. So in the US, shortly after arrest suspects are usually arraigned in court, where a prosecutor reads a list of charges against them. And so at arraignment, judges have to decide which defendants awaiting trial are going to be released (or RoR’d—released on their own recognizance) or which are subject to monetary bail.

Now in practice, if bail is set for defendants they often await trial in jail because they can’t afford bail, or end up paying hefty fees to bail bondsmen. But judges on the other hand are legally obligated to secure a defendant’s appearance at trial. So judges’ pretrial release decisions have to simultaneously balance the burden of bail on the defendant with the risk that he or she fails to appear for trial.

Now, two other things I just want to quickly mention is that there are other conditions of bail besides money bail. But in the jurisdiction that I consider, money bail and RoR are the two most common outcomes. And the other the other thing is that in many jurisdictions judges are legally obligated to consider public safety risk of releasing a defendant pretrial. But in the jurisdiction that I’m going to focus on judges are legally obligated to only consider the likelihood that a defendant fails to appear.

So, judges can be inconsistent. They’re humans. They get hungry and tired. They get upset when their favorite football team loses a game. And there’s research that in fact suggests that judges make harsher decisions in those circumstances. And judges are also like us. They can be biased. They can be biased implicitly or explicitly. And also, when a judge looks at a defendant and hears what the defense counsel has to say and what the prosecutor says, they take all these factors into account and then in their head they make some decision, and then we see that decision. So a judge’s head is a black box. It’s opaque.

And I should mention that the private sector has also attempted to aid judges’ decision-making by producing tools, but these have their own issues. And so I want to read an excerpt from an op-ed that appeared in The New York Times yesterday by Rebecca Wexler, who is fellow here it at Data & Society who also gave a Databite talk last week. And she writes,

The root of the problem is that automated criminal justice technologies are largely privately owned and sold for profit. The developers tend to view their technologies as trade secrets. As a result, they often refuse to disclose details about how their tools work, even to criminal defendants and their attorneys, even under a protective order, even in the controlled context of a criminal proceeding or parole hearing.

Rebecca Wexler, When a Computer Program Keeps You in Jail [originally “How Computers are Harming Criminal Justice”]

So this raises the question, can we design a, consistent transparent rule for releasing (nonviolent, misdemeanor) pretrial defendants? I should also mention that some work is going on that’s funded by foundations in this area.

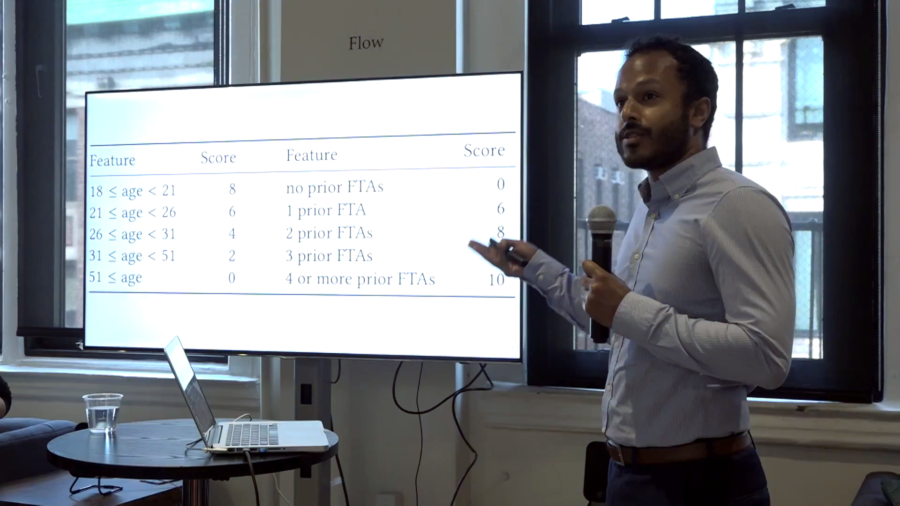

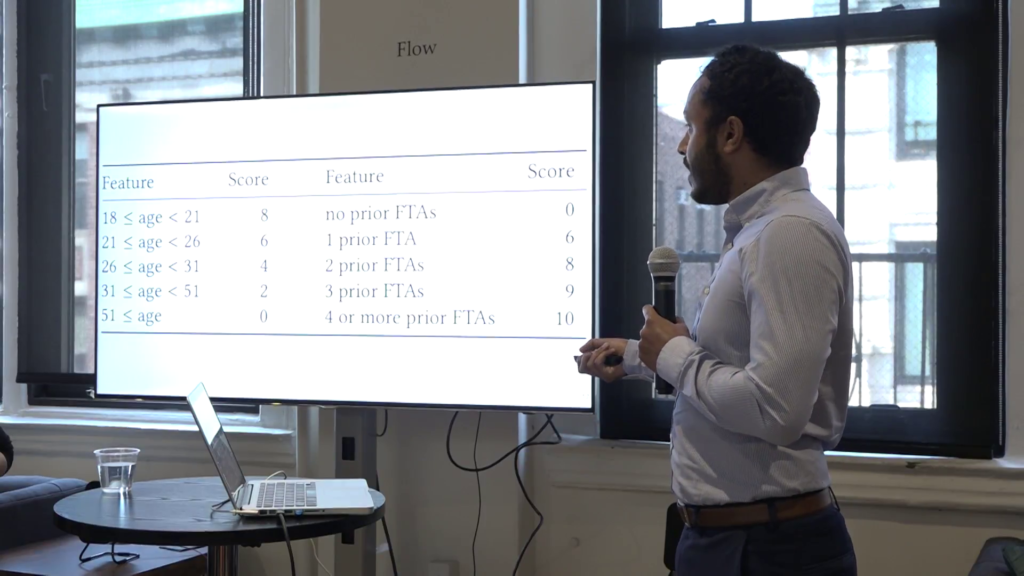

And we can. And this is the rule that my collaborators and I came up with. It’s a simple two-item checklist. It just takes into account two attributes of a defendant: the defendant’s age, and the defendant’s prior history of failing to appear for court.

So the way this could work is suppose you have some threshold, let’s say 10. Then you take a defendant, maybe the defendant’s 50 years old and has one prior failure to appear. So the defendant’s score would be 2 for their age, and 6 for their prior history of failing to appear. The total would be 8. That’s less than the threshold of 10, so they would be recommended to be released. And if it exceeded 10, the recommendation would be that you set bail.

And what we find is that if you follow this rule with a threshold of 10, we estimate that you would set bail for half as many defendants without increasing the proportion that failed to appear in court. And that’s relative to current judge practice. Moreover, and this is I think maybe the more surprising aspect, this rule performs compared to more complicated machine learning approaches. Which begs the question, if a super-simple checklist which only uses two attributes of a defendant can perform the same as something much much more complicated, well why not use the simple approach?

So, in 2014 the Attorney General at the time, Eric Holder, referred to these risk assessment tools in a speech he gave, where he said, “Equal justice can only mean individualized justice, with charges, convictions, and sentences befitting the conduct of each defendant and the particular crime he or she commits.”

So this raises another question, which is how can you balance this idea of individualized justice with consistency? So think about the checklist, for example. It’s certainly consistent, right? If you’re in a particular age bucket and you have a particular number of previous failures to appear, it’s going to recommend either that you’re released or that bail is set for you. And it’s certainly not individualized. Because beyond age and your prior history of failure to appear, it doesn’t take into account anything else.

So the usual answer is you say well, I’m going to use a checklist or a statistical rule to aid a judge’s decision, not to replace it. So a judge would see the recommendation and then the judge could choose to follow it or to do something else. And so in practice, balancing individualized justice and consistency is tough, but I think a good first step is to focus on transparency.

Now another question is well, why not just release all (nonviolent, misdemeanor) defendants before trial? So this is sort of thinking outside the box, right. I mean, this checklist is sort of a statistically designed procedure to optimize who you release and who you set bail for. But let’s ask a different question, which is why don’t you just let everybody out? And if you did, what would happen?

And so we estimate that in fact if you were to release all nonviolent, misdemeanor defendants in our jurisdiction you would see a modest increase in the percentage that failed to appear, but not very much. It would go from 13% to 18%. And so I feel like this is a question as a society that we need to ask. Which is you know, are the burdens on all those people who are not RoR’d, is that outweighed by having a slightly lower failure to appear rate?

Okay. So I’m going to go to my second example, which has to do with children’s services in New York City. So, New York City’s Administration for Children’s Services handles about 55,000 investigations a year of abuse or neglect, and is responsible for roughly 9,000 children, and it’s a big agency. It has an annual budget of about $3 billion.

So ACS recently had an initiative to use the data that they collect on children and families to improve the level of service that they provide. And a part of this initiative which I was involved with is to use data to understand which children currently in an investigation of abuse or neglect are likely to be involved in another investigation of abuse or neglect within six months’ time.

And I should mention that this is happening at a sort of highly charged time for ACS. There were a number of high-profile fatalities in the last year of children who were under investigation by ACS. And that could add urgency to the desire to use data and analytic tools to improve services for children.

So I want to raise a question, which is what is the intervention? And what I mean by this is suppose that you could accurately or reasonably accurately predict the likelihood, the probability, that a child is going to be involved in another investigation within six months. Suppose you see it’s like 99%. Well, what would you do?

So, you could remove the child from the parent. You could also say, “These are challenging cases. I’m going to prioritize their review. I’m going to have managers or more experienced caseworkers deal with these cases.”

Or you could say, instead of allocating sanctions to a family like removing a child, “I’m going to allocate benefits like preventive services. I’m going to flood the family with services to try and reduce that likelihood.”

And I’m going to mention that ACS has been very clear in saying that they will not use these algorithms to make removal decisions but instead to prioritize case review and to match children and families to the services that they need.

Another question is how can ACS actually insure that these analytic methods are going to be used appropriately? So, children’s services is in the process of building an external ethics advisory board comprised of racially and professionally diverse stakeholders, who are supposed to oversee the way that predictive techniques are being used to inform decision-making.

And danah boyd, founder of Data & Society, has this saying that I like where she says, “Ethics is a process.” And I feel like that sort of suggests that sometimes a solution to these problems is to create an institution which is in charge of supervising that process.

And so finally I just want to pose the question of, how will caseworkers actually use the output of these predictive algorithms? You could also ask the same question in the pretrial release context. How will judges actually use the output of risk assessment tools? So, will they generally follow the recommendations of the tool, or will they make different decisions in a manner which has unexpected consequences? It’s an active area of research. And I’ll say that a former Data & Society fellow has also investigated this specific question in the context of criminal justice.

So I just want to wrap up by saying that understanding this interaction between human decisionmakers and algorithmic recommendations is really essential to ensure that they function as intended. So thank you very much, and thanks to everybody at Data & Society, and my collaborators:

Bail: Jongbin Jung, Connor Concannon, Sharad Goel, Daniel G. Goldstein

ACS: Diane Depanfilis, Teresa De Candia, Allon Yaroni

[presentation slide]