Golan Levin: Our first speaker, Patricio Gonzalez Vivo, is an artist and engineer from Buenos Aires who makes digital art from repurposed e‑waste as an alchemical practice. His work is technical sophistication meets playful tinkering. He’s the author of The Book of Shaders, glslViewer, and the PixelSpirit Deck. Patricio’s work has been shown at Eyeo, Resonate, GROW, FRAMED, FILE, Espacio Fundación Telefónica, and FASE. He’s taught at Parsons The New School, NYU ITP, and the School for Poetic Computation. Patricio Gonzalez Vivo.

Patricio Gonzalez Vivo: Thank you Golan. I’m turning my camera on and…hope you can see me now?

Levin: Yes.

Vivo: Perfect. Thank you. So I’m going to share the screen next. Here it goes.

So I want to start by saying how grateful I am to be here. Thank you Golan and everybody that made this possible. For me it has been a great couple of months. Having the opportunity to really work on glslViewer and some important features and also to meditate on the tool together with other toolmakers. So it has been a joy to experience.

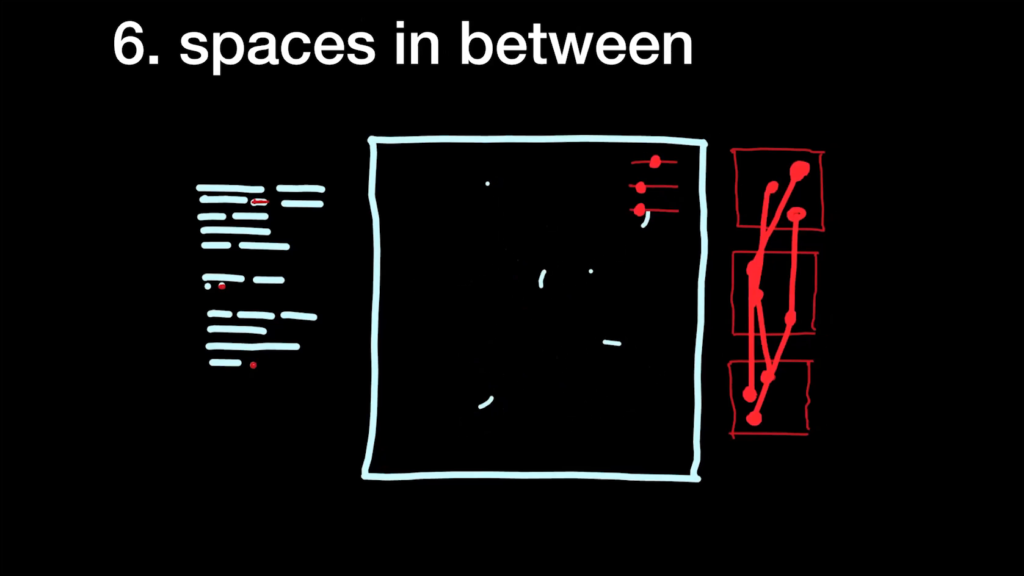

Today I kind of want to share a path that I went through from taking a personal tool which is…like a personal toy tool to the point that it’s a professional tool that I can use in my everyday work for whatever kind of work I do. So with that in mind I want to start by kind of defining some patterns that I have found during working with code as a medium. And that pattern, which we will refer to as The Pattern, I think the starting point will be…here.

It will be making the algorithm. And hopefully everybody can relate to this and take this to their own personal experience. It doesn’t have to be one-to-one but I hope everybody can find it resonant. So, you start by coding your program. It could be a visual experience or a generative piece of work. It could be a particle system. And you kind of develop this intimate connection to your code, right. You know how it behaves. It came out of your mind. You based it on some paper that you found.

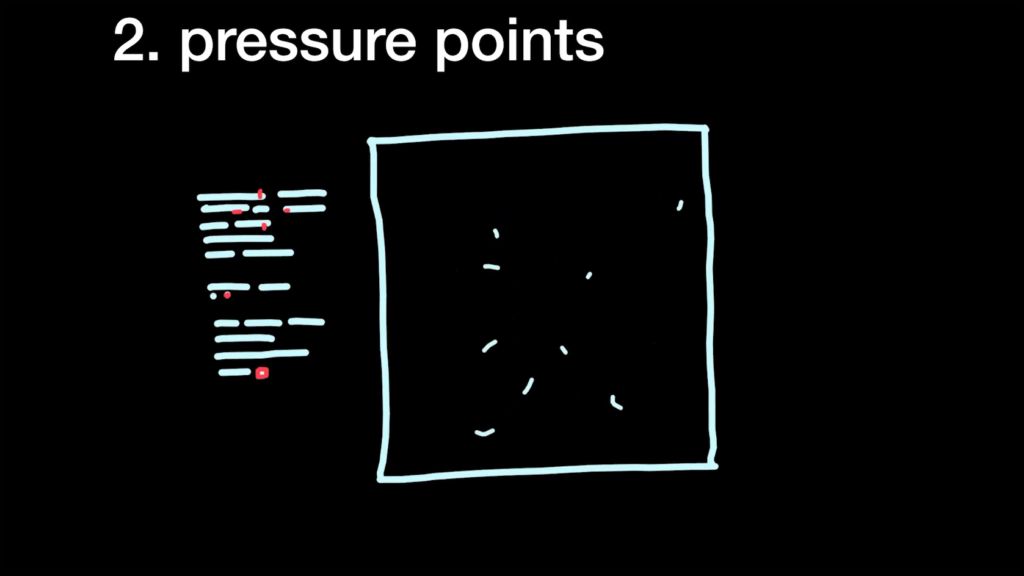

And after creating it, you discover and you have a sense of what are the pressure points. What are those variables here and there that when you change the values, it really kind of makes something different. It could be something interesting, something that looks different, or something that really speaks to you. And at this point our work becomes a little like tinkering and like poking at your code. And kind of seeing what happens. So particularly in this step I really enjoy kind of how you’re driven by curiosity.

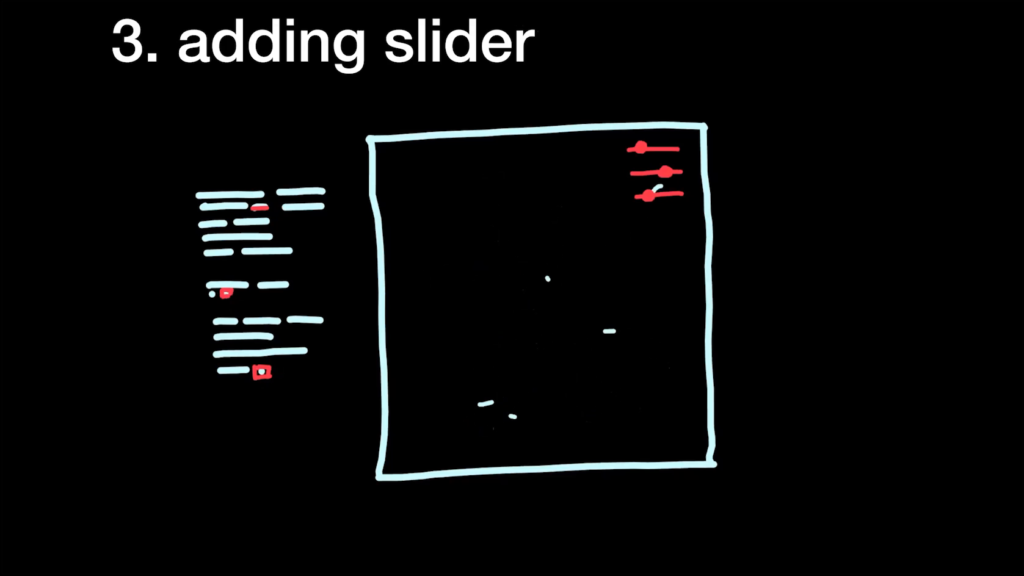

And I think the immediate next step, at least for me, what happens is that I have this urge of like, put on the Bret Victor hat and I’m going to put some sliders into that. And here you can use a lot of tools. In the past I’ve actually used Reza’s ofUI when I was working mostly on openFrameworks. And here a series of challenges comes, right. You’re trying to—like you have this kind of puppet and you’re pulling the strings, and you want to define what is the sweet spot of each one. So you have to define the ranges that they work on, and which will be the nice defaults to have when the thing opens. And once you’re done, you go through another series of kind of curiosity driving us. Like now there’s a slider, how can I make this code and this algorithm really speak through me, right, amplify my voice. How can I find my own voice in this or something that I’m interested in pursuing.

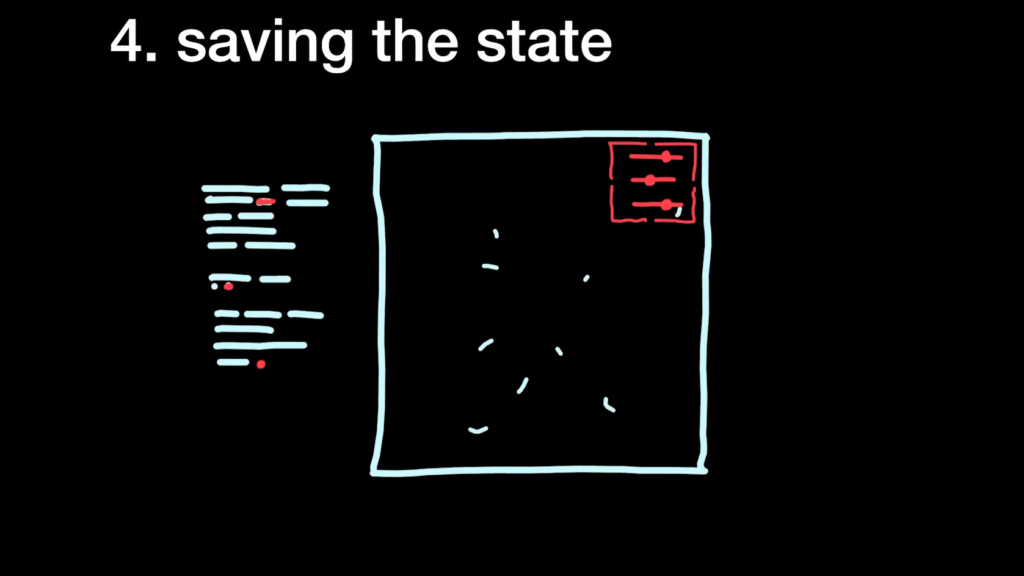

And that leads us to the fourth step which I have found, which is you want to save it. You want to kind of make an amber copy of that and store it somewhere. And the step here brings another challenge. So if you’re not using a mature UI you will be faced with like, which format should I make it? Should I make it something that is easy for GitHub to source control, or should it be something binary, and I don’t care so much about that. But this part really is kind of like a moment of collecting different iterations and variations of your own work. Which is fascinating.
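One way to picture the "easy to source control" option is a plain-text preset. The sketch below is only an illustration, not the speaker's code: it writes parameters as indented JSON with sorted keys so that two saved variations produce small line-by-line diffs, and it falls back to defaults for anything a saved file omits. The parameter names are hypothetical.

```python
import json


def preset_to_text(params):
    """Serialize parameters as stable, diff-friendly JSON text."""
    # Sorted keys and fixed indentation keep the output identical
    # from one save to the next, so version control shows real changes only.
    return json.dumps(params, indent=2, sort_keys=True) + "\n"


def preset_from_text(text, defaults):
    """Parse a saved preset, filling in defaults for missing parameters."""
    stored = json.loads(text)
    return {**defaults, **stored}


# Hypothetical parameters for a particle system.
DEFAULTS = {"spread": 1.0, "speed": 0.25, "count": 500}
```

Saving is then a matter of writing `preset_to_text(params)` to a file, and a preset saved before a new parameter existed still loads because of the defaults.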

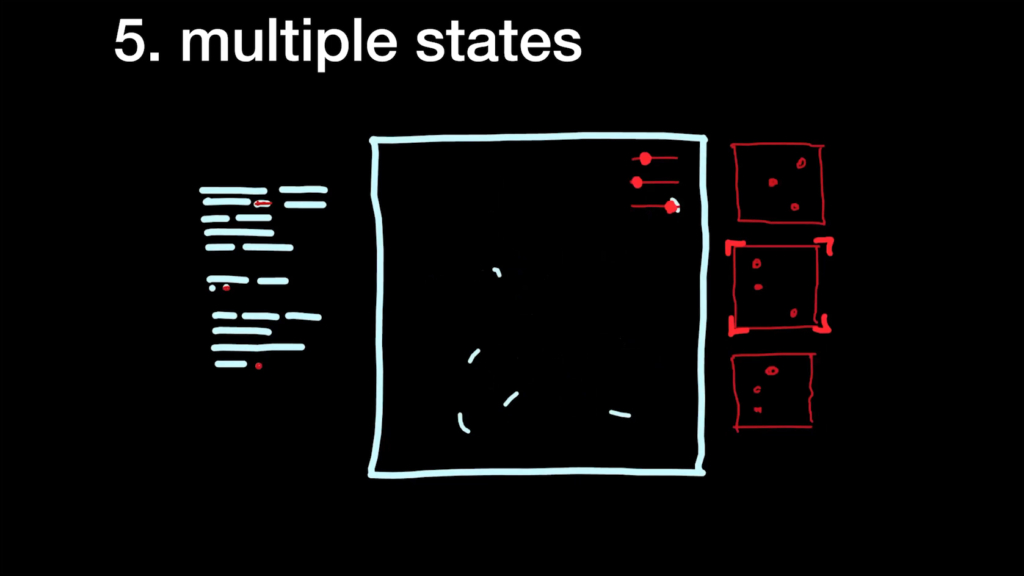

But that brings us to the next step, which is your program now needs to deal with multiple states. So you need to load it correctly, and it needs to transition correctly. At this point you also have to deal with the source control, having a way of keeping up with all these slightly different variations and having ways of prioritizing and kind of like…you know, sometimes we fall into the typical file formatting of like “finalFINAL/final-version1.” Everyone has a different version of that, but I usually…that has happened.

And I think this step kind of opens up the final one, which is you realize that very interesting things happen in between those stages. And this really opens to kind of making compositions over time, right. My particle system is all clustered together, very tightly tied, and then I release it. And they come out very fast into the space. Or something like that. And in the past I have been tempted to also implement a whole like timeline for that, in order to be able to do some storytelling with this composition.
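The idea that interesting things happen in between saved states can be sketched as interpolation between two presets. This is a minimal illustration under assumed names (the `tight` and `released` states and the `spread` and `speed` parameters are hypothetical), using a smoothstep ease so the transition starts and ends gently.

```python
def smoothstep(t):
    """Ease a 0..1 progress value so motion starts and ends gently."""
    t = max(0.0, min(1.0, t))
    return t * t * (3.0 - 2.0 * t)


def blend(state_a, state_b, t):
    """Interpolate every parameter shared by two states at progress t."""
    s = smoothstep(t)
    shared = state_a.keys() & state_b.keys()
    return {k: state_a[k] + (state_b[k] - state_a[k]) * s for k in shared}


# Hypothetical saved states: a tight cluster, then a fast release.
tight = {"spread": 0.0, "speed": 0.0}
released = {"spread": 2.0, "speed": 4.0}
```

Calling `blend(tight, released, t)` with `t` driven by a clock gives a very small timeline; a fuller one would chain several states with their own durations.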

So, this is the overall pattern that I have followed, and hopefully other people feel that they have been there too.

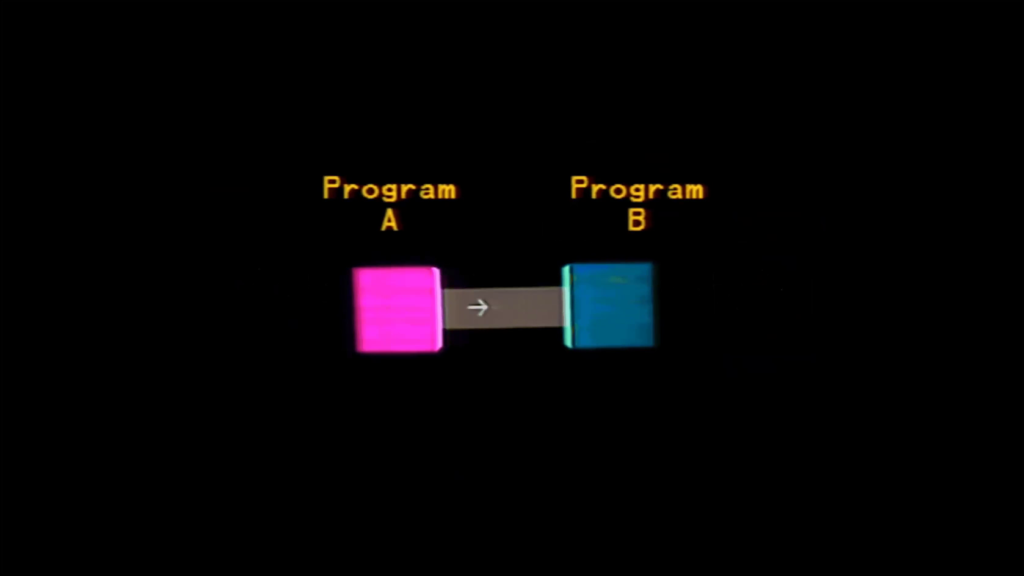

And at this point I want to propose a different pattern I have found with glslViewer. It’s not particularly better or worse, but it kind of has helped me to deconstruct this in order to not spend so much time reimplementing UIs. And it’s a very old pattern, so I’m using the word “new” here taken with a grain of salt because it’s new for me but it’s very old. Actually it came from the Unix principles of design, which is old, as old as like C and mainframes.

And what really struck me from that model is this idea of like, instead of having one program that does it all…like it has all the buttons and has all the whistles, and all the features, you go more for simple, very atomic or modular structures where each one of the programs does one thing but it does it really well.

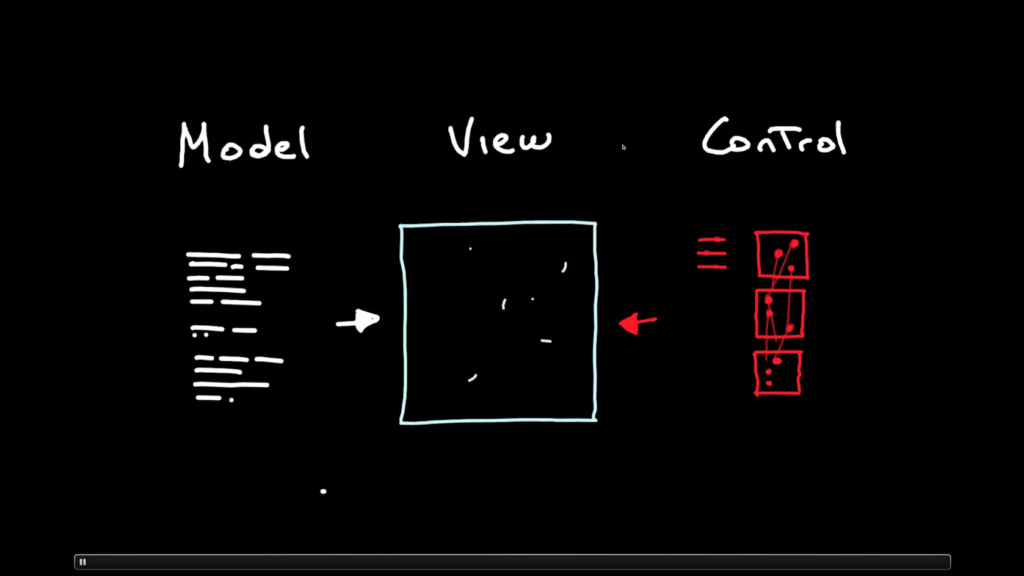

And nowadays there’s a different paradigm, a more modern name for this, especially when you develop programs, which is this idea of separating the model, the view, and the controllers. And with this I’m kind of getting closer to glslViewer.

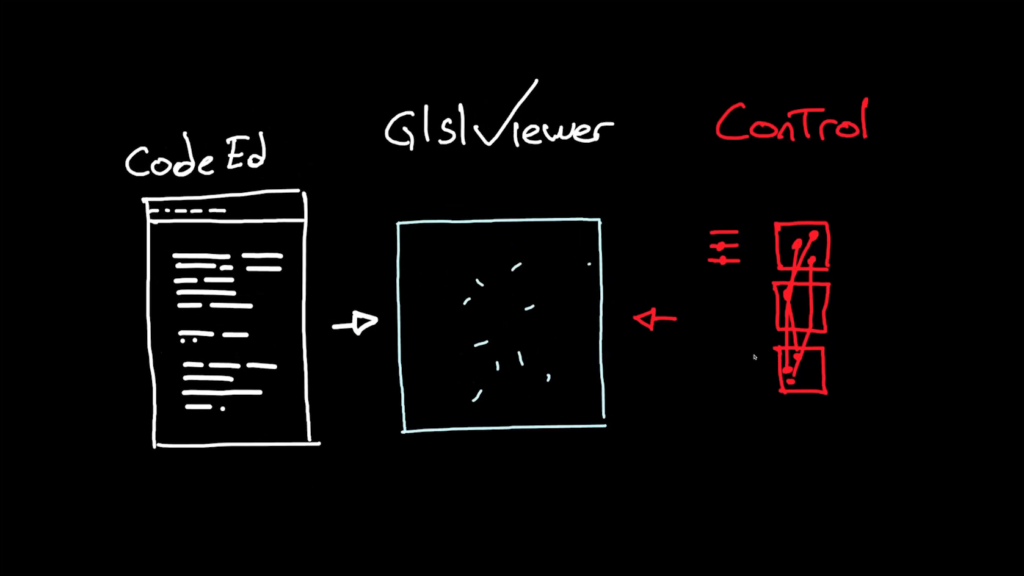

So the model in our case when you are… And to give a small introduction, glslViewer is a shader sandbox. And the code is gonna be the model, the view is gonna be the rendering of that, and the control is the parameters that we feed into it, right. And hopefully you can grab this model and think in your own applications, like we could say with Processing or with openFrameworks or whatever pattern you’re using.

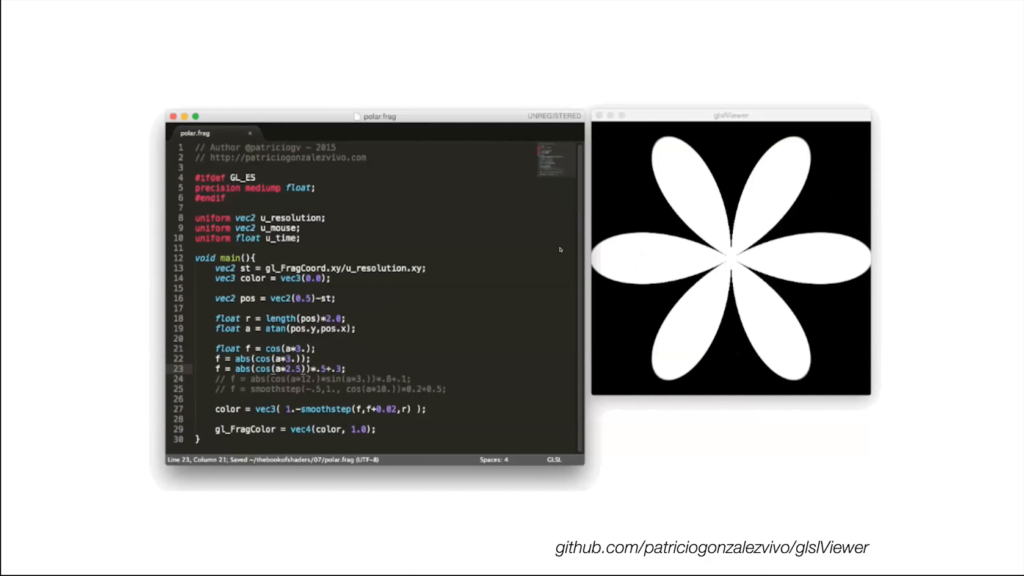

So let’s get into glslViewer. And glslViewer, to give context, was born while I was developing The Book of Shaders. The book really clicked for me when I started putting the text together with these sandboxes. Because it really helps in this jump— The concepts can be very abstract and new for some people, so sharing this path between learning a new concept and tinkering with it and poking into some code, I thought it would really help. And I think that it does, and part of the popularity of the book comes from that.

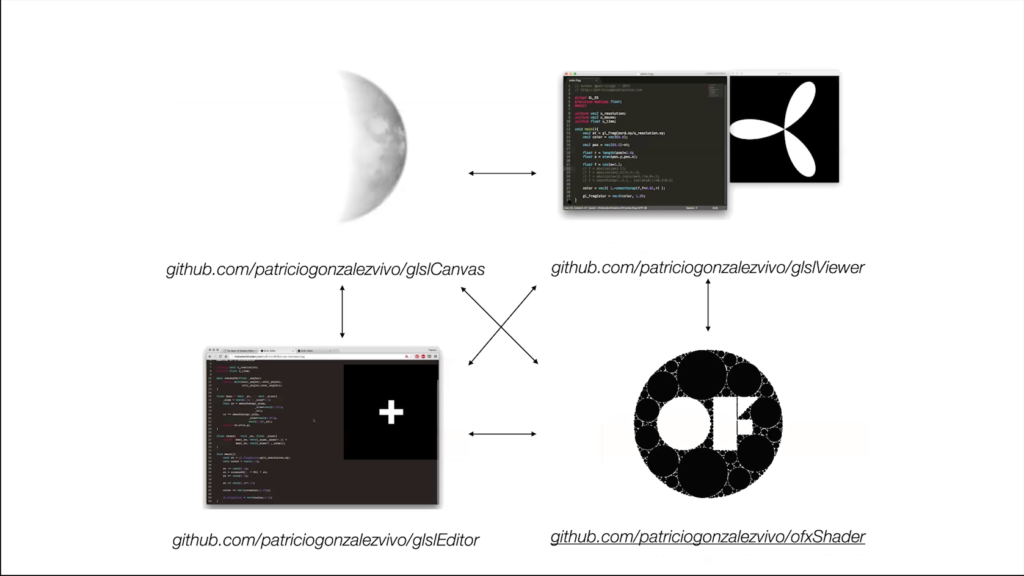

So for that I made my first sandbox, which is the glslCanvas. And for here there’s already some design decisions. Because it’s an educational text, for me it was important for the text to be the primary interface. And this is kind of major. If you go to other platforms like Shadertoy that are like, wildly more well-known, they really hide all the complexity and you are working in a wrapper of the main shader that runs.

So, I made glslCanvas, which is the sandbox. And around that I made this little editor. And I want to talk a little more about this. So note here that when you touch a variable or a color, these widgets come up. And I want to emphasize how for me, the importance was to put the result of these sliders and color pickers back into the code. Because the code is the main interface. So it’s like the hood of a car. And I want the uniforms to be there. I want all the weird things that you have to put at the top of a shader to be there. And then, that code, as is with these values hardcoded, you can then take it anywhere else. Because it’s just a plain GLSL shader, you don’t have to do any weird tinkering or changing. You pretty much can take it anywhere else. Which is something that on other platforms, because they have to deal with these wrappers, it gets complicated and obfuscated. So I’m gonna keep moving forward.

So, from my own necessity of doing more complicated work, or not relying on the Internet— At that moment I was commuting between Brooklyn and Manhattan, so I had all these hours of commute time. And I was wanting to play with shaders on my little Raspberry Pi computer. So for me it was very important to make it as simple as possible and really fast on something super inexpensive like the computer that I can carry with me that doesn’t belong to the corporation where I’m working or whatever. And just enjoy kind of like the simplicity and abstraction of being just in a small computer which does very little but it does it well.

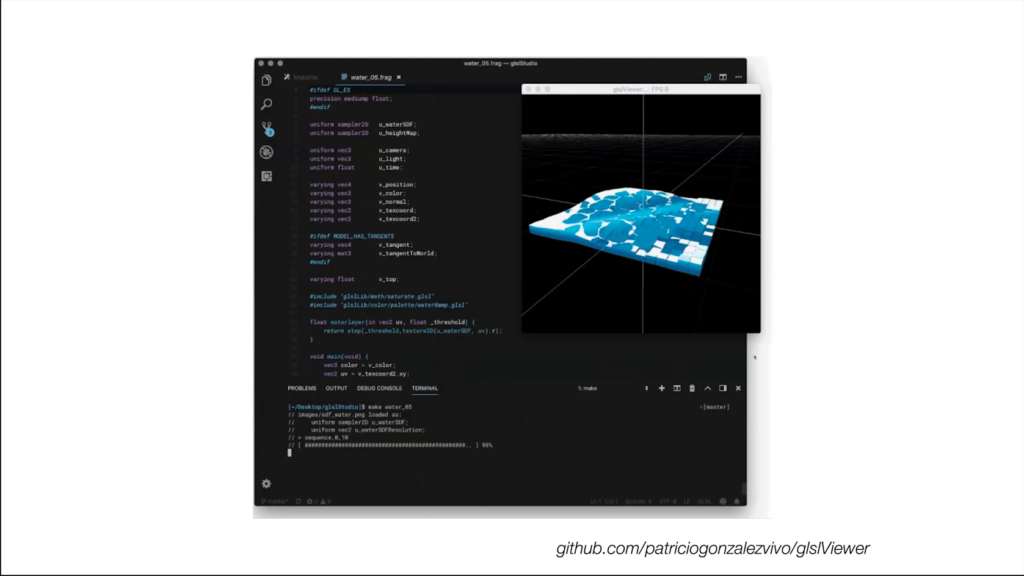

And here you can see the first design decision also is to disentangle glslViewer from the editor. So you can pick up any editor, the code is your interface. This is the way that you access the thing. And you can choose whatever editor you want to.

Then this expands more, and I made an openFrameworks addon because I feel very strongly for that community. So I want to port this code, too.

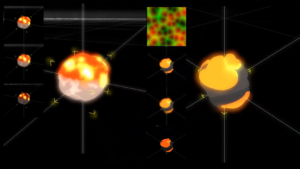

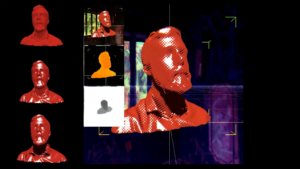

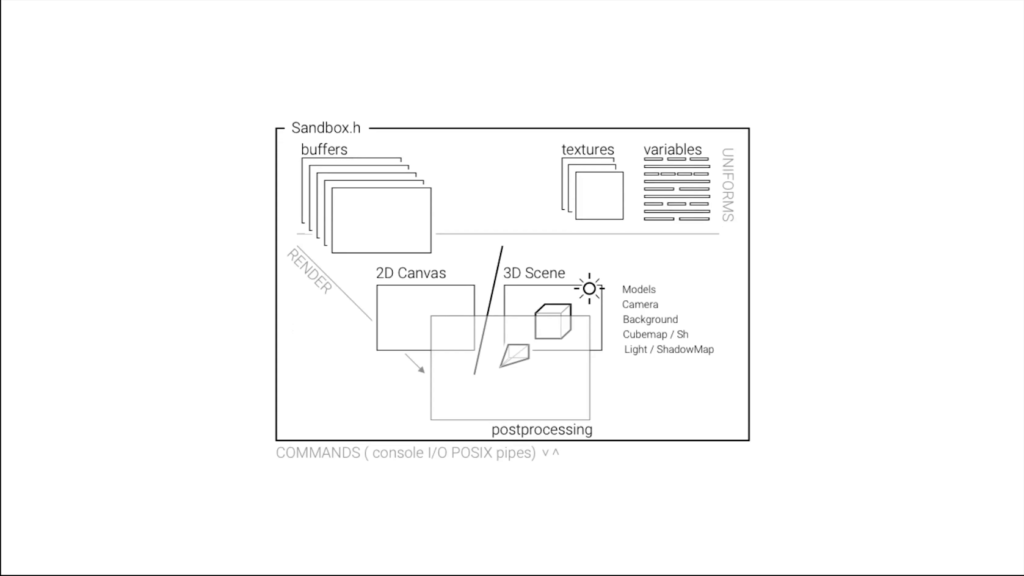

And then this is kind of like an overview of how it grows. It went from being a 2D canvas with some textures, to the ability of passing through console in, which is the pipe in…the POSIX Unix way of passing data from one program to another one. Then I added the ability to have multiple buffers. Then I added the ability to add 3D models, a camera description, a background pass, a cubemap, spherical harmonics, lights, shadows. And post-processing. So this kind of like, puts it all together. And this I think is kind of like the process of my own learning, right.
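To make the pipe idea concrete, here is a small sketch of a program whose only job is to print commands on standard output so another program can read them on standard input. The comma-separated `name,value` line format and the uniform name `u_amount` are assumptions made for illustration; the talk does not spell out the command syntax.

```python
import math
import sys


def uniform_lines(name, steps):
    """Return one 'name,value' line per step, sweeping a sine wave in [0, 1]."""
    lines = []
    for i in range(steps):
        value = 0.5 + 0.5 * math.sin(2.0 * math.pi * i / steps)
        lines.append(f"{name},{value:.3f}")
    return lines


def main():
    # Print and flush each line so a reader on the other end of the pipe
    # sees the commands as they are produced, not in one buffered burst.
    for line in uniform_lines("u_amount", 8):  # hypothetical uniform name
        sys.stdout.write(line + "\n")
        sys.stdout.flush()


if __name__ == "__main__":
    main()
```

Saved under a hypothetical name such as `sweep.py`, its output could be piped into any program that reads line-based commands from its console input.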

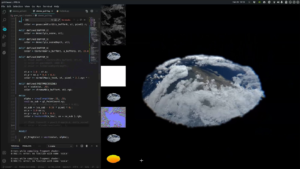

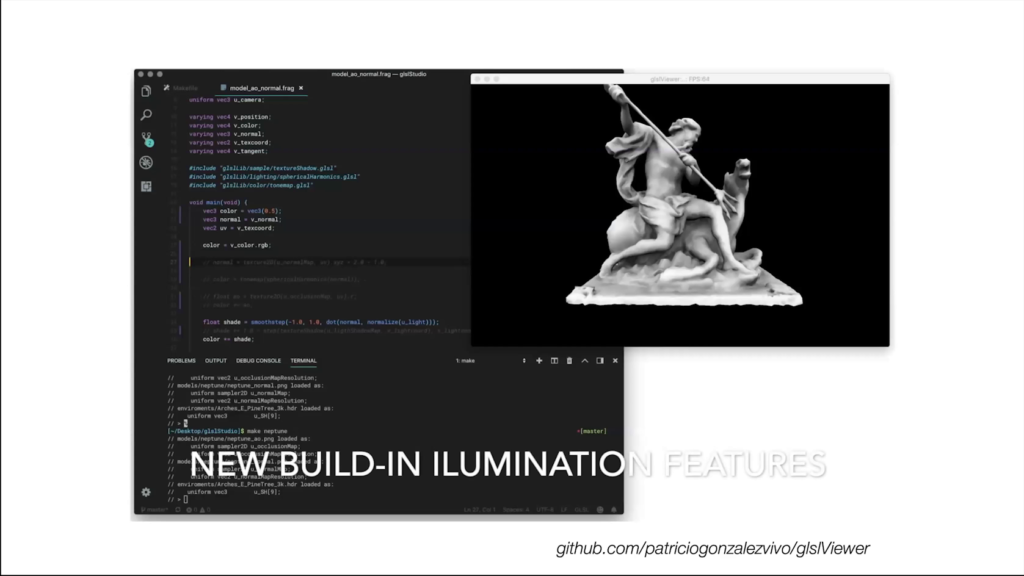

So here you can see a more mature and actual version of glslViewer. In this particular case I was working on some VR content for running on the Quest. So I’m kind of trying to figure out what is the bare minimum of code and the most effective and efficient way of doing some shaders for this very underpowered device.

And you can see that there is a console that’s down there, and this console is what allows me to do debugging, to turn lights on and off, and so forth, to check the generated light maps, etc.

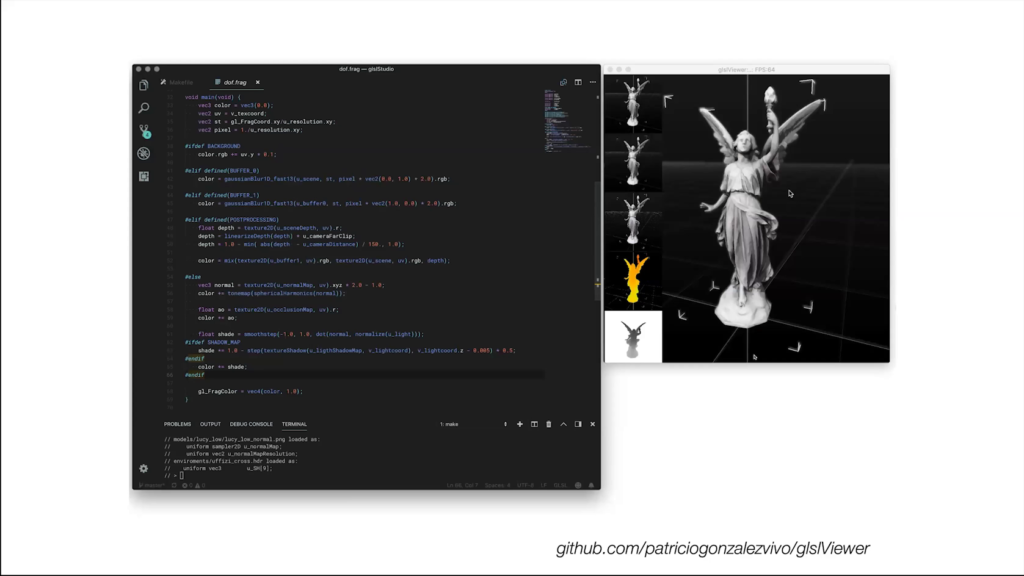

Moving forward, same. In this I’m doing more like post-processing passes.

And here I’m doing images. And this is most of what I do for a living. There’s this amount of work that happens in the code, but I found that I have the need to communicate pretty often on how I’m working on something and how it’s looking. Especially to check with creative directors and so forth. So one of the abilities is that glslViewer allows me to make really quality videos really fast. I make a PNG sequence and it’s [indistinct] to ffmpeg and I can have a video of any kind of quality that I want. I ended up integrating this feature back into the GLSL editor in the book. Because I have found that communicating with others is probably the most crucial part of my work, and I have some limitations in terms of language because English is not my first language. So this really helps.
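The PNG-sequence-to-video step can be sketched as a thin wrapper that builds an ffmpeg command line. The frame naming pattern `%05d.png`, the frame rate, and the output name are assumptions for illustration, since the talk does not show the exact invocation; the ffmpeg options themselves (`-framerate`, `-i`, `-c:v libx264`, `-pix_fmt yuv420p`) are standard ones for encoding an image sequence to H.264.

```python
import subprocess


def ffmpeg_command(pattern, fps, output):
    """Build an ffmpeg argument list that encodes numbered PNGs to H.264."""
    return [
        "ffmpeg",
        "-framerate", str(fps),   # input frame rate of the image sequence
        "-i", pattern,            # e.g. "%05d.png" (assumed naming scheme)
        "-c:v", "libx264",        # H.264 video codec
        "-pix_fmt", "yuv420p",    # pixel format most players can decode
        output,
    ]


def encode(pattern, fps, output):
    """Run ffmpeg and raise if it exits with an error (requires ffmpeg installed)."""
    subprocess.run(ffmpeg_command(pattern, fps, output), check=True)
```

For example, `encode("%05d.png", 30, "preview.mp4")` would turn frames `00000.png`, `00001.png`, and so on into a 30 fps clip.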

So let’s go back to this presentation of the model and let’s cover some ground that we already covered. glslViewer is the viewer, the code happens in the code editor; it’s a different program, and then we have the controls that would happen through the console in.

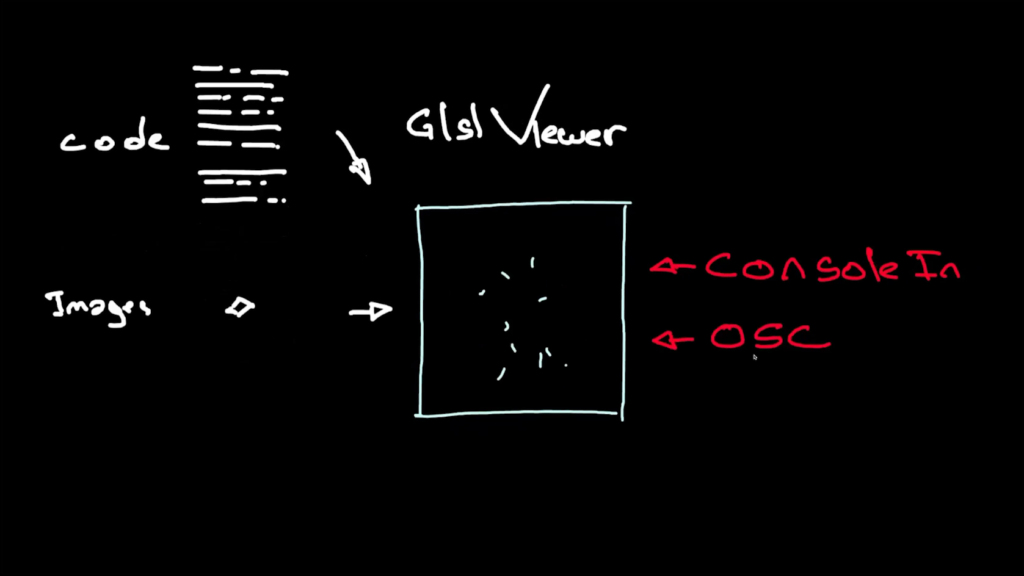

So we went through the history and we learned that besides code, we can drop some things in also as inputs and as interfaces; can be images, and can be geometries. That means that if the image changes or the code changes, both things will change inside the viewer. So the viewer will pick it up.

And then I was showing how the console is a console in. And then most recently, I think a couple of years ago, OSC. So I can communicate with any program that uses OSC in my own computer or from the network. And this was for me a pivotal moment because this allowed me to stop thinking of my own program as an isolated island and start thinking of all the programs that are around and that can communicate with it.
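As a rough illustration of what talking OSC from another program involves, the sketch below hand-encodes a float message following the OSC 1.0 layout (null-padded address, null-padded type tags, big-endian 32-bit floats) and sends it over UDP with only the standard library. The address `/u_amount`, the host, and port 8000 are assumptions for illustration; the talk does not say how incoming addresses are named or routed.

```python
import socket
import struct


def osc_string(text):
    """Encode an OSC string: ASCII, null-terminated, padded to 4 bytes."""
    raw = text.encode("ascii")
    return raw + b"\x00" * (4 - len(raw) % 4)


def osc_message(address, *values):
    """Encode an OSC message whose arguments are all 32-bit floats."""
    type_tags = "," + "f" * len(values)
    payload = b"".join(struct.pack(">f", v) for v in values)
    return osc_string(address) + osc_string(type_tags) + payload


def send(address, *values, host="127.0.0.1", port=8000):
    """Send one OSC message over UDP (host and port are assumed values)."""
    with socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as sock:
        sock.sendto(osc_message(address, *values), (host, port))
```

A call such as `send("/u_amount", 0.5)` then works the same whether the receiver is on the same machine or elsewhere on the network.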

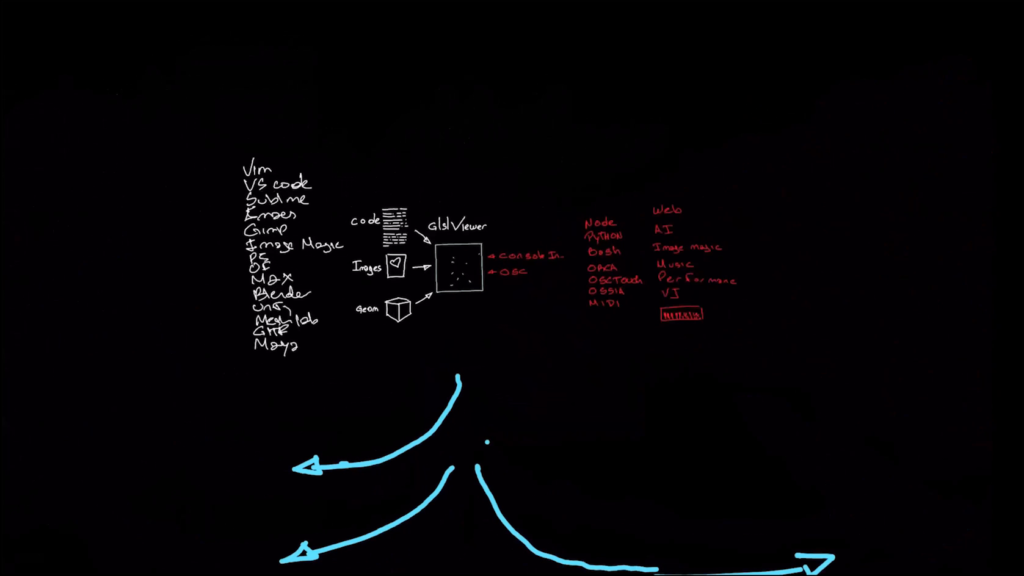

So the image can come from a game, or Blender, or the geometry can come from Blender. The code can be edited in any code editor, like vim or Visual Studio or whatever. And from the console in, I can send commands from Python, from other programs like ORCA, or [TouchOSC], Ossia, it could be a MIDI device. So this opens to using instruments. And this really put me on the path of what really inspires me about Unix, right. It’s this ecosystem of apps that are designed to talk very well with each other. And it’s kind of like an orchestra. There’s a generosity between programs of being good collaborators. So that’s one of the things I tried to be thoughtful of when I developed glslViewer, is how can I make a good collaborator that sends something that is readable or easy to parse.

And I have found some great friends along the way, and these are friends I derive inspiration from so I want to give a free shout out to like Syphon, that Anton Marini is involved with. When I learned about Syphon, it was like this should be the paradigm instead of having these super narrow sandboxes that try to put everything there, having these more dispersed and decentralized ways of working.

Then you have Ossia, which apparently is a little less known. But it’s just a timeline that exports OSC and you can put a lot of things inside it. It can support colors, and you can really orchestrate and define your narrative there.

ORCA, and everything really that Hundredrabbits does is amazing. And they really are composing a whole operating system of interconnected apps. And I think that ORCA as a flexible sequencer really exposes that. Because it really lets you export to different formats.

And then the other one is TouchOSC, which is a great app to have because it really helps you with OSC.

So, back to glslViewer and how it works, most of my work happens and looks like this when it’s not code. And this is just a shader that turns on and runs a program and loads a video, and it’s going to render it headlessly, and it’s going to create a PNG sequence between this moment 0 and the second 3.23. So I use this for my art practice, and so for me this is my workshop. Because this is the place that I come here with these ideas and I can meditate and habitate them.

Sometimes I have to do more…work work, and so I have to design a VFX for VR.

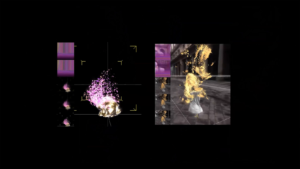

Sometimes I’m driven more by curiosity and like, how I can implement a GPU simulation on glslViewer running a couple of shaders and I jump to do it. Sometimes it’s more fun as a multiverse and I try to match aesthetics.

I have been motivated by Doré and wanted to do some engraving-style shaders.

Part of my artwork is also geospatial, so I made my own LIDAR and I visualized it with glslViewer. And then I composed some crazy…I don’t know, landscapes.

More recently I have been trying, using OSC, to develop partner apps. One is MidiGyver, which allows you to connect things that are MIDI to OSC so I can connect it to glslViewer. So I’m kind of expanding my own ecosystem.

This is another video of me using a nanoKONTROL2 to control these shapes that I do in [indistinct].

And more recently I have been tinkering with an OP‑Z and sending the commands. And this small device really excites me because what I’ve seen on the OP‑Z, on these kind of like musical instruments, is that they’re instruments. They’re already designed to work over time on a composition, and accessibility. And they’re designed as instruments, to amplify our voice.

And thanks to this residency I have been able to work on the video feature, which this will kind of like, wrap it up and I feel that this program’s becoming feature complete. So I’m very grateful for this residency because I have been able to put some time into it. And I have been having a lot of fun doing different post-processing effects just with glslViewer and a couple of shaders.

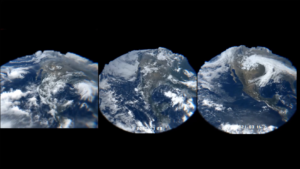

And also because my artwork…there’s a lot of it but it’s very spatial, I have been working with satellite data and deriving depth from it. So I’ve also been doing these explorations about clouds and compounding these different satellite views of specific days. And all this is glslViewer. This is how flexible and useful for me it is, and I hope other people find it too.

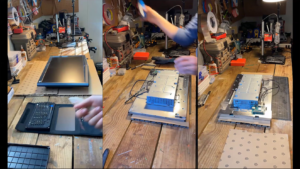

This is way more recent. I have been kind of going back to this old project where I had been disassembling old LCD monitors that I salvaged from being thrown away. And I put a little Raspberry Pi in. Because glslViewer has been designed for running on these small devices, I can make little tiny shaders that run well on them, and that’s part of my practice. So I have been working on this meditation about bringing value to these old metallic structures and kind of elevating them through art.

So before leaving and, in this little space that I have, if I still have some space, I want to point out that there’s also this thing about glslViewer that you can pipe it. So this I mentioned before is part of the ability of sending one command from a different app. So I want to point out one project, one big project where I was able to kind of use this.

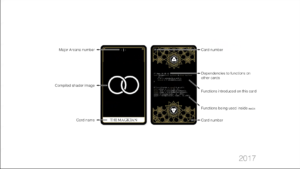

And this is PixelSpirit, which is a tarot deck that has GLSL code on the back and that compiles what is in the front. And I presented different cards, and on each card the code gets a little more complicated. I introduce different functions and those functions then get called by other cards. So the more you go into the deck, the abilities of what is possible to render becomes more complicated and intricate.

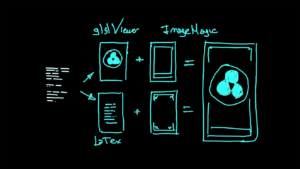

And in order to make this device I actually used glslViewer. So I made a little Python script which grabbed the text of the code—the cards are basically fragment shaders. Part of it, the shader gets rendered through glslViewer, the text gets composed by Pandoc and converted to LaTeX, and LaTeX makes a PNG from it. And then I run this through ImageMagick, aggregating all the decorations on the edges. And this gets printed. And this is actually the make routine. So I basically compile a tarot deck.

And this really speaks to my heart of what a creative operating system of interconnected creative programs means. Like the ability to—okay, you want to do something material, you want to play with satellites, you want to send something to a printer. And the tool is able to adapt to these different workflows.

And then it becomes physical objects. And these are very personal objects, so I’m very grateful when people get it.

One more thing that I want to talk about is I spent some time in 2017 doing AR filters. And one of the challenges of working with big tech companies is that sometimes you have the privilege to use the latest iPhone, and sometimes you have to develop something that has to run on a super obscure device on Android devices somewhere in the world. And that requires some discipline, and that means benchmarking usually, in the computer graphics scene.

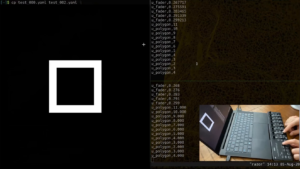

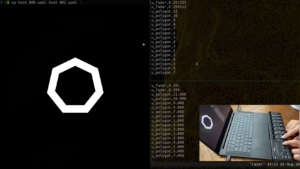

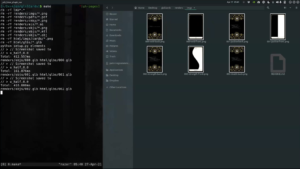

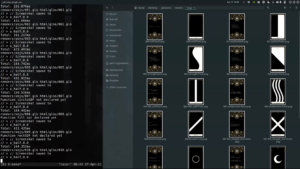

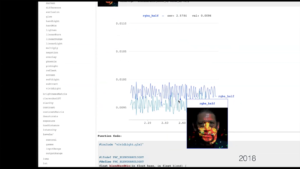

So I want to show a little video of how I’m using glslViewer running on a Raspberry Pi Zero, which is like a $5 computer, to basically test all the functions that I’ve made in compiling a library. This library’s become kind of my life and I have been adding into it. But while this is doing the testing making sure that these functions really run correctly, it’s also doing some bench performance testing on them—benchmarking. So this benchmarking for me is great because when I have to design I can actually have a rough idea of how expensive it will be to do this or that effect.
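The talk does not show the harness itself, so the following is only a generic sketch of doing a correctness check and a timing measurement in the same pass. Plain Python callables stand in for shader functions here, and the `mix` function, tolerance, and repeat count are hypothetical choices; real shader benchmarking would measure frame time on the device instead.

```python
import statistics
import time


def check_and_time(func, args, expected, repeats=5, tol=1e-6):
    """Verify one function against an expected value and time repeated calls."""
    passed = abs(func(*args) - expected) <= tol
    samples = []
    for _ in range(repeats):
        start = time.perf_counter()
        func(*args)
        samples.append(time.perf_counter() - start)
    # The median is less sensitive to a single slow run than the mean.
    return {
        "name": func.__name__,
        "passed": passed,
        "median_s": statistics.median(samples),
    }


def mix(a, b, t):
    """Linear interpolation, standing in for a library function under test."""
    return a + (b - a) * t
```

Collecting one such record per function gives both a pass/fail report and a rough relative cost table, which is the kind of information the speaker describes using at design time.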

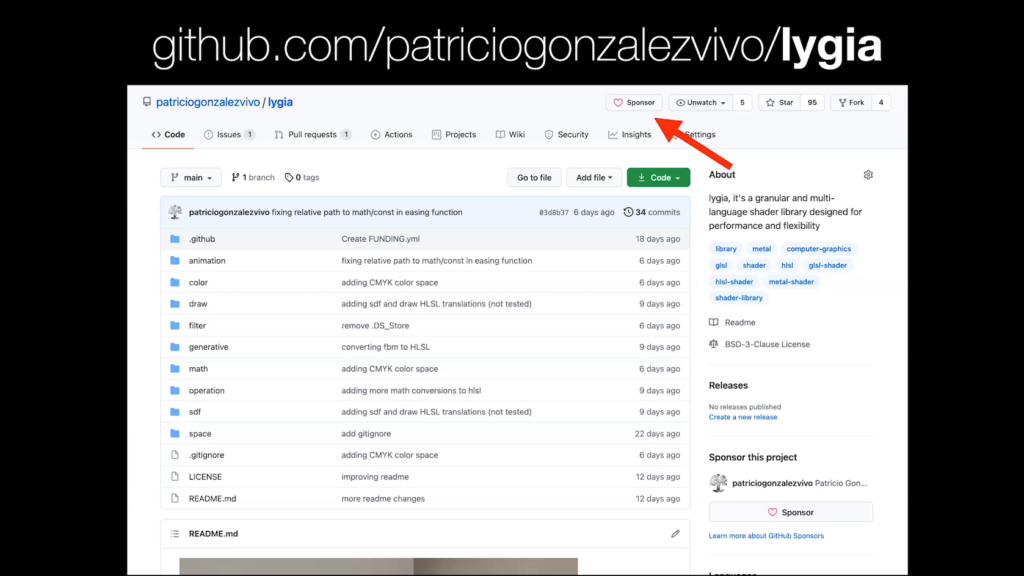

And see here I made this kind of web site that’s just to visualize what the extent of the library is, but also to see the metrics of how much each function costs.

And what I want to say is that I’m open sourcing this, and I’m very excited. I named it Lygia, after Lygia Clark’s work. It’s a multi-language GLSL shader library. It’s translating GLSL, HLSL, and it’s going to be in Metal, too. And there are some plans for other things. People are stepping up. I haven’t talked publicly, but I’m very excited about it.

And I’m hoping that people love it and support it. So I added a little support button to all my GitHub things. So if you’re a user, sponsor.

And…that’s all. Thank you so much.

Golan Levin: Patricio, thank you so much. We have maybe thirty seconds for one question, and there’s so many ideas in this, from your ecological practice recovering LCD monitors to sort of the new kinds of tools and new ways of programming we see you exploring, and the ecologies of small tools. This has been a very technical talk, though, and I guess my quick question if you can give it just a moment is, your biography mentions alchemy. You know, you made a PixelSpirit deck similar to tarot. You even used the ImageMagick library. And I wonder if you could briefly comment on the relationship of your work to magic.

Patricio Gonzalez Vivo: Well, in a previous life I was a clinical psychologist. And I did a specialization in expressive art therapy. When you work with expressive art therapy, you’re dangerously close to Carl Jung. And when you’re reading Carl Jung’s books you get really exposed to mythology and basically to this universe of symbols. And you understand that our way of learning is through symbols and archetypes. And I think you develop a special love for those. And so when I transitioned into code, it was hard not to see that the same mysticism was around these slightly more kind of hard symbolic structures, which are nothing more than just a mirror of our mind, just as mythology.

Levin: Thank you so much Patricio.