Beau Gunderson: Hello. I am Beau Gunderson. I made some bots. These are the bots: [slide reading “@vaporfave, @plzrevisit, @theseraps, @___said (all node, all on github)”]. I will put a link to these slides in IRC, too, because everything is linked and attributed if you want to go explore.

All my bots are in Node, so I’ll probably be talking about Node a little bit. I’ve also made some modules: things that get text from news sources like CNN, some canvas stuff that’s useful for image bots, and some caching stuff. [Slide also lists bot-utilities.]

I’m going to talk about image transform bots. Just kidding, I’m going to talk about transform bots in general. But most of them are image bots. What is a transform bot? It’s a bot that you can @-reply to and get something back. There are three main types that currently exist. You can send an image and get an image back. @badpng is the first example I’ve seen of this, by Thrice and Andi McClure, and its first tweet was September 2, 2014, so this is a pretty recent bot phenomenon.

Here’s a delightful image of a kitten:

https://mobile.twitter.com/hjpotel

And this is what @badpng does to it:

https://mobile.twitter.com/badpng/status/527479565644353536

Which is beautiful in its own way.

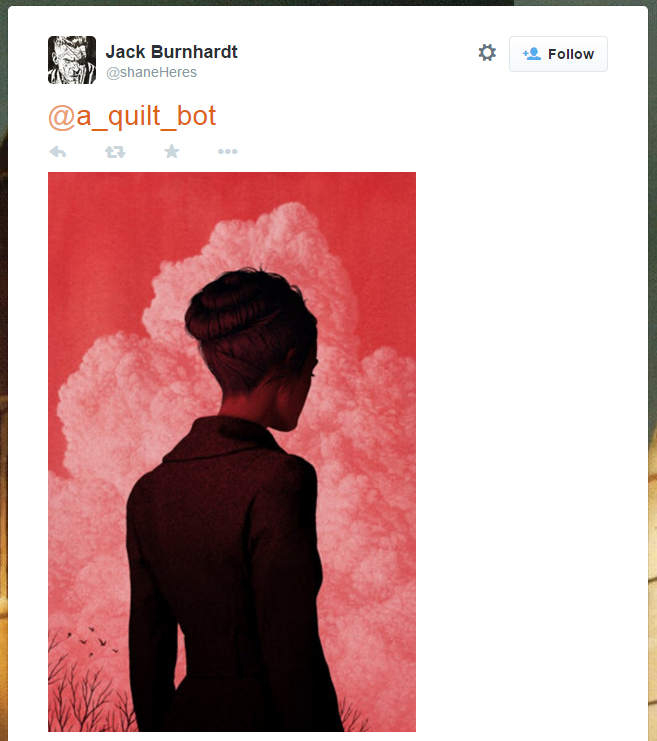

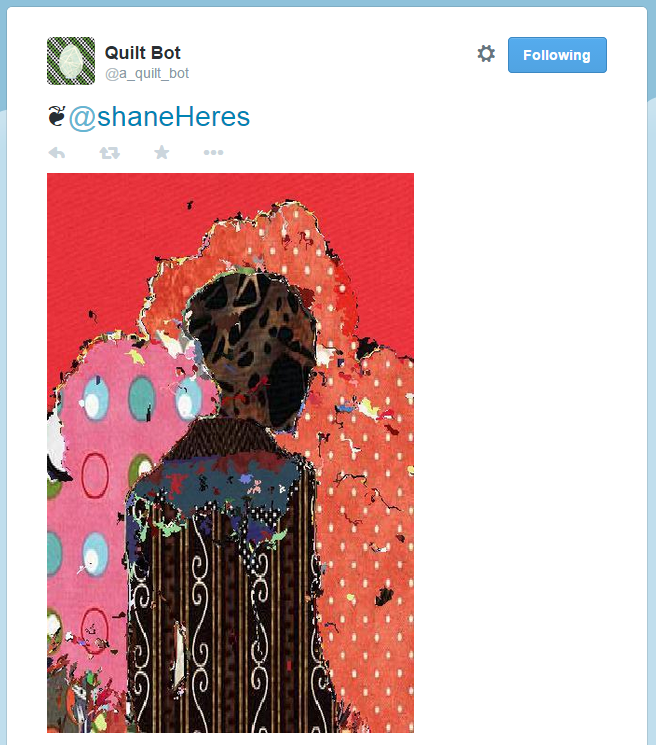

There’s @a_quilt_bot by Bob Poekert (September 8, 2014). So six days later we get a bot that will take an image like this:

And then reproduce it out of quilt fabric, which is pretty cool:

Next up is @pixelsorter by Way Spurr-Chen, and its first tweet was September 11, 2014. It’ll take something like the Windows 95 logo you see here:

https://mobile.twitter.com/NickRichTea/status/531178850848632832

And sort all the pixels:

https://mobile.twitter.com/pixelsorter/status/531178896105148416

There’s a whole web page about how to interact with this, which is cool: it has its own documentation. You can change all the ways in which the pixels are sorted.
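@pixelsorter’s own sorting modes are described on its documentation page and aren’t reproduced here. As a hypothetical sketch of the general idea, one common pixel-sorting variant sorts the pixels within each row by brightness. This assumes a flat RGBA buffer laid out like a canvas `ImageData` (`[r, g, b, a, r, g, b, a, ...]`); the function names are illustrative.

```javascript
// Perceived brightness using Rec. 601 luma weights.
function brightness(r, g, b) {
  return 0.299 * r + 0.587 * g + 0.114 * b;
}

// Hypothetical helper: sort the pixels of each row, darkest first.
// `data` is an RGBA buffer in canvas ImageData layout; returns a new buffer.
function sortRowsByBrightness(data, width, height) {
  const out = new Uint8ClampedArray(data.length);
  for (let y = 0; y < height; y++) {
    // Collect this row's pixels as [r, g, b, a] tuples.
    const row = [];
    for (let x = 0; x < width; x++) {
      const i = (y * width + x) * 4;
      row.push([data[i], data[i + 1], data[i + 2], data[i + 3]]);
    }
    row.sort((p, q) => brightness(p[0], p[1], p[2]) - brightness(q[0], q[1], q[2]));
    // Write the sorted row back at the same vertical position.
    row.forEach((px, x) => out.set(px, (y * width + x) * 4));
  }
  return out;
}

// Usage: a 3x1 image of white, black, and mid-grey pixels.
const img = new Uint8ClampedArray([255, 255, 255, 255, 0, 0, 0, 255, 128, 128, 128, 255]);
console.log(Array.from(sortRowsByBrightness(img, 3, 1)));
```

Sorting rows independently keeps the image’s vertical structure, which is what gives pixel-sorted images their streaky look; sorting by hue, by column, or only within thresholded spans are the kinds of knobs a bot like this might expose.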

Then there’s @plzrevisit, which was also at the end of September. This one’s mine. It’ll take an image like this:

https://mobile.twitter.com/inky/status/531093255208046593

and run it through a service called revisit.link. (Harrison M is the one who gave me the idea for this bot, I think.) Revisit is like glitch as a service. You can send images to it and get back glitched images. There are maybe 50 different filters. So @plzrevisit just takes whatever you give it, runs it through three to five random glitch filters, and then gives you back this:

https://mobile.twitter.com/plzrevisit/status/531093423479328768
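The "three to five random filters" step can be sketched as picking a random number of distinct filters and applying them in order. This is a minimal sketch, not @plzrevisit’s code: the filter names are hypothetical placeholders, and revisit.link’s real filter list and API aren’t shown here. The random source is injectable so the choice can be reproduced.

```javascript
// Hypothetical filter names; not revisit.link's actual list.
const FILTERS = ["invert", "shift", "scanlines", "rainbow", "pixelate", "swapChannels"];

// Pick between `min` and `max` distinct filters, using a partial Fisher-Yates shuffle.
// `rng` must return a float in [0, 1), like Math.random.
function pickFilters(filters, min = 3, max = 5, rng = Math.random) {
  const count = Math.min(filters.length, min + Math.floor(rng() * (max - min + 1)));
  const pool = filters.slice();
  for (let i = 0; i < count; i++) {
    const j = i + Math.floor(rng() * (pool.length - i));
    [pool[i], pool[j]] = [pool[j], pool[i]];
  }
  return pool.slice(0, count);
}

// Apply the chosen filters one after another. `applyFilter(name, image)` is a
// hypothetical async function standing in for a call to the glitch service.
async function glitchChain(image, names, applyFilter) {
  let result = image;
  for (const name of names) {
    result = await applyFilter(name, result);
  }
  return result;
}

// Usage with a stand-in "filter" that just records which steps ran.
glitchChain([], pickFilters(FILTERS), async (name, img) => img.concat(name)).then(console.log);
```

Picking distinct filters avoids running the same glitch twice in one reply; allowing repeats would just mean drawing each step independently instead of shuffling.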

You can also send text and get text back. Dorothea Baker has @unicodifier, which happened this last month [October 3, 2014]. You can send it “ethics in game journalism”:

https://mobile.twitter.com/inky/status/526528445799735298

and get back an awesome Unicode-ified version of it:

https://mobile.twitter.com/unicodifier/status/526528499662585856
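What @unicodifier does internally isn’t described here. As a hypothetical illustration of one simple text-to-text transform of this kind, printable ASCII can be mapped onto its fullwidth Unicode forms (U+FF01 to U+FF5E sit exactly 0xFEE0 above U+0021 to U+007E).

```javascript
// Map printable ASCII to its fullwidth form, e.g. "A" -> "Ａ".
// Characters outside printable ASCII are passed through unchanged.
function toFullwidth(text) {
  return Array.from(text, (ch) => {
    const code = ch.codePointAt(0);
    if (code >= 0x21 && code <= 0x7e) return String.fromCodePoint(code + 0xfee0);
    if (code === 0x20) return "\u3000"; // ideographic space, the fullwidth counterpart of " "
    return ch;
  }).join("");
}

console.log(toFullwidth("ethics in game journalism"));
```

A bot built this way would typically pick among several such mappings at random so that each reply looks different.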

You can also send text and get an image. If you tweet to @plzrevisit, you get back your text as an image:

https://mobile.twitter.com/plzrevisit/status/530985684387913730

You should all go tweet some text at @plzrevisit now and see what happens.

I realized in making this that there’s nothing you can send an image to and get text back that’s based on the image. It made me think of a blind iPhone user who did a YouTube video about using the camera, and it would tell him the color that the camera was looking at. So you could imagine something similar for images.
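A very rough version of that image-to-text idea is to average an image’s pixels and report the nearest named color. This is a hypothetical sketch, not how the iPhone app in question works: it assumes an RGBA buffer in canvas `ImageData` layout and uses a deliberately tiny palette.

```javascript
// Tiny illustrative palette; a real bot would want a much larger named-color list.
const PALETTE = {
  black: [0, 0, 0], white: [255, 255, 255], red: [255, 0, 0],
  green: [0, 128, 0], blue: [0, 0, 255], yellow: [255, 255, 0],
  orange: [255, 165, 0], purple: [128, 0, 128], gray: [128, 128, 128],
};

// Average the R, G, B channels of a non-empty RGBA buffer, ignoring alpha.
function averageColor(data) {
  const sum = [0, 0, 0];
  const n = data.length / 4;
  for (let i = 0; i < data.length; i += 4) {
    sum[0] += data[i];
    sum[1] += data[i + 1];
    sum[2] += data[i + 2];
  }
  return sum.map((s) => Math.round(s / n));
}

// Return the palette name with the smallest squared Euclidean distance in RGB space.
function nearestColorName(rgb, palette = PALETTE) {
  let best = null;
  let bestDist = Infinity;
  for (const [name, [r, g, b]] of Object.entries(palette)) {
    const d = (rgb[0] - r) ** 2 + (rgb[1] - g) ** 2 + (rgb[2] - b) ** 2;
    if (d < bestDist) {
      best = name;
      bestDist = d;
    }
  }
  return best;
}

const pixels = new Uint8ClampedArray([255, 0, 0, 255, 235, 20, 10, 255]);
console.log(`Mostly ${nearestColorName(averageColor(pixels))}`);
```

Plain RGB distance is crude (a perceptual space such as CIELAB matches human judgments better), but it is enough to show the shape of an image-in, text-out bot.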

If people have more examples of these bots that take in some input from Twitter or Tumblr or whatever and then modify it in some way, give them to me. These are the five I know about that are in this in-out format, that don’t do anything else.

In the image bots there are some differences, and they’re minor. You have this idea of a direct reply versus a prefixed reply. So if you follow @badpng, for example, I don’t think you see anything in your timeline because they’re all direct replies, whereas the other three image bots prefix their replies. There’s this decision whether to include text or not. @pixelsorter includes “hi” with every tweet. There’s also the decision whether to support multiple replies or reply loops. I don’t know if it was planned for or not, but it certainly became a newsworthy event when these bots were talking to each other and transforming these images. Mine, because I haven’t figured out the best way to prevent long loops, doesn’t yet. So there’s a choice there, or lack of a choice.
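The two choices described above, direct versus prefixed replies and how to avoid endless bot-to-bot loops, can be sketched in a few lines. This is one hypothetical approach, not what any of the bots mentioned actually do: a prefixed reply simply puts something (here a "." by default) before the @handle, and the loop guard caps how many times the bot will answer the same account within a sliding time window.

```javascript
// Build reply text. Starting with "@user" makes a direct reply; putting a prefix
// such as "." in front of the handle makes a "prefixed" reply.
function composeReply(screenName, { prefix = "", text = "" } = {}) {
  const head = `${prefix}@${screenName}`;
  return text ? `${head} ${text}` : head;
}

// Hypothetical loop guard: allow at most `maxReplies` replies to the same account
// within `windowMs`, so two transform bots can't ping-pong forever.
class ReplyLimiter {
  constructor(maxReplies = 3, windowMs = 60 * 60 * 1000) {
    this.maxReplies = maxReplies;
    this.windowMs = windowMs;
    this.history = new Map(); // screenName -> timestamps of our recent replies
  }

  allow(screenName, now = Date.now()) {
    // Drop timestamps that have fallen out of the window.
    const recent = (this.history.get(screenName) || []).filter((t) => now - t < this.windowMs);
    const ok = recent.length < this.maxReplies;
    if (ok) recent.push(now);
    this.history.set(screenName, recent);
    return ok;
  }
}

const limiter = new ReplyLimiter();
if (limiter.allow("vaporfave")) {
  console.log(composeReply("vaporfave", { prefix: ".", text: "hi" }));
}
```

A per-account cap still lets a short exchange happen, which was the fun part of the bot conversations, while guaranteeing it ends; tracking reply depth per conversation thread would be a finer-grained alternative.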

Why did this happen now? We’ve had update_with_media on Twitter via the API since August 2011, so you could upload pictures for a long time. We got a “rich photo experience” in September [2013]. “Remember the old days when you used to have to click on a picture on Twitter to see it?” But the short answer is I don’t know why we’ve had this capability for a year and nobody’s done anything with it until now in terms of transforming image bots. But once the idea arose, there was this month where like four of them popped into existence, which I think is pretty cool, and I want to see more of them. It’s interesting that the capability was there, but no one had used it in that way.

So what now? Make more, please. And if you use Node, I’ll help you. I will be tech support. I would also like to see some more bot-to-bot interaction in terms of transformation. This is a snippet of code from my bot utilities library. This is the “HEY_YOU” function for @-replying to people with emoji. This is one of my other bots, @vaporfave:

https://mobile.twitter.com/vaporfave/status/531131800349716480

This is an image that @vaporfave creates. It’s like, Ecco the Dolphin sprites and weird vaporwave nonsense. And it’s sending this image to @plzrevisit so that it can be transmogrified and look like this when it comes out, which is very rainbow glitch awesomeness, and you can see @plzrevisit is like, “Yeah, double high five” when it sends it back:

https://mobile.twitter.com/plzrevisit/status/531132351469088768

I’m also curious what you all want to see. And that’s all I’ve got.

Matt Schneider: Mine’s kind of just a personal narrative. I was just kind of interested in the idea of image bots, and they first popped into my awareness when I started my @sketchcharacter bot. It was supposed to be @sketchycharacters, but you know, username length limits. I wanted to create brand new types of punctuation, and I started by using the Python Imaging Library (PIL) to try to draw things. And I thought “this is really bad,” so I started using Unicode characters to create these new punctuation marks.

But then the idea stuck around because I was trying to create a text adventure bot that would randomly generate bits of text adventures, and I was running into the issue that the tiny snippets I had to work with just didn’t seem quite right, so I thought, “Okay, I’m going to cheat. I’m going to create an image that’ll say everything I want.” Then I started feeling really guilty, because the idea of creating an image to take the place of text just didn’t sit right with me and my idea of what Twitter was supposed to be. That changed just a couple days later when Andrew Vestal started his @YouAreCarrying bot, which was really really awesome and I got super jealous. So I decided to push forward with mine, but again I just didn’t feel right just posting images of text.

So rather than doing that I decided to create a fake emulator for old computer screens. You know, the green or the amber text. Once I did that I actually felt really good about what I was doing. And weirdly enough, because those screens fit so few characters, I actually didn’t even have to go over the 140 character limit. So suddenly I was able to create these images that felt really good, that seemed really right as a Twitter bot, and I just started posting them guilt-free. The best part is that I could even start using the tweet text itself to post supporting text. So I could have a command or the directories displayed as a way of attributing some of my experimental options, where people were able to send direct messages to me and get them posted to it.

And that started pushing me on in wanting to do more of these Twitter bots, because I suddenly realized that even though I and a few other people I talked to felt that it was really cheating, there’s a way that you can kind of take advantage of everything that Twitter gives you, all the way from the 140 character limit to the fact that we have these images, to create really fun and interesting bots. The one that I created most recently that I’m most proud of is my @crossddestinies, which is based on Italo Calvino’s The Castle of Crossed Destinies.

What it does is it generates random tarot card layouts like the ones Italo Calvino uses in his stories as a way of communicating in this mysterious inn or castle (depending on which of the two versions you read), where people have no voice but they’re able to lay out these stories. Then I could use the text part to generate a random description of what people were saying, so I have things like “The nun lays out an embarrassing tale.” or “The priest tells a tragic history.” and it was able to create this really interesting context for what would otherwise be random images.

This is when I started to realize the degree to which Twitter’s always built on people taking the things that are available and pushing them just a little bit further than they were originally going to go. There’s a lot of people who are doing much better work than I am, and who even started much earlier. I believe around this time last year, Joel [McCoy] had already started putting out @TatIllustrated, which I think is one of the coolest uses, just to take the @knuckle_tat bot and take it to its logical conclusion.

It’s this kind of constant progression. All the things that we take for granted in Twitter started as someone’s weird idea, even things like hashtags were originally just a thing that someone did once that really caught on.

So that’s just what I’ve been thinking about: the way that there’s this initial expectation of how we’re supposed to use Twitter, but after negotiating and pushing and trying to find something that just feels good, we’re able to expand it. So I’m really curious to see what other people have to say about that. Thanks.

Joel McCoy: I’ve got one thing real quick: the nature of the rich images. GIFs are now re-encoded as MP4s when they actually end up on Twitter, and in a lot of situations that ends the pipeline of recirculating. You can take a GIF, put it on Twitter, it turns into an MP4, pull the MP4 down, go through it frame by frame and re-encode it as a GIF, re-upload to Twitter. That’s possible, but it’s absurd. I want to put that out there. You can now attach animated GIFs, but it’s not a GIF once it gets out there, and what you get back from it is a totally different thing. Weird artifacts show up that [inaudible] and so on.

Darius Kazemi: I have a friend who’s a visual artist and she was very excited about her GIFs getting on Twitter, and then she was very dismayed when she saw the quality of the encoding coming back. She was just like, “No, I’m just going to link to my web site instead, directly to the GIF.” because her stuff is about visual fidelity.

Audience 1: The flipside to that, maybe this is anecdotal, but I feel like images are more likely to leave their context on tweets and circulate without credit or attribution. I have a friend who had this format kind of like text adventure for doing his laundry, or interacting with the Internet of Things, and it got tens of thousands of retweets and somebody else [inaudible] like, “I found this. I don’t know who did it.” and his tweet about it from an hour before [inaudible; crosstalk]

Joel: That’s another weird Twitter affordance change. It used to be it was very easy to just include the URL of someone else’s media in your tweet, and if someone clicked through the media, they would go to the tweet it originated from. So it’s like a silent attribution that didn’t cost you any characters. It was amazing. Now, it [never shows?] that URL for the media. It masks it, [in at least the web client?]. So you can, if you’re looking for it, go find the image, do a search for username, and it’ll show you everyone who’s linking your image without attribution. But actual users who see the media can no longer click through. So even if you’re conscientious and you try and provide that attribution, it’s gone.

Audience 1: I’m sure that’s exactly what happened.

Joel: And it’s just weird that once again it’s one of those features that they didn’t intend to exist but that started to [be] used in a folk way that worked, and now they’ve actually stopped that from working.

Audience 2: I haven’t yet made an image bot but I’m very excited to. I’m pretty facile with computer visual stuff, so people [inaudible] involved not just generating images but processing and understanding them, talk to me about that.

Darius: Yeah, computer vision is kind of an awesome application that I haven’t seen a whole lot of people try and [inaudible] with.

Audience 2: There’s generation on the output side, but less of the actual input.

Everest Pipkin: We should chat.

Joel: Oh, I guess I made a Tumblr bot kind of like that. Book of the Dead, which found deaths from Spelunky and then would give you the last three seconds of the life, up to the point where they died. They changed the HUD on Spelunky, so it was retired before Twitter started letting you post GIFs. But there’s also the Snapchat bot, which got banned. So I’ve done some very preliminary stuff with that. It’s a lot of fun. It’s also a lot of work.

Darius: I’ve found that my image bots tend to be more popular than my text bots. My most popular stuff is the text stuff, but on average more people seem interested in image bot stuff. Also, it’s highly platform-dependent. We talk about Twitter a lot here. I tend to go Tumblr-first for image bots. My image bots that I have running simultaneously on Tumblr and Twitter, like Reverse OCR [Twitter, Tumblr], [it] has like 150 followers on Twitter, and it has 2000 followers on Tumblr. That’s a pretty common thing because images are kind of a more Tumblr-native thing, I think. Even though there are all these new affordances around images on Twitter, Tumblr is still a place where that has primacy. Someone recently was like, “Why don’t you put Museum Bot” on Instagram and I was like, “That’s actually a really good idea. I’ll do it after Bot Summit.”

Joel: Reverse OCR also got featured on Tumblr, right?

Darius: Yeah, it was a featured Tumblr [crosstalk]

Joel: [inaudible] pretty much never happened to you with a Twitter bot.

Darius: Right, because Tumblr like, likes art.

[inaudible comment]

Joel: Yeah, Tumblr chose it as like a “here’s a featured thing that’s cool people like.”

Audience 3: Yeah, Tumblr has an entire category of Tumblr accounts dedicated to art and they promote them, because it’s an “image based” blog, so they like it when there are images on your Tumblr. It’s not perfect, but they are good at that part.

Further Reference

Darius Kazemi’s home page for Bot Summit 2014, with YouTube links to individual sessions, and a log of the IRC channel.

Since Bot Summit, Cameron Cundiff (@ckundo) launched @alt_text_bot [home page], which generates text descriptions of images that are tweeted at it.

Austin Seraphin presented “Apple & Accessibility” at CocoaLove 2014, including some discussion of the Color ID app.