Lucas Introna: Thank you very much for the invitation, and I’m really awestruck at the quality of the debate this morning. And so I’m a bit nervous to present an 8,000-word paper in twenty minutes, which I can’t read at all. I timed myself this morning, it takes five minutes to read a page. And I have seventeen pages so…that’s not gonna work. And if I’m going to try and present it by not reading it, I’ll probably present a very unsubtle, crude version of the paper. So I do encourage you to hopefully just read the paper and not depend too much on my presentation.

Okay so, I’ve been interested in algorithms for many years. I started my life as a computer programmer and I’ve always been very intrigued by the precision of that language and the power of it. So I was seduced by it early on in my life. And I’ve been very interested in the way in which algorithms function to produce a world for us which we engage in. So this paper really is trying to take a very particular perspective on algorithms, and that’s the perspective of performativity, drawing on the work of Butler, Latour, Whitehead, and so forth. And I’m really interested to see whether this helps us or not address the question which is central to the program, which is governability.

Okay, so I’ll do a few things. I did ten slides, which means I have two minutes per slide so I’m gonna save on this one and not go through the agenda, I’m just gonna jump in.

So, the question of governability of course has to do—is very centrally connected to the notion of agency. And that is the question of what algorithms really do. And you know, there’s this question that comes up as we’ve had the debates, you know what do algorithms do, how do they do it, whether they do it, etc.

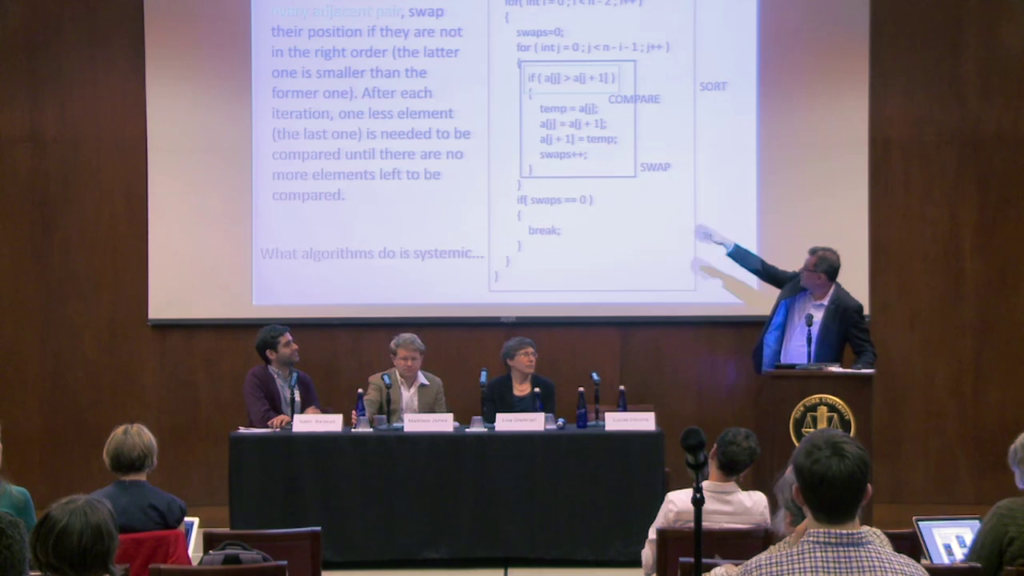

So when I was a computer science programmer, the first task I was given was to write…well, one of the first, was to write the bubble sort algorithm. And the bubble sort algorithm is an algorithm that tries to sort a list of things (numbers, or letters, whatever) by comparing adjacent pairs. And if the one is smaller than the other they would swap them around and put them in the right order. And every time you do one less until you’ve sorted the whole list. And that’s the algorithm. On the left it’s presented in words and on the right hand side it’s presented in C++, which is something I didn’t write in at all. My programming language was Fortran and I did it on punch cards. So the whole object-orientation came after my life as a programmer.

But what I can do is I can still understand some of this code. And so if you look at the green box, the green box does what the green text says, which is to compare every adjacent pair. And the blue box does what the blue text says, and that is to swap their position if they’re not in the right order, okay.
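The slide itself is not reproduced in this transcript. As a rough stand-in, here is a minimal C++ sketch of a bubble sort with the three steps marked in comments. The function name `bubble_sort`, the use of integers, and ascending order are all assumptions made for illustration; this is not the code shown on the slide.

```cpp
#include <cstddef>
#include <utility>
#include <vector>

// Sorts `items` in place. Ascending order is an assumption of this sketch.
void bubble_sort(std::vector<int>& items) {
    // "Sort": repeat the pass over the list, checking one less pair each
    // time, because the largest remaining item has settled at the end.
    for (std::size_t unsorted = items.size(); unsorted > 1; --unsorted) {
        for (std::size_t i = 0; i + 1 < unsorted; ++i) {
            // "Compare": look at each adjacent pair.
            if (items[i] > items[i + 1]) {
                // "Swap": exchange the pair if it is out of order.
                std::swap(items[i], items[i + 1]);
            }
        }
    }
}
```

Calling `bubble_sort` on a vector holding 5, 1, 4, 2, 8 leaves it holding 1, 2, 4, 5, 8. Nothing in the code says what the sorted list is for.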

And so at a very basic level we could say the green box compares. That’s what it does. The green box compares. The blue box swaps, alright. And the red box sorts. Okay. So, we compare in order to swap, and we swap in order to sort. And we sort in order to…? So what is the “in order to?” To allocate? So maybe this is a list of students and we want to allocate some funding and we want to allocate it to the student with the highest GPA, okay. So we sort in order to allocate. We allocate in order to…administer funds. We administer funds in order to… Right.

So the point is what the algorithm does is in a sense always deferred to something else, right. So, the comparing is deferring to swapping, the swapping to sorting, the sorting to allocating and so forth. So, at one level we could say what the algorithm does is clearly not in the code as such. The code of course is very important, but that’s not exactly what the algorithm does.

So one’s response to this problem is to say well, the algorithm’s agency is systemic. It’s in the system, right. It’s in the way in which the system works. That’s what algorithms do.

Now, why do we think algorithms are problematic or dangerous? I’m reminded of Foucault’s point that power is not good or bad, it’s just dangerous. So algorithms are not good or bad, they’re just dangerous.

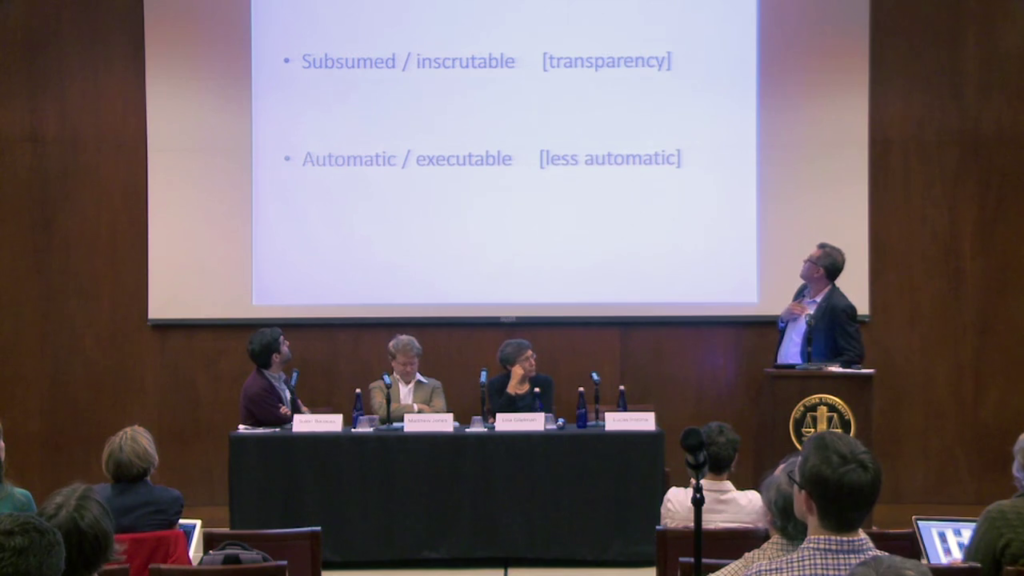

So why do we think they’re dangerous? So one argument is they’re dangerous because they’re inscrutable. They’re subsumed within information technology infrastructures, and we just don’t have access to them. And so one response to that would be we need more transparency. But as I sort of indicated just before, even if we look at the code, we would not know what the algorithm does. So if we inspect the code, are we going to achieve more? Not that I’m saying that’s not important, but that’s not going to help us in terms of understanding what algorithms do as such.

So, another problem— And I mean, this inscrutability’s deeply complex, because one of my PhD students did a PhD on electronic voting systems. And one of the problems in electronic voting systems is exactly how do you verify that the code that runs on the night of the election is in fact allocating votes in the way it should do? Now, you can do all sorts of tests. You can look at the code, you can run it as a demonstration. And there’s all sorts of things, but you can’t answer that really in the final instance.

The other problem is we say that it’s automatic. And the argument is always made that code or algorithms are more dangerous because it can run automatically. It doesn’t need a human, right. It can run in the background. And we heard the presentation last night from, what’s her name? Claudia, yeah. So she explained not only that the algorithms run automatically, but the supervision of the algorithms is algorithmic, right. So we see that as dangerous.

Okay. So what to do? So I think this question “what do algorithms do,” which points to the question of agency, I think is an inappropriate way to ask the question. I think we should rather ask the question, what do algorithms become in situated practices? And that’s different to just saying how they are used, okay. So how they are used is one of the in-order-to’s. So how do the users use it, in order to do something? But that “in order to do something” is connected to a logical context of say a business model or something. So there’s a whole set of things that are implicated in the situated practice. Not just the user, it’s the business logic, it’s many other things. Legal frameworks, regulations and so forth.

So I guess what I’m saying is that what we need is we need to understand the performative effects of algorithms within situated sociomaterial practices. Sociomaterial practices meaning the ways in which the technical and the social act together as a whole, as an assemblage. So why performative effects, or performativity, just for shorthand?

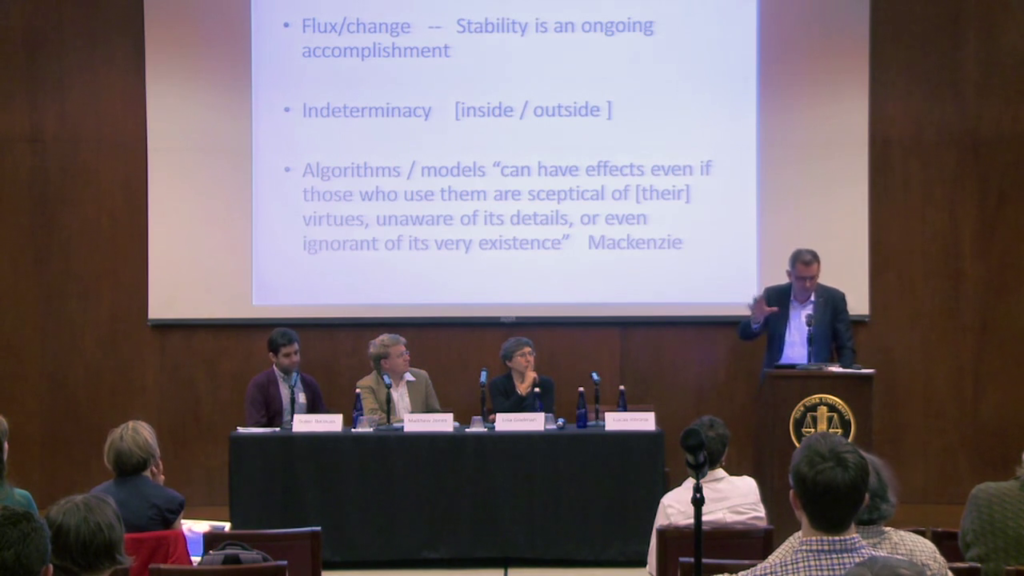

So, the ontology of performativity, which…you know, I’m not going to talk about Deleuze or any of those. Basically this ontology says one of the problems we have is that we start with stability. So we start with an algorithm. And then we try and decode it. Or we start with attributes, and then we try and classify it. Whereas in fact, what we have is we have flux and change, and stability is an ongoing accomplishment. It takes hard work, stability, to achieve. And this accomplishment is achieved by incorporation of various actors, of each other, in order to achieve this accomplishment. And so, there is no agency in the algorithm, or in the user, or in…whatever. It is the way in which they interact. Or as Barad says, intra-act; Karen Barad. And through the intra-action they define and shape and produce each other.

And therefore there’s a certain fundamental indeterminacy that’s operating in these sociomaterial assemblages. This indeterminacy means that we can’t simply locate the agency in any specific point. A beautiful example that was used this morning about the spider’s web. There’s a spider’s web, but we don’t know where the spider is. When we try and get to it, it moves, right. And it’s moving all the time. And that creates a very fundamental problem for us in terms of governance.

This is a quote from Donald MacKenzie’s book which has also been quoted a number of times this morning. His point is that these performative effects are there even though those who use them are skeptical of them, of their virtues, unaware of their details, or even ignorant of their very existence. So we do not need to know or understand for these effects to happen.

Okay so, I want to illustrate this by using a really small example, which is the example of plagiarism detection systems. And I have three questions which I sort of use in the paper to guide this analysis.

So the question is for me why does it seem obvious for us to incorporate plagiarism detection systems into our teaching and learning practice? Why is it obvious that for something like 100 thousand…I don’t know, Turnitin quotes on their site something like 100 thousand institutions in 126 countries use Turnitin, that 40 million texts of various sources (essays, dissertations, etc.) are submitted to this database every day? Why is it so obvious to us that we need this?

The second question is what do we inherit when we do that? This incorporation of Turnitin into our teaching and learning practice, what does it produce? What are the performative outcomes?

So, the first thing to note I think, and which I make a point in the paper is… And of course plagiarism…you know, academics…you know, this is not the best topic to choose when you talk to academics. Because plagiarism, that’s obviously a big no-no. And a couple of times when I’ve talked about plagiarism and plagiarism detection algorithms, the discussion was not on algorithms but why should we actually use these systems to catch these cheats?

So, the question for me is why do we have plagiarism detection systems in our educational practice? Now the issue of plagiarism of course is connected to the whole issue of commodification. And the historical root to this is the Roman poet Martial who basically first coined the phrase “plagiarism” because his poetry was in fact copied by other poets. And there’s a whole issue of how poetry moved from an oral tradition to a manuscript tradition. And when it moved to the manuscript tradition, there was this claim of plagiarism.

So it’s the commodification that is an important issue. And what I say in the paper is I think one of the reasons why plagiarism and the way in which it’s instantiated in our educational practice is an issue is because education has become commodified. And that in the commodification of education, plagiarism as a charge makes sense, right. Because this is about property. This is about the production. So the argument in the paper is that we have this process of commodification where writing is to get grades, grades is to get degrees, degrees is to get employment, and so forth. So we have a certain logic there that is making it obvious to us that what we want to do is we want to catch those that are offering counterfeit product, right. And we call them cheats and thieves because they’re offering counterfeit product in this practice.

And in a sense the question is, these systems—plagiarism detection systems—don’t detect plagiarism. They detect strings of characters that are maintained from a source. And if you keep a long-enough set of characters, you get detected because you have copied. These are copy detection systems, right. And there’s a whole debate as to why people copy, and why copied texts are in students’ manuscripts, etc., which is educational and has nothing to do with stealing.
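To make the "copy detection" point concrete, here is a minimal C++ sketch that flags a submission when it shares a long-enough run of characters with a source text. It is an illustration only: the names `longest_shared_run` and `flags_as_copied` and the `min_run` threshold are hypothetical, and nothing here is taken from how Turnitin or any real product works.

```cpp
#include <algorithm>
#include <cstddef>
#include <string>
#include <utility>
#include <vector>

// Length of the longest run of consecutive characters found in both texts.
std::size_t longest_shared_run(const std::string& submission,
                               const std::string& source) {
    // prev[j] / curr[j]: length of the shared run ending at the previous /
    // current character of `submission` and at character j of `source`.
    std::vector<std::size_t> prev(source.size() + 1, 0);
    std::vector<std::size_t> curr(source.size() + 1, 0);
    std::size_t best = 0;
    for (std::size_t i = 1; i <= submission.size(); ++i) {
        for (std::size_t j = 1; j <= source.size(); ++j) {
            curr[j] = (submission[i - 1] == source[j - 1]) ? prev[j - 1] + 1 : 0;
            best = std::max(best, curr[j]);
        }
        std::swap(prev, curr);
    }
    return best;
}

// Hypothetical rule: "detected" means a shared run of at least `min_run`
// characters. The check knows nothing about intent, citation, or theft.
bool flags_as_copied(const std::string& submission,
                     const std::string& source,
                     std::size_t min_run) {
    return longest_shared_run(submission, source) >= min_run;
}
```

A check like this answers only whether a string was retained from a source. A properly quoted and cited passage would be flagged just the same as an uncredited one.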

So for me the question is why did we frame the educational practice in that way? Secondly, once we’ve incorporated that, what does it produce? And for me the important thing is, as I try to show in the paper, is it produces a certain understanding of what writing assessments are about, it produces a certain understanding of the sort of person that I am when I produce these assessments as producers of commodities, and therefore students have no problem in selling their essays on eBay. Because this is a commodity. And secondly, they have no problems in outsourcing this task to somebody else, because it’s a production of a commodity and the economic logic says you know, you should do it in the most efficient sort of way.

So we have a situation in which the agents in this assemblage—the students, the teachers… And the teachers often have very clear reasons why they adopt this technology. So we have an agency in which these actors become performatively produced in a way that is very counter to any of the agents’ intentions. So there’s a logic there that is playing itself out that transcends the agency of any of the actors.

Okay so, my last slide. Two minutes. Thank you. So, performativity— In the paper I also have an example of Google and so forth which you can have a look at.

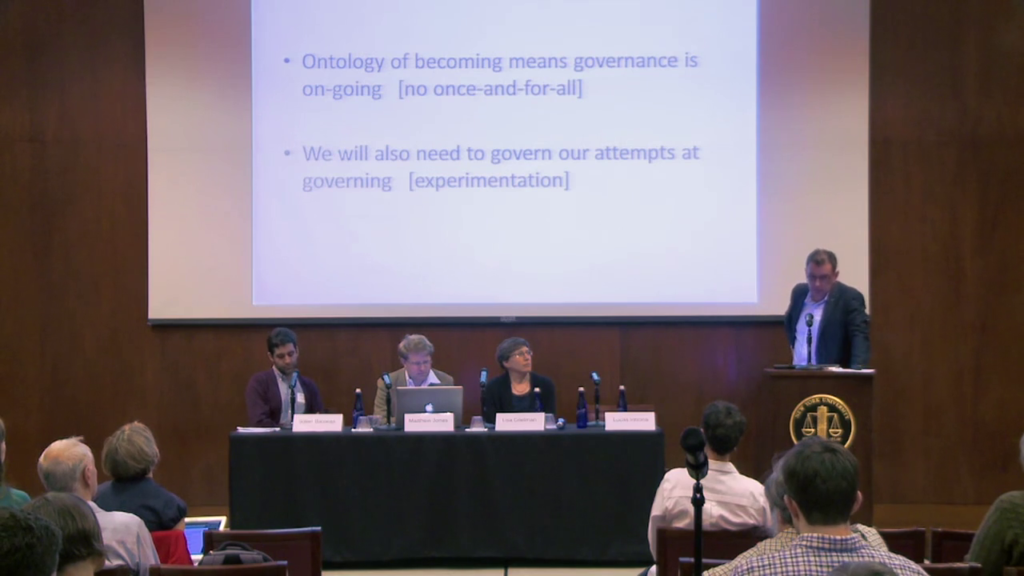

So, the ontology of becoming means for me that governance is an ongoing endeavor. There is no once-and-for-all solution. If we could locate the agency in a particular place, if we could find that location, then of course we could address that location. But since the agency is not in any particular location but is shifting between the actors as they immerse in this practice, there is no once-and-for-all solution. We can’t find the right model. There isn’t a right model. We can’t program— Even if we would open the algorithms and look at them we wouldn’t find the agency there. It’s a part. Of course it is one of the actors. What we need to understand is how these various actors—the plagiarism detection system, the students, the teachers, the educational practice—how they function together. How they become what we see them to be.

And then secondly, we need to also understand that our attempt to govern is itself one of the interventions that needs to be governed. So if we start and change… For example, if we say to Turnitin, “Change the algorithm. Change it in this way. Or make it visible. Make it transparent,” that act of making it transparent, as has been pointed out many times, would lead to gaming, which would then change the game, which then would need some further intervention. So there is no once-and-for-all, and our very attempts at governing would then produce outcomes, performatively, which we have not anticipated and which themselves need governing again. So, there is no one point in the spider’s web. Thank you.

Further Reference

The final version of Introna’s paper was published as “Algorithms, Governance, and Governmentality: On Governing Academic Writing.”