What I’d like to do in the few minutes that I’m up here is set the stage. This is a huge set of questions, and I think they are exploding into public view in a way that they hadn’t even just a few years ago. So I want to sketch the broad terrain where some of these questions live.

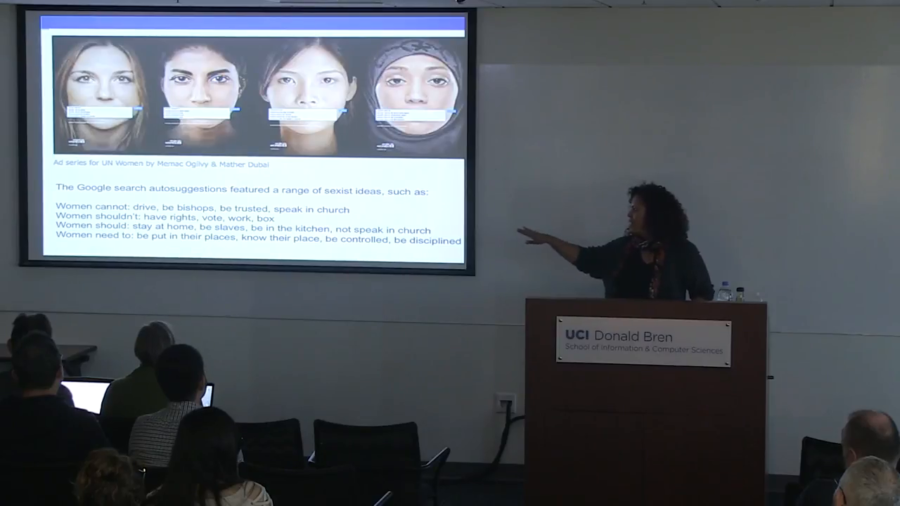

One of the things that I think is really important is that we pay attention to how we might recuperate and recover from these kinds of practices. So rather than treating each of these as just a temporary glitch, I’m going to show you several of these glitches, and maybe we’ll see a pattern.

I think that we need a radical design change. If I were teaching an HCI or design class with you, I would ask, “How are you going to design this so that not one life is lost?” What if that were the design imperative, rather than “What’s your IPO going to be?”

How would we begin to look at the production of the algorithmic? Not the production of algorithms, but the production of the algorithmic as a justifiable, legitimate mechanism for knowledge production. Where is that being established and how do we examine it?

It seems to me that to confront algorithms on their own terms, we may have to modify our preoccupation with the politics of knowledge and take up an interest in the politics of logistical engineering.

This is why it matters whether algorithms can be agonistic, given their roles in governance. If we understand the logic of algorithms as autocratic, we’re going to feel powerless and panicked, because we can’t possibly intervene. If we assume that they’re deliberatively democratic, we’ll assume an Internet of equal agents, rational debate, and emerging consensus positions, which probably doesn’t sound like the Internet that many of us actually recognize.