J. Nathan Matias: I’m Nathan Matias, the founder of CivilServant.

Mason English: My name is Mason English. I’m an MD/MBA student, and I’m one of the mods over at r/politics. A couple of our other mods are here as well if you have any questions.

So, a little bit about r/politics. r/politics is one of the earliest subreddits, and it was one of the defaults through 2013. Because of that, its membership closely mirrors the kind of users Reddit draws overall. Reddit tends to attract a young, technology-savvy user base, and so does r/politics, which means our users skew a little more liberal: liberals make up a higher percentage of our users.

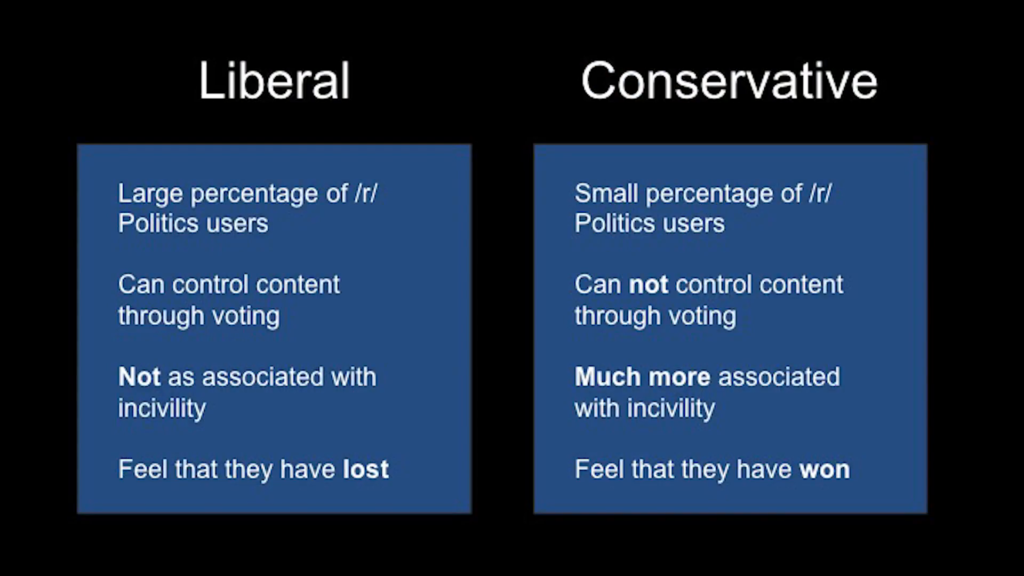

Here’s a rough overview graph. Liberal users make up a larger percentage of r/politics users, while conservatives make up a smaller percentage. This is a huge simplification. But through those users and their voting, they can control what is seen and what is not seen. Liberal users, voting as a block, will more often than not downvote something they don’t agree with.

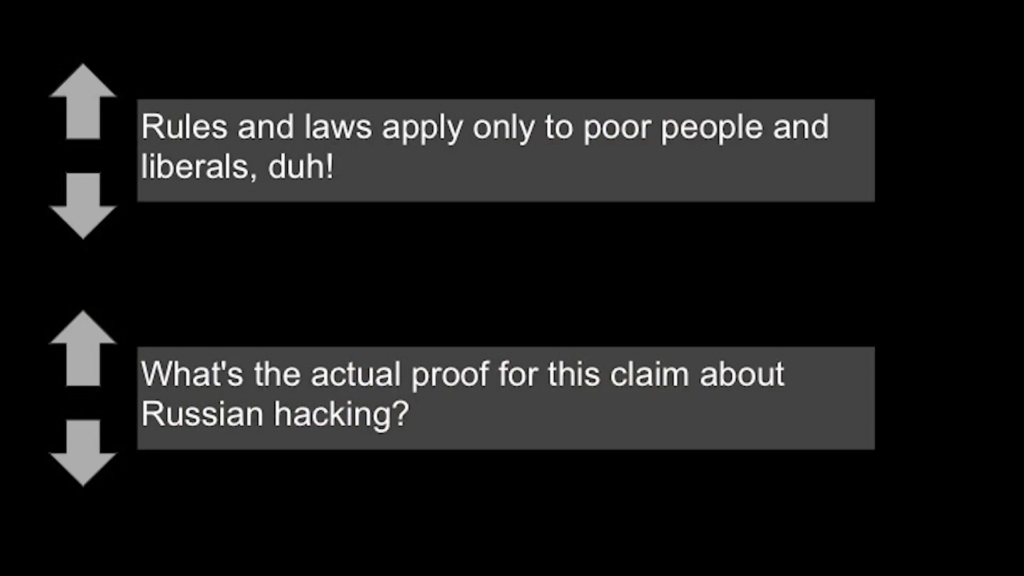

Just for example, here are two types of comments: “Rules and laws apply only to poor people and liberals, duh!” versus “What’s the actual proof for the Russian hacking?” One is asking a good question; the other is…just…kind of a troll. But because of how our users vote, the troll comment is going to be seen, and the one asking a possibly legitimate question is not.

Going on: liberal users, as a whole, are less associated with incivility, while conservative users, just from what we’ve seen moderating, are more associated with it.

Liberal users feel like they have lost. Throughout this past election, through the inauguration, over the last year of politics, liberal users have felt like they’ve lost, and they control the voting.

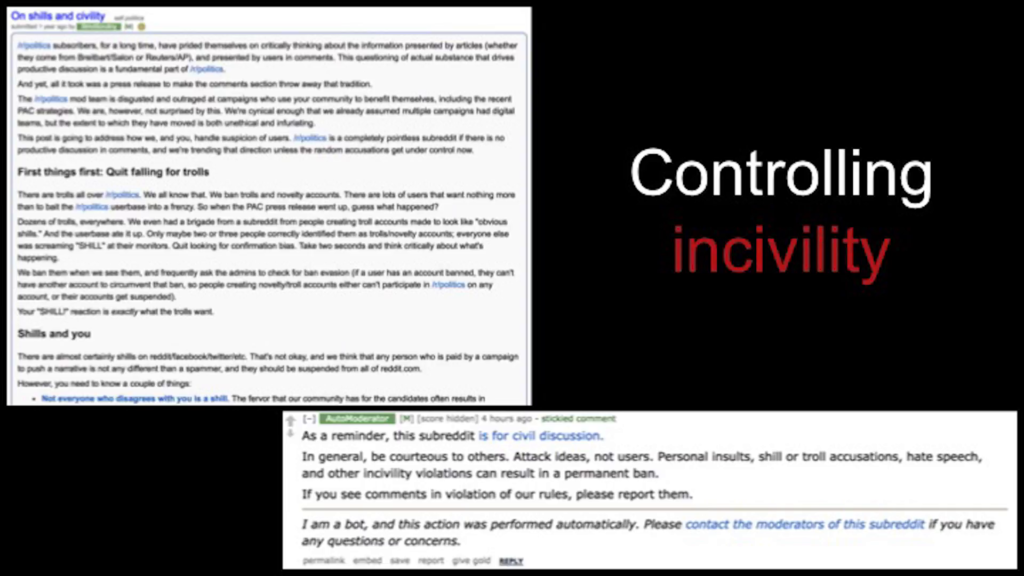

This has led to a large degree of incivility throughout the election and up to now. We have tried to combat this with big, giant sticky posts addressing the community directly. We even borrowed the sticky comment idea from r/science to try to help with that. We didn’t see much change, but we also weren’t collecting data or running a statistical model that could actually detect that kind of thing.

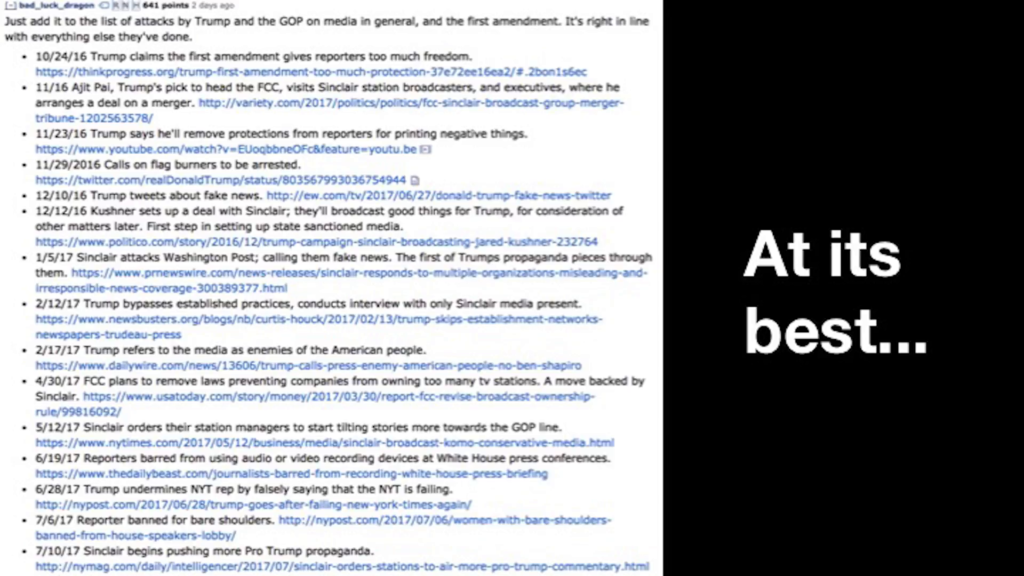

Reddit can be great. At its best, it has great community reporting: users who go in and write amazing, well-sourced comments that really show what Reddit can do. At its worst, partisan voting, in the partisan times we currently live in, hinders the discussion.

So, working with Nate, we wanted to look specifically at downvotes. Do downvote buttons cause unruly online behavior? And can hiding downvotes prevent it?

Matias: So let me unpack that puzzle. Why would we think that downvote buttons make things worse? Part of the answer comes back to a question we are still asking, which is still kind of an open question about how we design online platforms. Early in the 2000s, when the downvote button was invented, a researcher named Cliff Lampe (who’s here in the room) did one of the earliest studies of these voting systems. He asked whether, if you ask people to vote on content, they can on average separate high- and low-quality comments, and the analysis suggested a qualified “yes.” And Cliff, we’re still trying to figure out exactly what that “qualified” means, as often happens with research.

Many years later, a study by Justin Cheng and a number of collaborators looked at political discussions across four news sites and found that when people get downvoted, they come back more frequently, and their future comments are lower quality. Perhaps they feel punished, like a minority. The study didn’t look at their motivations, but perhaps people come back, kind of lash out or complain, and it escalates from there.

So we wanted to work with the r/politics community to find out whether hiding the downvote button would actually improve the quality of conversation, and in particular to look at the effect on the future behavior of people commenting in the community for the first time.

Now, Reddit moderators are able to hide the downvote button, but it’s a bit complicated: they can only do it for some users, and only for the whole community at once.

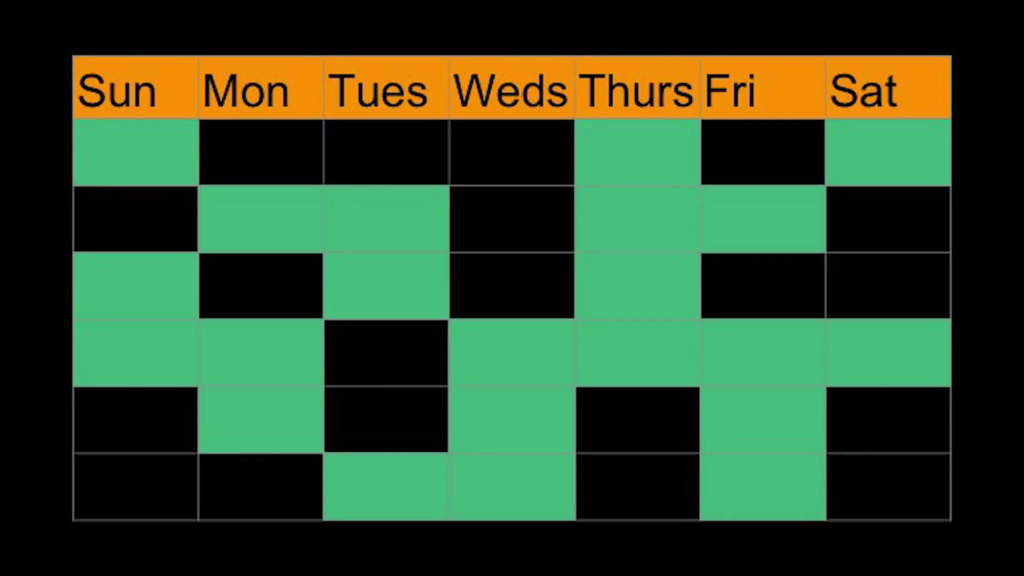

So the first thing we did was set up a randomized trial in which the downvote button was visible in comments on some days and hidden on others.
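A day-level randomized schedule like the one described here can be sketched in a few lines of Python. This is a hypothetical illustration, not the study’s actual assignment code: the function name `assign_days`, the balanced 50/50 split, the fixed seed, and the placeholder start date are all assumptions made for the example.

```python
import random
from datetime import date, timedelta


def assign_days(start: date, n_days: int, seed: int = 0) -> dict[date, bool]:
    """Map each day to True (downvote button hidden) or False (visible).

    Hypothetical balanced design: half of the days (rounded down) are
    assigned to "hidden", and the order is shuffled with a seeded RNG so the
    schedule is random but reproducible.
    """
    half = n_days // 2
    conditions = [True] * half + [False] * (n_days - half)
    rng = random.Random(seed)
    rng.shuffle(conditions)
    return {start + timedelta(days=i): hidden for i, hidden in enumerate(conditions)}


if __name__ == "__main__":
    # Placeholder start date; not the study's real dates.
    schedule = assign_days(date(2017, 1, 1), n_days=14, seed=42)
    for day, hidden in schedule.items():
        print(day.isoformat(), "hidden" if hidden else "visible")
```

Shuffling a fixed list, rather than flipping a coin each day, guarantees equal numbers of hidden and visible days; a per-day coin flip would be an equally valid randomization, just with less predictable balance.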

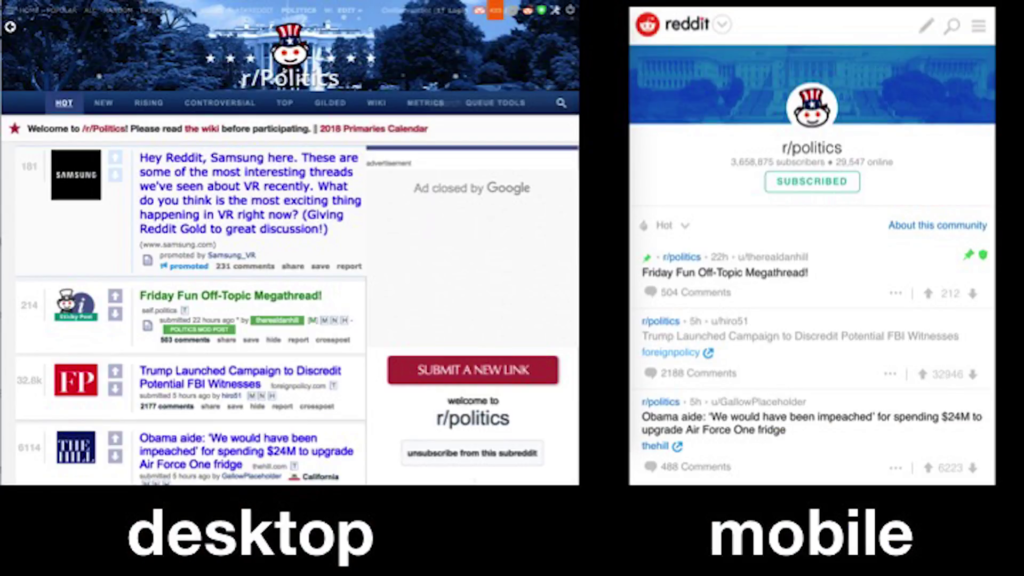

We also acknowledged that hiding the button only affected desktop users. We hoped there would still be a lower share of downvotes on those days, even though mobile users, who by our estimate made up about 65% of participants during that period, could still downvote as normal. So it’s kind of a messy first pass, but this is the tool moderators had at their disposal, and we wanted to find out what difference, if any, it made, especially because a lot of communities have tried this tactic of hiding downvotes over the years.

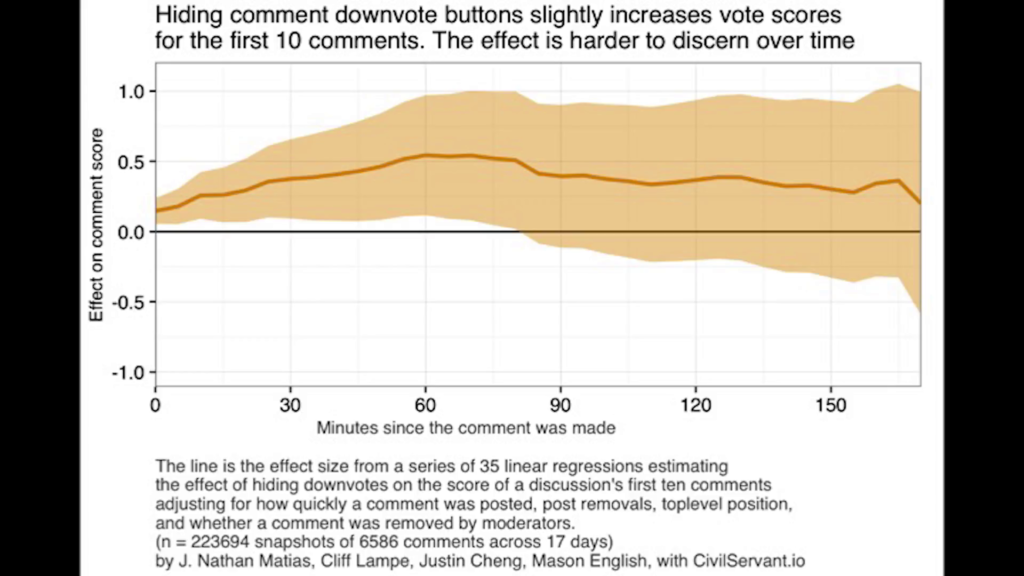

So here are some early findings from this pilot study, which is in no way the final word on this. The first thing we did was look at whether hiding downvotes actually affected comments’ vote scores. Because of the way Reddit works, if your comment receives more upvotes, it gets a higher score; the score is a little obfuscated by the platform, but it’s still sometimes useful for analysis. The score also affects your comment’s visibility: it’s pushed higher in the discussion if you have more upvotes and further down if you have more downvotes. In some cases it’s even hidden by the platform.

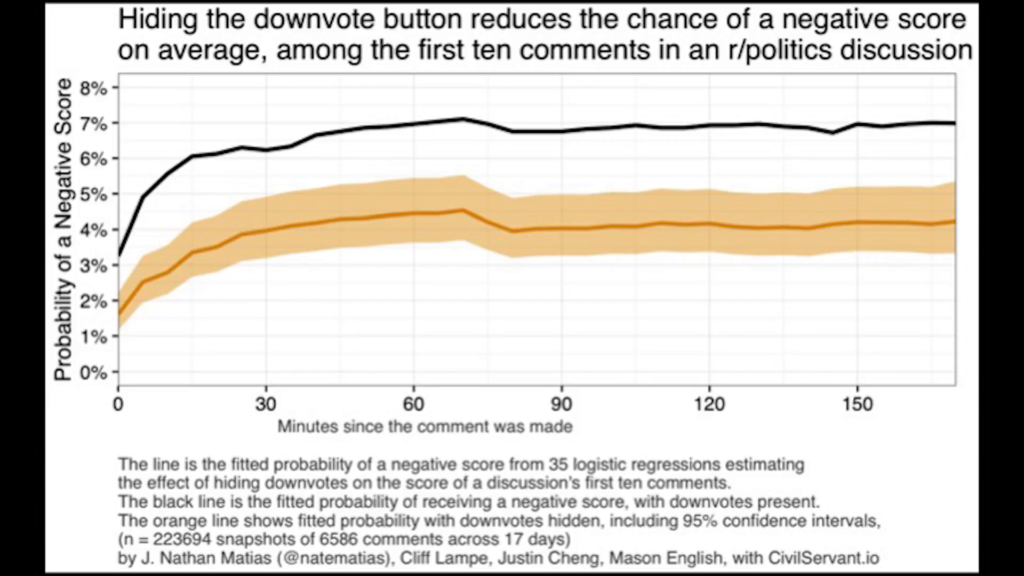

So the key to our research was to find out whether hiding this button would affect the score at all. We monitored the first ten comments in each discussion and took snapshots of their scores over time, and we found that hiding the downvotes changed the score a little. It made scores slightly higher and reduced the share of downvotes, but not…quite as much as we were hoping for.

The next thing we looked at was whether a person’s comment would ever get a negative score. There we found a sharper distinction: in that community, somewhere around 6% of the first ten comments would get a negative score at some point, and we were able to cut that in half by hiding the downvote. So we were able to see that hiding the downvote button does have an effect on people’s voting behavior.
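The “ever negative” measure can be expressed as a simple per-comment check over score snapshots. In the sketch below, the input format (tuples of comment ID, condition label, and snapshot score), the condition labels, and the toy data are hypothetical, and the input is assumed to be already limited to the first ten comments in each discussion. For scale, halving a base rate of about 6% means roughly 3% of those comments.

```python
from collections import defaultdict

# Hypothetical record format: (comment_id, condition, score at one snapshot).
Snapshot = tuple[str, str, int]


def share_ever_negative(snapshots: list[Snapshot]) -> dict[str, float]:
    """Per condition, the fraction of comments with a negative score in any snapshot."""
    ever_negative: dict[tuple[str, str], bool] = {}
    for comment_id, condition, score in snapshots:
        key = (condition, comment_id)
        # A comment counts once it has dipped below zero at least once.
        ever_negative[key] = ever_negative.get(key, False) or score < 0

    totals: dict[str, int] = defaultdict(int)
    negatives: dict[str, int] = defaultdict(int)
    for (condition, _comment_id), was_negative in ever_negative.items():
        totals[condition] += 1
        negatives[condition] += int(was_negative)
    return {condition: negatives[condition] / totals[condition] for condition in totals}


if __name__ == "__main__":
    # Toy snapshots, not data from the study.
    toy = [
        ("a", "visible", 1), ("a", "visible", -1), ("a", "visible", 0),
        ("b", "visible", 2), ("b", "visible", 3),
        ("c", "hidden", 0), ("c", "hidden", 1),
        ("d", "hidden", 2), ("d", "hidden", 1),
    ]
    print(share_ever_negative(toy))
```

A score of exactly zero is not counted as negative here; whether a study treats zero that way is a measurement choice, assumed for this sketch.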

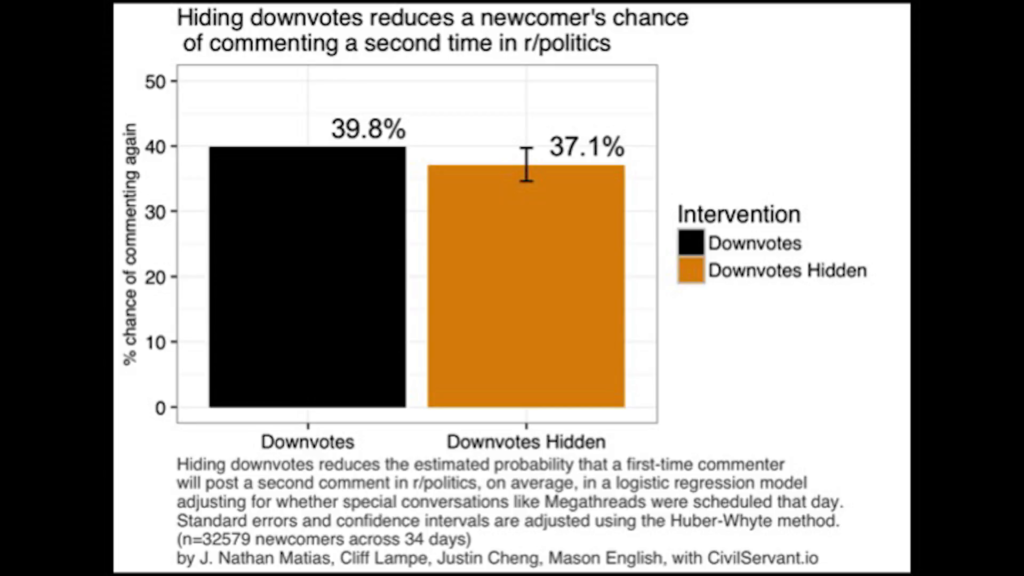

And that’s the first part of the study. We then wanted to know whether people who commented for the first time in the community behaved differently in the future if they commented on a day when the share of downvotes was reduced. Similarly to what Justin Cheng found, we found that people who comment for the first time on a day when the downvote button is hidden are actually less likely to come back, and people who are more likely to receive downvotes are more likely to come back and comment a second time. Let me step that back: not much more likely, only slightly more likely. It’s actually a fairly small percentage-point change.
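The return-rate comparison comes down to a difference in proportions between first-time commenters from the two kinds of days, reported in percentage points. The sketch below is a hypothetical, purely descriptive illustration: the `FirstTimer` record and its fields are invented for the example, and it computes no standard errors or significance test, so it does not reproduce the study’s statistical model.

```python
from dataclasses import dataclass


@dataclass
class FirstTimer:
    user: str         # hypothetical account identifier
    hidden_day: bool  # True if the first comment fell on a "downvote hidden" day
    returned: bool    # True if the user later commented a second time


def return_rate(users: list[FirstTimer], hidden_day: bool) -> float:
    """Share of first-time commenters in one condition who came back."""
    group = [u for u in users if u.hidden_day == hidden_day]
    if not group:
        raise ValueError("no first-time commenters in this condition")
    return sum(u.returned for u in group) / len(group)


def difference_in_points(users: list[FirstTimer]) -> float:
    """Return rate on hidden days minus visible days, in percentage points."""
    return 100 * (return_rate(users, True) - return_rate(users, False))


if __name__ == "__main__":
    # Toy records, not data from the study.
    users = [
        FirstTimer("u1", True, True), FirstTimer("u2", True, False),
        FirstTimer("u3", True, False), FirstTimer("u4", True, False),
        FirstTimer("u5", False, True), FirstTimer("u6", False, True),
        FirstTimer("u7", False, False), FirstTimer("u8", False, False),
    ]
    print(f"{difference_in_points(users):+.1f} percentage points")
```

A negative difference would mean first-time commenters from hidden-downvote days returned less often; judging whether a small difference is distinguishable from chance would need a proper statistical test on top of this.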

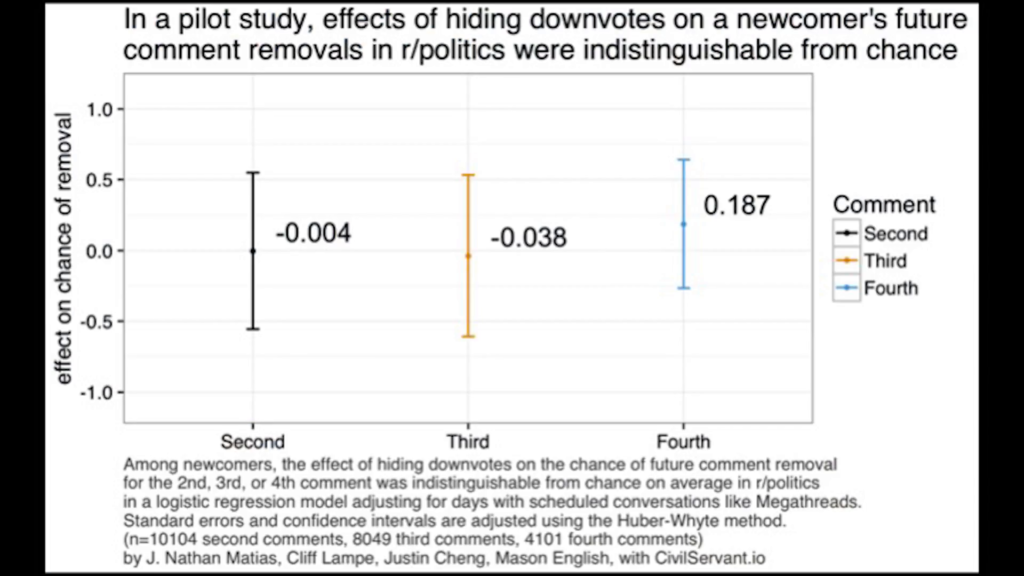

And then we were curious: does this add up to any difference in how people behave in the future? We looked at the chance that a person’s future comments would be removed by moderators, and there we couldn’t distinguish any effect from chance. We might have detected one with a larger sample size or with better measurements of the quality of people’s comments; it’s possible that the effect is really small and we just weren’t able to see it. But using the statistical tools we had, we weren’t able to observe any systematic difference in the future behavior of first-time commenters from days when we hid the downvotes compared with other days.

So, what do we learn from a null result like this? In our discussions with the community, many people asked why we’d do it if we don’t necessarily get the answer we’re looking for. There are a few reasons to value this study. The first is that we learned that hiding the downvote button doesn’t have the dramatic effect on voting that I think a lot of people hoped for.

And that’s really valuable. Moderators have hoped that hiding that button would really affect the votes. Not so much. It’s possible that if we were able to affect voting on both mobile and desktop, it would make more of a difference. We don’t know.

On top of that, we found that while hiding downvotes has some effects, they aren’t very large. So it’s not a panacea for the conflicts we’ve already heard described in the community. As the community thinks about other things it could do, downvoting might be part of it, and with a more reliable way to remove the downvote button, there might be new ways to study this issue. But with what communities can do now, this doesn’t seem to have the effects people are looking for.

We also learned a lot about how to measure these things, the things people care about, and how to work with a community in a politically contentious situation so that the research happens with the community’s consent and transparency. I’m incredibly grateful to the r/politics subreddit for that.

So if you have questions about how we can improve political conversation online, we’d love to talk to you, and you can reach us at civilservant.io. Thanks.

Further Reference

Do Downvote Buttons Cause Unruly Online Behavior? at CivilServant

Gathering the Custodians of the Internet: Lessons from the First CivilServant Summit at CivilServant