J. Nathan Matias: I’m Nathan Matias, founder of CivilServant. And we’re here today to talk about the Internet.

Now, in the room today are some truly remarkable people. And I want you to know that in this slide there is more than just a dumpster fire. There are also people in suits who are trained and dedicated to manage that fire. And many of those people, when it comes to the Internet, are here in the room. You’re people who’ve been creating and maintaining our digitally-connected spaces for decades. You’re community moderators, researchers, facilitators, engineers, educators, creators, bystanders, and protectors. People who look beyond the dumpster fire of whatever panic we have of the moment, recognize that there’s something deeply important worth saving, worth growing, and step in.

And in fact, there are a lot of us. Even as companies are expected to do something about online risks, far more of us take action than I think many of us recognize. According to a study by the Data & Society institute, around 46% of American Internet users have taken some kind of action to intervene on the issue of online harassment. That’s almost 100 million people.

Disinformation Is Becoming Unstoppable;

‘Our minds can be hijacked’: the tech insiders who fear a smartphone dystopia;

Pierre Omidyar: 6 ways social media has become a direct threat to democracy

Despite this, we do live in pessimistic times, as society confronts the risks that come with living in a world that is inescapably digitally connected. And so, with many things competing for our fears, it’s easy to become paralyzed or uncertain about what to worry about most and what to do about those problems. Is disinformation really unstoppable? Are our minds really being hijacked? And when we worry about our hopes for society slipping away, how can we meaningfully move from fear and uncertainty to do something that we’re confident can make a difference?

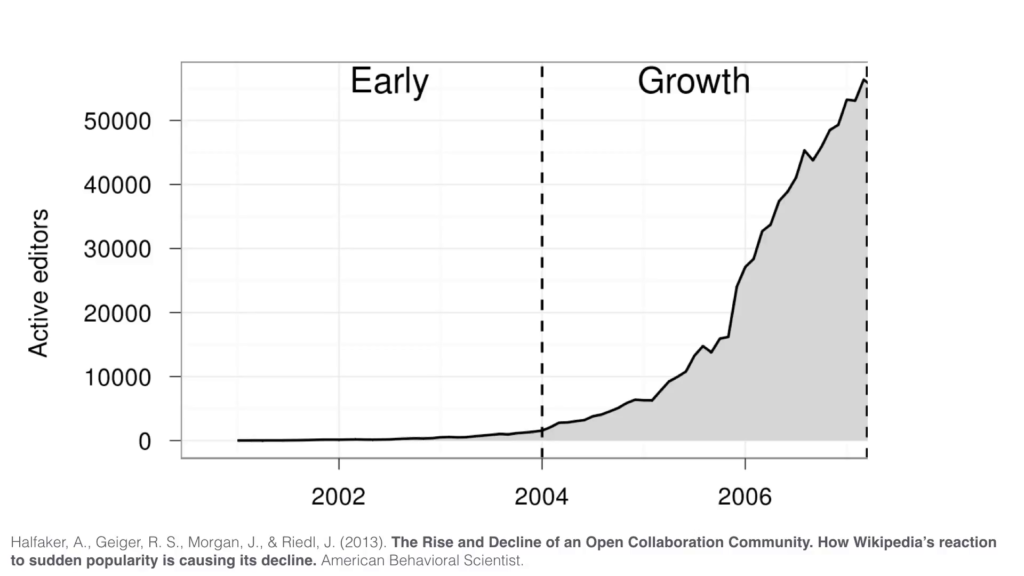

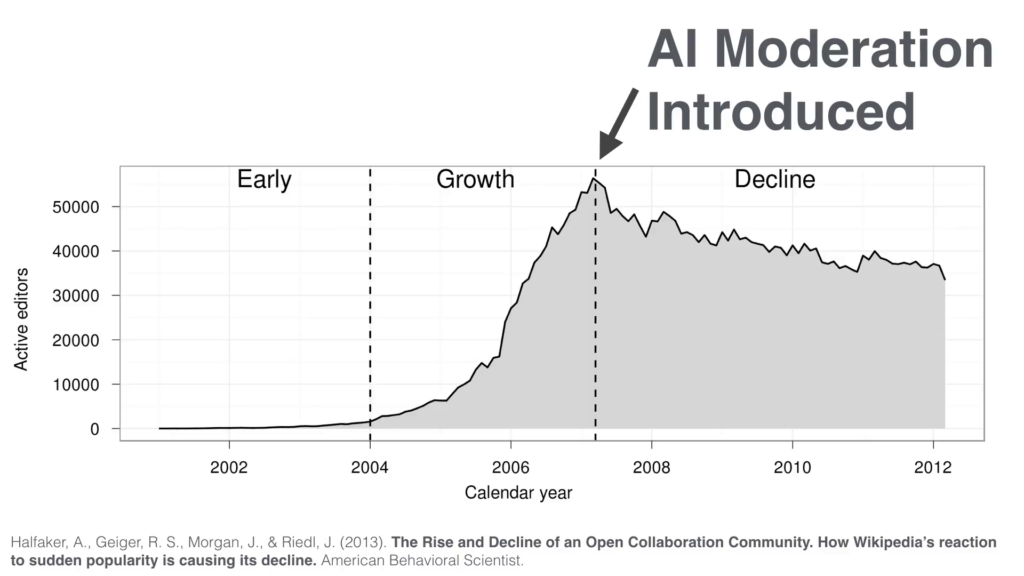

I imagine that’s how Wikipedians must’ve felt in 2007, after a year of remarkable growth that more than doubled the number of active contributors to this incredibly precious global resource.

Here’s the story as told by the researchers Aaron Halfaker, Stuart Geiger and their colleagues about a moment of crisis that they experienced.

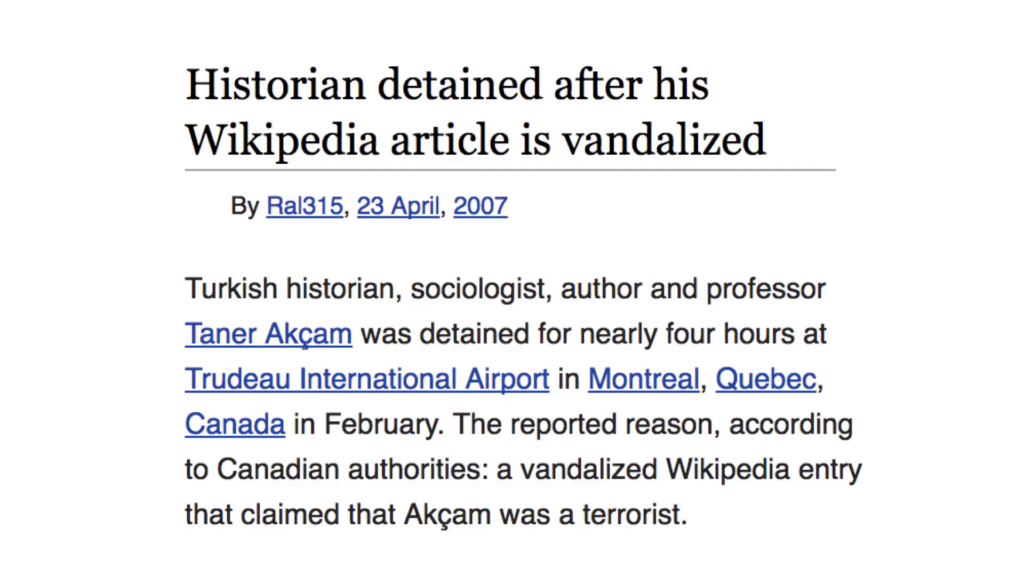

So, eleven years ago the Wikipedia community was just starting to reckon with the scale and risks of vandalism on the site as it became more and more relied on by people around the world. Wikipedia had been sued for false information that was posted to this encyclopedia that anyone can edit. And one person was even detained by Canadian border agents after someone added false information to their bio, claiming that they were a terrorist.

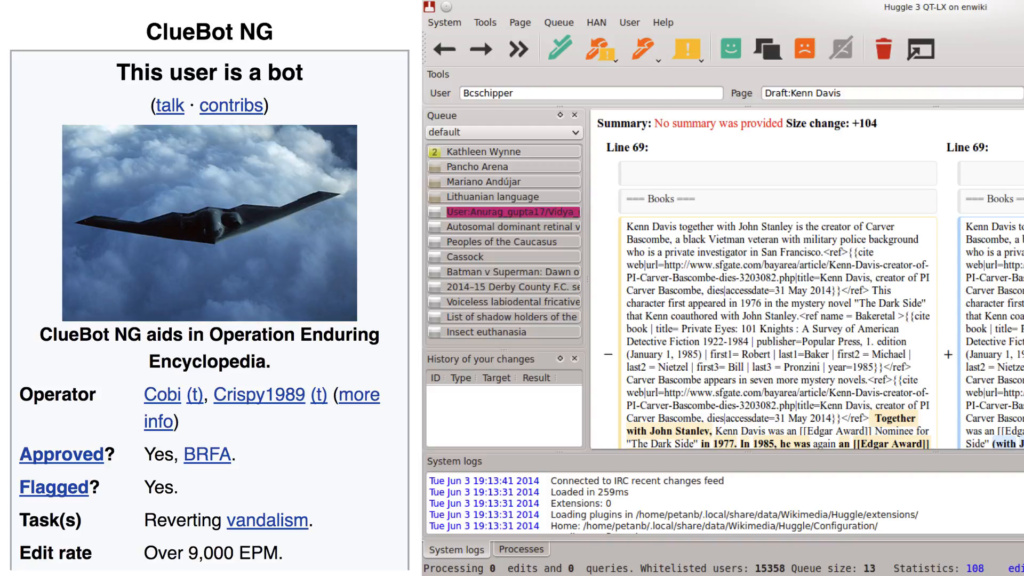

Now, Wikipedians learned, like so many other large online communities, that their collective endeavor had made them a basic part of millions of people’s lives, with all of the accompanying mess, conflict, and risk. And like many platforms, the community built social structures and software to manage those problems. They created automated moderators like the neural network ClueBot which detects vandalism, and moderation software that allowed people to train and oversee those bots. Thanks to these initiatives, Wikipedians were able to protect their community, cutting in half the amount of time that vandalism stayed on Wikipedia’s pages. It’s now impossible to maintain Wikipedia without these AI systems.

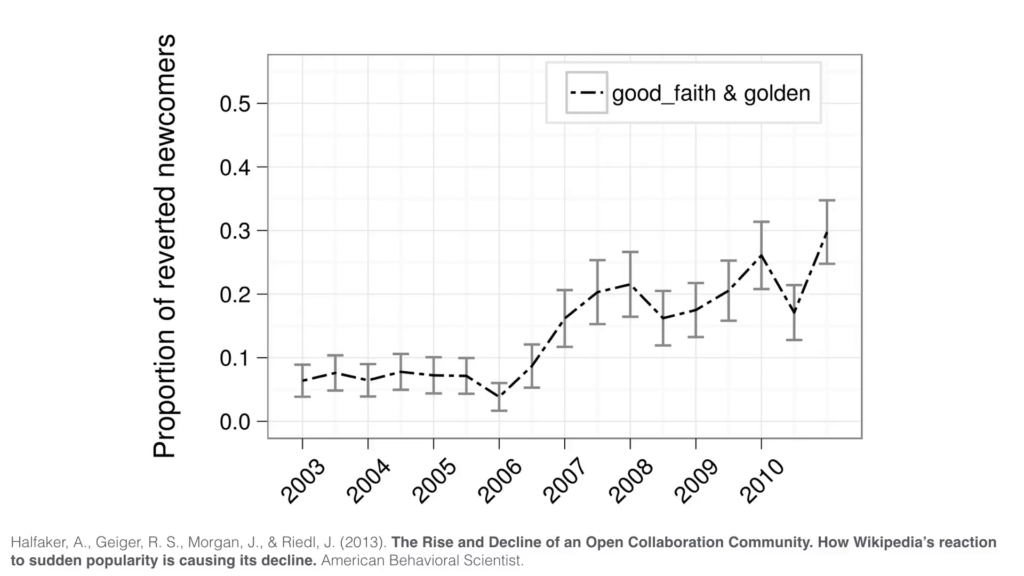

Unfortunately, this amazingly creative, successful effort had some side-effects. Soon after introducing automated moderation, Wikipedia participation went from massive growth into a slow decline. In a paper that appeared six years later, Aaron, Stuart and their collaborators noticed that this dramatic change coincided with the use of these scaled moderation systems.

They were able to show that up to 30% of edits removed by these bots were of newcomers who they imagined and estimated were actually good-faith contributors, people who might otherwise have brought their unique perspectives to this amazing shared resource of human knowledge.

Screenshot of the WikiGalaxy project

You know, Wikipedia’s one of the great treasures of our times, and to save it Wikipedians organized thousands of people in collaboration with AI systems in what has got to be one of the largest volunteer endeavors in history to fight misinformation. Each of us relies on their endeavor every single time we use Wikipedia. And yet, the long-term behavioral side-effects went undetected for years.

Today’s community research summit is the first public event for CivilServant, a project that started here at the MIT Media Lab and the Center for Civic Media and is now incubated by Global Voices. We organize the public so that as we work together for a fairer, safer, more understanding Internet, we can also discover together what actually works and spot any side-effects of the powerful technologies and human processes we bring to bear.

To open up today we’ll hear from Tarleton Gillespie and Latanya Sweeney, who will set the stage for thinking about how people’s behavior is governed online, and how it’s important to hold the technology and the companies behind it accountable for the power they have in society.

We’ll also hear from Ethan Zuckerman and Karrie Karahalios about ways that people organize to understand systems and create change, and some of the barriers we may need to overcome as we try to make sense of the problems, the injustices, the mess of a complex world, and try to create more understanding societies online.

After a break, we’ll show you the mission of CivilServant by sharing lightning talks with communities that have already done research with us and organizations that are part of our mission.

We’ll then have time to hear from a number of other projects that are very much peers to what we do, and hear about the amazing work that they’re doing.

When all of that ends, we’ll also have an hour for all of you to have some refreshments and meet each other. Because as much as we are taking the opportunity to share what we’ve done together, this room has an amazing collection of people with incredible perspectives, wisdom, and capabilities that will help us think about and shape the future of a fairer, safer, more understanding Internet.

Today’s summit is made possible through funding from the Ethics and Governance of AI Fund, the Knight Foundation, and the MacArthur Foundation, and support from the Tow Center for Digital Journalism. Today’s event is public, and all of these talks will be filmed and put on YouTube. You should feel free to tweet about and share what you see on this stage. And we encourage you, with people’s consent, to share what you heard and attribute what people tell you. But make sure to check with someone before you share their face, their name, or their identity otherwise. Some people in this room, because of the nature of the work they do, have certain preferences for how they manage their identity. So we ask that you respect people’s name badges and proactively ask for consent before sharing.
